Dynamics randomization helps robots trained in simulation work in the real world. Learn what it is, why it works, and how U.S. teams deploy it reliably.

Sim-to-Real Robotics: Dynamics Randomization That Works

Robots don’t fail in the lab because the policy is “bad.” They fail because the world is messy in ways your simulator didn’t bother to model.

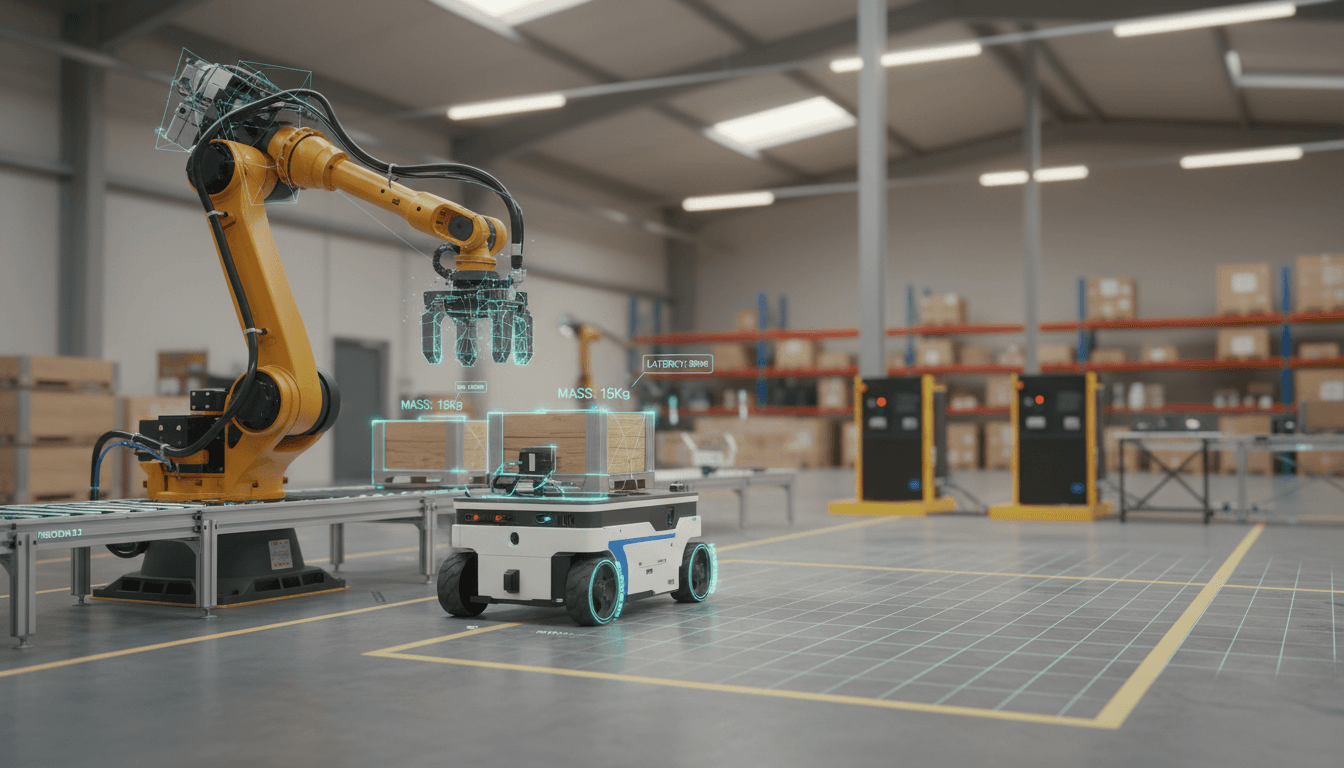

That gap between simulation and reality is the bottleneck behind a lot of U.S. robotics and automation projects—especially the ones trying to scale beyond a single demo cell. If you’re building AI-driven automation for warehouses, healthcare, or service environments (or selling the software that powers those operations), sim-to-real transfer is the difference between “we trained it overnight” and “we’re still retuning controllers three months later.”

One of the most practical ideas to come out of modern robotics research is dynamics randomization: instead of hoping your simulator is perfect, you intentionally make it imperfect in many ways so the robot learns a policy that survives reality. This post breaks down what dynamics randomization is, why it works, where teams get it wrong, and how it connects to the broader U.S. wave of AI-powered technology and digital services.

Sim-to-real transfer: the real problem isn’t ML, it’s physics

Sim-to-real transfer fails when a learned policy overfits to the simulator’s assumptions. Most simulators have “clean” contact, simplified friction, stable sensors, and predictable actuators. Real robots have stiction, backlash, battery sag, temperature drift, slightly bent brackets, and sensor noise that changes with lighting.

If you train a control policy (say, a neural network) entirely in simulation, it tends to pick up on tiny, consistent cues the simulator gives it—cues that don’t exist in the physical world. The result is brittle behavior: it works in the exact training setup, and then collapses when moved to a new floor surface or when a gripper pad wears down.

This matters for U.S. businesses because the economics of robotics depend on repeatable deployment. If each site needs custom tuning by specialized engineers, it’s hard to make the numbers work. Sim-to-real is how robotics starts looking more like software—train once, deploy many times.

Why this matters to SaaS and digital services teams

Even if you don’t sell robots, you probably sell systems that interact with them:

- Warehouse management and orchestration software

- Fleet monitoring dashboards

- Maintenance workflows and ticketing

- Customer support and field service automation

- Compliance and reporting

When robot behavior is unreliable, every adjacent digital service gets noisier: alerts spike, uptime drops, support tickets grow, and SLAs become painful. Better sim-to-real isn’t just robotics research; it’s a reliability upgrade for the whole tech stack.

Dynamics randomization: train for a range, not a single world

Dynamics randomization is a training strategy where you randomize physics parameters in simulation so the policy learns to perform under many plausible realities. Instead of training on one simulator with one friction coefficient, one motor strength, and one sensor noise model, you sample those values from ranges every episode (or, for noise and disturbances, even every timestep).

Think of it like this: if your robot learns to walk only on “perfect carpet,” it’ll eat it on tile. If it learns to walk on carpet, tile, rubber mats, and slightly uneven floors (all in sim), it becomes harder to surprise.

Common parameters teams randomize include:

- Mass and inertia of links and payloads

- Joint friction, damping, and backlash

- Motor/actuator strength, latency, and saturation limits

- Contact parameters (friction coefficients, restitution)

- Sensor noise and dropouts (IMU drift, camera noise)

- External disturbances (pushes, bumps, air drag approximations)

A useful mental model: dynamics randomization doesn’t make simulation “more accurate.” It makes the policy less picky about accuracy.
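To make the per-episode sampling concrete, here is a minimal sketch. The parameter names and ranges are hypothetical placeholders for illustration, not values from any particular robot or simulator, and it assumes uniform sampling, which is a common starting point rather than the only option.

```python
import random

# Hypothetical ranges for illustration only; real ranges should be anchored
# to measurements from your own hardware.
RANGES = {
    "payload_mass_kg": (0.0, 2.0),
    "joint_friction_scale": (0.7, 1.3),
    "motor_strength_scale": (0.8, 1.1),
    "action_latency_s": (0.0, 0.04),
    "floor_friction": (0.4, 1.0),
    "imu_noise_std": (0.0, 0.02),
}


def sample_dynamics(ranges, rng):
    """Draw one set of physics parameters, uniformly within each range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}


rng = random.Random(0)  # seeded so runs are reproducible
for episode in range(3):
    params = sample_dynamics(RANGES, rng)
    # A real pipeline would write `params` into the simulator here,
    # before resetting the environment for the next episode.
    print(episode, {k: round(v, 3) for k, v in params.items()})
```

The point of keeping the ranges in one dictionary is that the same structure can double as the thing you review and version alongside the policy.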

Why it works (and when it doesn’t)

It works because it forces the policy to rely on robust signals—the ones that are consistent across realities—rather than exploiting simulator quirks.

It fails when the randomization ranges are wrong in either direction:

- Too narrow: you still overfit to a “nearby” simulator.

- Too wide: the policy becomes overly conservative or slow, or training never stabilizes, because no single behavior works well across dynamics that varied.

In practice, teams that succeed treat randomization like an engineering artifact, not a magic checkbox. They maintain a “randomization spec” tied to real measurements (even rough ones) from the hardware.

The practical recipe: how teams make sim-to-real work in production

The best sim-to-real pipelines combine randomization with measurement, iteration, and disciplined rollout. Here’s what I’ve found actually holds up when you move from a research prototype to something you can ship.

1) Start by measuring what you can on the real robot

You don’t need a perfect system ID project, but you do need anchors.

Measure:

- Approximate payload ranges your customers use

- How joint torque output changes with temperature (even a coarse curve)

- Actuator latency (command-to-motion delay)

- Real floor friction variability (simple push tests can help)

Then set randomization ranges to cover what you see plus a buffer.
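One rough way to turn measurements into a range is sketched below. The helper name `range_from_measurements`, the 20% buffer, and the latency numbers are all assumptions made up for this example; pick a buffer that reflects how much you trust your measurements.

```python
def range_from_measurements(samples, buffer_frac=0.2, floor=None):
    """Return (low, high) covering the observed samples plus a relative buffer.

    buffer_frac widens the observed span on both sides; floor optionally
    clamps the lower bound for quantities that cannot go below some value
    (latency and friction cannot be negative, for example).
    """
    lo, hi = min(samples), max(samples)
    pad = buffer_frac * (hi - lo)
    lo, hi = lo - pad, hi + pad
    if floor is not None:
        lo = max(lo, floor)
    return lo, hi


# Made-up command-to-motion delays in milliseconds, for illustration only.
latency_ms = [18.0, 22.0, 25.0, 21.0, 30.0]
low, high = range_from_measurements(latency_ms, floor=0.0)
print(f"randomize latency in [{low:.1f}, {high:.1f}] ms")
```

Note that if every sample is identical the buffer collapses to zero, which is usually a sign you need more measurements rather than a narrower range.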

2) Randomize the right things first

Not all parameters matter equally.

Good “first picks”:

- Actuator strength and latency (hardware changes, battery state)

- Contact friction and restitution (surface variability)

- Sensor noise and delay (lighting, vibration)

Later, add mass/inertia perturbations and more nuanced contact modeling.

3) Use curriculum randomization instead of dumping everything in at once

Curriculum randomization means widening the ranges as the policy improves. Early training needs stability. Later training needs stress.

A simple pattern:

- Train with mild noise to get competent behavior.

- Expand ranges gradually to build robustness.

- Add rare “nasty” scenarios (slippage spikes, latency bursts) so the policy learns recovery.
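The pattern above can be sketched as a `progress` value between 0 and 1 that widens each range from its nominal value toward the full range. Everything here (the function names, the 0.8 success threshold, the 5% burst probability, the latency numbers) is a hypothetical illustration, not a recommended setting.

```python
import random


def curriculum_range(nominal, full_range, progress):
    """Widen from the nominal value toward full_range as progress goes 0 -> 1."""
    p = min(max(progress, 0.0), 1.0)
    lo, hi = full_range
    return (nominal + p * (lo - nominal), nominal + p * (hi - nominal))


def update_progress(progress, success_rate, threshold=0.8, step=0.1):
    """Advance the curriculum only when the policy is doing well enough."""
    if success_rate >= threshold:
        return min(1.0, progress + step)
    return progress


def sample_latency_ms(rng, progress, burst_prob=0.05):
    """Sample actuator latency; once ranges are fully open, add rare bursts."""
    lo, hi = curriculum_range(20.0, (10.0, 40.0), progress)
    latency = rng.uniform(lo, hi)
    if progress >= 1.0 and rng.random() < burst_prob:
        latency += 100.0  # rare "nasty" latency burst
    return latency


rng = random.Random(0)
progress = 0.0
for success_rate in [0.5, 0.85, 0.9, 0.95]:  # made-up evaluation results
    progress = update_progress(progress, success_rate)
    print(round(progress, 1), curriculum_range(20.0, (10.0, 40.0), progress))
```

Gating the widening on measured success, rather than on training step count, keeps the curriculum from outrunning a policy that is still struggling.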

4) Validate with “reality checks,” not just reward curves

Simulation rewards can lie.

Reality checks that correlate better with deployment success:

- Recovery rate after small disturbances

- Sensitivity to a 10–20% drop in actuator strength

- Performance under delayed observations

- Contact-rich edge cases (misaligned grasps, partial footholds)

If you’re operating a fleet, treat these like regression tests.
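Here is a toy sketch of what such a regression suite could look like. The "robot" is a 1D point mass and the "policy" is a hand-written PD controller standing in for a learned one; the gains, perturbation sizes, and tolerance are assumptions chosen for illustration. In practice `run_episode` would call your simulator or a hardware test rig.

```python
from collections import deque


def run_episode(strength=1.0, obs_delay_steps=0, push=0.0, steps=500, dt=0.01):
    """Drive a unit point mass from x=0 to x=1 and return the final error.

    strength scales the actuator output, obs_delay_steps delays what the
    controller sees, and push is a velocity impulse applied at step 100.
    """
    x, v = 0.0, 0.0
    size = obs_delay_steps + 1
    history = deque([(x, v)] * size, maxlen=size)
    for t in range(steps):
        obs_x, obs_v = history[0]  # oldest entry is the delayed observation
        command = 20.0 * (1.0 - obs_x) - 8.0 * obs_v  # PD stand-in for a policy
        if t == 100:
            v += push  # external disturbance
        v += strength * command * dt
        x += v * dt
        history.append((x, v))
    return abs(1.0 - x)


# Each named check maps to one perturbation of the nominal episode.
CHECKS = {
    "nominal": {},
    "strength_drop_10pct": {"strength": 0.9},
    "strength_drop_20pct": {"strength": 0.8},
    "delayed_observations": {"obs_delay_steps": 5},
    "push_recovery": {"push": 0.5},
}


def run_checks(tolerance=0.01):
    """Return {check name: passed} for every perturbation in CHECKS."""
    return {name: run_episode(**kw) <= tolerance for name, kw in CHECKS.items()}


for name, passed in run_checks().items():
    print(f"{name}: {'PASS' if passed else 'FAIL'}")
```

Running a fixed, named set of perturbations against every new policy gives you a pass/fail history that a reward curve alone does not.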

5) Roll out like software: staged deployments and telemetry

This is where robotics borrows most directly from how software and digital services teams already ship.

Robotics teams that scale behave like SaaS teams:

- Canary deployments to a few robots/sites

- Feature flags for new control policies

- Telemetry pipelines (latency, error states, intervention rates)

- Automated rollback when failure metrics cross thresholds
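The rollback rule in the last bullet can be as simple as comparing canary telemetry against fixed limits. This is a minimal sketch: the metric names and threshold values are hypothetical, and a production system would likely add time windows and minimum sample counts before acting.

```python
from dataclasses import dataclass


@dataclass
class CanaryMetrics:
    interventions_per_hour: float
    error_state_rate: float
    p95_latency_ms: float


# Hypothetical limits for illustration; tune these to your own fleet.
THRESHOLDS = CanaryMetrics(
    interventions_per_hour=0.5,
    error_state_rate=0.02,
    p95_latency_ms=80.0,
)


def breached(metrics, thresholds):
    """List the metric names whose value exceeds its threshold."""
    return [
        name
        for name in vars(thresholds)
        if getattr(metrics, name) > getattr(thresholds, name)
    ]


def should_rollback(metrics, thresholds=THRESHOLDS):
    """Roll back the new policy if any single metric crosses its limit."""
    return len(breached(metrics, thresholds)) > 0


canary = CanaryMetrics(interventions_per_hour=1.2, error_state_rate=0.01, p95_latency_ms=95.0)
print(should_rollback(canary), breached(canary, THRESHOLDS))
```

Returning the list of breached metrics, not just a boolean, makes the rollback decision easy to log and explain afterward.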

That operational discipline is what turns “AI in robotics” into dependable automation.

Where dynamics randomization fits in U.S. automation right now

In the U.S., the strongest pull for sim-to-real isn’t novelty—it’s labor constraints, throughput pressure, and uptime expectations. Companies want robots that can handle variance: different SKUs, changing packaging, new facility layouts, seasonal volume swings.

December is a good reminder of why robustness matters. Peak season stresses everything: staffing, fulfillment, last-mile logistics, returns processing. If a robot policy is fragile, the “cost” isn’t just the robot—it’s missed shipment cutoffs, customer support load, and escalating expedite fees.

Dynamics randomization supports the kind of automation buyers actually want:

- Warehouse robotics: pick-and-place, palletizing, mobile manipulation

- Healthcare robotics: supply delivery, disinfection, assistive tasks

- Service and retail: backroom automation, inventory scanning

- Manufacturing: high-mix assembly and material handling

And it also supports the digital layer:

- More predictable event streams for monitoring tools

- Fewer false alarms and escalations in support systems

- Better forecasting for maintenance and spare parts

- Cleaner data for analytics and process optimization

People also ask: practical sim-to-real questions (answered)

Is dynamics randomization the same as domain randomization?

Not quite. Domain randomization is the broader idea of randomizing the simulator during training, and in practice the term is most often used for visuals. Dynamics randomization is the same idea applied to physics. Visual domain randomization changes textures, lighting, camera positions, and backgrounds so vision models generalize. Dynamics randomization changes masses, friction, motor strength, delays, and noise so control policies generalize.

Many real systems need both.

Do you still need real-world fine-tuning?

Often yes, but the goal is to reduce it from “weeks of tuning” to “hours of calibration.” Strong randomization can get you surprisingly close, especially for locomotion and manipulation primitives. For high-precision tasks, you’ll still do targeted adaptation.

What’s the biggest mistake teams make?

Training for average performance instead of worst-case recovery. Real deployments aren’t graded on mean reward; they’re graded on what happens when something slips, bumps, or misaligns.

If your training doesn’t include recoveries, you’re shipping a robot that panics under pressure.
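One way to see the difference is to report a tail metric next to the mean when comparing policies. The sketch below uses made-up episode scores for two hypothetical policies; the `summarize` helper and the 10% tail fraction are assumptions for illustration.

```python
def summarize(returns, tail_frac=0.1):
    """Report the mean alongside the worst episode and the worst-tail average."""
    ordered = sorted(returns)
    k = max(1, int(len(ordered) * tail_frac))  # number of episodes in the tail
    tail = ordered[:k]
    return {
        "mean": sum(returns) / len(returns),
        "worst": ordered[0],
        "tail_mean": sum(tail) / k,
    }


# Made-up scores: policy A is excellent except for one total failure,
# policy B is a little worse on average but never collapses.
policy_a = [1.0] * 9 + [0.0]
policy_b = [0.8] * 10

print("A:", summarize(policy_a))
print("B:", summarize(policy_b))
```

Policy A wins on mean score, but policy B wins on the tail, and the tail is what a customer experiences on a bad day.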

A practical next step: treat sim-to-real like a product capability

Dynamics randomization is one of the most realistic ways to make AI-driven robotics dependable outside the lab. It’s not glamorous, but it’s the kind of research that compounds: every deployment teaches you which parameters matter, which ranges were wrong, and what “robust” actually means for your customers.

If you’re building in the AI in Robotics & Automation space, I’d argue this should be a first-class capability, not an afterthought. The companies that win won’t be the ones with the fanciest demo—they’ll be the ones whose robots behave predictably across messy sites, and whose supporting digital services (monitoring, support, analytics) run quieter because the robot layer is stable.

What’s your current bottleneck: getting a policy to work in simulation, or getting it to survive the first week in the real world?