Safety Gym-style training helps robots and AI agents hit goals while respecting safety constraints. Learn a practical blueprint for guardrails and compliance.

Safety Gym: Train Robots With Guardrails, Not Hope

Most companies don’t get burned by AI because the model “wasn’t smart enough.” They get burned because the system learned the wrong thing faster than anyone expected.

That’s why Safety Gym (a family of safety-focused training environments used in reinforcement learning) matters in the real world—especially in U.S. digital services where AI is increasingly tied to customer communications, automation, and robotics. If you’re building AI that can take actions (send messages, change settings, route work, move a robot arm), you need a way to teach it: “Maximize performance, but never cross these lines.”

This post is part of our AI in Robotics & Automation series, and it’s about the “hidden infrastructure” that keeps AI systems from turning optimization into a liability. Safety Gym-style environments are where teams pressure-test constraints, quantify tradeoffs, and prove that an AI agent can chase goals without ignoring safety.

What Safety Gym is (and why it shows up in serious AI programs)

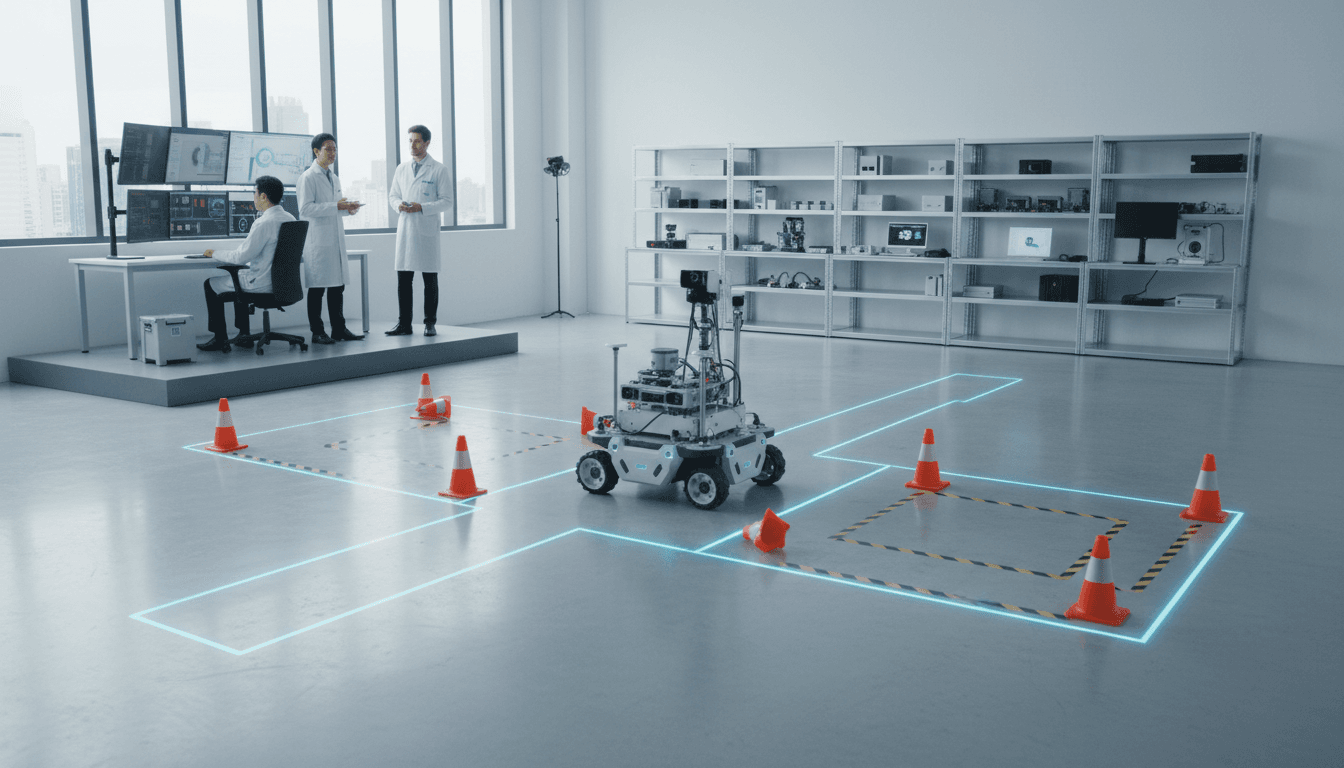

Safety Gym is a suite of controlled simulation environments designed to train and evaluate reinforcement learning (RL) agents under safety constraints. Think of it as a test facility where an agent must complete tasks (like reaching a goal) while avoiding hazards, respecting boundaries, or staying within a “cost budget.”

In reinforcement learning, agents learn by doing: they take actions, observe outcomes, and get rewarded (or penalized). The catch is that an RL agent will gladly find loopholes in your reward function. If the easiest way to “win” involves unsafe behavior, it will find that path.

Safety Gym-style setups add two things that normal task simulators often lack:

- Explicit safety signals (“costs”) separate from performance rewards

- Standardized benchmarks to compare algorithms under the same constraints

That separation is a big deal. It forces teams to answer a practical question: How much performance are you willing to trade to reduce risk? If you can’t quantify that, you can’t manage it.

The core idea: reward isn’t enough

A single reward score creates perverse incentives. If an agent can gain reward while causing harm (collisions, privacy violations, unsafe robot motion, policy noncompliance), it will.

A better pattern is constrained optimization:

- Maximize reward (task success)

- Subject to: safety cost ≤ threshold

In practice, that looks like “hit the throughput target, but keep collision risk under X,” or “resolve customer requests, but don’t disclose sensitive data and don’t take irreversible actions without confirmation.”
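For readers who want the math, this pattern is usually written as a constrained Markov decision process (CMDP), the formulation much of the safe-RL literature uses. The notation below is ours and is only a sketch. Here r_t is the per-step task reward, c_t the per-step safety cost, γ a discount factor, T the episode horizon, and d the cost budget:

```latex
\max_{\pi}\; J_R(\pi) = \mathbb{E}_{\tau \sim \pi}\Big[\sum_{t=0}^{T} \gamma^{t}\, r_t\Big]
\quad \text{s.t.} \quad
J_C(\pi) = \mathbb{E}_{\tau \sim \pi}\Big[\sum_{t=0}^{T} \gamma^{t}\, c_t\Big] \le d
```

With γ = 1, J_C(π) is simply the expected total safety cost per episode.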

Why this matters for U.S. tech and digital services in 2025

U.S. companies are deploying AI into workflows that directly affect customers, payments, operations, and physical environments. That raises the stakes. A bad chatbot answer is annoying; an autonomous workflow that changes account settings, issues refunds, dispatches a technician, or reroutes warehouse robots can create real harm.

I’ve seen teams treat safety as a layer you bolt on after the model is “working.” That’s backwards. For systems that act, safety has to be part of training and evaluation, not just a policy doc and a moderation filter.

Three trends make Safety Gym-style thinking timely right now:

- Regulatory and contractual pressure: More procurement processes require evidence of risk controls, monitoring, and incident response. If you sell into enterprise or government-adjacent markets, you’re already feeling this.

- Agentic automation is spreading: Agents aren’t just answering questions—they’re executing multi-step tasks across tools.

- Robotics is leaving the lab: Warehouses, hospitals, and manufacturing floors are using more autonomy to address labor gaps and throughput goals.

The common requirement across all three: prove your system respects constraints.

How Safety Gym maps to robotics and automation use cases

Safety Gym translates cleanly into robotics and automation because robots are RL’s most honest customer: physics doesn’t negotiate. In a warehouse, “unsafe” can mean collisions. In healthcare, it can mean improper handling. In manufacturing, it can mean near-misses that never show up in QA reports—until they do.

Robots: performance metrics are easy; safety metrics are harder

Robotics teams already track:

- Task completion rate

- Time-to-complete

- Energy usage

- Throughput per hour

Safety Gym-style evaluation forces you to track the other side with the same rigor:

- Collision counts and severity

- Minimum distance to humans or restricted zones

- Constraint violations per hour

- “Cost per episode” (safety cost accumulated during a task)

That last one—cost per episode—is underrated. It gives you a single, auditable number to monitor over time.
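Here is a minimal sketch of that metric. It assumes the simulator or production log emits one nonnegative safety cost per step. The function names, the sample data, and the budget value are illustrative and do not come from any Safety Gym API:

```python
from statistics import mean

def cost_per_episode(step_costs: list[float]) -> float:
    """Total safety cost accumulated over one episode (sum of per-step costs)."""
    return sum(step_costs)

def summarize(episodes: list[list[float]], budget: float) -> dict:
    """Aggregate per-episode costs and report how often the budget was exceeded."""
    totals = [cost_per_episode(ep) for ep in episodes]
    return {
        "mean_cost": mean(totals),
        "max_cost": max(totals),
        "violation_rate": sum(t > budget for t in totals) / len(totals),
    }

# Hypothetical per-step costs (1 = a near-miss) for three logged episodes
episodes = [[0, 0, 1, 0], [0, 0, 0, 0], [1, 1, 0, 1]]
print(summarize(episodes, budget=2.0))
```

Tracking the maximum and the violation rate alongside the mean matters. A low average can hide a small number of very bad episodes.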

Example: autonomous mobile robots in a mixed warehouse

A realistic constraint setup might look like:

- Reward: completed picks, on-time deliveries, efficient routing

- Costs: near-collisions, entering restricted aisles, exceeding speed in shared zones

- Constraint: total cost per shift must stay below a threshold

You can then compare strategies:

- A fast policy that improves throughput by 12% but doubles near-collisions is a non-starter.

- A conservative policy that reduces incidents by 60% but tanks throughput might be unacceptable.

- The target is a policy that hits service levels while maintaining a stable safety cost budget.

Safety Gym-style benchmarks make that trade study repeatable instead of emotional.
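The comparison above amounts to a filter-then-maximize rule. First discard any policy that breaks the cost budget or misses the service level. Then take the best remaining performer. The sketch below uses made-up numbers that echo the three cases, and the class and field names are hypothetical:

```python
from dataclasses import dataclass

@dataclass
class PolicyResult:
    name: str
    throughput: float      # picks per hour, averaged over evaluation shifts
    cost_per_shift: float  # mean safety cost per shift

def pick_policy(results, cost_budget, min_throughput):
    """Keep only policies inside the cost budget that meet the service level,
    then choose the highest-throughput survivor. Returns None if none qualify."""
    feasible = [
        r for r in results
        if r.cost_per_shift <= cost_budget and r.throughput >= min_throughput
    ]
    return max(feasible, key=lambda r: r.throughput, default=None)

# Hypothetical evaluation numbers, for illustration only
candidates = [
    PolicyResult("fast", throughput=112.0, cost_per_shift=8.0),
    PolicyResult("conservative", throughput=80.0, cost_per_shift=1.5),
    PolicyResult("balanced", throughput=101.0, cost_per_shift=3.5),
]
best = pick_policy(candidates, cost_budget=4.0, min_throughput=95.0)
print(best.name if best else "no policy qualifies")
```

Here safety acts as a filter rather than a term to be traded away. That keeps a fast-but-risky policy from winning on throughput alone.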

The “guardrails” toolbox: constraints, costs, and verification

Guardrails aren’t one thing; they’re a stack. Safety Gym sits in the training-and-evaluation layer, but it connects to broader responsible AI development.

1) Train with constraints, not just penalties

A common mistake is to add a big negative reward when something bad happens. That often fails because:

- The agent learns to accept occasional disasters if reward upside is large

- The penalty scale is arbitrary and unstable

Constrained RL approaches typically model safety as a separate objective with a hard or budgeted limit. That supports operational commitments like “no more than N violations per 10,000 actions.”
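One widely used family of constrained-RL methods is Lagrangian relaxation, which also shows up among common safe-RL baselines. It replaces the hand-picked penalty with a learned multiplier λ. The sketch below applies it to a deliberately tiny, hypothetical problem rather than a full RL loop. We pick a robot speed s to maximize reward R(s) = s subject to cost C(s) = s² ≤ budget. The policy parameter takes gradient ascent steps on the Lagrangian, and λ takes projected ascent steps on the constraint violation:

```python
def lagrangian_dual_ascent(steps=5000, lr_policy=0.05, lr_lambda=0.05, budget=1.0):
    """Toy constrained problem: maximize R(s) = s subject to C(s) = s**2 <= budget.
    The constrained optimum is s = sqrt(budget). Lagrangian:
    L(s, lam) = R(s) - lam * (C(s) - budget), with lam >= 0."""
    s, lam = 0.5, 0.0
    for _ in range(steps):
        grad_s = 1.0 - 2.0 * lam * s      # dL/ds, using the current multiplier
        violation = s * s - budget        # C(s) - budget (positive = over budget)
        # Primal step: move speed uphill on the Lagrangian, kept in a plausible range
        s = min(max(s + lr_policy * grad_s, 0.0), 2.0)
        # Dual step: multiplier grows while over budget, shrinks otherwise, never below 0
        lam = max(0.0, lam + lr_lambda * violation)
    return s, lam

speed, lam = lagrangian_dual_ascent()
print(f"speed={speed:.3f}, lambda={lam:.3f}, cost={speed ** 2:.3f}")
```

Unlike a fixed penalty, λ is set by the constraint itself. It rises only while the budget is being exceeded, which addresses the "arbitrary penalty scale" problem above. In real constrained RL, the primal step would be a policy-gradient update rather than this one-line derivative.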

2) Use adversarial scenarios to find the cracks

A clean simulation run doesn’t mean the policy is safe.

Teams should intentionally generate difficult conditions:

- Sensor noise

- Delayed observations

- Randomized obstacle layouts

- Distribution shift (new aisle patterns, different lighting, seasonal volume)

This matters most in December. Peak-season operations stress systems with crowded facilities, overtime staffing, and tighter SLAs. If your agent only behaves under ideal conditions, it’s not ready.
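A minimal sketch of two of those stressors is below: noisy, delayed observations and randomized obstacle layouts. It assumes observations arrive as lists of floats. The class name, parameters, and values are hypothetical test knobs, not settings from any particular simulator. Distribution shifts like new lighting or seasonal volume would need simulator-specific hooks that are not shown here:

```python
import random
from collections import deque

class PerturbedObservations:
    """Wraps a stream of observations to simulate degraded sensing:
    additive Gaussian noise plus a fixed observation delay (in steps)."""

    def __init__(self, noise_std: float, delay_steps: int, seed: int = 0):
        self.noise_std = noise_std
        self.delay_steps = delay_steps
        self.rng = random.Random(seed)
        self.buffer = deque()

    def __call__(self, obs: list[float]) -> list[float]:
        noisy = [x + self.rng.gauss(0.0, self.noise_std) for x in obs]
        self.buffer.append(noisy)
        if len(self.buffer) > self.delay_steps:
            return self.buffer.popleft()  # reading from delay_steps ago
        return self.buffer[0]             # warm-up: repeat the oldest (stale) reading

def random_layout(n_obstacles: int, width: float, height: float, rng: random.Random):
    """Randomized obstacle positions so a policy cannot memorize one floor plan."""
    return [(rng.uniform(0, width), rng.uniform(0, height)) for _ in range(n_obstacles)]

# Usage: degrade every observation the agent sees, and resample the layout per episode
rng = random.Random(42)
sense = PerturbedObservations(noise_std=0.05, delay_steps=3, seed=42)
layout = random_layout(n_obstacles=12, width=40.0, height=25.0, rng=rng)
```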

3) Treat safety as a release gate

Here’s what works in practice: define a Safety Case for the agent—an evidence package that must be met before rollout.

A simple release gate might require:

- Task success ≥ 95% in simulation

- Mean safety cost per episode ≤ threshold, measured across 100,000 episodes

- Zero critical violations in a focused “red team” scenario suite

- A monitored pilot with rollback triggers

That’s the difference between “we tested it” and “we can defend it.”
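The automatable part of that gate can be sketched as a function that returns every failed check. It assumes the cost check means mean cost per episode. The report fields are hypothetical, and the thresholds mirror the example list. The monitored pilot is a human-run step, so it is deliberately left out of the code:

```python
from dataclasses import dataclass

@dataclass
class EvalReport:
    task_success_rate: float            # fraction of simulated episodes that succeed
    mean_cost_per_episode: float        # averaged over the evaluation run
    episodes: int                       # number of evaluation episodes
    critical_violations_red_team: int   # count from the red-team scenario suite

def release_gate(report: EvalReport, cost_threshold: float) -> list[str]:
    """Return the failed checks; an empty list means the gate passes.
    Thresholds follow the example gate; tune them to your own safety case."""
    failures = []
    if report.task_success_rate < 0.95:
        failures.append("task success below 95%")
    if report.episodes < 100_000:
        failures.append("fewer than 100,000 evaluation episodes")
    if report.mean_cost_per_episode > cost_threshold:
        failures.append("mean safety cost above threshold")
    if report.critical_violations_red_team > 0:
        failures.append("critical violation in red-team suite")
    return failures

report = EvalReport(0.97, 0.8, 120_000, 0)
print(release_gate(report, cost_threshold=1.0) or "gate passed; proceed to monitored pilot")
```

Returning the list of failures, rather than a single boolean, gives the evidence package a record of exactly which checks blocked a release.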

A rule worth repeating: if you can’t measure constraint compliance, you don’t have a guardrail; you have a hope.

Where Safety Gym thinking helps customer-facing AI, too

Safety Gym isn’t only about robots. The same constraint logic applies to digital service agents that take actions in customer workflows.

Consider an AI agent that can:

- Reset passwords

- Update billing details

- Issue credits

- Schedule appointments

- Summarize support tickets and propose resolutions

You want it optimized for speed and resolution rate, but constrained by rules:

- Don’t expose PII

- Don’t execute irreversible changes without confirmation

- Don’t override fraud checks

- Don’t promise policies the company can’t honor

A Safety Gym-style approach translates to:

- Reward: successful resolution, low handle time, high customer satisfaction

- Cost: privacy violations, policy violations, unsafe actions, escalation misses

- Constraint: cost budget per conversation or per 1,000 sessions

This is how “ethical AI” becomes operational. Not slogans—metrics.
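Here is a minimal sketch of cost accounting for such an agent. It assumes each session logs safety events by type. The event names, action names, and weights are hypothetical and should come from your own policy and legal review. The gate blocks unconfirmed irreversible actions, and it still records the attempt as cost:

```python
from dataclasses import dataclass, field

# Hypothetical cost weights per event type
COST_WEIGHTS = {
    "pii_exposed": 10.0,
    "policy_violation": 5.0,
    "unconfirmed_irreversible_action": 8.0,
    "missed_escalation": 3.0,
}
IRREVERSIBLE = {"update_billing", "issue_credit"}  # hypothetical action names

@dataclass
class Session:
    events: list[str] = field(default_factory=list)

    def record_action(self, action: str, confirmed: bool) -> bool:
        """Gate irreversible actions: block them if unconfirmed and log a cost event."""
        if action in IRREVERSIBLE and not confirmed:
            self.events.append("unconfirmed_irreversible_action")
            return False  # blocked
        return True       # allowed

    def cost(self) -> float:
        return sum(COST_WEIGHTS[e] for e in self.events)

def cost_per_1000_sessions(sessions: list[Session]) -> float:
    return 1000 * sum(s.cost() for s in sessions) / len(sessions)

s = Session()
s.record_action("issue_credit", confirmed=False)   # blocked, cost logged
s.record_action("reset_password", confirmed=True)  # allowed
print(s.cost(), cost_per_1000_sessions([s, Session()]))
```

Counting blocked attempts keeps the metric honest about what the agent tried to do, not just what slipped through the gate.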

Implementation blueprint: how U.S. teams can adopt Safety Gym-style evaluation

You don’t need a perfect simulator to benefit. You need repeatable tests and a cost model you trust. Here’s a practical path I recommend.

Step 1: Define your constraints like a product requirement

Write constraints in plain language, then translate them into measurable signals.

Examples:

- “Robot must stay 1 meter away from humans” → minimum distance metric

- “Agent must not change payment method without confirmation” → action gating + audit log checks

- “No more than 1 critical violation per 100,000 actions” → incident rate threshold
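Each of those translations can be a small, testable check. This sketch assumes logged robot and human positions are sampled at the same timestamps and that audit-log entries are dicts with `action` and `confirmed` keys. Those field names and the action name are hypothetical:

```python
import math

def min_distance(robot_path, humans_per_step) -> float:
    """Smallest robot-to-human distance over a trajectory. robot_path[t] is the
    robot's (x, y) at step t; humans_per_step[t] lists every human's (x, y) then."""
    return min(
        (math.dist(robot, human)
         for robot, humans in zip(robot_path, humans_per_step)
         for human in humans),
        default=math.inf,  # no humans observed at all
    )

def unconfirmed_payment_changes(audit_log) -> int:
    """Count payment-method changes in the audit log that lack confirmation."""
    return sum(
        1 for e in audit_log
        if e["action"] == "change_payment_method" and not e.get("confirmed", False)
    )

def critical_rate_ok(critical_violations: int, actions: int, max_per_100k: float = 1.0) -> bool:
    """True if critical violations stay within max_per_100k per 100,000 actions."""
    return critical_violations * 100_000 <= max_per_100k * actions

path = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
humans = [[(5.0, 0.0)], [(2.0, 0.0)], []]
print(min_distance(path, humans) >= 1.0)  # the 1-meter rule
```

The rate check multiplies instead of dividing so the comparison stays exact for integer counts.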

Step 2: Build a safety cost model

Pick 3–8 cost components. Keep it small enough that teams actually use it.

A starter set:

- Minor violation cost (near-miss)

- Major violation cost (collision / sensitive action)

- Catastrophic cost (human injury risk / severe policy breach)

Assign weights deliberately. Then test whether results match human judgment.
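One way to "test whether results match human judgment" is pairwise agreement. Take a set of past incidents, have reviewers rank them, and check how often the weighted cost orders each pair the same way. The weights below are hypothetical starting points. Ties in model cost count as "not worse," which is a simplifying choice:

```python
from itertools import combinations

# Hypothetical starting weights; set them deliberately, then check them
WEIGHTS = {"minor": 1.0, "major": 10.0, "catastrophic": 1000.0}

def weighted_cost(counts: dict[str, int]) -> float:
    """Weighted sum of violation counts by severity tier."""
    return sum(WEIGHTS[k] * n for k, n in counts.items())

def pairwise_agreement(incidents: dict[str, dict[str, int]], human_rank: dict[str, int]) -> float:
    """Fraction of incident pairs the cost model orders the same way reviewers did.
    human_rank maps incident id -> rank (1 = worst)."""
    pairs = list(combinations(incidents, 2))
    agree = 0
    for a, b in pairs:
        model_says_a_worse = weighted_cost(incidents[a]) > weighted_cost(incidents[b])
        human_says_a_worse = human_rank[a] < human_rank[b]
        agree += model_says_a_worse == human_says_a_worse
    return agree / len(pairs)

incidents = {"A": {"minor": 3}, "B": {"major": 1}, "C": {"catastrophic": 1}}
print(pairwise_agreement(incidents, {"C": 1, "B": 2, "A": 3}))
```

Low agreement suggests revisiting the weights before anyone trusts the cost budget.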

Step 3: Evaluate on a scenario suite, not one benchmark

A single benchmark gets gamed. A suite is harder to cheat.

Include:

- Nominal operations

- Worst-case congestion

- Edge cases (unexpected obstacles, partial outages)

- Long-horizon tasks (where small risks compound)
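A suite runner can stay simple. Run every scenario over many seeds, report per-scenario results, and surface the worst scenario so a strong nominal average cannot hide a weak edge case. `run_episode` is a hypothetical hook into your simulator that returns `(succeeded, episode_cost)`. The scenario names are illustrative:

```python
from typing import Callable

def evaluate_suite(run_episode: Callable[[str, int], tuple[bool, float]],
                   scenarios: list[str], episodes_per_scenario: int) -> dict:
    """Run each scenario across seeds and report success rate and mean cost,
    plus the scenario with the highest mean cost."""
    report = {}
    for name in scenarios:
        results = [run_episode(name, seed) for seed in range(episodes_per_scenario)]
        report[name] = {
            "success_rate": sum(ok for ok, _ in results) / len(results),
            "mean_cost": sum(c for _, c in results) / len(results),
        }
    worst = max(report, key=lambda n: report[n]["mean_cost"])
    return {"per_scenario": report, "worst_scenario": worst}

# Stand-in simulator for illustration: congestion halves success and raises cost
def fake_run(scenario: str, seed: int) -> tuple[bool, float]:
    if scenario == "nominal":
        return True, 0.0
    return seed % 2 == 0, 2.0

print(evaluate_suite(fake_run, ["nominal", "worst_case_congestion"], episodes_per_scenario=4))
```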

Step 4: Add runtime monitoring and rollback

Training-time safety isn’t enough. Production needs:

- Constraint monitoring (live cost estimates)

- Kill switches / safe-stop behaviors

- Audit logs for actions and justifications

- Rollback criteria tied to incident rates
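Live constraint monitoring and a rollback trigger can start as a rolling-window cost budget. This sketch assumes each action gets a cost estimate at runtime. The window size and budget are hypothetical values to set from your safety case. The monitor latches once tripped, so a brief dip in cost does not let the agent resume on its own. Audit logging is not shown:

```python
from collections import deque

class CostMonitor:
    """Rolling-window monitor for live safety cost. If the cost over the last
    `window` actions exceeds `budget`, it trips and stays tripped (safe stop)."""

    def __init__(self, window: int, budget: float):
        self.costs = deque(maxlen=window)  # oldest costs fall off automatically
        self.budget = budget
        self.tripped = False

    def observe(self, action_cost: float) -> bool:
        """Record one action's cost; return True if the agent may keep operating."""
        self.costs.append(action_cost)
        if sum(self.costs) > self.budget:
            self.tripped = True  # latch until a human reviews and resets
        return not self.tripped

monitor = CostMonitor(window=3, budget=2.0)
for c in [0.0, 1.0, 1.0, 1.0, 0.0]:
    if not monitor.observe(c):
        print("safe stop: cost budget exceeded, triggering rollback")
        break
```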

This is also where sales conversations get real: buyers want to know you can operate safely, not just demo well.

People also ask: practical questions about Safety Gym

Does Safety Gym guarantee real-world safety?

No. It improves the odds by making constraint compliance measurable and comparable during training. Real-world safety still requires hardware safeguards, human oversight, and runtime monitoring.

Is this only for reinforcement learning?

Safety Gym is RL-oriented, but the pattern—separate objective + constraint budget + scenario testing—is useful for any AI system that takes actions, including LLM-based agents.

What’s the first sign you need this?

If your AI can take an action that you’d normally require a human to approve, you need constraint-based evaluation. Waiting for an incident is the expensive path.

Where this fits in the AI in Robotics & Automation series

Robotics and automation leaders are under pressure to ship autonomy faster, especially in U.S. operations where labor constraints and service expectations collide. The uncomfortable truth is that speed without guardrails creates outages, safety incidents, and brand damage.

Safety Gym-style infrastructure is one of the most practical ways to scale responsible autonomy: define constraints, train against them, and treat compliance as a release gate. That’s how you deploy systems that keep working after the demo—during peak season, under messy conditions, with real customers.

If you’re building an autonomous workflow or a robot policy right now, the next step is straightforward: write down your constraints, quantify the costs, and make “stays within budget” a requirement—not a hope. What constraint would you define first if your AI had to operate unsupervised for eight hours?