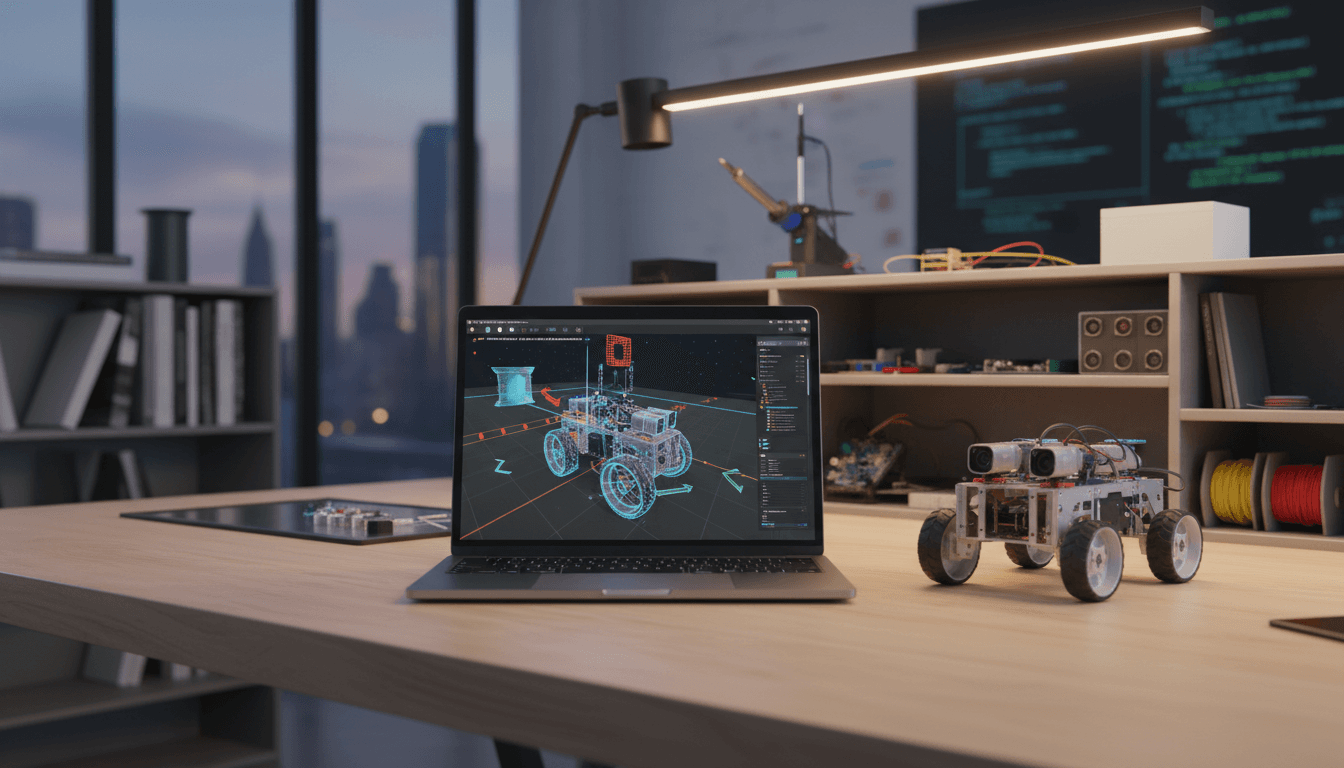

ROS 2 on Apple Silicon macOS is now running natively with Gazebo and ros2_control. Here’s why it matters for AI robotics dev loops and what to do next.

ROS 2 on Apple Silicon: Native macOS Is Finally Real

A lot of robotics teams quietly standardized on a weird development setup over the last few years: macOS laptops on desks, Linux in a VM (or a remote box) for ROS 2, and a constant low-grade fight with graphics, USB devices, networking, and performance.

That’s why the first public demo of ROS 2 Kilted running natively on macOS Apple Silicon matters. Not “it boots” or “a talker/listener works,” but a demo that includes the stuff that usually breaks first: Gazebo (Ionic), ros_gz_bridge with real sensor streaming, and ros2_control behaving reliably. If you’re building AI-enabled robots, it’s not a vanity milestone. It’s a practical step toward faster iteration, more portable development, and more local compute for perception and learning.

This post is part of our AI in Robotics & Automation series, where the theme is simple: smart robots ship when the dev loop is tight. Native ROS 2 on Apple Silicon tightens it.

What the demo actually proves (and why it’s different)

Answer first: The demo shows that modern ROS 2 workflows—simulation, bridging, and control—can run directly on Apple Silicon macOS without hiding behind Docker emulation, heavy VMs, or a second machine.

In the Open Robotics community post, developer Dhruv Patel shared a first working demo of ROS 2 Kilted running natively on Apple Silicon macOS, with explicit mentions of support for:

- Gazebo (Ionic)

- ros2_control

- MoveIt 2 (work in progress toward “solid demos”)

- Navigation2 (also work in progress toward solid demos)

The highlight isn’t the distro name. It’s the integration surface area:

Real-time data flow between ROS 2 and Gazebo

Answer first: ros_gz_bridge moving sensor and image streams in real time is a strong signal the “middle” of the stack is healthy.

In robotics, you can often get a core ROS graph running even when the rest of the ecosystem is shaky. The weak points tend to be:

- image transport and large messages

- simulator plugins and rendering paths

- timing/latency issues that show up as soon as you add controllers

A demo showing ros_gz_bridge with sensor and image data flowing between ROS 2 and Gazebo suggests the plumbing is stable enough to support serious development work—not just a screenshot of ros2 topic list.
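A quick back-of-the-envelope calculation shows why image streams are usually the first thing to stress a bridge. The sketch below computes raw (uncompressed) payload bandwidth for a few example streams; the resolutions and frame rates are illustrative assumptions, and real messages add header and serialization overhead on top of these numbers.

```python
def raw_image_bandwidth(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Bytes per second for an uncompressed image stream (pixel payload only)."""
    return width * height * bytes_per_pixel * fps


# Example streams: "rgb8" is 3 bytes per pixel, "32FC1" depth is 4 bytes per pixel.
streams = {
    "640x480 rgb8 @ 30 FPS": (640, 480, 3, 30),
    "1280x720 rgb8 @ 30 FPS": (1280, 720, 3, 30),
    "640x480 32FC1 depth @ 30 FPS": (640, 480, 4, 30),
}

for name, params in streams.items():
    print(f"{name}: {raw_image_bandwidth(*params) / 1e6:.1f} MB/s")
# 640x480 rgb8 @ 30 FPS: 27.6 MB/s
# 1280x720 rgb8 @ 30 FPS: 82.9 MB/s
# 640x480 32FC1 depth @ 30 FPS: 36.9 MB/s
```

A single simulated RGB-D camera can therefore push tens of megabytes per second through the bridge, which is why sustained image flow is a more meaningful health check than a handful of small topics.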

ros2_control stability is the hard part

Answer first: If ros2_control is stable, you’re much closer to “this is a usable dev machine” than “this is a fun port.”

For teams building AI robotics products, control is where experiments turn into behavior you can trust. Your model might propose actions, but ros2_control (and the controllers behind it) determines whether the robot actually executes those actions safely and repeatably.

When someone says, “ros2_control is running reliably—still verifying edge cases,” I read that as: the main control loop and hardware abstraction patterns are behaving, and what’s left is the kind of platform polish that community testing tends to flush out.
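To make “main control loop and hardware abstraction patterns” concrete: ros2_control’s controller manager repeatedly reads state from the hardware interfaces, updates the active controllers, and writes their commands back. The Python sketch below mimics that read, update, write cycle with a hypothetical mock joint and a hypothetical proportional controller. It is not the ros2_control API (which is C++ and plugin-based), only an illustration of the pattern.

```python
from dataclasses import dataclass


@dataclass
class MockJoint:
    """Hypothetical single-joint 'hardware' that integrates a velocity command."""
    position: float = 0.0
    velocity_command: float = 0.0

    def read(self) -> float:
        # Real hardware would read encoders here.
        return self.position

    def write(self, dt: float) -> None:
        # Real hardware would send the command to a motor driver here.
        self.position += self.velocity_command * dt


class PositionToVelocityController:
    """Hypothetical proportional controller that outputs a clamped velocity command."""

    def __init__(self, target: float, gain: float, max_velocity: float):
        self.target = target
        self.gain = gain
        self.max_velocity = max_velocity

    def update(self, measured: float) -> float:
        command = self.gain * (self.target - measured)
        # Clamp so the controller never requests more than the joint allows.
        return max(-self.max_velocity, min(self.max_velocity, command))


def run_control_loop(joint: MockJoint, controller: PositionToVelocityController,
                     rate_hz: float, steps: int) -> list[float]:
    """Run a fixed-rate read -> update -> write loop and record joint positions."""
    dt = 1.0 / rate_hz
    history = []
    for _ in range(steps):
        measured = joint.read()                                # 1) read state
        joint.velocity_command = controller.update(measured)   # 2) update controller
        joint.write(dt)                                        # 3) write command
        history.append(joint.position)
    return history
```

For example, `run_control_loop(MockJoint(), PositionToVelocityController(1.0, 5.0, 2.0), rate_hz=100.0, steps=300)` drives the mock joint from 0 to roughly 1.0. The edge cases mentioned in the post live in exactly these seams: timing of the loop, limits in the controller, and how the hardware layer behaves on a given platform.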

Why native macOS ROS 2 matters for AI robotics developers

Answer first: Native ROS 2 on Apple Silicon reduces dev friction and makes local AI experimentation faster—especially for perception pipelines, simulation-driven learning, and robotics tooling.

Most AI robotics work isn’t a single monolithic app. It’s a pipeline:

- data collection (bags, logs, synthetic data)

- model training (often remote)

- model evaluation (often local)

- deployment + runtime monitoring (robot-side)

Native ROS 2 on macOS helps at multiple points.

1) A shorter “time-to-first-test” loop

Answer first: Removing VMs and emulation removes a whole class of time sinks.

If you’ve ever burned an afternoon on:

- GUI forwarding issues with RViz or simulator rendering

- USB passthrough for lidars, depth cameras, or microcontrollers

- network discovery headaches for DDS traffic across host/guest boundaries

…you know these aren’t “learning opportunities.” They’re schedule killers.

Running ROS 2 natively on the machine you already use for product work means:

- fewer layers between your code and the robot

- fewer environment-specific bugs

- easier onboarding for new hires who already live in macOS

2) Apple Silicon is a strong fit for on-device AI workflows

Answer first: Apple Silicon pairs its CPU, GPU, and Neural Engine with unified memory shared between them, which makes it well suited to local iteration on perception and multimodal pipelines.

Even if your final robot hardware isn’t Apple-based, developers benefit when laptops can run heavier workloads locally:

- running perception nodes (segmentation, detection, depth) while simulating

- pre-processing datasets quickly (image resizing, filtering, labeling helpers)

- testing model-serving patterns (batching, throttling, QoS tuning)

The practical effect is fewer “ship it to the remote GPU box and wait” cycles for every small change.
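A common throttling pattern for running an expensive perception model next to a live simulation is “process the newest frame, drop the rest,” similar in spirit to a KEEP_LAST QoS history with depth 1. The stdlib sketch below assumes integer nanosecond timestamps; `LatestFrameThrottle` is a hypothetical helper, not a ROS API. In a real node you might call `push` from a subscription callback and `poll` from the inference loop.

```python
class LatestFrameThrottle:
    """Keep only the newest frame and release at most one per min_period_ns."""

    def __init__(self, min_period_ns: int):
        self.min_period_ns = min_period_ns
        self._pending = None           # (stamp_ns, frame) waiting to be processed
        self._last_release_ns = None
        self.dropped = 0               # frames overwritten before being processed

    def push(self, stamp_ns: int, frame) -> None:
        if self._pending is not None:
            self.dropped += 1
        self._pending = (stamp_ns, frame)

    def poll(self, now_ns: int):
        """Return the newest pending frame if the rate limit allows, else None."""
        if self._pending is None:
            return None
        if (self._last_release_ns is not None
                and now_ns - self._last_release_ns < self.min_period_ns):
            return None
        self._last_release_ns = now_ns
        item, self._pending = self._pending, None
        return item


# Simulated 20 FPS camera (50 ms apart) feeding a model limited to 10 Hz (100 ms).
throttle = LatestFrameThrottle(min_period_ns=100_000_000)
processed = []
for i in range(20):
    stamp = i * 50_000_000
    throttle.push(stamp, frame=f"frame-{i}")
    item = throttle.poll(now_ns=stamp)
    if item is not None:
        processed.append(item[0])
print(len(processed), throttle.dropped)  # 10 9
```

Dropping stale frames keeps latency bounded when the model is slower than the sensor; queueing every frame would instead let latency grow without limit.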

3) Portability isn’t a luxury in robotics: it’s risk management

Answer first: Cross-platform ROS 2 reduces dependency risk and improves hiring and collaboration.

Robotics teams are rarely homogeneous. You might have:

- research folks on macOS

- embedded folks on Linux

- QA and operations on Windows

Better macOS support in ROS 2 reduces the “single point of failure” where only a subset of the team can run the full stack. That’s not just convenience—it directly affects velocity and resilience.

What to expect next: Kilted vs Humble, and “merged upstream” implications

Answer first: The most encouraging detail is that many changes are reportedly merged upstream, which increases the odds that macOS Apple Silicon support becomes a community-visible, maintained path.

From the post:

- A full Kilted branch hadn’t been published yet at the time of writing, pending stronger MoveIt 2 and Nav2 demos.

- A Humble branch was already functional.

- “Most of the related pull requests have been merged upstream.”

Here’s how I’d interpret that if you’re deciding whether to invest time.

If you’re evaluating now (December 2025)

Answer first: Treat this as a serious preview, not guaranteed production support—yet.

A demo plus upstream merges usually means:

- core build fixes and platform patches are landing in the right places

- the remaining work is integration and validation across popular packages

But you should still plan for rough edges in:

- package availability (especially less-maintained dependencies)

- binary distribution completeness

- simulator rendering and plugin ecosystem quirks

- middleware configuration defaults that behave differently on macOS
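When middleware behaves differently from one machine to the next, the first debugging step is knowing which settings are actually in effect. The sketch below records the platform plus a handful of ROS 2 environment variables that influence the middleware, such as `RMW_IMPLEMENTATION`, `ROS_DOMAIN_ID`, and `ROS_AUTOMATIC_DISCOVERY_RANGE`. The variable list is an assumption to adapt; `ROS_LOCALHOST_ONLY` is deprecated in newer distros in favor of `ROS_AUTOMATIC_DISCOVERY_RANGE`.

```python
import json
import os
import platform

# Assumed list of middleware-related variables worth capturing; extend as needed.
ROS_ENV_VARS = (
    "ROS_DISTRO",
    "ROS_VERSION",
    "RMW_IMPLEMENTATION",
    "ROS_DOMAIN_ID",
    "ROS_AUTOMATIC_DISCOVERY_RANGE",
    "ROS_STATIC_PEERS",
    "ROS_LOCALHOST_ONLY",
)


def environment_snapshot(environ=None) -> dict:
    """Collect platform info and ROS-related environment variables into a dict."""
    env = os.environ if environ is None else environ
    return {
        "os": platform.system(),
        "machine": platform.machine(),      # "arm64" on Apple Silicon macOS
        "mac_ver": platform.mac_ver()[0],   # empty string on non-macOS systems
        "python": platform.python_version(),
        "ros": {name: env.get(name, "<unset>") for name in ROS_ENV_VARS},
    }


if __name__ == "__main__":
    print(json.dumps(environment_snapshot(), indent=2))
```

Saving this output from each developer machine makes “it works on my Mac” reports diffable.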

If you’re planning Q1–Q2 2026 work

Answer first: This is the time to pilot macOS-native developer workflows on one or two internal projects.

A smart rollout pattern:

- Pick a simulation-first project (Nav2 in sim, manipulation planning, perception in Gazebo).

- Define a reproducible dev setup (toolchain versions, package set, build steps).

- Measure what matters: time-to-build, time-to-launch sim, CPU usage, dropped frames, controller jitter.

- Document the gaps and feed them back to maintainers or patch internally.

If you wait until everything is perfect, you’ll miss the period when small contributions have outsized impact.
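For the “dropped frames” and “controller jitter” metrics in the rollout list, a simple starting point is to log receive timestamps (in a subscriber callback, or from a recorded bag) and summarize them offline; `ros2 topic hz` gives a live view, but a script makes results comparable across machines. A sketch under those assumptions. `timing_report` is a hypothetical helper, and its dropped-frame count is only an estimate inferred from gaps between timestamps.

```python
import statistics


def timing_report(stamps_ns: list[int], expected_rate_hz: float) -> dict:
    """Summarize period, jitter, and estimated drops from timestamps in nanoseconds."""
    if len(stamps_ns) < 2:
        raise ValueError("need at least two timestamps")
    expected_period_ns = 1e9 / expected_rate_hz
    periods = [b - a for a, b in zip(stamps_ns, stamps_ns[1:])]
    # A gap of about 2 periods suggests one missed message, about 3 periods two, etc.
    dropped = sum(max(0, round(p / expected_period_ns) - 1) for p in periods)
    deviations_ms = [(p - expected_period_ns) / 1e6 for p in periods]
    return {
        "mean_period_ms": statistics.fmean(periods) / 1e6,
        "jitter_std_ms": statistics.pstdev(periods) / 1e6,
        "max_abs_deviation_ms": max(abs(d) for d in deviations_ms),
        "dropped_estimate": dropped,
    }
```

For example, timestamps from a 100 Hz stream with one missing message, `[0, 10_000_000, 20_000_000, 40_000_000, 50_000_000]`, yield a mean period of 12.5 ms, a worst-case deviation of 10 ms, and one estimated drop.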

Practical use cases: where this changes the day-to-day

Answer first: Native ROS 2 on Apple Silicon is most valuable where teams iterate on AI behaviors through simulation, bridging, and control—exactly what the demo emphasizes.

Use case 1: Simulation-driven perception development

A common AI robotics workflow is:

- simulate a sensor suite

- publish camera/lidar streams

- run perception nodes

- validate outputs (topics, overlays, logs)

If your simulator and ROS graph are both native, you remove a layer that adds latency and “mystery lag.” That makes tuning perception (frame rates, synchronization, QoS) less a matter of guesswork and more a matter of measurement.
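One way to make camera and lidar synchronization measurable is to pair messages by timestamp and count how many frames find a partner within a tolerance (“slop”). The sketch below is a deliberately simplified greedy matcher written for illustration; in a real ROS 2 graph you would more likely use the approximate-time synchronizer from the `message_filters` package, whose algorithm differs. `pair_by_timestamp` is a hypothetical name.

```python
from bisect import bisect_left


def pair_by_timestamp(camera_ns: list[int], lidar_ns: list[int],
                      slop_ns: int) -> list[tuple[int, int]]:
    """Pair each camera stamp with its closest lidar stamp if within slop_ns.

    Both lists must be sorted ascending. Each lidar stamp is used at most once;
    if a camera frame's closest lidar stamp is already taken or too far away,
    that camera frame is left unpaired.
    """
    pairs = []
    used = set()
    for cam in camera_ns:
        i = bisect_left(lidar_ns, cam)
        # In a sorted list, the closest stamp is at index i - 1 or i.
        neighbors = [j for j in (i - 1, i) if 0 <= j < len(lidar_ns)]
        if not neighbors:
            continue
        best = min(neighbors, key=lambda j: abs(lidar_ns[j] - cam))
        if best in used or abs(lidar_ns[best] - cam) > slop_ns:
            continue
        used.add(best)
        pairs.append((cam, lidar_ns[best]))
    return pairs
```

The ratio of paired frames to camera frames is a simple number to track while you adjust frame rates, QoS, or slop.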

Use case 2: Faster prototyping for mobile robots (Nav2-heavy stacks)

Nav2 development often involves lots of short cycles:

- tweak costmap parameters

- adjust sensor fusion

- re-run the same scenario

Native execution helps because you’re not also fighting VM networking and CPU scheduling artifacts that can distort timing.
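Because Nav2 tuning is mostly “change one parameter, re-run the same scenario,” it helps to script the sweep. The sketch below assumes the standard nested layout of a Nav2 params file with an inflation layer named `inflation_layer` under the local costmap. The layer name, the values, the file names, and how you merge these overrides into your launch are all assumptions to match to your own configuration.

```python
from pathlib import Path

# Assumed layout: <namespace>: <node>: ros__parameters: <layer>: <param>.
OVERRIDE_TEMPLATE = """\
local_costmap:
  local_costmap:
    ros__parameters:
      inflation_layer:
        inflation_radius: {radius}
"""


def render_override(radius: float) -> str:
    """Return a small params-file override for one inflation radius."""
    return OVERRIDE_TEMPLATE.format(radius=radius)


def write_sweep(values: list[float], out_dir: str) -> list[Path]:
    """Write one override file per value and return the file paths."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    paths = []
    for radius in values:
        path = out / f"inflation_{radius:.2f}.yaml"
        path.write_text(render_override(radius))
        paths.append(path)
    return paths
```

Running the same scenario once per generated file, and logging the same timing and outcome metrics each time, turns ad hoc tweaking into a comparison.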

Use case 3: Manipulation and control validation

MoveIt 2 + controllers is where integration issues show up quickly:

- joint limits

- controller update rates

- trajectory execution delays

- feedback loop stability

Having ros2_control stable on macOS means you can do meaningful work on:

- controller configuration

- launch patterns

- simulation-to-hardware parity

…even before you plug into a real arm.
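Joint limits and trajectory timing are easy to sanity-check offline before anything reaches a controller. A minimal sketch, assuming you can export one joint’s trajectory as timestamps and positions (for example from a planned trajectory or a recorded bag). `JointLimits` and `check_trajectory` are hypothetical helpers, and velocity is estimated by finite differences, so this is only a rough check.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimits:
    min_position: float
    max_position: float
    max_velocity: float


def check_trajectory(times_s: list[float], positions: list[float],
                     limits: JointLimits) -> list[str]:
    """Return human-readable limit violations for one joint's sampled trajectory."""
    problems = []
    samples = list(zip(times_s, positions))
    for t, q in samples:
        if not limits.min_position <= q <= limits.max_position:
            problems.append(f"t={t:.2f}s: position {q:.3f} outside "
                            f"[{limits.min_position}, {limits.max_position}]")
    for (t0, q0), (t1, q1) in zip(samples, samples[1:]):
        dt = t1 - t0
        if dt <= 0:
            problems.append(f"t={t1:.2f}s: non-increasing timestamp")
            continue
        velocity = (q1 - q0) / dt  # finite-difference estimate
        if abs(velocity) > limits.max_velocity:
            problems.append(f"t={t1:.2f}s: velocity {velocity:.3f} exceeds "
                            f"{limits.max_velocity}")
    return problems
```

For example, with limits of ±1.0 rad and 2.0 rad/s, the samples `[0.0, 0.5, 1.6]` at `[0.0, 0.5, 1.0]` s report two problems: a position beyond the upper limit and a velocity of about 2.2 rad/s.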

A “People also ask” FAQ (the questions your team will raise)

Is native macOS ROS 2 just for hobbyists?

Answer first: No—if the ecosystem support stays on track, it’s a serious productivity win for professional teams.

The teams that benefit most are the ones with mixed OS environments and heavy simulation/perception work.

Should we switch our whole robotics stack to macOS?

Answer first: Don’t. Use macOS as a development platform and keep Linux as the reference deployment target.

Most robots still run Linux in production for good reasons (drivers, real-time options, vendor support). Native macOS ROS 2 is about better developer experience and faster iteration, not replacing deployment reality.

Does this reduce the need for cloud compute?

Answer first: It reduces how often you need cloud compute for small iterations; it doesn’t remove it.

Training large models and running huge simulations still belongs on dedicated GPUs and clusters. But local iteration matters because you do it dozens of times per week.

What I’d do next if I were leading an AI robotics team

Answer first: I’d run a two-week pilot and decide based on measurable developer-time savings.

Here’s a simple plan designed to expose the real costs and benefits:

- Pilot scope: Gazebo Ionic + ros_gz_bridge + a perception node + a simple controller.

- Success metrics:

  - clean build from scratch time

  - sim launch time

  - stable FPS / sensor throughput

  - reproducibility across two Mac models

- Risk checklist:

  - missing dependencies

  - packaging gaps

  - debugging tool availability (profilers, tracing)

  - differences in DDS discovery behavior
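“Clean build from scratch time” is easy to measure inconsistently. A small wrapper like the one below deletes colcon’s default `build/`, `install/`, and `log/` directories and then times a full build. The workspace path and the `--symlink-install` flag in the usage line are assumptions; substitute whatever build command your team actually runs. Note that it really deletes those directories.

```python
import shutil
import subprocess
import time
from pathlib import Path


def clean_build_time(command: list[str], workspace,
                     clean_dirs=("build", "install", "log")) -> tuple[float, int]:
    """Delete previous build outputs, run the build command, return (seconds, exit code)."""
    ws = Path(workspace).expanduser()
    for name in clean_dirs:
        # Start from scratch; missing directories are ignored.
        shutil.rmtree(ws / name, ignore_errors=True)
    start = time.perf_counter()
    result = subprocess.run(command, cwd=ws, check=False)
    elapsed = time.perf_counter() - start
    return elapsed, result.returncode
```

For example, `clean_build_time(["colcon", "build", "--symlink-install"], "~/ros2_ws")` returns the elapsed seconds and the build’s exit code. Running it a few times on each Mac model gives a spread rather than a single lucky number.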

If the pilot saves even 30–60 minutes per developer per day, it pays for itself almost immediately. In robotics, that kind of reclaimed time usually turns into more testing and fewer late surprises.
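To put the 30 to 60 minute range in weekly terms, and to estimate break-even for a pilot of a given cost, the arithmetic is simple enough to script. The inputs (a 5-day week, a 40-hour week, and whatever pilot cost and team size you plug in) are assumptions, not figures from the demo.

```python
def weekly_hours_saved(minutes_per_day: float, workdays_per_week: int = 5) -> float:
    """Hours saved per developer per week."""
    return minutes_per_day * workdays_per_week / 60


def break_even_days(pilot_cost_hours: float, developers: int,
                    minutes_per_day: float) -> float:
    """Working days until the team's saved time equals the pilot's cost."""
    saved_hours_per_day = developers * minutes_per_day / 60
    return pilot_cost_hours / saved_hours_per_day


for minutes in (30, 60):
    hours = weekly_hours_saved(minutes)
    print(f"{minutes} min/day -> {hours:.1f} h/week ({hours / 40:.2%} of a 40-hour week)")
# 30 min/day -> 2.5 h/week (6.25% of a 40-hour week)
# 60 min/day -> 5.0 h/week (12.50% of a 40-hour week)
```

For instance, `break_even_days(40, 4, 30)` returns 20.0: a pilot costing 40 engineer-hours is recovered after 20 working days if four developers each save 30 minutes a day, and larger teams or bigger savings shorten that proportionally.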

Where this goes next for AI in Robotics & Automation

Native ROS 2 on Apple Silicon macOS is a platform story, but platform stories change what gets built. When the dev environment gets easier, teams try more experiments: richer perception, more realistic simulation, tighter control integration, and more ambitious automation workflows.

If you’re building AI-enabled robots in manufacturing, healthcare, logistics, or service robotics, the question to ask your team is straightforward: what would you attempt if your ROS 2 + simulation + perception loop was 2× faster to iterate on?