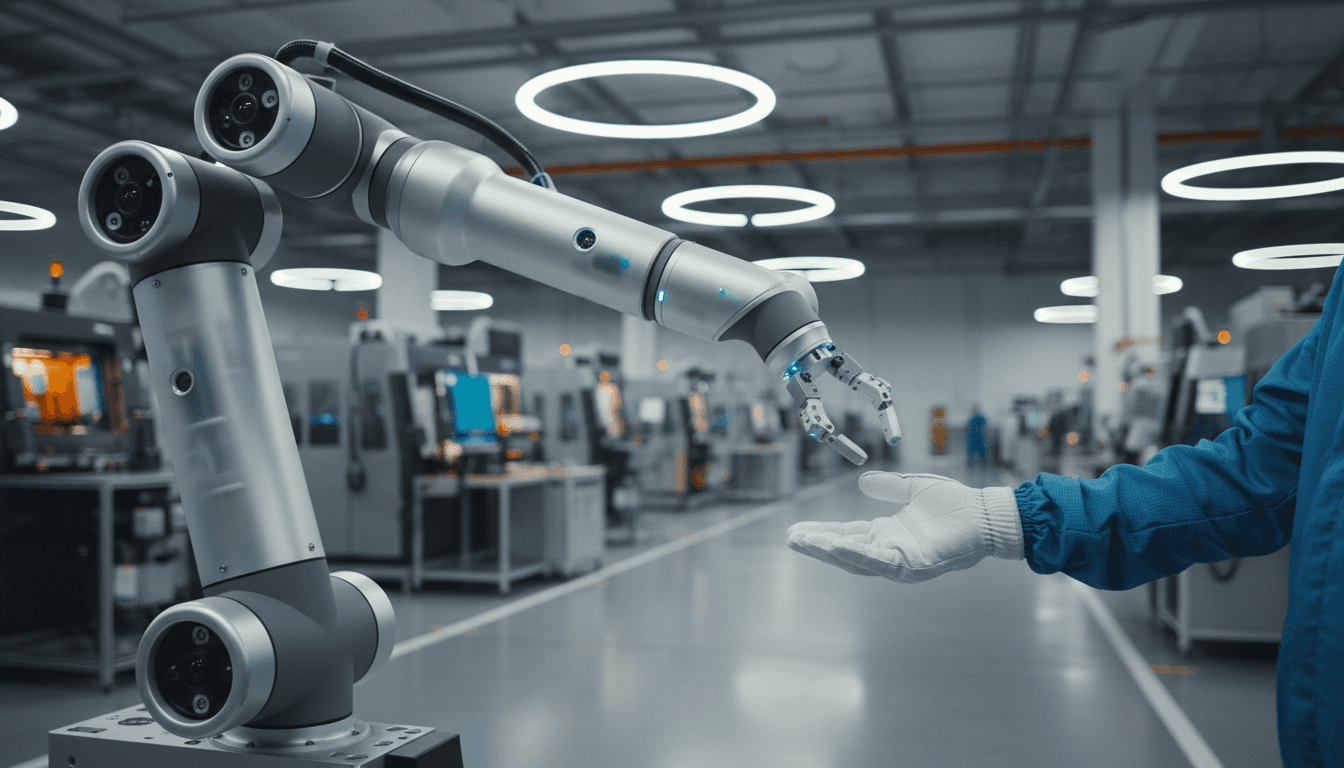

Robots can sense touch and proximity using cameras inside their arms. See how AI perception improves safety and collaboration in real deployments.

Robots That Sense Touch and Proximity Through Their Arms

A robot arm that can’t tell when a person is near is a robot arm you end up fencing off. And once you’ve built cages, light curtains, and strict exclusion zones, you’ve already limited the upside of automation.

That’s why this new direction—robots sensing both proximity and touch by “seeing” through their arms using internal cameras—is such a big deal for real-world deployments. It tackles the two signals collaborative robots struggle to combine well: “someone’s about to get too close” and “we just made contact.” In the context of our AI in Robotics & Automation series, this is exactly the kind of perception upgrade that moves robots from isolated work cells into shared human spaces.

Here’s what’s exciting: putting cameras inside a robot arm isn’t just a clever hardware trick. It’s a bet that AI-powered perception can replace stacks of fragile external sensors, simplify integration, and give robots a more human-like safety reflex.

Why proximity + touch sensing is the safety combo robots need

Answer first: Proximity sensing helps prevent collisions; touch sensing helps detect and respond to contact. You need both to work around people without turning every station into a fortress.

Most teams try to solve human-robot safety with a single approach:

- Proximity-only systems (like lidar, depth cameras, or safety scanners) can slow or stop a robot when a person enters a zone, but they can’t confirm what happened at the moment of contact.

- Touch-only systems (like joint torque sensors, wrist force/torque sensors, or tactile skins) can detect collision forces, but only after contact occurs.

In practice, safe collaboration needs a layered response:

- Pre-contact awareness (a person is approaching, a hand is reaching in)

- Contact confirmation (we touched something—human, box, fixture)

- Post-contact reaction (stop, retract, comply, or switch to a low-force mode)
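The three layers above can be sketched as a small state machine. This is a minimal illustration, not a safety-rated design: the `Reading` fields, the state names, and the 0.30 m slow-down threshold are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class SafetyState(Enum):
    NORMAL = "normal"      # nothing nearby, full speed allowed
    SLOW = "slow"          # pre-contact: something is approaching
    CONTACT = "contact"    # contact confirmed
    RECOVER = "recover"    # post-contact: contact released, area not yet clear


@dataclass
class Reading:
    distance_m: float      # estimated distance to the nearest object (hypothetical field)
    in_contact: bool       # contact flag from the touch channel (hypothetical field)


SLOW_DISTANCE_M = 0.30     # illustrative threshold, not taken from any standard


def next_state(state: SafetyState, reading: Reading) -> SafetyState:
    """Advance the layered safety response by one sensor reading."""
    if reading.in_contact:
        return SafetyState.CONTACT
    if state in (SafetyState.CONTACT, SafetyState.RECOVER):
        # Contact has ended: stay in recovery until the near field is clear.
        if reading.distance_m < SLOW_DISTANCE_M:
            return SafetyState.RECOVER
        return SafetyState.NORMAL
    if reading.distance_m < SLOW_DISTANCE_M:
        return SafetyState.SLOW
    return SafetyState.NORMAL
```

Keeping a separate `RECOVER` state means the robot does not jump straight back to full speed the instant contact ends, which is one way to get the "retract or switch to a low-force mode" behavior described above.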

What makes this tricky is that proximity sensors are often mounted externally (and get occluded), while tactile systems are distributed and expensive. The result is a patchwork that’s hard to maintain.

A useful rule of thumb: if your safety system depends on a single sensor modality, you’re one occlusion, smudge, or misalignment away from downtime.

“Seeing through the arm”: what internal cameras change

Answer first: Cameras inside the arm can infer both near-field proximity and contact events by observing deformation or motion cues across the arm’s structure, enabling a single integrated sensing approach.

The RSS summary points to a system where robots use cameras located inside their arms to detect proximity and touch. While we don’t have the full technical paper here, the concept aligns with a growing pattern in robotics: using embedded vision to observe subtle physical changes that correlate with interaction.

The basic idea (in plain terms)

If a robot’s arm has an outer “skin” or compliant layer, then:

- When something gets close, it can change lighting, shading, reflections, or the visible geometry of that skin.

- When something touches the arm, it causes localized deformation, displacement, or vibration.

An internal camera watching that layer can turn these effects into signals. AI models (often computer vision networks) can then learn mappings like:

- Distance to nearest object (proximity estimate)

- Contact location (where the arm was touched)

- Contact type (tap vs press vs sliding contact)

- Contact intensity (approximate force proxy via deformation magnitude)
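To make those outputs concrete, here is a deliberately simple stand-in for the learned model: plain frame differencing against a no-contact baseline image. It is not how the system in the summary works (that detail isn't available); it only shows how a contact location and a rough intensity proxy could fall out of pixel changes. The frame format, thresholds, and function name are assumptions.

```python
from typing import List, Optional, Tuple

Frame = List[List[int]]  # grayscale image as rows of 0-255 intensities


def detect_contact(
    baseline: Frame,
    current: Frame,
    pixel_threshold: int = 20,
    min_pixels: int = 3,
) -> Optional[Tuple[float, float, float]]:
    """Return (row, col, intensity) for the changed region, or None if no contact.

    row/col are the centroid of pixels that changed by more than
    pixel_threshold; intensity is their mean absolute change, used here
    as a crude proxy for deformation magnitude.
    """
    changed = []
    for r, (base_row, cur_row) in enumerate(zip(baseline, current)):
        for c, (b, v) in enumerate(zip(base_row, cur_row)):
            diff = abs(v - b)
            if diff > pixel_threshold:
                changed.append((r, c, diff))
    if len(changed) < min_pixels:
        return None  # too few changed pixels: treat as noise
    n = len(changed)
    row = sum(p[0] for p in changed) / n
    col = sum(p[1] for p in changed) / n
    intensity = sum(p[2] for p in changed) / n
    return (row, col, intensity)
```

A trained vision model would replace the thresholding step and could also separate proximity cues (shading changes) from true deformation, which simple differencing cannot do.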

Why internal cameras are a practical engineering move

External cameras and depth sensors are powerful, but they’re also fragile in production:

- They get occluded by the robot itself, totes, pallets, or humans.

- They need calibration and stable mounting.

- They struggle with glare, dust, and changing lighting.

Internal cameras are protected and move with the arm. That changes the integration story:

- Fewer external fixtures to mount and validate

- Less “sensor drift” from bumped brackets

- Better coverage of the arm surface (especially for near-field interaction)

In other words, you’re shifting sensing from “the cell” to “the robot.” That’s a strong trend in AI-driven automation.

Where AI fits: turning raw pixels into safety behavior

Answer first: AI makes embedded sensing usable by learning robust perception features (deformation, motion, shading) and translating them into actionable safety states in real time.

A camera inside an arm produces video—not safety decisions. The bridge is AI perception plus control.

The perception stack you actually need

Teams evaluating this kind of sensing should think in layers:

- Signal acquisition: internal RGB/IR cameras, controlled illumination (often necessary for consistency)

- Perception model: computer vision that predicts distance/contact maps on the arm surface

- State estimation: fusing predictions over time to reduce false positives

- Control policy: how the robot slows, stops, retracts, or compliantly yields

- Safety validation: proving bounded behavior under faults

The make-or-break detail is latency. If you want proximity to be useful, you need fast inference. In many industrial collaborative applications, reaction times on the order of tens of milliseconds are what separate “nice demo” from “trusted system.”
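A quick back-of-the-envelope calculation shows why latency matters so much. The sketch below computes only how far the arm travels between an event being sensed and the controller starting to react; it ignores braking distance, and the 1.0 m/s speed is an illustrative assumption.

```python
def travel_before_reaction(speed_m_s: float, latency_s: float) -> float:
    """Distance the arm covers between sensing an event and starting to react."""
    return speed_m_s * latency_s


for latency_ms in (10, 50, 200):
    travel_mm = travel_before_reaction(1.0, latency_ms / 1000) * 1000
    print(f"{latency_ms} ms latency at 1.0 m/s -> {travel_mm:.0f} mm of travel")
```

At that speed, every extra 10 ms of end-to-end latency adds roughly 10 mm of uncontrolled travel before the reaction even begins.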

Reducing false stops (the hidden ROI)

Safety is non-negotiable, but nuisance stops are what kill automation ROI.

Internal camera-based sensing can help here because it can provide more context than a single thresholded sensor. For example:

- A hand approaching the arm looks different from a tote brushing it.

- A momentary shadow isn’t the same as physical contact deformation.

With a well-trained model, you can reduce “stop for nothing” events while still stopping faster when it matters.
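One common way to suppress single-frame glitches is a simple k-of-n vote over recent frames. The sketch below is an assumption about how the state-estimation layer might be built, not a description of any specific product; the class name and default window sizes are hypothetical.

```python
from collections import deque


class ContactDebouncer:
    """Report contact only when at least `required` of the last `window` frames agree."""

    def __init__(self, window: int = 5, required: int = 3) -> None:
        if not 1 <= required <= window:
            raise ValueError("required must be between 1 and window")
        self._history = deque(maxlen=window)  # oldest frames drop off automatically
        self._required = required

    def update(self, raw_contact: bool) -> bool:
        """Feed one per-frame detection and return the filtered decision."""
        self._history.append(raw_contact)
        return sum(self._history) >= self._required
```

The trade-off is explicit: a genuine contact is confirmed only after `required` positive frames, so the filter adds a few frames of delay in exchange for ignoring isolated false detections. That delay has to fit inside the latency budget.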

My stance: the next wave of cobot value won’t come from faster arms. It’ll come from fewer unnecessary stops and simpler safety commissioning.

Use cases that benefit most in 2026 planning cycles

Answer first: Manufacturing, logistics, and healthcare benefit most because they require close human-robot interaction, variable environments, and high uptime.

As we head into 2026 budgeting and pilot planning, a lot of operations leaders are prioritizing automation that can survive variability: seasonal demand spikes, labor churn, and product mix changes. Sensing that combines touch + proximity is especially relevant in three areas.

Manufacturing: real collaboration, not “cage-free marketing”

In assembly, kitting, and machine tending, the robot’s arm often passes near:

- operators loading parts

- fixtures and clamps

- cables and pneumatic lines

Embedded proximity + touch sensing can support:

- dynamic speed limiting when an operator reaches in

- safe handovers (robot feels contact and sees approach)

- gentle recovery from incidental bumps without faulting out
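Dynamic speed limiting can be as simple as scaling the commanded speed by the estimated distance to the nearest object. The sketch below uses a linear ramp; the function name and the 0.10 m and 0.50 m thresholds are illustrative assumptions, and real limits would come from a risk assessment.

```python
def speed_scale(
    distance_m: float,
    stop_m: float = 0.10,
    full_speed_m: float = 0.50,
    in_contact: bool = False,
) -> float:
    """Return a speed multiplier in [0, 1] from proximity and contact state."""
    if stop_m >= full_speed_m:
        raise ValueError("stop_m must be smaller than full_speed_m")
    if in_contact or distance_m <= stop_m:
        return 0.0  # contact or too close: hold still
    if distance_m >= full_speed_m:
        return 1.0  # nothing nearby: full programmed speed
    # Linear ramp between the stop distance and the full-speed distance.
    return (distance_m - stop_m) / (full_speed_m - stop_m)
```

With these defaults, an operator's hand at 0.30 m would bring the arm to about half speed rather than triggering a full stop.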

Logistics: picking, packing, and depalletizing in messy spaces

Warehouses are full of occlusions: shrink wrap, cartons, pallets, and people moving unpredictably. External sensors help, but they’re not perfect.

If the arm itself can sense near-field obstacles and contact, you can improve:

- operation in tight aisles

- interaction near conveyors

- picking from cluttered bins where collisions are common

Healthcare and service robotics: trust is the product

Hospitals and care environments need robots that behave politely:

- slow down when approaching a person

- detect accidental contact immediately

- avoid rigid “industrial” movements

Touch and proximity sensing becomes part of the robot’s bedside manner. AI-driven perception is what lets that feel natural instead of jerky and overcautious.

What to ask vendors (or your robotics team) before you bet on it

Answer first: Evaluate coverage, latency, failure modes, and commissioning time—not just demo performance.

If you’re considering AI-enabled tactile and proximity sensing (whether internal cameras or other modalities), use these as your due diligence checklist.

1) What’s the sensing coverage?

- Does it cover the full arm, or only a section?

- How does it perform near joints and complex geometry?

- Can it detect contact on the “underside” where collisions often occur?

2) What’s the reaction time under load?

Ask for measured end-to-end latency across the whole chain: sensor capture → model inference → control action.

Also ask what happens when the compute is busy (e.g., vision picking + safety inference at once).
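If you want to check latency claims yourself, per-stage timing is easy to instrument. The sketch below times placeholder stages with `time.perf_counter`; the stage names and lambda bodies are stand-ins, and real stages would wrap the actual camera driver, model, and controller calls.

```python
import time
from typing import Callable, Dict, List, Tuple

Stage = Tuple[str, Callable[[object], object]]


def time_pipeline(stages: List[Stage], frame: object) -> Dict[str, float]:
    """Run each stage in order and return per-stage and total latency in ms."""
    timings: Dict[str, float] = {}
    data = frame
    start = time.perf_counter()
    for name, fn in stages:
        t0 = time.perf_counter()
        data = fn(data)  # each stage consumes the previous stage's output
        timings[name] = (time.perf_counter() - t0) * 1000.0
    timings["total"] = (time.perf_counter() - start) * 1000.0
    return timings


# Placeholder stages for illustration only.
stages = [
    ("capture", lambda _: [0] * 1000),
    ("inference", lambda pixels: sum(pixels) / len(pixels)),
    ("control", lambda score: "stop" if score > 0.5 else "run"),
]
print(time_pipeline(stages, None))
```

Run the same measurement while other workloads share the compute, and look at the worst case rather than the average, since a safety reaction is only as fast as its slowest cycle.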

3) How does it handle dirty reality?

- dust, oil mist, condensation

- lighting changes (if any optical path is exposed)

- long-term drift (materials aging, skin stiffness changes)

4) What are the failure modes and safe states?

You want clear answers to:

- What happens if a camera feed drops?

- How is sensor degradation detected?

- Does the robot default to reduced speed, stop, or continue?
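A frame-age watchdog is one way to make the dropped-feed question concrete. This is a minimal sketch under assumed thresholds (50 ms and 200 ms) and assumed mode names; a production system would implement the equivalent logic in safety-rated hardware or software.

```python
from typing import Optional


class FeedWatchdog:
    """Map the age of the last camera frame to an operating mode."""

    def __init__(self, degraded_after_s: float = 0.05, stop_after_s: float = 0.20) -> None:
        if degraded_after_s >= stop_after_s:
            raise ValueError("degraded_after_s must be smaller than stop_after_s")
        self._degraded_after_s = degraded_after_s
        self._stop_after_s = stop_after_s
        self._last_frame_s: Optional[float] = None

    def frame_received(self, now_s: float) -> None:
        """Record the timestamp of a successfully received frame."""
        self._last_frame_s = now_s

    def mode(self, now_s: float) -> str:
        """Return 'normal', 'reduced_speed', or 'stop' based on frame age."""
        if self._last_frame_s is None:
            return "stop"  # never seen a frame: fail safe
        age = now_s - self._last_frame_s
        if age >= self._stop_after_s:
            return "stop"
        if age >= self._degraded_after_s:
            return "reduced_speed"
        return "normal"
```

The key design choice is that missing data never maps to "continue": with no frames, or with stale ones, the watchdog falls back to a more conservative mode.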

5) How painful is commissioning?

The fastest path to ROI is often reducing integration time:

- How many parameters need tuning?

- Is there a training/calibration routine per robot?

- Can you redeploy the robot to a new station without redoing everything?

People also ask: practical questions about touch + proximity robots

Can camera-based sensing replace force/torque sensors?

For many tasks, it can reduce reliance on them, but it won’t fully replace wrist force/torque sensing in precision insertion, high-compliance assembly, or force-controlled polishing. The strongest setups combine modalities.

Is this “safe” enough for real collaborative operation?

Safety isn’t a single feature; it’s a system. Embedded sensing can improve safety behavior, but deployment still requires proper risk assessment, validation, and appropriate safety-rated components where mandated.

Won’t cameras inside the arm make maintenance harder?

It depends on the design. If the camera module is sealed and serviceable, it can be easier than maintaining a ring of external sensors. If it requires arm disassembly, it’s a problem. Ask how replacements work and what the mean time to repair looks like.

Where this fits in the AI in Robotics & Automation roadmap

Touch and proximity sensing through internal arm cameras is a strong example of where the field is going: robots that perceive through their own bodies, not just through a head-mounted camera or a cell-wide sensor network.

For leaders buying automation, the takeaway is simple: the biggest safety improvements often come from better perception, not stricter exclusion zones. For robotics teams, the opportunity is even clearer—build systems that respond smoothly to people because they can detect approach and interpret contact.

If you’re planning a 2026 pilot for collaborative robotics in manufacturing, healthcare, or logistics, this is the kind of capability that can turn “interesting demo” into “trusted coworker.”

So here’s the forward-looking question I’d use to pressure-test your roadmap: If a person steps into your robot’s workspace unexpectedly, does your system slow down intelligently—or does it just panic-stop and wait for a reset?