Robotics simulation helps AI learn faster and safer. See how Roboschool-style training environments power U.S. automation in robots and digital services.

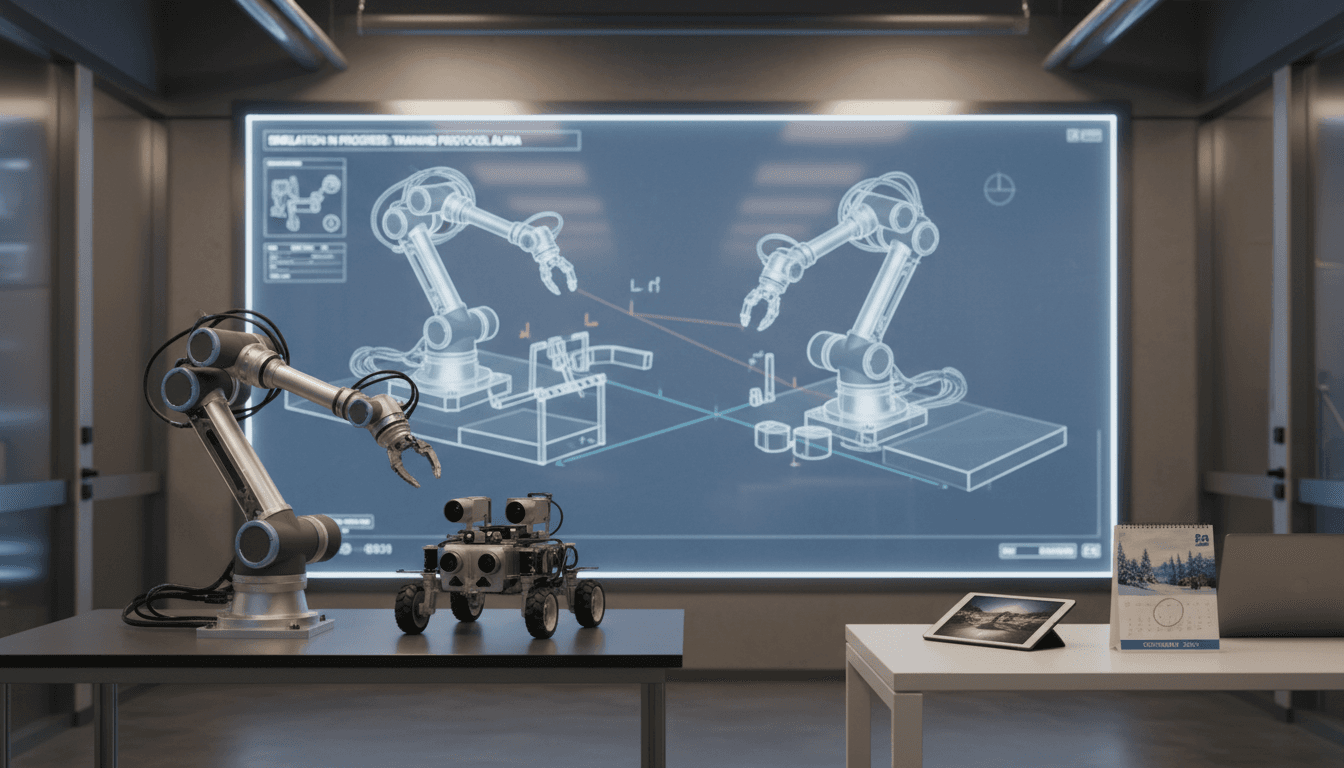

Robotics Simulation: How AI Trains Robots Faster

Most AI robotics projects don’t fail because the model is “bad.” They fail because training in the real world is slow, expensive, and risky. A single mistake can snap a gripper, crash an arm into a fixture, or shut down a work cell for hours. That’s why robotics simulation environments—like the research concept behind Roboschool—matter so much: they let AI learn the messy parts of control and movement without breaking anything.

The catch is that many people treat simulation like a toy problem. I don’t. Simulation is one of the most practical ways the U.S. digital economy is turning AI research into scalable automation—because the same training patterns that teach a robot to walk can also teach software agents to schedule, route, detect anomalies, and optimize workflows in digital services.

This post is part of our “AI in Robotics & Automation” series, and it’s about the real value behind robotics training environments: how they accelerate learning, what they teach that traditional programming can’t, and how U.S. teams can apply the same playbook to automation in manufacturing, logistics, healthcare, and digital operations.

Roboschool’s real lesson: training needs a “safe world”

Answer first: The core idea behind Roboschool-style research is simple—AI needs a controlled training ground where it can practice millions of actions, get feedback, and improve. That safe world is a simulator.

Roboschool, OpenAI's open-source collection of simulated robot-control tasks, is well known in AI robotics circles, and it stands for a broader category: simulated robotics tasks used in reinforcement learning (RL). Whether a team uses a classic physics engine, a modern simulation stack, or a custom digital twin, the motivation is the same:

- Real robots are slow: you can’t run thousands of training episodes per hour on hardware.

- Real robots are fragile: exploration (trying random actions) is how RL learns, but it’s also how things break.

- Real environments are inconsistent: lighting, friction, wear, and human interference add noise that’s hard to control.

Simulation flips the economics. You can run training 24/7, parallelize it across many machines, and reset the world instantly after failure. That makes it a foundational technique for AI-powered automation.

Why this connects directly to U.S. digital services

Answer first: Robotics simulation isn’t just “for robots.” It’s a template for building AI that improves through feedback loops—exactly how modern digital services scale.

If you run a U.S.-based digital operation—customer support, fraud detection, delivery routing, inventory optimization—your “robot” is often software. The same principle applies: you want an environment where the AI can practice decisions safely before it touches production.

In digital services, “simulation” may look like:

- Replay of historical logs (what would the model have done?)

- Synthetic user journeys (how would changes affect conversions?)

- Sandboxed agent environments (how does the agent behave with guardrails?)

- Digital twins of business processes (what happens if demand spikes 20%?)

Robotics made this mindset unavoidable. And now it’s spreading to the rest of automation.
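Here's a minimal sketch of the first item, log replay. It assumes each historical record holds a context, the action that was actually taken, and whether the outcome was acceptable. The `LogRecord` fields, the `replay` function, and the toy escalation rule are all hypothetical illustrations, not a standard API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class LogRecord:
    # Hypothetical shape of one logged production decision.
    context: dict
    action_taken: str
    outcome_ok: bool


def replay(logs: list[LogRecord], policy: Callable[[dict], str]) -> dict:
    """Ask a candidate policy what it would have done on each historical case."""
    agree = 0
    diverged_on_failures = 0
    for rec in logs:
        proposed = policy(rec.context)
        if proposed == rec.action_taken:
            agree += 1
        elif not rec.outcome_ok:
            # The candidate disagrees exactly where the logged decision went badly:
            # worth a human review, but replay alone can't prove it would have worked.
            diverged_on_failures += 1
    return {
        "agreement_rate": agree / len(logs) if logs else 0.0,
        "diverged_on_failures": diverged_on_failures,
    }


def escalate_big_tickets(ctx: dict) -> str:
    # Toy candidate rule: escalate anything above a hypothetical value threshold.
    return "escalate" if ctx["value"] > 500 else "auto_reply"


logs = [
    LogRecord({"value": 900}, "escalate", True),
    LogRecord({"value": 50}, "auto_reply", True),
    LogRecord({"value": 700}, "auto_reply", False),
]
print(replay(logs, escalate_big_tickets))
```

The sketch deliberately scores agreement and flags divergences instead of claiming a win: logs only show what happened after the actions that were actually taken, so a divergent decision still needs review or a live test.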

How AI learns robot control (and why it’s different from rules)

Answer first: Robots are hard because they’re continuous systems—small errors compound fast. AI control methods learn policies that map observations to actions in real time.

Traditional automation often relies on deterministic logic: “if sensor A trips, do B.” That’s fine for simple, stable processes. But robots operate in continuous space: joint angles, torque, velocity, contact forces, and time delays. Hand-writing rules for every edge case is a losing strategy.

Roboschool-like environments popularized a training approach where an agent:

- Observes the simulated world (positions, velocities, sometimes camera feeds)

- Chooses an action (joint torques, target angles)

- Receives a reward (stay upright, move forward, conserve energy)

- Updates its policy to get more reward next time

That loop, repeated over thousands or millions of episodes, is reinforcement learning in a nutshell.
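To make the four steps concrete, here's a minimal tabular Q-learning sketch. It assumes a hypothetical toy simulator: a six-position track where the agent earns +1 for reaching the end and pays 0.01 per step, a stand-in for "move forward, conserve energy." Roboschool's tasks have continuous joint torques and call for neural-network policies, so treat this as the shape of the loop, not a recipe for a walker.

```python
import random

N_STATES = 6        # positions 0..5 on a toy 1-D track; position 5 is the goal
ACTIONS = (0, 1)    # 0 = step left, 1 = step right


def step(state, action):
    """Toy simulator: returns (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True       # reached the goal
    return nxt, -0.01, False        # small per-step cost, like energy use


def greedy(q, state):
    return max(ACTIONS, key=lambda a: q[state][a])


def train(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0                               # instant reset after every episode
        for _ in range(100):                    # cap episode length
            # Observe + choose: epsilon-greedy exploration
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state)
            nxt, reward, done = step(state, action)   # receive reward
            # Update: one-step Q-learning toward reward + discounted best next value
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


q = train()
print([greedy(q, s) for s in range(N_STATES - 1)])  # learned action at each position
```

Q-learning is used here only because it fits in a few lines; the reset-and-retry inner loop is the part that simulation makes cheap.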

What simulation teaches that real-world pilots often miss

Answer first: Simulation helps you discover failure modes early—especially around recovery, rare events, and “oops moments.”

In the real world, teams tend to avoid risky situations because downtime is expensive. But those risky situations are exactly what models must handle:

- A package slips in the gripper

- A caster wheel hits a cable ramp

- A person steps into the robot’s path

- A conveyor speed changes unexpectedly

A good simulator lets you force those scenarios and see what happens. That’s not academic. That’s how you prevent expensive incidents later.
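A minimal sketch of forced-scenario testing, assuming a hypothetical toy pick task whose state tracks grip, a person nearby, and conveyor speed. The scenario names, the recovery rules in `required_action`, and both controllers are illustrative assumptions; the point is that the harness injects each rare event at a known step instead of waiting for it on the floor.

```python
import random

SCENARIOS = ("nominal", "package_slip", "person_in_path", "conveyor_speed_change")


def inject(state, scenario, rng):
    # Force the rare event on demand.
    if scenario == "package_slip":
        state["holding"] = False
    elif scenario == "person_in_path":
        state["person_near"] = True
    elif scenario == "conveyor_speed_change":
        state["conveyor_speed"] = rng.uniform(1.5, 2.0)


def required_action(state):
    # Hypothetical recovery rules the controller is expected to follow.
    if state["person_near"]:
        return "stop"
    if not state["holding"]:
        return "regrasp"
    if abs(state["conveyor_speed"] - 1.0) > 0.1:
        return "resync"
    return None  # nominal: any action is acceptable


def run_scenario(controller, scenario, steps=20, inject_at=10, seed=0):
    rng = random.Random(seed)
    state = {"holding": True, "person_near": False, "conveyor_speed": 1.0}
    for t in range(steps):
        if t == inject_at:
            inject(state, scenario, rng)
        action = controller(dict(state))        # controller sees a copy of the state
        needed = required_action(state)
        if needed is not None and action != needed:
            return {"scenario": scenario, "passed": False, "failed_at": t}
        # Toy recovery dynamics.
        if action == "regrasp":
            state["holding"] = True
        elif action == "resync":
            state["conveyor_speed"] = 1.0
    return {"scenario": scenario, "passed": True, "failed_at": None}


def naive(state):
    return "move"                                # ignores every disturbance


def careful(state):
    if state["person_near"]:
        return "stop"
    if not state["holding"]:
        return "regrasp"
    if abs(state["conveyor_speed"] - 1.0) > 0.1:
        return "resync"
    return "move"


for name in SCENARIOS:
    print(name, run_scenario(naive, name)["passed"], run_scenario(careful, name)["passed"])
```

The naive controller passes the nominal run and fails every forced scenario at the injection step, which is exactly the kind of gap a short real-world pilot can miss.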

A concrete benchmark mindset (even if you don’t build robots)

Answer first: The biggest win isn’t the simulator itself—it’s using it to standardize evaluation.

Robotics research environments encourage teams to compare methods on shared tasks, with consistent metrics. Businesses can borrow that approach.

If you’re implementing AI automation in a digital service, define “tasks” and “scores” just as ruthlessly:

- Time-to-resolution (support)

- Cost per route (logistics)

- False positive rate (fraud)

- Uptime impact (operations)

If you can’t measure it, you can’t improve it—and you can’t sell it internally.
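Borrowing the benchmark habit might look like this minimal sketch: one fixed set of cases, fixed metric definitions, and every candidate scored the same way. The fraud cases, the two threshold rules, and the function names are hypothetical.

```python
# Hypothetical fixed benchmark: every method sees exactly these cases.
FRAUD_CASES = [  # (transaction amount, is_fraud)
    (20, False), (35, False), (900, True), (15, False),
    (1200, True), (640, False), (80, False), (2500, True),
]


def false_positive_rate(flags, labels):
    """Share of legitimate transactions that were wrongly flagged."""
    legit = [f for f, y in zip(flags, labels) if not y]
    return sum(legit) / len(legit) if legit else 0.0


def recall(flags, labels):
    """Share of fraudulent transactions that were caught."""
    fraud = [f for f, y in zip(flags, labels) if y]
    return sum(fraud) / len(fraud) if fraud else 0.0


def evaluate(method):
    labels = [y for _, y in FRAUD_CASES]
    flags = [method(amount) for amount, _ in FRAUD_CASES]
    return {"fpr": false_positive_rate(flags, labels), "recall": recall(flags, labels)}


# Two candidate rules, compared on identical cases with identical metrics.
methods = {
    "threshold_500": lambda amount: amount > 500,
    "threshold_1000": lambda amount: amount > 1000,
}
scoreboard = {name: evaluate(m) for name, m in methods.items()}
for name, scores in scoreboard.items():
    print(f"{name}: FPR={scores['fpr']:.2f} recall={scores['recall']:.2f}")
```

Neither rule wins on both scores (the stricter threshold has no false positives but misses a fraud case), which is why the scoreboard reports more than one number.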

From simulation to real robots: the “sim-to-real” gap

Answer first: Simulation accelerates learning, but models can fail in production unless you plan for the differences between simulated physics and real-world chaos.

The classic problem is the sim-to-real gap: friction isn’t identical, motors have backlash, sensors drift, floors flex, loads vary. A policy that’s perfect in simulation can stumble immediately on a real robot.

Here’s what actually works in practice.

Domain randomization: train for variation, not perfection

Answer first: You narrow the sim-to-real gap by making simulation less perfect, not more.

Teams randomize parameters during training:

- Mass and center-of-gravity of objects

- Surface friction and restitution

- Motor strength and latency

- Sensor noise and dropped readings

The policy learns robustness because it can’t “cheat” by relying on a single perfect physics setting.
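A minimal sketch of domain randomization, assuming a hypothetical 1-D "push a mass to a target" task driven by a PD controller. To keep it short, training is replaced by picking the best of a few candidate gain pairs across randomized worlds; the `PhysicsParams` fields, the ranges, and the candidate gains are all illustrative. A real pipeline would resample a physics engine's parameters and train a learned policy, but the key move is the same: every episode sees a different world.

```python
import random
from collections import deque
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    mass: float
    friction: float
    motor_gain: float
    latency_steps: int
    sensor_noise: float


# Hypothetical ranges; real ones come from measuring your hardware.
RANGES = {"mass": (0.8, 1.2), "friction": (0.05, 0.3), "motor_gain": (0.85, 1.15),
          "latency_steps": (0, 3), "sensor_noise": (0.0, 0.02)}


def sample_params(rng):
    """Draw a fresh 'world' for each episode."""
    return PhysicsParams(
        mass=rng.uniform(*RANGES["mass"]),
        friction=rng.uniform(*RANGES["friction"]),
        motor_gain=rng.uniform(*RANGES["motor_gain"]),
        latency_steps=rng.randint(*RANGES["latency_steps"]),  # inclusive bounds
        sensor_noise=rng.uniform(*RANGES["sensor_noise"]),
    )


def run_episode(kp, kd, p, seed, steps=200, dt=0.02):
    """Push a mass from x=0 to x=1 with a PD controller; return the final position error."""
    rng = random.Random(seed)
    x, v = 0.0, 0.0
    delayed = deque([0.0] * (p.latency_steps + 1), maxlen=p.latency_steps + 1)
    for _ in range(steps):
        delayed.append(x)                        # oldest entry = reading from latency_steps ago
        observed = delayed[0] + rng.gauss(0.0, p.sensor_noise)
        u = kp * (1.0 - observed) - kd * v       # velocity read directly, for brevity
        a = (p.motor_gain * u - p.friction * v) / p.mass
        v += a * dt                              # semi-implicit Euler integration
        x += v * dt
    return abs(1.0 - x)


def pick_robust_gains(candidates, n_worlds=50, seed=0):
    """Choose the gains with the lowest average error across randomized worlds."""
    rng = random.Random(seed)
    worlds = [sample_params(rng) for _ in range(n_worlds)]  # same worlds for every candidate

    def avg_error(gains):
        return sum(run_episode(*gains, w, seed=i) for i, w in enumerate(worlds)) / n_worlds

    return min(candidates, key=avg_error)


print(pick_robust_gains([(4.0, 2.0), (8.0, 3.0), (16.0, 4.0)]))
```

Scoring every candidate on the same sampled worlds keeps the comparison fair; the winner is whichever controller copes best with the spread of masses, friction, latency, and noise, not the one tuned to a single nominal setting.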

Hybrid training: use sim for scale, reality for calibration

Answer first: The practical pipeline is simulation-first, hardware-second.

A common pattern:

- Train in simulation until the policy is competent

- Validate on real hardware with strict safety constraints

- Fine-tune with limited real-world data

- Feed failures back into simulation scenarios

This loop is also how strong digital automation systems mature. You start in a sandbox, validate in staging, then carefully roll out to production with monitoring and rollback.

Safety isn’t optional (and it’s not just a robotics issue)

Answer first: Any AI that takes actions needs guardrails—rate limits, safe action sets, and kill switches.

In robotics, safety means constraints on velocity, force, and workspace boundaries. In digital services, it’s the same idea translated:

- Permission boundaries (what the agent can touch)

- Human approval steps for high-impact actions

- Spending caps, rate limits, and audit logs

- Exception handling and fallbacks

If your AI can’t fail safely, you don’t have automation—you have risk.
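Translated into code for a digital agent, those guardrails can be a single gate every proposed action must pass. This is a minimal sketch; the `Guardrails` class, its thresholds, and the action names are hypothetical, and exception handling and fallbacks are left to the caller.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Guardrails:
    allowed_actions: set[str]       # permission boundary
    approval_threshold: float       # actions costing more need a human
    daily_spend_cap: float
    max_actions_per_run: int        # simple rate limit
    spent: float = 0.0
    actions_taken: int = 0
    killed: bool = False
    audit_log: list[str] = field(default_factory=list)

    def check(self, action: str, cost: float, approved: bool = False) -> str:
        """Return 'allow', 'needs_approval', or 'deny', and record the decision."""
        if self.killed:
            decision = "deny"                   # kill switch engaged
        elif action not in self.allowed_actions:
            decision = "deny"                   # outside permission boundary
        elif self.actions_taken >= self.max_actions_per_run:
            decision = "deny"                   # rate limit reached
        elif self.spent + cost > self.daily_spend_cap:
            decision = "deny"                   # spending cap would be exceeded
        elif cost > self.approval_threshold and not approved:
            decision = "needs_approval"         # human approval step
        else:
            decision = "allow"
            self.spent += cost
            self.actions_taken += 1
        self.audit_log.append(f"{action} cost={cost:.2f} -> {decision}")
        return decision

    def kill(self) -> None:
        self.killed = True


g = Guardrails({"issue_refund", "send_email"}, approval_threshold=100.0,
               daily_spend_cap=500.0, max_actions_per_run=10)
print(g.check("send_email", 0.0))                       # allow
print(g.check("issue_refund", 250.0))                   # needs_approval
print(g.check("issue_refund", 250.0, approved=True))    # allow
print(g.check("delete_account", 0.0))                   # deny
```

The robotics version has the same shape, with velocity, force, and workspace limits in place of permissions and spending caps.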

Where this shows up in U.S. automation right now

Answer first: Robotics training environments are already shaping U.S. automation in warehouses, manufacturing cells, healthcare operations, and field robotics—because they reduce iteration time.

Even in late 2025, the most valuable AI advantage in automation isn’t a fancy demo. It’s shorter build-measure-learn cycles.

Logistics and warehousing

Answer first: Simulation helps optimize routing, picking, and robot fleet behavior before deploying changes to a live warehouse.

Warehouses are full of interacting constraints: humans, forklifts, AMRs, pick stations, and throughput targets. Simulation supports:

- Testing new pick-path strategies without disrupting shifts

- Stress-testing peak season behavior (holiday surges, weather delays)

- Training policies for congestion avoidance and collision reduction

This is a direct bridge to digital services: the same optimization logic applies to last-mile delivery routing and customer promise times.
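Testing a pick-path strategy offline can start as small as this sketch. It assumes a hypothetical warehouse modeled as a grid with Manhattan (aisle-style) distances and compares picking items in the order listed against a greedy nearest-neighbor heuristic on 200 simulated orders. Real layouts add aisle geometry, one-way zones, and congestion that this ignores.

```python
import random


def manhattan(a, b):
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def route_length(start, stops):
    """Distance to visit stops in order and return to the pack station."""
    total, here = 0, start
    for s in stops:
        total += manhattan(here, s)
        here = s
    return total + manhattan(here, start)


def nearest_neighbor(start, stops):
    """Greedy heuristic: always walk to the closest remaining pick location."""
    remaining, here, order = list(stops), start, []
    while remaining:
        nxt = min(remaining, key=lambda s: manhattan(here, s))
        remaining.remove(nxt)
        order.append(nxt)
        here = nxt
    return order


rng = random.Random(42)
orders = [[(rng.randint(0, 20), rng.randint(0, 40)) for _ in range(8)] for _ in range(200)]
baseline = sum(route_length((0, 0), o) for o in orders) / len(orders)
candidate = sum(route_length((0, 0), nearest_neighbor((0, 0), o)) for o in orders) / len(orders)
print(f"as listed: {baseline:.1f}  nearest-neighbor: {candidate:.1f}")
```

Swapping in a different strategy only means replacing `nearest_neighbor`; the simulated orders and the scoring stay fixed, so the comparison never touches a live shift.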

Manufacturing and cobots

Answer first: Simulation reduces downtime by letting teams validate work-cell changes virtually.

Manufacturing teams can use simulation to:

- Evaluate whether a new part geometry breaks a grasp strategy

- Adjust robot trajectories for new fixtures

- Check reachability and cycle time before retooling

The practical impact is uptime. If you can avoid one unplanned day of line downtime, the simulator may pay for itself quickly.
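The reachability and cycle-time checks can be sketched for a planar two-link arm using the standard law-of-cosines inverse kinematics. The link lengths, joint speed, and dwell time are hypothetical, and the cycle-time estimate assumes constant joint speed with no acceleration limits, so it understates real cycle times.

```python
import math

L1, L2 = 0.5, 0.4                      # hypothetical link lengths (m)
MAX_JOINT_SPEED = math.radians(90)     # hypothetical joint speed (rad/s)
DWELL_S = 0.8                          # hypothetical grasp/release time per stop (s)


def inverse_kinematics(x, y):
    """Joint angles (rad) for a planar 2-link arm reaching (x, y), or None if unreachable."""
    c2 = (x * x + y * y - L1**2 - L2**2) / (2 * L1 * L2)
    if abs(c2) > 1.0:
        return None                    # outside the reachable annulus
    t2 = math.acos(c2)                 # one of the two elbow configurations
    t1 = math.atan2(y, x) - math.atan2(L2 * math.sin(t2), L1 + L2 * math.cos(t2))
    return t1, t2


def forward_kinematics(t1, t2):
    return (L1 * math.cos(t1) + L2 * math.cos(t1 + t2),
            L1 * math.sin(t1) + L2 * math.sin(t1 + t2))


def cycle_time(waypoints, home_angles=(0.0, 0.0)):
    """Joints move together, so each move lasts as long as its largest joint change."""
    angles = [inverse_kinematics(*p) for p in waypoints]
    if any(a is None for a in angles):
        return None                    # a waypoint on the new fixture is out of reach
    total, current = 0.0, home_angles
    for a in angles:
        move = max(abs(a[0] - current[0]), abs(a[1] - current[1])) / MAX_JOINT_SPEED
        total += move + DWELL_S
        current = a
    return total


print(cycle_time([(0.6, 0.3), (0.2, 0.5)]))   # current fixture
print(cycle_time([(0.6, 0.3), (1.0, 0.2)]))   # proposed fixture: None, second point unreachable
```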

Healthcare and service robotics

Answer first: Controlled training environments are essential when the cost of a mistake is high.

Hospitals and care facilities care about reliability, predictable behavior, and safety around people. Simulation helps validate:

- Navigation around dynamic obstacles

- Hand-off behaviors (cart delivery, specimen transport)

- Recovery behaviors when sensors fail or doors don’t open

Even if the “robot” is a software agent coordinating staff schedules and supply delivery, the same theme applies: train on realistic scenarios before going live.

Practical checklist: building your own “Roboschool mindset”

Answer first: You don’t need a physics lab to benefit from this. You need a training environment, a score, and a feedback loop.

If you’re leading an AI automation initiative—robotics or digital services—here’s a checklist I’ve found effective.

1) Define tasks like products, not experiments

Write a crisp task statement:

- Input signals the agent can observe

- Actions it’s allowed to take

- Constraints it must respect

- Success metrics (and failure definitions)

If you can’t describe the task in half a page, it’s probably too broad.
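One way to keep a task statement honest is to write it as data the training and evaluation code must consume. This is a minimal sketch; the `TaskSpec` fields mirror the four bullets, while the triage example, its values, and the vagueness checks (including the arbitrary 20-action cutoff) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TaskSpec:
    name: str
    observations: tuple[str, ...]        # input signals the agent can observe
    actions: tuple[str, ...]             # actions it is allowed to take
    constraints: tuple[str, ...]         # rules it must respect
    success_metric: str
    target: float                        # threshold that counts as success
    failure_conditions: tuple[str, ...]

    def validate(self) -> list[str]:
        """Flag specs too vague to train or evaluate against."""
        problems = []
        if not self.observations:
            problems.append("no observable inputs")
        if not self.actions:
            problems.append("no allowed actions")
        if not self.failure_conditions:
            problems.append("failure is undefined")
        if len(self.actions) > 20:       # arbitrary illustrative cutoff
            problems.append("action space may be too broad for one task")
        return problems


triage = TaskSpec(
    name="support_ticket_triage",
    observations=("ticket_text", "customer_tier", "open_ticket_count"),
    actions=("route_to_billing", "route_to_tech", "escalate_to_human"),
    constraints=("never auto-close a ticket", "escalate legal threats"),
    success_metric="median_time_to_resolution_hours",
    target=4.0,
    failure_conditions=("misroute rate above 5%", "any SLA breach on priority tickets"),
)
print(triage.validate())  # []
```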

2) Build the simulator you can afford this quarter

Start smaller than you think:

- For robotics: kinematic simulation before full contact physics

- For digital services: log replay before fully synthetic user simulation

Momentum matters more than realism early on.

3) Instrument everything

Your environment should output:

- Episode score (reward or KPI)

- Constraint violations

- Time-to-success

- The “why” behind failures (which state triggered them)

This is how you turn model training into engineering, not guesswork.
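A minimal sketch of an episode record that captures all four outputs. The `EpisodeRecord` class and the toy gripper episode (force values, the 8 N limit, the 6 N success threshold) are hypothetical; the point is that every episode emits the same fields, including the state that triggered the first violation.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class EpisodeRecord:
    episode: int
    score: float = 0.0                           # cumulative reward or KPI
    violations: list[str] = field(default_factory=list)
    time_to_success: int | None = None           # step of first success, None if never
    failure_state: dict | None = None            # snapshot of the first offending state

    def step(self, t: int, state: dict, reward: float, violated: list[str], success: bool) -> None:
        self.score += reward
        self.violations.extend(f"t={t}: {v}" for v in violated)
        if violated and self.failure_state is None:
            self.failure_state = dict(state, t=t)    # keep the state that explains the failure
        if success and self.time_to_success is None:
            self.time_to_success = t

    def to_json(self) -> str:
        return json.dumps(asdict(self))


# Toy episode: a hypothetical gripper ramps force until the part is secure.
rec = EpisodeRecord(episode=7)
for t, force in enumerate([2.0, 4.0, 6.0, 9.0, 6.0]):
    state = {"grip_force_n": force}
    violated = ["force_limit_8n"] if force > 8.0 else []
    rec.step(t, state, reward=-0.1, violated=violated, success=force >= 6.0)
print(rec.to_json())
```

Emitting one JSON line per episode makes it easy to aggregate violations and time-to-success across thousands of runs.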

4) Plan the rollout path on day one

Decide upfront:

- What’s the staging environment?

- What’s the canary rollout strategy?

- What are the kill conditions?

- Who gets paged when behavior drifts?

Teams that skip this end up with pilots that never ship.
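Writing the rollout plan down as code forces those four answers early. This minimal sketch assumes a staged canary where each stage reports an error rate and a KPI; the `RolloutPlan` fields, the thresholds, and the `automation-oncall` rotation are hypothetical. Staging itself is assumed to have passed before the first canary stage.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class RolloutPlan:
    stages: tuple[float, ...]     # share of traffic at each canary stage
    max_error_rate: float         # kill condition
    max_kpi_drift: float          # allowed relative drift from the baseline KPI
    on_call: str                  # who gets paged


def evaluate_stage(plan: RolloutPlan, baseline_kpi: float, error_rate: float, kpi: float) -> str:
    drift = abs(kpi - baseline_kpi) / baseline_kpi
    if error_rate > plan.max_error_rate:
        return f"rollback: error rate {error_rate:.1%}; page {plan.on_call}"
    if drift > plan.max_kpi_drift:
        return f"rollback: KPI drift {drift:.1%}; page {plan.on_call}"
    return "promote"


def run_rollout(plan: RolloutPlan, baseline_kpi: float, observed: list[tuple[float, float]]):
    """observed: (error_rate, kpi) measured at each stage so far."""
    reached = 0.0
    for share, (err, kpi) in zip(plan.stages, observed):
        decision = evaluate_stage(plan, baseline_kpi, err, kpi)
        if decision != "promote":
            return share, decision            # kill condition hit at this stage
        reached = share
    status = "fully rolled out" if reached == plan.stages[-1] else "paused"
    return reached, status


plan = RolloutPlan(stages=(0.01, 0.05, 0.25, 1.0), max_error_rate=0.02,
                   max_kpi_drift=0.10, on_call="automation-oncall")
print(run_rollout(plan, baseline_kpi=4.0, observed=[(0.005, 4.1), (0.01, 4.2), (0.03, 4.3)]))
```

In this run the first two stages promote and the 25% stage trips the error-rate kill condition, so the function reports a rollback and names who to page.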

The bigger point for AI in Robotics & Automation

Roboschool is a reminder that training environments are the engine behind practical AI automation. Whether you’re teaching a biped to balance or teaching a service agent to triage tickets, the winning pattern looks the same: create a safe space to practice, measure progress, then ship carefully.

If you’re building AI-powered robotics or AI-powered digital services in the United States, simulation isn’t “extra.” It’s how you move faster than competitors without turning production into a science fair.

What would your organization improve first if you had a safe environment to test thousands of automation decisions per day—before customers, clinicians, or operators ever felt the impact?