Domain randomization and generative AI make robotic grasping more reliable in real facilities. Learn the practical pipeline U.S. teams use to scale automation.

Robotic Grasping with Domain Randomization & GenAI

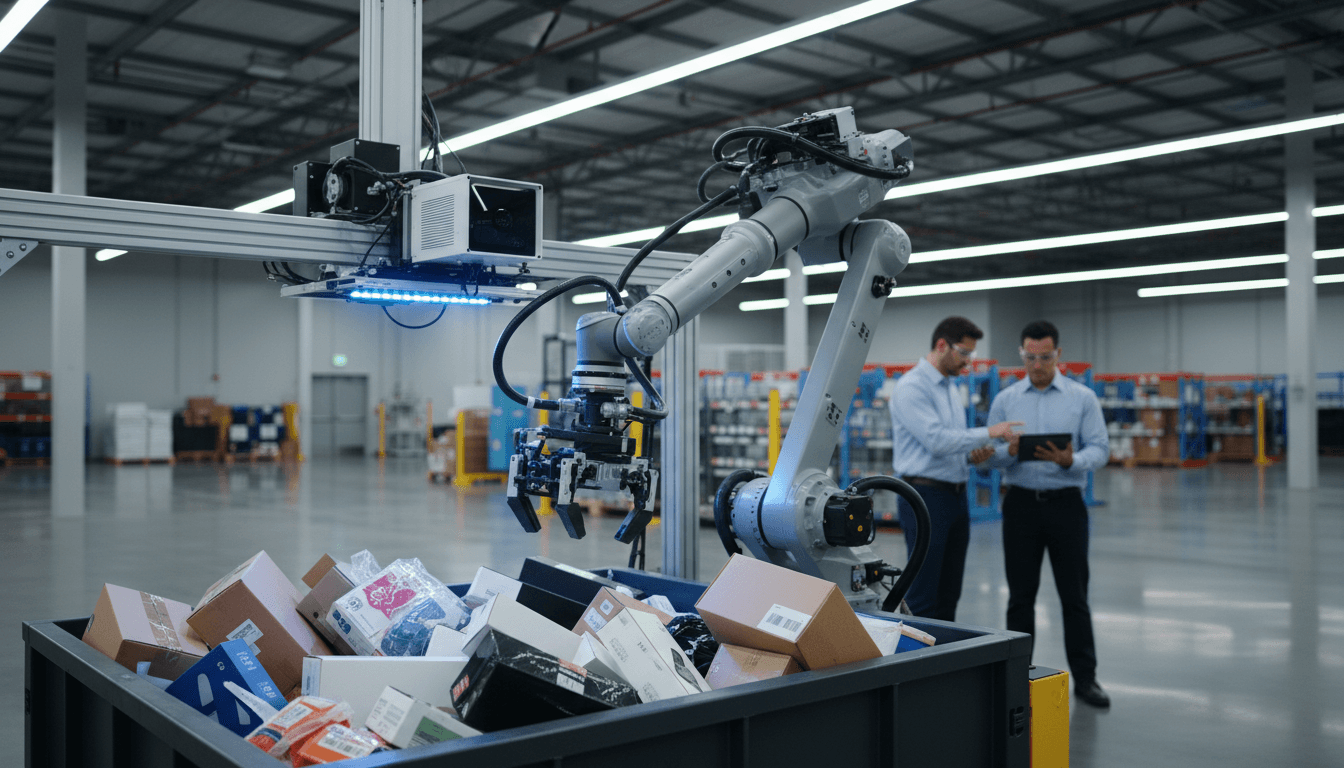

Factories and warehouses don’t lose time because robots can’t move. They lose time because robots can’t handle the messy middle: a crumpled polybag, a glossy box with weird reflections, a part that shifted 2 inches on a vibrating conveyor. Grasping is where automation projects quietly go to die—right after the pilot looks promising.

That’s why domain randomization and generative models matter. They’re two of the most practical ideas in modern AI for getting robotic grasping to work outside a perfectly staged demo. And because this research is coming out of a U.S.-based AI lab ecosystem, it’s also a clear example of how AI is powering technology and digital services in the United States: better simulation pipelines, faster model training, and more reliable automation that can scale.

This post is part of our AI in Robotics & Automation series. I’ll focus on what domain randomization and generative modeling actually do for grasping, where they fail, and how teams can translate the concepts into systems that pick real objects in real facilities.

Why robotic grasping is still hard (and expensive)

Robotic grasping is hard because the real world has endless variation—and small errors compound into failure. A grasp policy can be “90% accurate” in the lab and still be unusable in production when the remaining 10% jams a line, damages product, or triggers manual intervention.

Three failure modes show up repeatedly:

Perception gaps: sensing isn’t truth

Cameras lie in predictable ways: glare, shadows, motion blur, and depth noise. A grasp model trained on clean, well-lit images often learns shortcuts that disappear in a different building with different lighting.

The physics gap: simulation isn’t reality

Simulators approximate friction, deformation, contact dynamics, and sensor noise. Grasping depends on contact. Contact is where physics engines are least trustworthy.

The “long tail” of objects

Most real operations deal with a stream of new SKUs, packaging changes, and partial occlusions. The long tail breaks brittle approaches.

Here’s my stance: if your grasping solution depends on carefully controlling the environment, you don’t have a grasping solution—you have a staging process. Domain randomization and generative models are compelling because they reduce the need for staging.

Domain randomization: the simplest way to make robots less fragile

Domain randomization trains a robot in many randomized simulated worlds so the real world looks like “just another variation.” Instead of building the perfect simulator, you build a simulator that’s wrong in thousands of different ways.

Done well, domain randomization addresses the sim-to-real problem with a blunt but effective trick: overwhelm the model with variety.

What teams actually randomize in practice

A useful domain randomization setup typically varies:

- Lighting: intensity, direction, color temperature, flicker

- Materials and textures: matte vs glossy, albedo shifts, specular highlights

- Camera: intrinsics/extrinsics, lens distortion, noise models

- Object pose and clutter: pile density, occlusions, partial visibility

- Physics: friction coefficients, mass distributions, restitution, damping

- Gripper properties: compliance, jaw alignment, actuator lag

The goal isn’t realism. It’s coverage.

Rule of thumb: if your sim looks beautiful, it might be training your model to overfit.
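To make the "coverage over realism" idea concrete, here is a minimal sketch of a scene sampler. The parameter names and ranges are placeholders I made up for illustration, not tuned values, and a real setup would pass each sampled configuration to whatever simulator you use.

```python
import random

# Illustrative ranges only (assumed, not measured). Each entry is (low, high).
RANGES = {
    "light_intensity": (0.3, 1.5),      # relative to nominal
    "color_temp_k": (2700.0, 6500.0),   # lighting color temperature
    "camera_noise_std": (0.0, 0.02),    # sensor noise level
    "friction": (0.2, 1.0),             # contact friction coefficient
    "object_mass_kg": (0.05, 2.0),
    "actuator_lag_s": (0.0, 0.05),      # gripper actuation delay
}


def sample_scene(rng: random.Random) -> dict:
    """Draw one randomized scene configuration, uniform within each range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}


rng = random.Random(0)  # seeded so a training run can be reproduced
scenes = [sample_scene(rng) for _ in range(1000)]
```

Uniform sampling is the simplest choice. Nothing here stops you from swapping in other distributions per parameter once you know where real facilities cluster.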

Where domain randomization shines

For U.S. companies scaling automation across multiple sites, domain randomization is appealing because it shifts effort from data collection per facility to simulation engineering once. You’re essentially investing in a reusable training pipeline.

In practical terms, domain randomization helps when:

- You have lots of variation across facilities (lighting, bins, camera mounts)

- You can’t label enough real grasp failures fast enough

- You’re deploying the same cell design repeatedly and want consistent performance

Where it breaks down

Domain randomization is not magic. It struggles when:

- The simulator misses an entire phenomenon (e.g., suction cup micro-leaks, flexible packaging dynamics)

- The randomization ranges are wrong (too narrow = overfit; too wide = noisy learning)

- The task needs precision contact modeling (thin parts, tight tolerances)

This is where generative models become more than a research curiosity.
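One of the failure cases above, ranges that are too narrow, is cheap to check once you have a handful of real measurements. The sketch below is an assumption-laden example: the friction values and the simulated range are hypothetical, and the check says nothing about ranges that are too wide.

```python
def coverage(real_values, lo, hi):
    """Fraction of real measurements that fall inside the simulated range [lo, hi]."""
    if not real_values:
        raise ValueError("need at least one real measurement")
    inside = sum(1 for v in real_values if lo <= v <= hi)
    return inside / len(real_values)


# Hypothetical friction coefficients measured on real hardware.
measured_friction = [0.35, 0.42, 0.38, 0.55, 0.61, 0.29, 0.47, 0.72]
sim_range = (0.3, 0.6)  # hypothetical range used in simulation

frac = coverage(measured_friction, *sim_range)
print(f"{frac:.1%} of real measurements fall inside the simulated range")
```

A low fraction is a hint that the model is being asked to extrapolate beyond anything it saw in simulation.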

Generative models: creating grasp experience at scale

Generative models help robotic grasping by producing plausible variations of sensory input, object geometry, and grasp hypotheses—without requiring you to physically encounter every scenario.

Think of them as controlled imagination.

Three ways generative AI shows up in grasping systems

- Synthetic visual data generation: Generative image models can expand training data with variations that are hard to stage: unusual reflections, background clutter, seasonal lighting changes (yes, winter sun angles can matter through warehouse skylights), and camera noise.

- Shape completion and 3D priors: In clutter, a camera rarely sees the whole object. A generative model can infer likely hidden geometry, which is useful for selecting stable grasps when only a corner is visible.

- Sampling candidate grasps: Instead of predicting a single grasp, a generative model can propose a distribution of grasps. That matters because grasping is inherently multimodal: many grasps can work, and you want robust options when your first choice is blocked.
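The third idea, sampling many grasps instead of predicting one, can be sketched with a toy stand-in for the generator. Everything here is an assumption for illustration: a two-mode Gaussian mixture over a single approach angle replaces a trained model, and `score` and `is_blocked` are hypothetical helpers. A real system would sample full 6-DoF poses and use a collision checker.

```python
import math
import random

# Toy stand-in for a learned grasp generator: (weight, mean, std) per mode, in radians.
MODES = [(0.6, 0.0, 0.15), (0.4, math.pi / 2, 0.15)]


def sample_grasps(rng, n):
    """Draw n candidate approach angles from the two-mode mixture."""
    weights = [w for w, _, _ in MODES]
    angles = []
    for _ in range(n):
        _, mean, std = rng.choices(MODES, weights=weights, k=1)[0]
        angles.append(rng.gauss(mean, std))
    return angles


def score(angle):
    """Hypothetical quality score: higher when the angle is near any mode centre."""
    return max(math.exp(-((angle - m) ** 2) / (2 * s ** 2)) for _, m, s in MODES)


def is_blocked(angle, blocked_lo, blocked_hi):
    """Hypothetical collision check: approaches inside the interval are blocked."""
    return blocked_lo <= angle <= blocked_hi


def pick_grasp(rng, n, blocked_lo, blocked_hi):
    """Return the best-scoring unblocked candidate, or None if every one is blocked."""
    free = [a for a in sample_grasps(rng, n) if not is_blocked(a, blocked_lo, blocked_hi)]
    return max(free, key=score) if free else None


rng = random.Random(0)
# Suppose clutter blocks approaches near 0 rad; the second mode still offers options.
best = pick_grasp(rng, 64, -0.5, 0.5)
```

A single-output predictor trained on this data would tend to commit to the dominant mode near 0 rad and have nothing to fall back on when that approach is blocked.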

Why generative models pair well with domain randomization

Domain randomization gives breadth; generative models add plausibility. Randomization can produce “anything,” including weird combinations that never occur. Generative models can bias synthetic experience toward what’s likely in real operations.

A practical mental model:

- Domain randomization = stress testing

- Generative modeling = realistic scenario expansion

Used together, they reduce the need to collect months of real grasp data before you see stable performance.

A practical pipeline: from simulation to the warehouse floor

The winning approach is a loop: simulate broadly, learn a policy, validate on real hardware, then update the simulation and generative priors based on failures. Teams that treat sim-to-real as a one-time “transfer” event usually stall.

Step 1: Define the grasping contract

Before training anything, write down what “success” means:

- Pick rate target (picks/hour)

- Acceptable drop rate (e.g., drops per 1,000 picks)

- Damage tolerance (especially for consumer goods)

- Recovery behavior (regrasp? place-back? request human assist?)

This is operationally crucial because some models look great on accuracy metrics and still fail throughput.
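One way to keep the contract honest is to write it as data and check every shift against it. This is a minimal sketch; the class names, fields, and thresholds are hypothetical and should be replaced by whatever your operation actually measures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GraspContract:
    min_picks_per_hour: float
    max_drops_per_1000: float
    max_damage_per_1000: float


@dataclass(frozen=True)
class ShiftStats:
    hours: float
    attempts: int
    successes: int
    drops: int
    damaged: int


def violations(contract, stats):
    """Return the list of contract terms this shift failed to meet."""
    failed = []
    if stats.successes / stats.hours < contract.min_picks_per_hour:
        failed.append("pick rate")
    if 1000 * stats.drops / stats.attempts > contract.max_drops_per_1000:
        failed.append("drop rate")
    if 1000 * stats.damaged / stats.attempts > contract.max_damage_per_1000:
        failed.append("damage rate")
    return failed


contract = GraspContract(min_picks_per_hour=500, max_drops_per_1000=5, max_damage_per_1000=1)
# 3800 of 4000 attempts succeed (95%), yet throughput is 475 picks/hour.
shift = ShiftStats(hours=8, attempts=4000, successes=3800, drops=12, damaged=2)
print(violations(contract, shift))  # ['pick rate']
```

The example shift is the case described above: a success rate that looks healthy on its own while the throughput term of the contract is missed.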

Step 2: Build a randomized sim that matches your constraints

You don’t need photorealism, but you do need:

- Correct camera placement and field-of-view constraints

- Correct gripper geometry and reach

- Approximate object size distributions

Then randomize the parameters that are likely to drift across facilities.

Step 3: Add generative augmentation where the long tail lives

Apply generative modeling to the parts that are costly to capture:

- Rare packaging (holiday bundles, seasonal promos)

- Reflective or transparent materials

- Deformable items (bags, pouches)

December is a good reminder: Q4 product mixes change fast. The “long tail” gets longer when promotional packaging shows up.

Step 4: Calibrate with small real datasets

Even with excellent simulation, you’ll want a thin layer of real-world calibration:

- A few hours of real grasps per site

- Automated logging of failures with camera snapshots

- A weekly retraining cadence early on

This is the part many teams underestimate: not the first model, but the operational ML pipeline.

Step 5: Monitor drift like a digital service

Robotic grasping is increasingly a digital service: models update, sensors drift, and performance needs monitoring.

Track:

- Pick success rate by SKU family

- Failure modes (slip, collision, miss, double-pick)

- Confidence vs outcome calibration

- Environmental changes (new lighting, new bins, camera moved)

When U.S. companies treat robotics as software—instrumented, monitored, and iterated—deployment scales faster.
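Two of the tracked metrics, success rate by SKU family and confidence-versus-outcome calibration, need very little code once picks are logged. The log format and values below are invented for illustration, and the calibration measure is a deliberately crude one (mean confidence minus observed success rate), not a full reliability analysis.

```python
from collections import defaultdict

# Hypothetical pick log: (sku_family, model_confidence, succeeded)
LOG = [
    ("boxes", 0.95, True), ("boxes", 0.90, True),
    ("boxes", 0.85, True), ("boxes", 0.92, False),
    ("polybags", 0.90, False), ("polybags", 0.80, True),
    ("polybags", 0.88, False), ("polybags", 0.60, True),
]


def success_by_family(log):
    """Observed pick success rate for each SKU family."""
    totals, wins = defaultdict(int), defaultdict(int)
    for family, _, ok in log:
        totals[family] += 1
        wins[family] += int(ok)
    return {family: wins[family] / totals[family] for family in totals}


def calibration_gap(log):
    """Mean confidence minus observed success rate; positive means overconfident."""
    mean_conf = sum(conf for _, conf, _ in log) / len(log)
    success_rate = sum(int(ok) for _, _, ok in log) / len(log)
    return mean_conf - success_rate


rates = success_by_family(LOG)   # {'boxes': 0.75, 'polybags': 0.5}
gap = calibration_gap(LOG)       # about 0.225, i.e. overconfident
```

Computed per site and per week, a widening gap or a falling family rate is an early signal that something in the environment has changed.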

Real-world use cases U.S. teams care about

Robotic grasping pays off fastest where labor is variable, SKUs change often, and uptime matters more than perfect picks.

Logistics and e-commerce fulfillment

Bin picking, parcel induction, returns sorting. These environments are chaotic, and domain randomization is directly relevant because each facility’s “look” differs.

Manufacturing kitting and machine tending

The objects are more consistent, but the precision requirements are higher. Generative models that propose multiple grasps can improve robustness when parts aren’t perfectly presented.

Healthcare and lab automation

You get strict process constraints, but also tricky objects (vials, packages, PPE). Here, the value is reliability and traceability—monitoring and controlled updates matter as much as grasp success.

People also ask: domain randomization vs real data

Do you still need real-world data? Yes. Domain randomization reduces how much you need and where you need it, but real data is still the final arbiter.

Is domain randomization better than photorealistic simulation? For many grasping tasks, yes. Photorealism can help, but coverage often matters more than beauty.

Where do generative models help the most? In the long tail: occlusions, partial views, rare packaging, and “we didn’t plan for that” scenarios.

What to do next if you’re evaluating robotic grasping

Most companies get stuck at the same point: they try to buy “a grasping model” rather than building a grasping system. Here’s what works whether you’re trying to build support internally (budget, approval) or externally (customers, partners):

- Run a two-week grasp audit: Catalog object categories, materials, failure costs, and throughput needs. Identify the top 20% of SKUs causing 80% of the grasp pain.

- Demand a sim-to-real plan, not a demo: Ask how the model handles new SKUs, lighting changes, and camera movement. If the answer is “recalibrate and collect data,” push for specifics: time, staffing, and tooling.

- Invest in instrumentation early: Video + outcome labels + failure taxonomy is your compounding advantage. It turns grasping from a one-off integration into an improving digital service.

- Start with constrained wins: Pick one workflow (returns sorting, kitting, induction) and harden it. Then expand. Broad first deployments burn teams out.
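For the grasp audit in the first item, finding the small set of SKUs behind most of the pain is a short calculation once failures are counted per SKU. The SKU names and counts below are hypothetical, and the 80% share is simply the figure from the audit step.

```python
def top_offenders(failures_by_sku, share=0.8):
    """Smallest set of SKUs, in descending failure order, covering `share` of all failures."""
    total = sum(failures_by_sku.values())
    if total == 0:
        return []
    picked, running = [], 0
    ranked = sorted(failures_by_sku.items(), key=lambda kv: kv[1], reverse=True)
    for sku, count in ranked:
        picked.append(sku)
        running += count
        if running >= share * total:
            break
    return picked


# Hypothetical failure counts from a two-week audit.
failures = {"SKU-A": 120, "SKU-B": 45, "SKU-C": 15, "SKU-D": 10, "SKU-E": 6, "SKU-F": 4}
print(top_offenders(failures))  # ['SKU-A', 'SKU-B']
```

In this made-up data, two of six SKUs account for 165 of 200 failures, which is the kind of concentration that tells you where to harden first.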

Where robotic grasping is headed in 2026

Robotic grasping is shifting from handcrafted pipelines to learned policies supported by strong data engines. The research direction—domain randomization plus generative models—fits that trajectory because it treats variability as a feature, not a defect.

For the U.S. digital economy, this matters beyond robotics. The same playbook shows up everywhere AI touches operations: build robust training environments, generate useful synthetic data, monitor real-world drift, and ship improvements like software.

If you’re working on an automation roadmap for 2026, the question to ask your team isn’t “Can the robot pick this object?” It’s: “Can our system keep learning as the world changes?”