Perceptive robots are moving from demos to real warehouse work. Here’s what iREX 2025 revealed—and how logistics teams can adopt AI robotics safely in 2026.

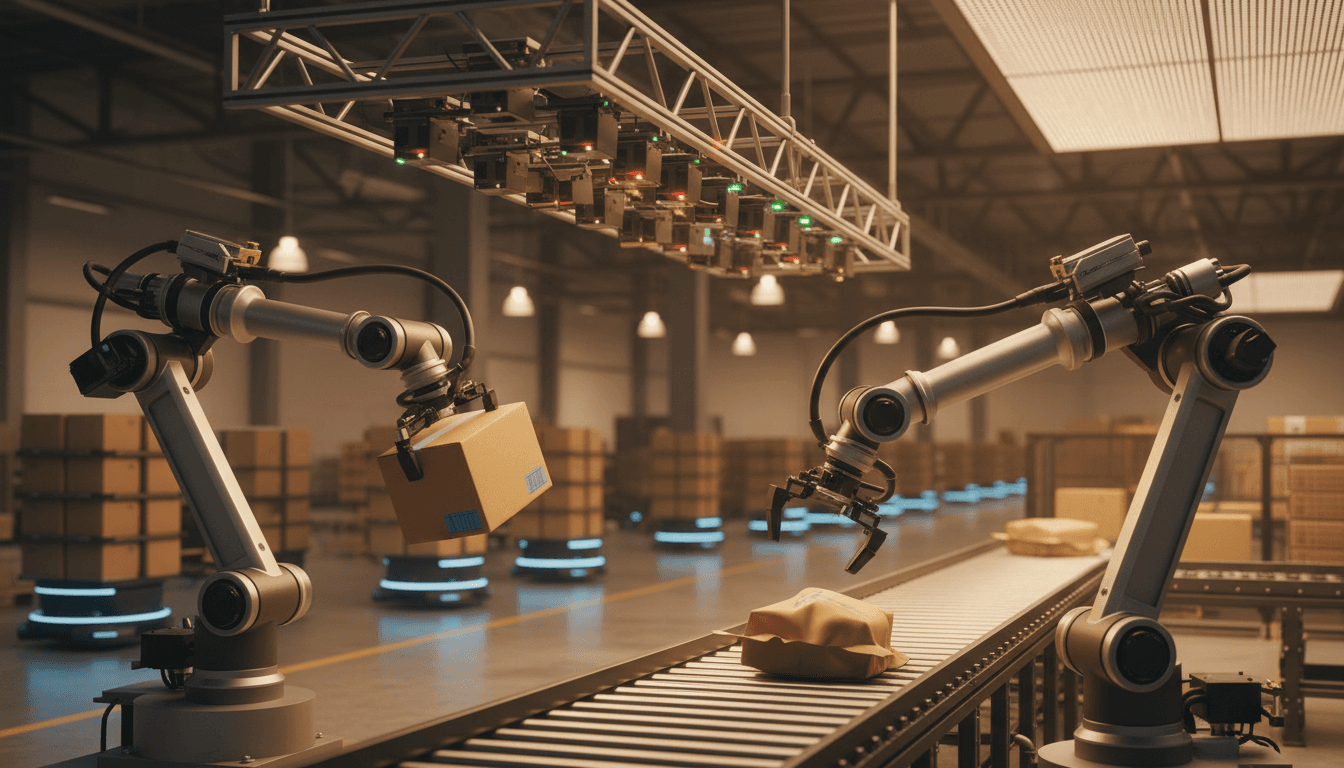

Perceptive Robots Are Coming for Your Warehouse

iREX 2025 in Tokyo drew 156,110 visitors who came to see what robotics looks like right now. That’s not a niche crowd. With a record 673 exhibitors, the signal was loud: industrial robotics is shifting from carefully programmed routines to real-time perception and decision-making.

For transportation and logistics leaders, this isn’t a fun tech trend. It’s a practical answer to the hardest parts of warehouse automation: mixed SKUs, constant change, labor constraints, and the “every exception becomes a ticket” reality of operations.

This post is part of our AI in Robotics & Automation series, and I’m going to take a stance: most warehouses don’t need “more robots.” They need robots that can handle variability without breaking workflows. iREX 2025 showed that we’re finally getting there.

The real shift: from programmed motion to perceptive work

Perceptive robots matter because logistics is built on variation. Boxes arrive dented. Items shift in totes. Labels wrinkle. Pallets aren’t square. People improvise. Traditional automation hates all of that.

Perceptive robotics flips the model. Instead of “tell the robot exactly what to do,” you give it sensing, learned behavior, and a control loop that can correct on the fly. When that works, the operational payoff is straightforward:

- Higher automation coverage (more of your volume can be automated)

- Fewer manual exception handoffs

- Faster changeovers for new SKUs, packaging, and layouts

- More stable throughput during seasonal spikes (hello, peak season)

iREX 2025’s theme—moving from programmed to perceptive—showed up in three places that logistics teams should pay attention to: practical AI on the floor, open platforms, and better data collection.

Practical AI is finally leaving the lab (and showing up in packing)

The best AI robotics demos weren’t humanoids dancing. They were robots doing unglamorous warehouse work with human-like tolerance for “slightly different.” That’s where ROI lives.

A standout example from iREX: Yaskawa’s dual-arm system demonstrated packing with a level of delicacy that historically required a person. The detail that matters isn’t the two arms—it’s how it learned.

Imitation learning: a faster path to “good enough” manipulation

In the iREX demo, motions were learned from human demonstration (motion-capture markers plus video), then reproduced by the robot. That approach changes the economics of deployment:

- You can train tasks that are hard to hand-program

- You can capture “tribal knowledge” from skilled workers

- You can iterate without rewriting thousands of lines of motion logic

In warehouse terms, think about what this unlocks:

- Packing mixed items into cartons with less dunnage waste

- Unpacking and decanting variable inbound shipments

- Kitting where part orientation isn’t consistent

I’ve found that many operations teams underestimate how much money disappears into “micro-variability.” A robot doesn’t need to be perfect—it needs to recover. Perception-driven control is what makes recovery possible.
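To make “learned from demonstration” concrete, here is a deliberately tiny sketch of behavior cloning, the simplest form of imitation learning. You record (state, action) pairs while a person does the task, then the robot reproduces the action from the most similar recorded state. This is not Yaskawa’s actual method. The 1-nearest-neighbor policy, the three-number state and action, and the `Demo`/`NearestNeighborPolicy` names are illustrative assumptions. Real systems learn far richer policies from motion-capture and video data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    """One recorded demonstration step (hypothetical schema)."""
    state: tuple[float, ...]   # e.g. object x, y (m) and orientation (deg) seen by the camera
    action: tuple[float, ...]  # e.g. gripper target x, y (m) and wrist angle (deg) the human used


class NearestNeighborPolicy:
    """Behavior cloning in its simplest form: copy the action of the closest demo state."""

    def __init__(self, demos: list[Demo]) -> None:
        if not demos:
            raise ValueError("need at least one demonstration")
        self.demos = demos

    def act(self, state: tuple[float, ...]) -> tuple[float, ...]:
        # Plain Euclidean distance on raw features; a real system would normalize
        # units (here degrees dominate meters) and use a learned model instead.
        best = min(self.demos, key=lambda d: math.dist(d.state, state))
        return best.action


demos = [
    Demo(state=(0.10, 0.20, 0.0), action=(0.10, 0.20, 0.0)),
    Demo(state=(0.40, 0.25, 90.0), action=(0.42, 0.25, 90.0)),
]
policy = NearestNeighborPolicy(demos)
print(policy.act((0.38, 0.24, 85.0)))  # → (0.42, 0.25, 90.0)
```

Teaching a new variation here means adding demonstrations, not rewriting motion logic. That is the economic point above. The obvious weakness is that a lookup cannot generalize far beyond what was shown.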

What to ask vendors if they pitch “AI packing”

Not all “AI-enabled” robotics is the same. If you’re evaluating systems for packing, decanting, or piece handling, ask these questions:

- What exactly is learned? Motion policy, grasp selection, object recognition, or just vision inspection?

- What happens on failure? Retries, alternate grasps, human-in-the-loop prompts, or fault stop?

- How much task data do we need? Hours, days, or weeks of demonstrations?

- How do you handle new SKUs? Re-train, few-shot update, or rule-based fallback?

A credible vendor can answer with specifics—not slogans.
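As an illustration of what “specifics” can look like for the failure-handling question, the sketch below encodes one possible recovery ladder. It retries first, then tries an alternate grasp, then asks a human, and only then fault-stops. The strategy names, attempt limits, and the `attempt_pick` callback are hypothetical. The point is that a vendor should be able to describe their equivalent policy this concretely.

```python
from enum import Enum
from typing import Callable


class Outcome(Enum):
    SUCCESS = "success"
    HUMAN_ASSIST = "human_assist"
    FAULT_STOP = "fault_stop"


# Hypothetical escalation ladder: (strategy name, max attempts), tried in order.
LADDER = [("retry_same_grasp", 2), ("alternate_grasp", 2)]


def handle_pick(attempt_pick: Callable[[str], bool],
                human_available: bool) -> tuple[Outcome, list[str]]:
    """Run the ladder and return the final outcome plus a log of steps tried."""
    log: list[str] = []
    for strategy, max_attempts in LADDER:
        for _ in range(max_attempts):
            log.append(strategy)
            if attempt_pick(strategy):
                return Outcome.SUCCESS, log
    # Automated recovery is exhausted: prompt a person if available, else stop safely.
    if human_available:
        log.append("human_prompt")
        return Outcome.HUMAN_ASSIST, log
    log.append("fault_stop")
    return Outcome.FAULT_STOP, log


# Simulated item that can only be picked with the alternate grasp.
outcome, log = handle_pick(lambda s: s == "alternate_grasp", human_available=True)
print(outcome, log)
# Outcome.SUCCESS ['retry_same_grasp', 'retry_same_grasp', 'alternate_grasp']
```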

Open platforms are becoming a logistics advantage (not a developer hobby)

Openness is showing up because physical AI needs integration. Warehouses don’t run on robot controllers. They run on WMS/WES, labor planning, inventory accuracy, dock schedules, and exception workflows.

At iREX 2025, one of the most meaningful moves was a major manufacturer embracing more open tooling—especially around ROS 2 and modern developer workflows. The operational implication is bigger than “developers like Python.”

Why openness matters in warehouses

When robots are locked into a single OEM stack, you tend to get:

- Slow integrations into WES/WMS

- Limited ability to add third-party perception (vision, barcode, OCR)

- Harder simulation and testing before deployment

- Vendor dependency for every change

Open interfaces (like ROS 2 drivers and standard APIs) reduce friction. That matters because logistics automation increasingly looks like systems engineering, not equipment procurement.
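One way to picture what open interfaces buy you is this: the WES talks to a single vendor-neutral contract, and each robot cell plugs in behind a thin adapter. The sketch below uses a Python `Protocol`. The `RobotCell` interface, its two methods, and both adapter classes are hypothetical stand-ins for whatever a real ROS 2 driver or vendor API actually exposes.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class PickTask:
    task_id: str
    sku: str
    quantity: int


class RobotCell(Protocol):
    """Hypothetical vendor-neutral contract that WES-side code is written against."""

    def submit(self, task: PickTask) -> str: ...
    def status(self, task_id: str) -> str: ...


class VendorACell:
    """Adapter for an imaginary vendor A; real code would call that vendor's API here."""

    def __init__(self) -> None:
        self._jobs: dict[str, str] = {}

    def submit(self, task: PickTask) -> str:
        self._jobs[task.task_id] = "queued"
        return task.task_id

    def status(self, task_id: str) -> str:
        return self._jobs.get(task_id, "unknown")


class VendorBCell:
    """Adapter for an imaginary vendor B that uses its own numeric job IDs."""

    def __init__(self) -> None:
        self._next_id = 0
        self._id_map: dict[str, int] = {}

    def submit(self, task: PickTask) -> str:
        self._next_id += 1
        self._id_map[task.task_id] = self._next_id  # translate WES IDs to vendor IDs
        return task.task_id

    def status(self, task_id: str) -> str:
        return "in_progress" if task_id in self._id_map else "unknown"


def dispatch(cell: RobotCell, tasks: list[PickTask]) -> list[str]:
    # Depends only on the RobotCell contract, never on a specific vendor class.
    return [cell.submit(t) for t in tasks]


tasks = [PickTask("T1", "SKU-42", 3)]
for cell in (VendorACell(), VendorBCell()):
    dispatch(cell, tasks)
    print(type(cell).__name__, cell.status("T1"))
```

Adding or swapping a vendor then means writing one adapter rather than reworking WES logic. That is the kind of friction reduction open interfaces are meant to deliver.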

Simulation is no longer optional

The iREX story also highlighted official support for modern robotics simulation tools. In logistics, simulation is how you avoid expensive mistakes:

- Validate cycle times before you buy hardware

- Test exception scenarios (jam, mispick, dropped item)

- Train vision models without stopping the line

- Stress-test peak throughput assumptions

If you’re building automation that has to survive Q4 peak, simulation and digital testing aren’t “nice to have.” They’re what keep you from discovering bottlenecks after go-live.
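Even before you build a full digital twin, a few lines of Monte Carlo simulation can stress-test a throughput assumption. The sketch below models one pick station with a 6-second nominal cycle and a 45-second recovery per exception. Both figures are invented placeholders, not iREX data. It reports a 10th-percentile hourly throughput, a conservative number to plan peak capacity against. Replace the inputs with measured values from your own SKU mix.

```python
import random
import statistics


def simulate_hour(base_cycle_s: float, exception_rate: float,
                  recovery_s: float, rng: random.Random) -> int:
    """Count picks completed in one hour at a single station (illustrative model)."""
    elapsed, picks = 0.0, 0
    while True:
        # Cycle time varies around the nominal value; an exception adds a recovery penalty.
        cycle = rng.gauss(base_cycle_s, 0.1 * base_cycle_s)
        if rng.random() < exception_rate:
            cycle += recovery_s
        cycle = max(cycle, 0.1)  # guard against a nonsensical near-zero or negative draw
        if elapsed + cycle > 3600:
            return picks
        elapsed += cycle
        picks += 1


def throughput_p10(exception_rate: float, runs: int = 200, seed: int = 7) -> float:
    """10th-percentile picks per hour across many simulated hours."""
    rng = random.Random(seed)
    samples = [simulate_hour(6.0, exception_rate, 45.0, rng) for _ in range(runs)]
    return statistics.quantiles(samples, n=10)[0]  # first decile cut point


for rate in (0.01, 0.05, 0.10):
    print(f"exception rate {rate:.0%}: P10 throughput = {throughput_p10(rate):.0f} picks/hour")
```

Even this toy model shows how a few extra points of exception rate eat into peak capacity, which is exactly the kind of assumption worth testing before go-live.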

Data is the new constraint—and logistics is sitting on it

Perceptive robots don’t improve because you “turn on AI.” They improve because you feed them real-world data. That’s why sensing and collection systems are getting so much attention.

At iREX, tactile gloves paired with vision capture systems, along with humanoid platforms, were positioned as data-collection engines. Whether you love humanoids or not, the logic is sound:

- You need large volumes of task interactions

- You need coverage across edge cases (odd shapes, glare, deformable packaging)

- You need feedback signals (force, slip, contact)

The logistics play: treat your warehouse like a data factory

Most warehouses already generate valuable signals, but they’re not captured in a model-ready way. Here’s what to start collecting now if you want perceptive automation later:

- Exception taxonomy (why did the pick fail? why did packing stop?)

- Image snapshots at key points (pick confirmation, label apply, carton close)

- Dimension/weight variance vs. master data

- Rework paths (what humans did to recover)

This matters because the winners in physical AI won’t just have better models—they’ll have better task data and faster learning loops.
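A model-ready record does not need to be elaborate. The sketch below shows one possible shape for an exception event that captures the four signals listed above: reason code, image snapshots, measured weight, and recovery steps. It also includes a check that flags weight variance against master data. The field names, reason codes, and 5% tolerance are assumptions to adapt, not a standard schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class ExceptionReason(Enum):
    # Hypothetical taxonomy: keep codes few, mutually exclusive, and stable over time.
    MISPICK = "mispick"
    NO_GRASP = "no_grasp"
    DROPPED_ITEM = "dropped_item"
    LABEL_UNREADABLE = "label_unreadable"
    DIM_WEIGHT_MISMATCH = "dim_weight_mismatch"


@dataclass
class ExceptionEvent:
    station_id: str
    sku: str
    reason: ExceptionReason
    image_uris: list[str] = field(default_factory=list)      # snapshots at pick, label, carton close
    measured_weight_g: Optional[float] = None
    recovery_steps: list[str] = field(default_factory=list)  # what the human did to recover


def weight_variance(measured_g: float, master_g: float) -> float:
    """Relative deviation of the measured weight from the master-data weight."""
    if master_g <= 0:
        raise ValueError("master weight must be positive")
    return (measured_g - master_g) / master_g


def needs_master_data_review(measured_g: float, master_g: float,
                             tolerance: float = 0.05) -> bool:
    return abs(weight_variance(measured_g, master_g)) > tolerance


# Illustrative event; IDs and paths are placeholders.
event = ExceptionEvent(
    station_id="PACK-03",
    sku="SKU-1187",
    reason=ExceptionReason.DIM_WEIGHT_MISMATCH,
    image_uris=["images/pack-03/evt-001.jpg"],
    measured_weight_g=540.0,
    recovery_steps=["reweighed item", "switched to larger carton"],
)
print(needs_master_data_review(540.0, master_g=500.0))  # → True (8% heavier than master data)
```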

Global competition is reshaping robotics supply chains (and your bargaining power)

A blunt reality from iREX: the ecosystem is global, and it’s getting more competitive.

The number of Chinese exhibitors rose sharply at iREX 2025 (84 exhibitors, up from 50 in 2023), with particular strength in areas that shape the economics of logistics automation:

- Lower-cost cobots

- Humanoids (as data platforms and general-purpose manipulators)

- Tactile sensing hardware

At the same time, a lot of “physical AI” capability is concentrating around a small set of compute and tooling providers.

What this means for warehouse buyers

Your procurement strategy needs to change. Instead of buying “a robot,” you’re buying an evolving capability. That changes what you should lock in contractually:

- Lifecycle support terms (updates, model refresh, security patches)

- Data ownership and usage rights (who can train on your data?)

- Interoperability commitments (APIs, export formats, integration support)

- Fallback modes when AI confidence drops (safe degrade vs. full stop)

I’m opinionated here: if a vendor can’t explain how they handle updates and model drift over 12–36 months, they’re selling a demo, not a deployment.
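“Safe degrade vs. full stop” is easier to negotiate when it is written down as a rule. The sketch below is one hypothetical way to express it. A rolling window of model confidence scores selects an operating mode, and a simple comparison against the success rate measured at commissioning flags possible drift. The thresholds, window size, and mode names are placeholders, not vendor defaults.

```python
from collections import deque
from enum import Enum
from statistics import mean


class Mode(Enum):
    NORMAL = "normal"      # full automated operation
    DEGRADED = "degraded"  # e.g. slower speed, restricted SKU set, human confirms picks
    STOP = "stop"          # controlled stop; route work to manual stations


class ConfidenceGate:
    def __init__(self, window: int = 50, degrade_below: float = 0.85,
                 stop_below: float = 0.60) -> None:
        self.scores: deque[float] = deque(maxlen=window)  # keeps only the latest scores
        self.degrade_below = degrade_below
        self.stop_below = stop_below

    def update(self, score: float) -> Mode:
        """Add one confidence score (0..1) and return the mode for the next task."""
        self.scores.append(score)
        avg = mean(self.scores)
        if avg < self.stop_below:
            return Mode.STOP
        if avg < self.degrade_below:
            return Mode.DEGRADED
        return Mode.NORMAL


def drift_suspected(baseline_success: float, recent_successes: int,
                    recent_attempts: int, max_drop: float = 0.05) -> bool:
    """Flag when the recent success rate falls more than max_drop below the baseline."""
    if recent_attempts == 0:
        return False
    return baseline_success - recent_successes / recent_attempts > max_drop


gate = ConfidenceGate(window=3)
for s in (0.95, 0.70, 0.50):
    print(gate.update(s))
print(drift_suspected(0.97, recent_successes=880, recent_attempts=1000))  # → True
```

Writing the rule this explicitly also gives the contract something testable: which mode applies at which threshold, and what happens when drift is flagged.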

Where perceptive robots fit first in transportation & logistics

The first winners won’t be the flashiest robots. They’ll be the deployments that remove labor pain while keeping processes stable. Based on what iREX highlighted, these are the highest-probability early wins:

1) Decanting and induction (inbound)

Inbound is messy: mixed cartons, inconsistent packing, variable orientation. Perception-driven manipulation can stabilize flow into automation downstream.

Success metric: reduction in manual touches per inbound unit.

2) Case packing and repacking (outbound)

If the robot can handle slight variation—item placement, snug packing, gentle handling—you can automate more SKUs.

Success metric: percent of order lines eligible for automated packing.

3) Kitting for light manufacturing and MRO

Kitting failures cause downstream delays and expediting costs. Perceptive robots plus vision verification can reduce “missing part” incidents.

Success metric: kit accuracy and rework rate.

4) Handling moving or semi-structured targets

Some iREX demos focused on tracking and working on moving parts. Translate that to logistics: conveyors, singulation, dynamic pick stations.

Success metric: throughput stability under speed changes.

A practical adoption checklist for 2026 planning

If you’re setting budgets and roadmaps right now (late 2025 planning season), here’s a concrete way to turn “perceptive robotics” into a plan.

- Pick one workflow where variability blocks automation (not the easiest line).

- Instrument it for data: images, exceptions, weights/dims, recovery steps.

- Define safe operating boundaries: what’s in-scope, what triggers human help.

- Require simulation evidence tied to your SKU mix and peak volumes.

- Pilot with a measured ramp: start with a constrained object set, then expand.

- Track two numbers weekly: automation coverage (%) and exception recovery time (minutes).

Those metrics keep the project honest. They also keep stakeholders aligned when the AI needs iteration.
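Both numbers can come straight from an event log. The sketch below assumes a hypothetical log in which each unit is marked as handled fully automatically or not, and each exception records when it was raised and resolved. It reports coverage as a percentage and recovery time as a median in minutes, since a few very long recoveries would distort a mean.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class UnitRecord:
    unit_id: str
    fully_automated: bool  # True if no human touched this unit


@dataclass
class ExceptionRecord:
    raised_at: datetime
    resolved_at: datetime


def automation_coverage_pct(units: list[UnitRecord]) -> float:
    """Share of units handled end to end without a manual touch, in percent."""
    if not units:
        return 0.0
    return 100.0 * sum(u.fully_automated for u in units) / len(units)


def median_recovery_minutes(exceptions: list[ExceptionRecord]) -> float:
    """Median time from exception raised to resolved, in minutes."""
    if not exceptions:
        return 0.0
    return median((e.resolved_at - e.raised_at).total_seconds() / 60 for e in exceptions)


# Illustrative week: every fifth unit needed a manual touch.
units = [UnitRecord(f"U{i}", fully_automated=(i % 5 != 0)) for i in range(100)]
t0 = datetime(2026, 1, 5, 8, 0)
exceptions = [
    ExceptionRecord(t0, datetime(2026, 1, 5, 8, 4)),
    ExceptionRecord(t0, datetime(2026, 1, 5, 8, 6)),
    ExceptionRecord(t0, datetime(2026, 1, 5, 8, 30)),
]
print(automation_coverage_pct(units))       # → 80.0
print(median_recovery_minutes(exceptions))  # → 6.0
```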

The next step for AI in Robotics & Automation

Perceptive robots are a big deal for logistics because they finally address the part automation has avoided for decades: the messy middle—where real warehouses live.

iREX 2025 made the direction obvious: more practical AI on the floor, more open integration paths, and more emphasis on collecting the data that makes robots adaptable. The companies that win won’t be the ones with the most impressive demo. They’ll be the ones that can deploy, maintain, and improve robots across changing operations.

If you’re considering warehouse automation upgrades for 2026, don’t start by asking, “Which robot should we buy?” Start with: Which exceptions are we paying humans to solve thousands of times per week—and can perceptive AI reduce that burden without adding fragility?