OpenAI Five proved AI can plan and coordinate in chaos. Here’s how its lessons power U.S. SaaS automation, customer comms, and robotics workflows.

OpenAI Five: The AI Milestone Behind Modern Automation

OpenAI Five didn’t just learn to play Dota 2. It proved something much more useful for U.S. businesses: if you can train software to coordinate, plan, and adapt in a messy, real-time environment, you can build AI that runs real digital services—reliably, at scale.

Back in 2018, the OpenAI team reported that a squad of five neural networks was starting to defeat amateur human teams in Dota 2, one of the most complex esports games. The project was famous for its spectacle, but the real story sits under the hood: OpenAI Five trained via self-play at massive scale, learned long-horizon strategy, and coordinated without explicit communication.

That recipe shows up everywhere in 2025—from customer support automation to marketing ops to robotics and warehouse orchestration. This post is part of our AI in Robotics & Automation series, and I’m going to make a clear case: the mechanics that made OpenAI Five work are the same mechanics powering modern SaaS automation in the United States.

OpenAI Five matters because it solved “real-world shaped” problems

Dota 2 is a good testbed because it’s closer to business reality than chess or Go. It forces an AI to make decisions under uncertainty, over long time horizons, with too many possible actions to brute-force.

OpenAI highlighted four properties that made Dota difficult—and they map cleanly onto digital services and automation.

1) Long time horizons: “winning later” beats “looking smart now”

A typical Dota match runs about 45 minutes at 30 frames per second—around 80,000 ticks. OpenAI Five observed every fourth frame, still making about 20,000 decision points per game.
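The arithmetic behind those figures is easy to check. Here is a minimal Python sketch using the rounded numbers quoted above: 45 minutes, 30 frames per second, and one observation every fourth frame.

```python
# Back-of-the-envelope check of the figures quoted above.
MATCH_MINUTES = 45      # "about 45 minutes" per match
FPS = 30                # the game runs at 30 frames (ticks) per second
FRAME_SKIP = 4          # the agent observes every fourth frame

ticks = MATCH_MINUTES * 60 * FPS
decisions = ticks // FRAME_SKIP

print(f"ticks per match:     {ticks:,}")
print(f"decisions per match: {decisions:,}")
```

In practice, a "long time horizon" means roughly 20,000 sequential decisions per game, and any one of them can matter minutes later.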

That’s the same core difficulty in automation work:

- A sales cycle isn’t one action; it’s weeks of touches, follow-ups, and timing.

- A support escalation isn’t one chat reply; it’s triage → investigation → resolution → retention.

- A robotic fulfillment workflow isn’t one pick; it’s receiving → putaway → batching → picking → packing → exception handling.

Most companies get this wrong. They optimize AI for the “next response” and ignore the full sequence. OpenAI Five showed that long-horizon optimization isn’t science fiction—you can get it with the right training setup, reward design, and scale.
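A standard way for RL agents to weigh "now" against "later" is a discount factor γ applied to future rewards. The closer γ is to 1, the further ahead the agent effectively looks. The sketch below is generic RL arithmetic, not OpenAI Five's exact configuration. The γ values and the reward sequence are illustrative assumptions.

```python
import math

def discounted_return(rewards, gamma):
    """Sum of gamma**t * r_t over a reward sequence (t = 0, 1, 2, ...)."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total

def half_life_steps(gamma):
    """Number of steps after which a reward's weight has decayed to one half."""
    return math.log(0.5) / math.log(gamma)

# Illustrative episode: small costs up front, a large payoff 300 steps later.
rewards = [-1.0] * 10 + [0.0] * 290 + [100.0]

for gamma in (0.9, 0.99, 0.999):
    print(f"gamma={gamma}: half-life={half_life_steps(gamma):.0f} steps, "
          f"return={discounted_return(rewards, gamma):.2f}")
```

With γ = 0.9 or 0.99, the delayed payoff is discounted so heavily that the episode scores negative, so an agent would learn to skip the up-front work. With γ = 0.999, the same episode scores positive. How far ahead the objective "sees" is a design decision. That holds whether you are training an agent or picking the KPI window for an automated workflow.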

2) Partial observability: automation has to act without perfect information

Dota’s fog-of-war means you never have the full state. You infer.

Business systems are also partially observed:

- Marketing attribution is incomplete.

- Customer intent is ambiguous.

- Operations data arrives late or noisy.

- Sensors drop packets. Integrations fail. People don’t fill out fields.

The takeaway: AI that waits for perfect information doesn’t ship. Successful automation is built to make strong decisions under uncertainty, then update as new signals arrive.
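Here is a minimal sketch of "decide now, update later." It assumes a hypothetical support bot that keeps a probability over a few customer intents and revises it with Bayes' rule as each new signal arrives. The intent names and likelihood numbers are made up for illustration.

```python
def bayes_update(prior, likelihood):
    """Return the posterior P(intent | signal) given P(intent) and P(signal | intent)."""
    unnormalized = {k: prior[k] * likelihood.get(k, 0.0) for k in prior}
    z = sum(unnormalized.values())
    if z == 0:
        return dict(prior)  # signal has zero likelihood under every intent; keep the prior
    return {k: v / z for k, v in unnormalized.items()}

# Hypothetical starting belief over intents, before any signal arrives.
belief = {"billing": 1 / 3, "bug_report": 1 / 3, "cancel": 1 / 3}

# Hypothetical P(signal | intent) tables for two signals that arrive over time.
signals = [
    {"billing": 0.6, "bug_report": 0.1, "cancel": 0.3},  # message mentions a charge
    {"billing": 0.2, "bug_report": 0.1, "cancel": 0.7},  # user opens the cancellation page
]

for likelihood in signals:
    belief = bayes_update(belief, likelihood)
    best = max(belief, key=belief.get)
    print(best, {k: round(v, 2) for k, v in belief.items()})
```

The point is the loop. The bot acts on its current best guess (billing after the first signal) and lets the next signal shift that guess (to cancel), rather than waiting for certainty.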

3) Huge action spaces: the “170,000 options” lesson

OpenAI discretized each hero’s action space into roughly 170,000 possible actions. At any given moment, about 1,000 of them were valid.

That’s not a gaming curiosity. It mirrors modern automation design:

- In customer communication, the “action” isn’t just send message. It’s channel selection, timing, tone, offer, compliance rules, and escalation choices.

- In robotics, the “action” isn’t just move arm. It’s path planning, grasp selection, collision avoidance, task prioritization, and recovery behaviors.

When teams say “we tried automation but it didn’t work,” they often built a brittle rules engine that collapses under combinatorics. OpenAI Five is an argument for policy-based decision-making (learned behavior) rather than endless hand-authored branching logic.
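In a policy-based setup, a common way to handle a large action space where only a fraction of actions is legal at any moment is action masking. The policy scores every action, and invalid actions get zero probability before sampling. The minimal NumPy sketch below assumes a flat action space and a boolean validity mask. The sizes mirror the figures above (roughly 170,000 actions, about 1,000 valid), but this is a generic technique, not OpenAI Five's actual action head.

```python
import numpy as np

def masked_softmax(logits, valid_mask):
    """Softmax over logits with invalid actions forced to probability 0."""
    masked = np.where(valid_mask, logits, -np.inf)
    masked = masked - masked.max()          # numerical stability (max is over valid entries)
    exp = np.exp(masked)                    # exp(-inf) == 0 for invalid actions
    return exp / exp.sum()

rng = np.random.default_rng(0)
N_ACTIONS = 170_000                         # size of the discretized action space
logits = rng.normal(size=N_ACTIONS)         # stand-in for a policy network's output

# Hypothetical mask: 1,000 actions are legal at this moment.
valid_mask = np.zeros(N_ACTIONS, dtype=bool)
valid_mask[rng.choice(N_ACTIONS, size=1_000, replace=False)] = True

probs = masked_softmax(logits, valid_mask)
action = rng.choice(N_ACTIONS, p=probs)
print(action, valid_mask[action])
```

Instead of hand-writing a branch for every combination, the rules only decide what is allowed. The learned policy decides what is best among the allowed options.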

4) High-dimensional observations: real systems have too many signals

OpenAI Five consumed about 20,000 floating-point inputs representing what a human is allowed to know, extracted through the game API.

That’s basically what modern SaaS looks like:

- Product analytics events

- CRM fields + activity history

- Ticket metadata + conversation history

- Billing status

- Feature entitlements

- Risk signals

The practical point: automation improves when you feed it structured signals from your systems, not when you ask it to guess everything from a single channel.
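Here is a minimal sketch of what "structured signals" can look like in practice. Fields from several systems are flattened into one fixed-length numeric vector, with an explicit flag for each missing value so the decision layer can tell "zero" from "unknown." The field names are hypothetical placeholders, not any particular CRM's or billing system's schema.

```python
from typing import Optional

# Hypothetical signal schema: (source system, field name).
FEATURES = [
    ("analytics", "sessions_last_7d"),
    ("crm", "open_opportunities"),
    ("support", "open_tickets"),
    ("billing", "days_past_due"),
    ("risk", "churn_score"),
]

def build_observation(records: dict) -> list[float]:
    """Flatten per-system records into [value, is_missing] pairs for each feature."""
    obs: list[float] = []
    for system, field in FEATURES:
        value: Optional[float] = records.get(system, {}).get(field)
        if value is None:
            obs.extend([0.0, 1.0])          # placeholder value + "missing" flag
        else:
            obs.extend([float(value), 0.0])
    return obs

customer = {
    "analytics": {"sessions_last_7d": 12},
    "support": {"open_tickets": 2},
    "billing": {"days_past_due": 0},
    # CRM and risk data did not arrive in time.
}
print(build_observation(customer))
```

Here `days_past_due = 0` is a real zero, while the missing CRM and risk fields are also encoded as 0.0. Only the flag distinguishes them, which is what lets the system behave sensibly when a signal is absent.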

The training approach is the blueprint for scalable digital services

OpenAI Five learned entirely from self-play and used a scaled version of Proximal Policy Optimization (PPO). It didn’t rely on human replays to get started.

Even if your company isn’t training reinforcement learning agents today, the principles behind that approach show up in how successful AI-powered services are built.
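For reference, the core of PPO is a clipped surrogate objective that keeps each policy update close to the previous policy. Below is a minimal NumPy sketch of that standard objective as defined in the original PPO paper, computed for a batch of already-collected samples. It is not OpenAI Five's scaled, distributed implementation. The sample numbers are arbitrary, and eps=0.2 is simply a common default.

```python
import numpy as np

def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), with r = pi_new / pi_old."""
    ratio = np.exp(logp_new - logp_old)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - eps, 1.0 + eps) * advantages
    return np.minimum(unclipped, clipped).mean()   # maximize this (or minimize its negative)

# Arbitrary example batch: probabilities of the taken actions under the old/new policy.
logp_old = np.log(np.array([0.20, 0.50, 0.10]))
logp_new = np.log(np.array([0.30, 0.40, 0.10]))
advantages = np.array([1.0, -0.5, 2.0])

print(ppo_clip_objective(logp_new, logp_old, advantages))
```

The first sample's probability ratio of 1.5 is clipped to 1.2. The update therefore gets no extra credit for moving the policy that far in a single step.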

Self-play is really “closed-loop improvement”

Self-play means the system improves by competing against itself and past versions of itself. In business terms, the closest analog is:

- Run an automated workflow

- Measure outcomes

- Compare against baselines (old versions, control groups)

- Promote what works, retire what doesn’t

This is how strong AI teams run production: continuous evaluation with controlled rollouts, not “set it and forget it.”
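Here is a minimal sketch of the "promote what works" step, assuming you already log outcomes per workflow version. The metric names, thresholds, and simple mean comparison are illustrative assumptions. A production setup would add proper statistical testing and a minimum sample size.

```python
from dataclasses import dataclass
from statistics import mean

@dataclass
class VersionResults:
    name: str
    resolved: list[int]      # 1 if the case was resolved, else 0
    reopened: list[int]      # 1 if the case was later reopened, else 0

def should_promote(champion: VersionResults, challenger: VersionResults,
                   min_lift: float = 0.02, max_reopen_increase: float = 0.01) -> bool:
    """Promote the challenger only if it beats the champion without regressing a guardrail."""
    lift = mean(challenger.resolved) - mean(champion.resolved)
    reopen_delta = mean(challenger.reopened) - mean(champion.reopened)
    return lift >= min_lift and reopen_delta <= max_reopen_increase

champion = VersionResults("v12", resolved=[1, 1, 0, 1, 0, 1, 1, 0, 1, 1],
                          reopened=[0, 0, 0, 1, 0, 0, 0, 0, 0, 0])
challenger = VersionResults("v13", resolved=[1, 1, 1, 1, 0, 1, 1, 1, 1, 1],
                            reopened=[0, 1, 0, 1, 0, 0, 1, 0, 0, 0])
print(should_promote(champion, challenger))
```

In this toy data, the challenger resolves more cases but triples the reopen rate, so it stays in the challenger slot.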

Exploration beats “strategy collapse”

OpenAI noted that agents can collapse into repetitive, narrow strategies. To counter this, OpenAI Five played 80% of its games against current copies of itself and 20% against past versions.

If you run marketing automation or customer support automation, you’ve seen the same failure mode:

- A model finds a shortcut that improves one metric while harming long-term outcomes.

- A support bot over-deflects tickets, which drives complaints up and CSAT down later.

- An email system learns that aggressive subject lines increase opens, then drives unsubscribes.

The fix is also similar:

- Keep historical baselines

- Evaluate against older policies

- Use multi-metric guardrails (retention, escalation rate, refund rate)

Automation needs “anti-collapse” design, or it will optimize itself into a corner.
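OpenAI’s 80/20 opponent mix is the RL version of "evaluate against older policies." Here is a minimal sketch of that mix as an opponent-sampling rule, assuming a pool of saved policy snapshots. Choosing uniformly among past snapshots is a simplifying assumption here, not necessarily how OpenAI weighted its past versions.

```python
import random

def sample_opponent(current, past_snapshots, p_current=0.8, rng=random):
    """Pick the current policy with probability p_current, otherwise a random past snapshot."""
    if not past_snapshots or rng.random() < p_current:
        return current
    return rng.choice(past_snapshots)

rng = random.Random(42)
pool = ["v1", "v2", "v3", "v4"]           # hypothetical saved snapshots
picks = [sample_opponent("current", pool, rng=rng) for _ in range(10_000)]
print(picks.count("current") / len(picks))
```

The same shape works for automation: route most evaluation traffic against the current version, and keep a steady slice against older baselines so a regression cannot hide behind a moving target.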

Reward design is the whole game

OpenAI’s reward was built from metrics human players track: net worth, kills, deaths, assists, last hits, and so on. Each hero’s reward was then normalized by subtracting the opposing team’s average reward. This stopped the agents from finding positive-sum situations where both teams could gain at once.
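The opponent-average subtraction is small enough to show directly. Here is a minimal sketch with made-up per-hero rewards for two teams of five:

```python
def zero_sum_rewards(team_a, team_b):
    """Subtract the opposing team's average reward from each hero's raw reward."""
    avg_a = sum(team_a) / len(team_a)
    avg_b = sum(team_b) / len(team_b)
    return [r - avg_b for r in team_a], [r - avg_a for r in team_b]

# Made-up raw rewards: both teams collect +3 each, e.g. by farming instead of fighting.
team_a = [3.0, 3.0, 3.0, 3.0, 3.0]
team_b = [3.0, 3.0, 3.0, 3.0, 3.0]
print(zero_sum_rewards(team_a, team_b))
```

If both teams collect the same raw reward, every hero’s normalized reward is zero. Colluding to farm reward together earns nothing; only outperforming the other team pays.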

Translate that into SaaS and digital services:

- If you reward only “tickets closed,” you’ll get fast closures and angry customers.

- If you reward only “leads created,” you’ll get junk leads.

- If you reward only “warehouse throughput,” you’ll get fragile operations and higher error rates.

A good reward function (or KPI stack) needs:

- A primary outcome (revenue, resolution, throughput)

- Counter-metrics (quality, compliance, churn)

- Time-aware weighting (short-term vs long-term)

This is where I’ve found teams get the fastest improvements: define the scorecard before you automate.
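Here is a minimal sketch of a scorecard that combines a primary outcome, counter-metrics, and time-aware weighting into one number. Every metric name and weight, including the 30/70 short-versus-long split, is a hypothetical placeholder you would replace with your own. The point is the structure, not the values.

```python
# Hypothetical support-automation scorecard. Metric values are rates in [0, 1].
PRIMARY = {"resolution_rate": 1.0}
COUNTER = {"reopen_rate": -1.5, "compliance_violation_rate": -5.0}
SHORT_TERM_WEIGHT = 0.3   # weight on this week's results
LONG_TERM_WEIGHT = 0.7    # weight on 90-day results

def period_score(metrics: dict) -> float:
    """Weighted sum of the primary outcome plus (negatively weighted) counter-metrics."""
    weights = {**PRIMARY, **COUNTER}
    return sum(w * metrics[name] for name, w in weights.items())

def scorecard(short_term: dict, long_term: dict) -> float:
    """Blend short-term and long-term period scores."""
    return SHORT_TERM_WEIGHT * period_score(short_term) + LONG_TERM_WEIGHT * period_score(long_term)

fast_closer = scorecard(
    short_term={"resolution_rate": 0.95, "reopen_rate": 0.30, "compliance_violation_rate": 0.01},
    long_term={"resolution_rate": 0.70, "reopen_rate": 0.35, "compliance_violation_rate": 0.01},
)
careful = scorecard(
    short_term={"resolution_rate": 0.85, "reopen_rate": 0.05, "compliance_violation_rate": 0.0},
    long_term={"resolution_rate": 0.85, "reopen_rate": 0.05, "compliance_violation_rate": 0.0},
)
print(round(fast_closer, 3), round(careful, 3))
```

The "fast closer" wins on this week's raw resolution rate but loses on the full scorecard once reopens and the long-term window are counted.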

Coordination without chat: what “team spirit” teaches product teams

One of the most interesting parts of OpenAI Five is that the five agents didn’t have an explicit communication channel. Coordination emerged via incentives.

They used a “team spirit” parameter: a weight from 0 to 1 that controlled how much each hero cared about individual reward vs the team’s average reward. They annealed it over training.

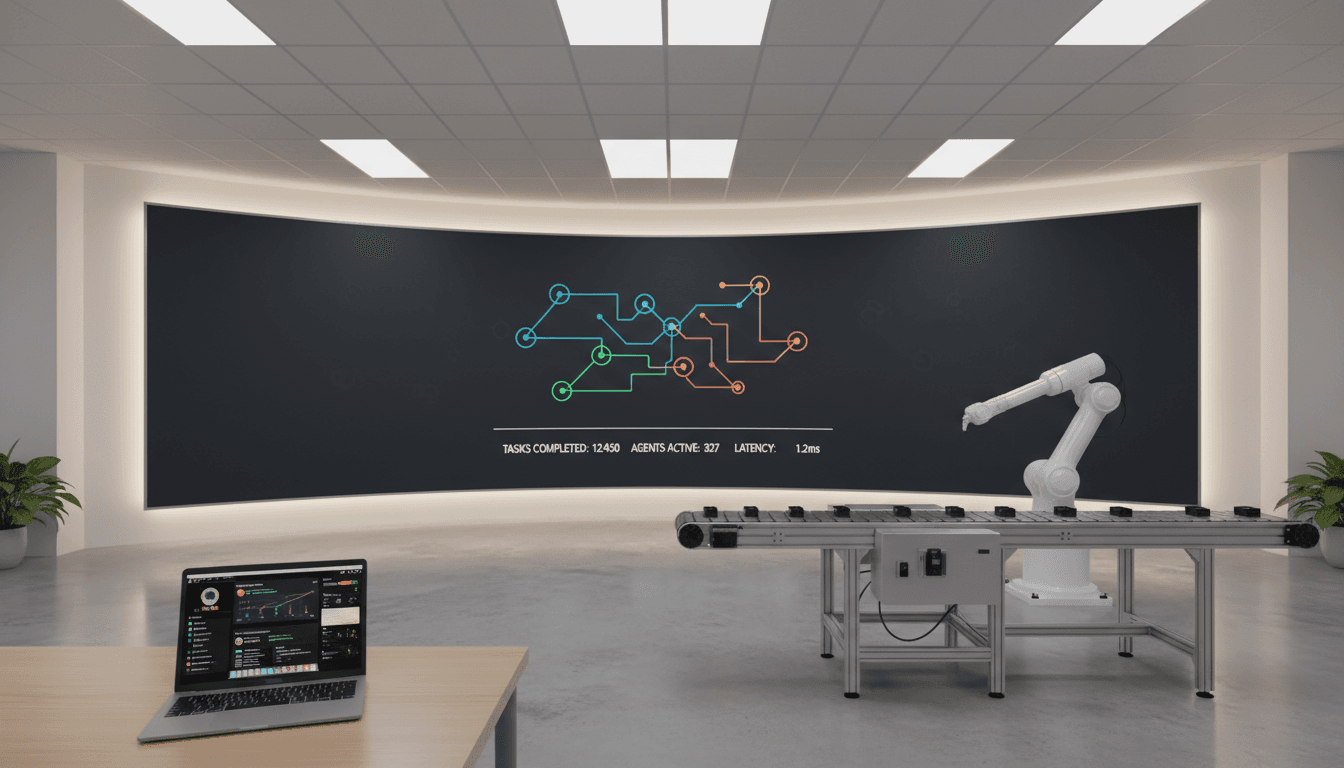
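The team-spirit weight can be written as a per-hero reward blend. This minimal sketch reads "how much each hero cared" as a linear interpolation between a hero's own reward and the team average. It also assumes a linear annealing schedule across the full 0-to-1 range. Both the schedule and its endpoints are illustrative assumptions, not OpenAI’s published settings.

```python
def team_spirit_rewards(individual, tau):
    """Blend each hero's reward with the team mean: (1 - tau) * own + tau * mean."""
    team_mean = sum(individual) / len(individual)
    return [(1 - tau) * r + tau * team_mean for r in individual]

def annealed_tau(step, total_steps, start=0.0, end=1.0):
    """Linearly move tau from `start` to `end` over training (illustrative schedule)."""
    frac = min(max(step / total_steps, 0.0), 1.0)
    return start + frac * (end - start)

# Made-up rewards: one hero gets the kill, the support who set it up gets nothing.
rewards = [4.0, 0.0, 1.0, 0.0, 0.0]
for step in (0, 50_000, 100_000):
    tau = annealed_tau(step, total_steps=100_000)
    print(step, tau, team_spirit_rewards(rewards, tau))
```

At τ = 0, the support who set up the kill gets no credit. At τ = 1, every hero is scored purely on the team’s result.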

That concept maps cleanly onto multi-agent automation systems in the real world:

- In a modern GTM stack, you have “agents” like lead scoring, routing, sequencing, pricing guidance, and churn prediction.

- In robotics and warehouse automation, you have fleets: pickers, movers, sorters, inventory optimization.

If each component optimizes only its local metric, the system fights itself.

A practical definition: Team spirit is the design discipline of rewarding the workflow, not the module.

When you build AI-driven automation, push shared objectives into the system:

- Shared definitions of success (what counts as a qualified lead?)

- Shared constraints (compliance rules, SLA commitments)

- Shared state (handoff context that persists)
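Here is a minimal sketch of shared definitions, constraints, and state. It assumes hypothetical lead-scoring and routing modules that read the same qualification rule and SLA constraint and pass one context object forward instead of re-deriving them. All names, scores, and thresholds are made up.

```python
from dataclasses import dataclass, field

# Shared definition and constraint, owned once and used by every module.
QUALIFIED_MIN_SCORE = 70          # hypothetical: what counts as a qualified lead
FIRST_RESPONSE_SLA_HOURS = 4      # hypothetical SLA commitment

@dataclass
class HandoffContext:
    lead_id: str
    score: int = 0
    notes: list[str] = field(default_factory=list)   # persists across handoffs

    @property
    def qualified(self) -> bool:
        return self.score >= QUALIFIED_MIN_SCORE

def score_lead(ctx: HandoffContext, signals: dict) -> HandoffContext:
    """Hypothetical scoring module: writes its result into the shared context."""
    ctx.score = 40 + 10 * signals.get("demo_requests", 0) + 20 * signals.get("pricing_visits", 0)
    ctx.notes.append(f"scored {ctx.score}")
    return ctx

def route_lead(ctx: HandoffContext) -> str:
    """Hypothetical routing module: uses the shared definition, not its own threshold."""
    queue = "sales" if ctx.qualified else "nurture"
    ctx.notes.append(f"routed to {queue}, respond within {FIRST_RESPONSE_SLA_HOURS}h")
    return queue

ctx = score_lead(HandoffContext("L-001"), {"demo_requests": 1, "pricing_visits": 1})
print(route_lead(ctx), ctx.notes)
```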

This is how U.S. SaaS companies scale customer communication without chaos: the “agents” cooperate because the incentives and interfaces force cooperation.

From esports to enterprise: where these ideas show up in 2025

OpenAI Five is a milestone, but it’s also a pattern library. Here are three concrete ways its lessons show up in modern U.S. technology and digital services.

1) Customer communication automation that plans, not just responds

The OpenAI Five lesson: single-step reaction is easy; multi-step planning is hard—and valuable.

In enterprise customer communication, the win isn’t “write a nice email.” It’s:

- Identify intent early

- Choose the right channel

- Escalate at the right moment

- Preserve context across handoffs

- Reduce time-to-resolution without inflating reopens

That’s long-horizon optimization in a partially observed environment.

2) Marketing automation that avoids local-metric traps

OpenAI Five’s reward design and anti-collapse approach are directly applicable to marketing ops. A mature automation setup will:

- Run controlled experiments (champion/challenger)

- Monitor secondary harms (spam complaints, unsubscribes)

- Prevent “strategy collapse” where one tactic dominates because it’s temporarily effective

If you’re generating leads, this matters because short-term lift that harms deliverability or brand trust is a net loss.

3) Robotics & automation that can adapt to changing environments

Dota updated frequently, changing the environment. That’s similar to what happens in robotics:

- Layouts change

- SKU mix changes

- Demand spikes (hello, holiday peak)

- Sensors drift

OpenAI used randomization during training to force exploration and robustness. Robotics teams use similar ideas (domain randomization, simulated variability) to get systems that don’t fall apart when reality changes.
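Here is a minimal sketch of domain randomization for a warehouse simulator. Each training or test episode draws its own layout size, SKU mix, demand level, and sensor noise, so a policy cannot overfit to one "typical" day. The parameter names and ranges are hypothetical, and the simulator itself is out of scope here.

```python
import random
from dataclasses import dataclass

@dataclass
class EpisodeConfig:
    aisle_count: int
    sku_count: int
    demand_multiplier: float   # 1.0 = normal day; higher values mimic peak season
    sensor_noise_std: float    # std-dev of Gaussian noise added to position readings (meters)

def sample_episode_config(rng: random.Random) -> EpisodeConfig:
    """Draw one randomized environment configuration (hypothetical ranges)."""
    return EpisodeConfig(
        aisle_count=rng.randint(10, 40),
        sku_count=rng.randint(500, 20_000),
        demand_multiplier=rng.choice([1.0, 1.0, 1.0, 1.5, 3.0]),  # occasional spikes
        sensor_noise_std=rng.uniform(0.0, 0.05),
    )

rng = random.Random(7)
# In a real pipeline, each config would parameterize one simulated episode.
configs = [sample_episode_config(rng) for _ in range(5)]
for cfg in configs:
    print(cfg)
```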

It’s December 2025—peak season pressures make this painfully real. If your automation can’t handle exceptions during holiday volume, it’s not automation; it’s a demo.

A practical checklist: applying “OpenAI Five thinking” to your automation

You don’t need 256 GPUs and 128,000 CPU cores to benefit from these ideas. You need the discipline.

Here’s what I’d implement first in an AI-powered automation program (marketing, support, or operations):

- Define a long-horizon goal. Example: “reduce time-to-resolution while keeping reopen rate under X%.”

- Instrument the environment. Pipe structured signals (events, statuses, constraints) into the decision layer.

- Design a scorecard, not a single KPI. Include counter-metrics to prevent perverse optimization.

- Create baselines and past versions. Always compare your current automation against the last stable one.

- Plan for partial observability. Decide what to do when the signal is missing.

- Add guardrails early. Compliance, safety, escalation rules, audit logging.

If you do only one thing: stop judging automation by a single interaction. Judge it by the full workflow outcome.

Where this is headed for U.S. digital services and automation

OpenAI Five was a public proof that large-scale learning systems can coordinate, plan, and adapt in complex environments without hand-coded playbooks. That same shift is why AI-powered SaaS is moving from “assistants” toward systems that run end-to-end processes—especially in customer communication and operational automation.

For leaders building in the United States, the opportunity is straightforward: treat automation like a control system, not a script. Measure it, stress-test it, keep it honest with baselines, and optimize for the outcomes you actually care about.

If you’re thinking about applying AI to marketing automation, customer support workflows, or robotics and warehouse operations, the right next step is to map your process like a game: state, actions, rewards, constraints. Once you can describe it that way, you can improve it.
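One way to start that mapping is to write the process down as a typed spec before touching any model. Here is a minimal sketch for a hypothetical support-escalation workflow. Every field, action, and weight is a placeholder.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ProcessSpec:
    state: tuple[str, ...]          # signals the decision layer can observe
    actions: tuple[str, ...]        # moves it is allowed to make
    rewards: dict[str, float]       # scorecard: metric -> weight (negative = counter-metric)
    constraints: tuple[str, ...]    # hard rules that override any learned policy

support_escalation = ProcessSpec(
    state=("ticket_age_hours", "customer_tier", "sentiment", "prior_reopens"),
    actions=("reply", "request_info", "escalate_tier2", "offer_refund", "close"),
    rewards={"resolved_within_sla": 1.0, "reopened_within_14d": -1.5, "refund_issued": -0.3},
    constraints=("never close without customer confirmation", "escalate all legal threats"),
)

# Quick sanity checks you might run before automating anything.
assert support_escalation.actions, "no actions defined"
assert any(w < 0 for w in support_escalation.rewards.values()), "scorecard has no counter-metric"
print(len(support_escalation.state), "signals,", len(support_escalation.actions), "actions")
```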

What would change in your business if your automation could coordinate like a team—not just execute tasks in isolation?