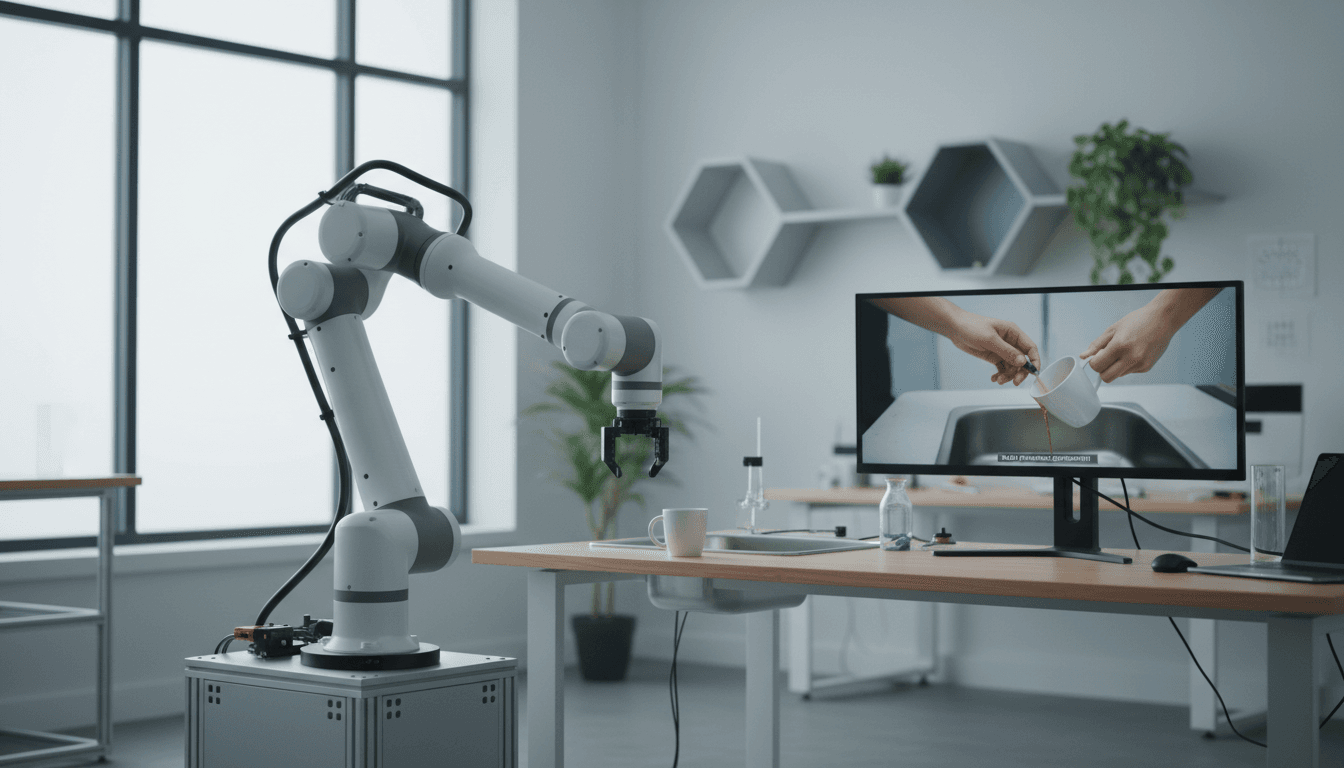

One-shot imitation learning is getting real. See how RHyME trains robots from a single how-to video—and what it means for automation ROI.

Robots That Learn From One How‑To Video (Finally)

Collecting robot training data is the hidden tax on automation. It’s why so many “we could automate that” ideas stall out after a pilot: the robot can do the demo task, but the next slightly different task means weeks of retuning, teleoperation, and more data collection.

Cornell’s new framework, RHyME (Retrieval for Hybrid Imitation under Mismatched Execution), tackles the most painful part of that tax: teaching robots from a single human how‑to video even when humans and robots move nothing alike. The reported results are the kind that make automation leaders pay attention: the system needs only about 30 minutes of robot data, and in lab evaluations it delivered a more than 50% increase in task success over prior methods.

This post sits in our AI in Robotics & Automation series, where the goal is practical progress: less training time, more adaptability, and a clearer path from research to factory floors, warehouses, hospitals, and service environments.

Why “one‑shot imitation learning” is a big deal in automation

One-shot imitation learning matters because it shrinks the time between “we saw a task once” and “the robot can do it reliably.” In most real deployments, the long pole isn’t the robot arm or the gripper—it’s the training loop.

Here’s what typically breaks when you try to train robots from videos:

- Embodiment mismatch: A human wrist flick isn’t a robot joint trajectory. Even if the goal is the same, the motions aren’t.

- Timing mismatch: Humans accelerate and decelerate fluidly; many robots execute more discretely.

- Viewpoint mismatch: The camera angle in a how‑to video rarely matches the robot’s on-board sensors.

- Error intolerance: Traditional imitation pipelines often assume the demo is clean and the robot can copy it closely. Real work is messy.

RHyME’s core idea is blunt and, in my opinion, correct: stop expecting the robot to mimic a human exactly. Instead, treat the human demo as a high-level intent signal, then let the robot fill in the “how” using its own prior experiences.

For operations teams, that’s the difference between:

- “We need weeks of teleop to collect task-specific data.”

- “We need a short calibration set, then robots learn new variants mostly from observation.”

What RHyME changes: learning despite human–robot mismatch

RHyME works by combining imitation with retrieval from a memory bank of prior robot experiences. When the robot watches a new how‑to video once, it doesn’t try to map every human motion to a robot motion. It retrieves similar robot behaviors it has already performed and composes them into a plan.

A simple example, based on the scenario the researchers describe:

- The robot watches a human pick up a mug from a counter and place it in a sink.

- RHyME searches a stored set of robot demonstrations for related skills: grasping cup-like objects, lifting, moving toward basin-like targets, lowering into a container.

- The robot executes the task by stitching together those robot-native behaviors rather than copying the human trajectory.
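The retrieve-and-stitch idea can be sketched in a few lines. This is a simplified illustration, not RHyME’s actual method: it assumes each human video segment and each stored robot clip has already been turned into a feature vector by some visual encoder (not shown), and it swaps in plain nearest-neighbor search by cosine similarity to pick one robot clip per segment. The skill names and vectors are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve_and_stitch(human_segments, robot_memory):
    """For each human video segment, pick the most similar stored robot clip.

    human_segments: list of feature vectors, one per segment of the demo video.
    robot_memory: dict mapping a robot skill-clip name to its feature vector.
    Returns the ordered list of robot clip names (the stitched plan).
    """
    plan = []
    for segment in human_segments:
        best = max(robot_memory, key=lambda name: cosine(segment, robot_memory[name]))
        plan.append(best)
    return plan


# Hypothetical 3-D embeddings; a real system would use learned, high-dimensional features.
robot_memory = {
    "grasp_cup": [1.0, 0.1, 0.0],
    "lift": [0.1, 1.0, 0.0],
    "move_to_basin": [0.0, 0.6, 0.8],
    "lower_into_container": [0.0, 0.1, 1.0],
}
human_demo = [[0.9, 0.2, 0.0], [0.2, 0.9, 0.1], [0.0, 0.5, 0.9], [0.1, 0.0, 0.95]]

print(retrieve_and_stitch(human_demo, robot_memory))
# ['grasp_cup', 'lift', 'move_to_basin', 'lower_into_container']
```

The point of the sketch is the shape of the problem: the human’s motion is never converted into joint angles; it is only used to look up robot behaviors that already work.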

The research team frames it as a kind of translation: mapping “human task” to “robot task,” not “human motion” to “robot motion.”

The “retrieval” part is the practical insight

Retrieval is what makes one-shot feasible. If the model had to invent a new policy from scratch after one video, you’d expect brittleness. But if it can recognize that “placing a mug into a sink” is compositionally similar to “lowering a utensil into a bin,” the robot can generalize with less data.

This is also why the reported 30 minutes of robot data is so interesting. It suggests RHyME isn’t just learning a single task; it’s building a skill library the robot can reuse.

The “hybrid imitation” part is what makes it robust

As the name suggests, hybrid imitation mixes learned behaviors with retrieval-based guidance. That combination tends to be more forgiving:

- When the demo is ambiguous, retrieval provides grounded options.

- When the environment differs slightly, the robot can pick a nearby behavior that still works.

- When an action fails, the system can fall back to alternate retrieved behaviors (in principle), instead of collapsing.
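To make the fallback bullet concrete, here is a minimal sketch of ranked retrieval with fallback. It assumes the retriever returns candidate behaviors ordered by similarity and that some success check (here a hypothetical `try_execute` callback) reports whether an attempt worked. The source only says this kind of fallback is possible in principle, so treat the structure as illustrative.

```python
from typing import Callable, List, Optional


def execute_with_fallback(
    ranked_candidates: List[str],
    try_execute: Callable[[str], bool],
    max_attempts: int = 3,
) -> Optional[str]:
    """Try retrieved behaviors in similarity order; return the first that succeeds.

    ranked_candidates: behavior names, most similar first.
    try_execute: hypothetical callback that runs a behavior and reports success.
    max_attempts: cap on retries so a bad step cannot loop forever.
    Returns the successful behavior name, or None if every attempt failed.
    """
    for behavior in ranked_candidates[:max_attempts]:
        if try_execute(behavior):
            return behavior
    return None


# Simulated outcomes: the top-ranked grasp slips, the second one holds.
outcomes = {"top_grasp": False, "side_grasp": True, "pinch_grasp": True}
chosen = execute_with_fallback(["top_grasp", "side_grasp", "pinch_grasp"], outcomes.get)
print(chosen)  # side_grasp
```

The attempt cap is a deliberate choice: on a real line, a robot that retries indefinitely is its own failure mode, so after a few misses control should pass to a human.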

In real automation settings, robustness beats elegance every time.

Where this lands first: manufacturing, logistics, and healthcare

The best near-term use of one-shot learning is not a “general home robot.” It’s faster deployment of task families in controlled-but-changing environments. That’s exactly what manufacturing, logistics, and healthcare look like in 2025.

Manufacturing: high-mix, low-volume lines

Many plants are trending toward high-mix production: more SKUs, more changeovers, shorter runs. Traditional robot programming struggles here because each variant is “new.”

Where RHyME-like approaches fit:

- Machine tending variants: different part geometries, different placement fixtures

- Kitting and subassembly: similar picks/places with changing bins and part sizes

- End-of-line packaging: changing box sizes, inserts, or packing patterns

A realistic workflow I’d bet on:

- Record a short set of robot behaviors for foundational actions (grasp, place, insert, push, wipe, open/close).

- Let operators supply a single how-to video for each new variant.

- Use retrieval to map the new job to existing robot skills.

The win isn’t magic autonomy. The win is changeovers measured in hours, not weeks.

Logistics: the “same task” that never repeats exactly

Warehouse work looks repetitive until you zoom in. The carton is dented, the label is shifted, the tote is at a different angle.

RHyME aligns with logistics needs because a retrieval-based system can treat each job as “similar enough” to something it has already done and still succeed at:

- Item-to-tote placement with shifting target locations

- Returns processing where object appearance varies widely

- Singulation and sortation where timing and positioning drift

If you’re deploying robotics in a warehouse, the most expensive failures are often edge-case handling. Systems that tolerate mismatch reduce the number of times you need an engineer on-site.

Healthcare and service: procedural tasks with human demonstrations

Healthcare environments already rely on “watch once, do later” training, just for humans. Robots that can learn from a nurse or technician demonstrating a workflow once (without perfect staging) could speed adoption for:

- Supply room stocking and retrieval

- Transport and handoff routines (within safety constraints)

- Room turnover support tasks (bringing items, placing disposables)

The caution: healthcare adds compliance, safety, and human factors. But the training interface—learning from a quick video—is a natural fit.

What leaders should ask before betting on video-trained robots

One-shot learning systems live or die on deployment details. If you’re evaluating vendors or planning internal R&D, these are the questions that separate a compelling demo from a scalable program.

1) What’s in the “memory bank,” and who maintains it?

RHyME depends on stored prior examples. In practice, ask:

- How many skill demos are needed before retrieval becomes useful?

- Can your team add skills without ML engineers?

- Do skills transfer across robot models, grippers, and camera setups?

A strong answer looks like: a small, curated library of reusable skills with clear versioning and validation. A weak answer is: “we’ll just collect more data.”
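A “small, curated library with clear versioning and validation” can start as simply as the registry below. This is a hypothetical data model (the field names and the rule that only validated versions are retrievable are assumptions, not a description of RHyME or any vendor product): each skill version records the robot and gripper it was recorded on, and retrieval only sees the newest validated version that matches the current hardware.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SkillVersion:
    version: int
    robot_model: str
    gripper: str
    validated: bool = False  # set True only after passing on-cell acceptance tests


@dataclass
class SkillLibrary:
    skills: Dict[str, List[SkillVersion]] = field(default_factory=dict)

    def add(self, name: str, skill: SkillVersion) -> None:
        self.skills.setdefault(name, []).append(skill)

    def latest_validated(self, name: str, robot_model: str, gripper: str) -> Optional[SkillVersion]:
        """Newest validated version matching this robot and gripper, or None."""
        matches = [
            s for s in self.skills.get(name, [])
            if s.validated and s.robot_model == robot_model and s.gripper == gripper
        ]
        return max(matches, key=lambda s: s.version, default=None)


lib = SkillLibrary()
lib.add("grasp_cup", SkillVersion(1, "arm_a", "parallel_jaw", validated=True))
lib.add("grasp_cup", SkillVersion(2, "arm_a", "parallel_jaw"))  # recorded, not yet validated
lib.add("grasp_cup", SkillVersion(1, "arm_b", "suction", validated=True))

print(lib.latest_validated("grasp_cup", "arm_a", "parallel_jaw").version)  # 1
```

Keying skills by hardware also gives a direct answer to the transfer question: if no validated version exists for a robot or gripper, the lookup returns nothing rather than silently reusing a mismatched skill.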

2) How does the system handle safety and constraints?

In industrial and healthcare robotics, “mostly correct” can still be unacceptable.

Ask how constraints are enforced:

- collision avoidance and force limits

- forbidden zones (people, fragile equipment)

- object drop detection and recovery

Retrieval-based policies are promising, but guardrails are non-negotiable.
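One common pattern is to keep guardrails outside the learned policy, so every retrieved action is checked against hard constraints before it reaches the robot. The sketch below assumes a simplified action (a target point plus a commanded force) and axis-aligned box forbidden zones. It covers only force limits and forbidden zones; collision checking and drop detection need sensing that is not modeled here. The limit values are hypothetical placeholders, and a real cell would rely on certified safety controllers, not a Python check alone.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]
Box = Tuple[Point, Point]  # (min corner, max corner), metres


@dataclass
class Action:
    target: Point  # end-effector goal position, metres
    force_n: float  # commanded contact force, newtons


MAX_FORCE_N = 40.0  # hypothetical limit for this cell


def inside(point: Point, box: Box) -> bool:
    """True if the point lies within the axis-aligned box (edges included)."""
    lo, hi = box
    return all(l <= p <= h for p, l, h in zip(point, lo, hi))


def violations(action: Action, forbidden: List[Box]) -> List[str]:
    """Return the constraint violations for an action; empty means it may execute."""
    problems = []
    if action.force_n > MAX_FORCE_N:
        problems.append("force limit exceeded")
    if any(inside(action.target, zone) for zone in forbidden):
        problems.append("target in forbidden zone")
    return problems


operator_zone: Box = ((0.8, -0.5, 0.0), (1.5, 0.5, 2.0))
print(violations(Action((0.4, 0.0, 0.2), 15.0), [operator_zone]))  # []
print(violations(Action((1.0, 0.1, 0.3), 55.0), [operator_zone]))
# ['force limit exceeded', 'target in forbidden zone']
```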

3) What happens when the video is messy?

Real how-to videos include occlusions, distractions, and imperfect execution.

You want clarity on:

- tolerance to camera angle changes

- tolerance to partial occlusion (hands blocking objects)

- sensitivity to demo speed

- whether the system needs object labels or can work from raw pixels

A practical deployment plan assumes you’ll get imperfect demos and builds the process around that reality.
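Sensitivity to demo speed is one of the easier items to reason about. A common normalization, shown here as a generic technique and not as what RHyME does, is to resample every demo to a fixed number of steps so a slow and a fast recording of the same motion line up. This sketch uses linear interpolation over a made-up 1-D signal such as gripper height.

```python
from typing import List


def resample(signal: List[float], n: int) -> List[float]:
    """Linearly resample a 1-D trajectory to exactly n points (n >= 2)."""
    if len(signal) == 1:
        return signal * n
    out = []
    for i in range(n):
        pos = i * (len(signal) - 1) / (n - 1)  # fractional index into the original
        lo = int(pos)
        hi = min(lo + 1, len(signal) - 1)
        frac = pos - lo
        out.append(signal[lo] * (1 - frac) + signal[hi] * frac)
    return out


slow_demo = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]  # 9 frames
fast_demo = [0.0, 0.2, 0.4, 0.6, 0.8]  # 5 frames, same motion
print(resample(slow_demo, 5))  # [0.0, 0.2, 0.4, 0.6, 0.8]
print(resample(fast_demo, 5))  # [0.0, 0.2, 0.4, 0.6, 0.8]
```

Uniform resampling only removes differences in overall speed; if an operator pauses mid-task, alignment methods such as dynamic time warping handle uneven timing better.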

4) How do you measure readiness beyond “task success”?

Lab success rates are a start. For production, define:

- success rate by shift and by operator

- mean time to recover when something goes wrong

- number of human interventions per hour

- time to introduce a new SKU or new workstation layout

If the promise is “less training cost,” your metrics should track training hours avoided and engineering time avoided.
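These metrics are straightforward to compute once every attempt is logged. A minimal sketch, assuming a hypothetical log format with one record per task attempt (shift, operator, success flag, number of human interventions, and recovery minutes when something went wrong):

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Attempt:
    shift: str
    operator: str
    success: bool
    interventions: int
    recovery_min: Optional[float] = None  # minutes to recover, if a fault occurred


def success_rate_by(attempts: List[Attempt], key: str) -> Dict[str, float]:
    """Success rate grouped by an attribute such as 'shift' or 'operator'."""
    totals, wins = defaultdict(int), defaultdict(int)
    for a in attempts:
        group = getattr(a, key)
        totals[group] += 1
        wins[group] += a.success  # bool counts as 0 or 1
    return {g: wins[g] / totals[g] for g in totals}


def mean_time_to_recover(attempts: List[Attempt]) -> float:
    times = [a.recovery_min for a in attempts if a.recovery_min is not None]
    return sum(times) / len(times) if times else 0.0


def interventions_per_hour(attempts: List[Attempt], hours: float) -> float:
    return sum(a.interventions for a in attempts) / hours


log = [
    Attempt("day", "ana", True, 0),
    Attempt("day", "ben", False, 1, recovery_min=4.0),
    Attempt("night", "ana", True, 0),
    Attempt("night", "ben", False, 2, recovery_min=6.0),
]
print(success_rate_by(log, "shift"))  # {'day': 0.5, 'night': 0.5}
print(success_rate_by(log, "operator"))  # {'ana': 1.0, 'ben': 0.0}
print(mean_time_to_recover(log))  # 5.0
print(interventions_per_hour(log, 2.0))  # 1.5
```

In this toy log the per-shift view looks flat while the per-operator view shows the real problem, which is exactly why the breakdowns matter.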

A pragmatic playbook: how to use one-shot learning in a real automation program

The fastest path to value is to treat one-shot imitation as a changeover tool, not a full autonomy bet. Here’s a deployment pattern that fits most operations teams.

Step 1: Standardize a small set of reusable skills

Build (or buy) a library for actions your facility repeats:

- grasp & place (multiple grasp types)

- open/close (doors, drawers, lids)

- insert & align (peg-in-hole-like behaviors)

- push/pull, wipe, scoop

Keep it small and high-quality. Retrieval thrives on good references.

Step 2: Choose “task families,” not one-off tasks

Pick workflows where new jobs are variations of a pattern:

- “place object X into container Y”

- “pick from bin A, place to fixture B”

- “retrieve item from shelf, place on cart”

One-shot learning shines when it can reuse structure.
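A task family can be represented as a fixed skill sequence with open slots, which is roughly what “reuse structure” means in practice. The sketch below is hypothetical (the template syntax and skill names are illustrative): one template covers many variants, and a single demo only needs to supply the slot values.

```python
from typing import Dict, List

# Hypothetical template for the "place object X into container Y" family.
PLACE_INTO = [
    "grasp({obj})",
    "lift({obj})",
    "move_above({container})",
    "lower_into({container})",
    "release({obj})",
]


def instantiate(template: List[str], slots: Dict[str, str]) -> List[str]:
    """Fill a task-family template with the objects identified in one demo."""
    return [step.format(**slots) for step in template]


print(instantiate(PLACE_INTO, {"obj": "mug", "container": "sink"}))
# ['grasp(mug)', 'lift(mug)', 'move_above(sink)', 'lower_into(sink)', 'release(mug)']
print(instantiate(PLACE_INTO, {"obj": "bracket", "container": "tote_3"}))
```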

Step 3: Design the operator workflow

The operator experience matters as much as the model:

- define what a “good demo video” looks like (framing, lighting, distance)

- keep a quick checklist near the workstation

- make it easy to redo a demo and compare outcomes

If recording a demo feels like a chore, adoption dies.
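A checklist is easier to follow when the recording tool enforces it. Here is a sketch that flags a demo before it is submitted. The metadata fields and every threshold are hypothetical placeholders to tune per workstation, not values from the research.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DemoMeta:
    duration_s: float
    mean_brightness: float  # 0-255 average pixel intensity
    workspace_visible_frac: float  # share of frames where the work area is in view


def demo_issues(meta: DemoMeta) -> List[str]:
    """Return human-readable reasons to redo a demo; an empty list means accept."""
    issues = []
    if not 3.0 <= meta.duration_s <= 120.0:
        issues.append("duration outside 3-120 s")
    if meta.mean_brightness < 60:
        issues.append("too dark")
    if meta.workspace_visible_frac < 0.8:
        issues.append("work area out of frame too often")
    return issues


print(demo_issues(DemoMeta(25.0, 110.0, 0.95)))  # []
print(demo_issues(DemoMeta(25.0, 40.0, 0.6)))
# ['too dark', 'work area out of frame too often']
```

Returning plain-language reasons instead of a pass/fail flag keeps the redo loop fast: the operator knows what to fix before recording again.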

Step 4: Validate with controlled “messiness”

Before you scale, intentionally test:

- different object colors and materials

- cluttered backgrounds

- slight relocation of bins and targets

- faster/slower demonstrations

The goal is to find where mismatch tolerance ends—then design around it.
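To make the controlled-messiness test systematic, enumerate the combinations instead of picking a few by hand. A minimal sketch with hypothetical factor levels, where each row becomes one trial to run and log:

```python
from itertools import product

factors = {
    "object": ["red_mug", "clear_glass", "steel_cup"],
    "background": ["clean", "cluttered"],
    "bin_offset_cm": [0, 5],
    "demo_speed": ["slow", "normal", "fast"],
}

# One dict per combination, e.g. {'object': 'red_mug', 'background': 'clean', ...}
trials = [dict(zip(factors, combo)) for combo in product(*factors.values())]
print(len(trials))  # 36, i.e. 3 * 2 * 2 * 3
print(trials[0])
# {'object': 'red_mug', 'background': 'clean', 'bin_offset_cm': 0, 'demo_speed': 'slow'}
```

A full grid grows multiplicatively. If it gets too large, a pairwise (all-pairs) subset is a common way to cut it down while still covering every pair of factor levels at least once.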

People also ask: quick answers about robots learning from video

Can robots really learn a new task from one video? Yes, in constrained environments and for task families where the robot can reuse prior skills. The “one video” is more like a task specification than full training.

Why is human–robot mismatch such a problem? Humans have different joints, speeds, and dexterity. If a method assumes the robot must copy human motions, small differences compound into failure.

What’s the business value of imitation learning in robotics? Lower training cost and faster changeovers. If you reduce teleoperation and engineering retuning, you speed deployment across more sites and SKUs.

What this signals for 2026 automation roadmaps

RHyME is a research result, not a turnkey product, but the direction is clear: robot training is shifting from “collect huge task-specific datasets” to “reuse skill libraries and learn from minimal demonstrations.” If that holds, the winners won’t just be the teams with the best robots. They’ll be the teams with the best skill management and the cleanest path from observation to validated execution.

If you’re building an AI in Robotics & Automation roadmap for 2026, start treating video-based robot training as a serious option for high-mix operations. Even a modest improvement—say, cutting changeover programming from ten days to two—can justify the investment.
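The ten-days-to-two example is easy to turn into a number. A back-of-the-envelope sketch; the changeover count and day rate are hypothetical inputs to replace with your own:

```python
def annual_savings(days_before: float, days_after: float,
                   changeovers_per_year: int, cost_per_day: float) -> float:
    """Engineering cost avoided per year from shorter changeover programming."""
    return (days_before - days_after) * changeovers_per_year * cost_per_day


# Hypothetical: 12 changeovers a year at 1,200 (any currency) per engineering day.
print(annual_savings(10, 2, 12, 1200.0))  # 115200.0
```

This counts engineering time only; downtime avoided during faster changeovers would add to it.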

What task in your operation gets retrained from scratch over and over, even though it’s basically the same sequence with different objects? That’s the first place I’d test one-shot imitation learning.

If you’re exploring AI-powered robotics and want a practical assessment of where video-trained robots fit in your environment (and where they don’t), map your top 10 “task families” and measure how much time you currently spend on data collection and retuning. That number becomes your ROI baseline.
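A sketch of that baseline, assuming you have logged (or estimated) the yearly hours each task family consumes in data collection and retuning; the family names and hours are invented for illustration:

```python
from typing import Dict, List, Tuple


def roi_baseline(hours: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Rank task families by total (data collection + retuning) hours per year."""
    totals = {family: collect + retune for family, (collect, retune) in hours.items()}
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)


baseline = roi_baseline({
    "place part into fixture": (120.0, 80.0),
    "tote-to-shelf restock": (60.0, 30.0),
    "pack carton with insert": (90.0, 150.0),
})
for family, total in baseline:
    print(f"{family}: {total:.0f} h/yr")
print(sum(total for _, total in baseline))  # 530.0
```

The top of the ranked list is the natural first candidate for a one-shot pilot, since that is where avoided hours show up fastest.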