Multi-objective RL can train robots to follow human norms, not just safety rules. Learn how ordered norm objectives reduce violations in real deployments.

Teaching Robots Norms with Multi-Objective RL

Most robotics teams don’t fail at making robots safe. They fail at making robots socially acceptable.

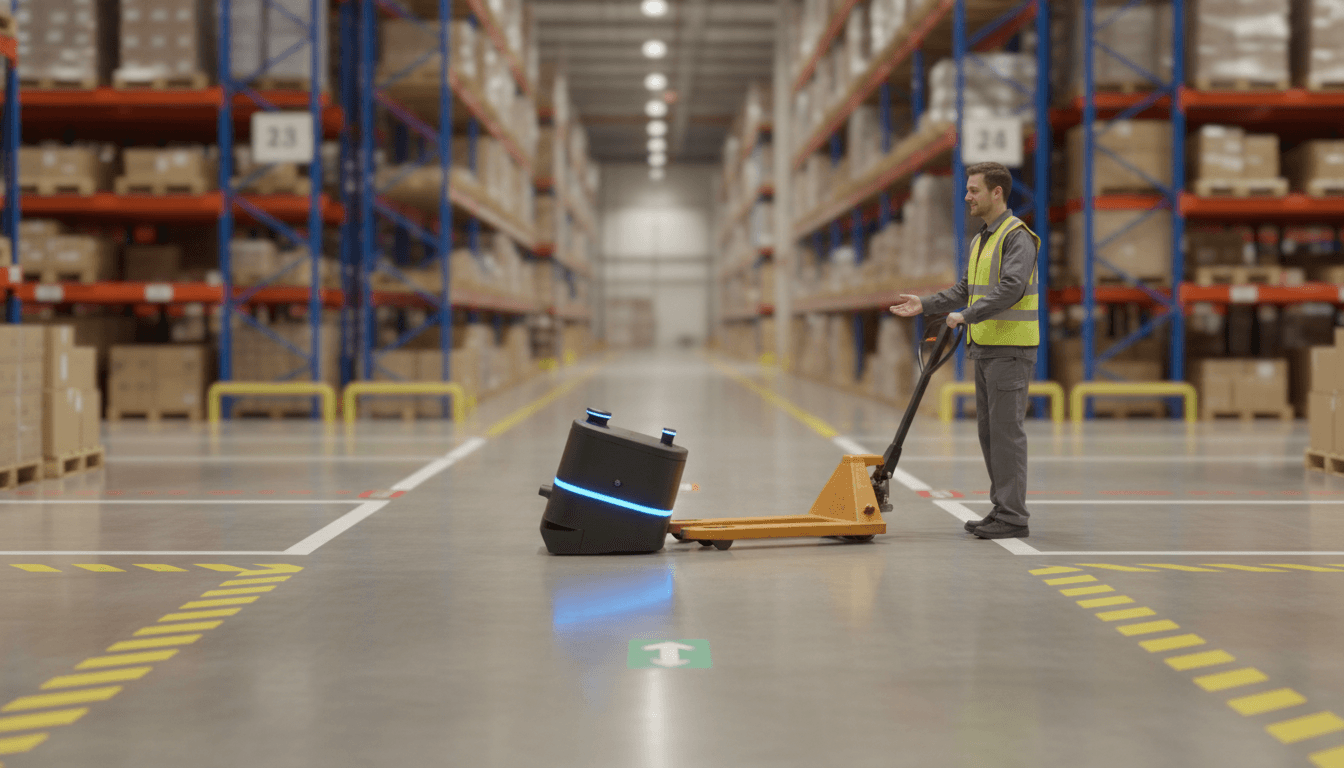

A warehouse AMR can avoid collisions perfectly and still be the robot everyone complains about: blocking aisles, cutting off workers at intersections, “camping” near doors because it found a loophole that technically satisfies the rules. In healthcare, a mobile assistant can follow every safety constraint and still behave in ways that feel disrespectful—like hovering too close to patients or rushing past staff during shift change.

That gap—safe behavior vs. normative behavior—is exactly what a distinguished IJCAI 2025 paper tackles with an approach that combines logic-based rules with multi-objective reinforcement learning (MORL). The result is a practical way to train autonomous agents that don’t just optimize for task reward, but also minimize violations of human norms in a predictable, prioritizable way.

Why “safe RL” isn’t enough for robots in the real world

Safety constraints answer questions like “Will the robot crash into something?” Norms answer questions like “Will the robot behave in a way people accept, trust, and cooperate with?”

In robotics & automation, that difference matters because humans adapt their behavior around machines. If an autonomous forklift technically avoids collisions but drives aggressively, human workers will hesitate, step unpredictably, or avoid certain routes. The robot remains “safe” on paper while the overall system becomes less safe in practice.

A concrete example: the polite robot problem

Consider two navigation policies for an AMR:

- Policy A (safe but rude): Takes the shortest path, regularly squeezes through narrow gaps, forces humans to stop, and “wins” hallway right-of-way.

- Policy B (norm-compliant): Yields at intersections, avoids passing too close, and chooses routes that reduce human disruption.

Traditional reinforcement learning will gravitate toward Policy A if it’s rewarded for throughput and only constrained by collision avoidance. The missing piece is a structured way to encode norms such as yielding, keeping a comfortable distance, and not blocking shared spaces, and to handle conflicts when those norms collide with deadlines.

The tricky part: norms aren’t just constraints

In many robotics deployments, teams start by treating norms as hard constraints (“never enter area X,” “always stop at crossing”). But real norms include conditional rules, exceptions, and “do the best you can if you already messed up” situations.

A classic example is a contrary-to-duty (CTD) obligation:

1. Maintain a 10-meter following distance.

2. If you’re already too close, slow down to restore distance.

Rule (2) only applies after (1) has been violated. That’s normal in driving, logistics, and clinical settings—where the environment is messy, humans are unpredictable, and the robot can’t always keep the primary obligation.
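Here is a minimal Python sketch of that two-rule structure as a per-step monitor. It assumes two hypothetical observations: `gap_m`, the distance to the vehicle ahead, and `decelerating`. It also uses the 10-meter threshold from the example. The monitor checks the repair obligation only once the primary obligation is already broken. That is what makes it contrary-to-duty rather than a second, independent constraint.

```python
from dataclasses import dataclass

MIN_GAP_M = 10.0  # primary obligation: keep at least 10 m following distance


@dataclass
class Step:
    gap_m: float        # hypothetical observation: distance to the vehicle ahead
    decelerating: bool  # hypothetical observation: is the robot slowing down?


def ctd_violations(step: Step) -> dict:
    """Report which obligations are violated at this step (hypothetical monitor)."""
    primary_violated = step.gap_m < MIN_GAP_M
    # The contrary-to-duty rule only becomes active once the primary rule is broken.
    secondary_violated = primary_violated and not step.decelerating
    return {"keep_distance": primary_violated, "restore_distance": secondary_violated}


trace = [Step(12.0, False), Step(8.0, False), Step(8.5, True), Step(11.0, False)]
for t, step in enumerate(trace):
    print(t, ctd_violations(step))
```

Step 1 breaks both rules. Step 2 still breaks the primary rule but honors the repair obligation, a distinction a single hard constraint cannot express.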

Why conditional norms break naive reward shaping

If you encode a norm as an implication—“if pedestrians are present, then stop”—you get a nasty side effect: the rule is considered satisfied whenever pedestrians aren’t present.

In reinforcement learning terms, that can create free reward for doing nothing useful. An agent may learn to procrastinate or extend episodes to rack up “norm satisfied” points rather than completing its mission.

This is one of those issues that sounds academic until you see it in production: robots that hesitate, stall, or pick odd waiting positions because the objective function accidentally rewards it.
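The arithmetic behind that loophole is easy to reproduce. The sketch below compares two reward schemes for an agent that simply stalls in an empty corridor, using one `(pedestrians_present, robot_stopped)` pair per step:

- a per-step “+1 whenever the implication holds” reward
- a penalty that fires only on actual violations

The traces and reward values are illustrative assumptions.

```python
from typing import List, Tuple

Trace = List[Tuple[bool, bool]]  # (pedestrians_present, robot_stopped) per step


def implication_reward(pedestrians: bool, stopped: bool) -> float:
    # "If pedestrians are present, then stop" is vacuously true when nobody is there.
    return 1.0 if (not pedestrians) or stopped else 0.0


def violation_penalty(pedestrians: bool, stopped: bool) -> float:
    # Only charge a price when the norm is active AND broken.
    return -1.0 if pedestrians and not stopped else 0.0


def episode_totals(trace: Trace) -> Tuple[float, float]:
    """Total (implication reward, violation penalty) over one episode."""
    return (sum(implication_reward(p, s) for p, s in trace),
            sum(violation_penalty(p, s) for p, s in trace))


stall_trace: Trace = [(False, True)] * 50                    # idles 50 steps, never finishes
task_trace: Trace = [(False, False)] * 9 + [(True, True)]    # 10 productive steps
print("stall:", episode_totals(stall_trace))  # stall: (50.0, 0.0)
print("task:", episode_totals(task_trace))    # task: (10.0, 0.0)
```

Under the implication reward, stalling earns 50 versus 10 for the productive run, so a longer episode is strictly better. Under the violation penalty, both traces score 0.0, which leaves the task reward to separate them.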

From “restraining bolts” to normative restraining bolts

The IJCAI 2025 work builds on a family of methods known as Restraining Bolts—a Star Wars-inspired name for a very real idea: guide an RL agent with additional rule-based signals.

Restraining Bolts (RB): rewards for satisfying rules

A standard restraining bolt setup pairs each formal specification (expressed in a temporal logic over finite traces) with an extra reward when the agent satisfies it.

That sounds reasonable, but as we just saw, conditional norms can accidentally pay the agent even when the norm isn’t active.
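Mechanically, a restraining bolt runs an automaton for each specification alongside the environment and emits extra reward based on the automaton's state. The hand-written DFA below stands in for a compiled temporal-logic formula roughly meaning “every pickup is eventually followed by a dropoff.” The state names, event labels, bonus value, and the choice to pay only on *entering* an accepting state are illustrative assumptions, not details taken from the paper.

```python
# Hypothetical DFA for "every pickup is eventually followed by a dropoff".
# "idle" = no pending delivery (accepting); "carrying" = delivery pending (rejecting).
TRANSITIONS = {
    ("idle", "pickup"): "carrying",
    ("carrying", "dropoff"): "idle",
}
ACCEPTING = {"idle"}
BOLT_BONUS = 1.0  # illustrative reward magnitude


class RestrainingBolt:
    def __init__(self) -> None:
        self.state = "idle"

    def step(self, event: str) -> float:
        """Advance the automaton on one event label and return the bolt's reward."""
        was_accepting = self.state in ACCEPTING
        # Events with no listed transition leave the automaton state unchanged.
        self.state = TRANSITIONS.get((self.state, event), self.state)
        # This variant pays only when the automaton enters an accepting state.
        return BOLT_BONUS if self.state in ACCEPTING and not was_accepting else 0.0


bolt = RestrainingBolt()
events = ["move", "pickup", "move", "move", "dropoff", "move"]
rewards = [bolt.step(e) for e in events]
print(rewards)  # [0.0, 0.0, 0.0, 0.0, 1.0, 0.0]
```

In restraining-bolt setups, the learner typically conditions on both the environment state and the automaton state (here `bolt.state`). That way, a rule that refers to history still yields a Markovian learning problem.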

Normative Restraining Bolts (NRB): punish violations instead

The paper’s precursor idea—Normative Restraining Bolts—switches from “reward compliance” to “punish violations.” This is aligned with a common real-world intuition: obligations are meaningful because violating them triggers a sanction.

In robotics terms, it’s closer to how compliance programs work:

- You don’t get a bonus every second you’re compliant.

- You pay a price when you break a rule.

NRBs handle conditional obligations and contrary-to-duty norms better, but they introduce practical headaches:

- You often end up tuning penalty magnitudes by trial and error.

- Conflicts between norms are hard: which violation is “less bad,” and by how much?

- Updating norms can require retraining, because the penalty structure is entangled with the learned policy.
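The tuning problem shows up even in a two-option toy. In the sketch below, an NRB-style scalar return is task reward minus a hand-picked penalty per violation. Whether the agent prefers cutting through a dangerous zone or missing a deadline depends entirely on which penalties someone guessed. All plan names and numbers are made up for illustration.

```python
# Candidate plans: task reward plus violation counts per norm (illustrative numbers).
PLANS = {
    "shortcut_through_danger": {"task": 10.0, "danger_zone": 1, "missed_deadline": 0},
    "safe_detour_late": {"task": 6.0, "danger_zone": 0, "missed_deadline": 1},
}


def nrb_score(plan: dict, penalties: dict) -> float:
    """Scalar NRB-style return: task reward minus penalty * violation count."""
    return plan["task"] - sum(penalties[n] * plan[n] for n in penalties)


def best_plan(penalties: dict) -> str:
    return max(PLANS, key=lambda name: nrb_score(PLANS[name], penalties))


print(best_plan({"danger_zone": 3.0, "missed_deadline": 5.0}))   # shortcut_through_danger
print(best_plan({"danger_zone": 20.0, "missed_deadline": 5.0}))  # safe_detour_late
```

Neither setting is wrong on its own terms. The problem is that the intended priority lives implicitly in the constants, so nobody can read it off or audit it.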

The IJCAI 2025 insight: treat each norm as its own objective

The core contribution is Ordered Normative Restraining Bolts (ONRBs): a framework that recasts norm compliance as a multi-objective reinforcement learning problem.

Here’s the key move:

Each norm becomes its own objective, measured as violation cost, and the agent learns a policy that minimizes violations according to a defined priority order.

That matters because MORL gives you a principled way to deal with multiple competing goals—exactly what robots face outside the lab:

- throughput vs. energy use

- speed vs. comfort near humans

- deadline adherence vs. restricted zones

- task performance vs. privacy and dignity constraints
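In code, treating each norm as its own objective amounts to returning a vector of violation costs instead of a single scalar. This sketch assumes hypothetical per-step norm checks and state keys. It sums the costs elementwise over an episode, so each norm keeps its own tally rather than being blended into one number.

```python
from typing import Callable, Dict, List

State = Dict[str, float]

# Hypothetical norm checks: each returns 1.0 on violation, 0.0 otherwise.
NORMS: Dict[str, Callable[[State], float]] = {
    "min_human_distance": lambda s: 1.0 if s["human_dist_m"] < 1.0 else 0.0,
    "no_restricted_zone": lambda s: 1.0 if s["in_restricted"] > 0.0 else 0.0,
}


def violation_vector(state: State) -> List[float]:
    """One violation cost per norm, in the fixed order of NORMS."""
    return [check(state) for check in NORMS.values()]


def episode_costs(states: List[State]) -> List[float]:
    """Elementwise sum of the per-step violation vectors across an episode."""
    totals = [0.0] * len(NORMS)
    for s in states:
        totals = [t + v for t, v in zip(totals, violation_vector(s))]
    return totals


episode = [
    {"human_dist_m": 2.0, "in_restricted": 0.0},
    {"human_dist_m": 0.8, "in_restricted": 0.0},
    {"human_dist_m": 0.5, "in_restricted": 1.0},
]
print(dict(zip(NORMS, episode_costs(episode))))
# {'min_human_distance': 2.0, 'no_restricted_zone': 1.0}
```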

What “ordered” means in practice

The framework introduces a ranking among norms so the system can resolve conflicts explicitly.

If two norms conflict—say:

- Norm 1: avoid dangerous zones

- Norm 2: reach a critical destination by a deadline

…then the ordering tells the agent which norm has priority. In the paper’s grid-world demonstration, the deadline obligation ranks above the dangerous-zone norm. The agent therefore violates the lower-priority norm once (entering a dangerous tile) to satisfy the higher-priority deadline, then behaves conservatively afterward even when additional violations could improve task reward.

That’s a very robotics-native behavior pattern: “break the lesser rule once to satisfy the bigger obligation, then return to compliance.” Humans do this constantly.
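The ordered comparison itself can be sketched with Python's built-in tuple ordering, which is lexicographic. The trajectories and numbers below are invented to mirror the deadline-versus-dangerous-zone conflict described above. The deadline entry comes first because the ordering ranks it higher, and negated task reward comes last as a tie-breaker.

```python
# Per-trajectory outcomes in priority order:
# (missed_deadline, dangerous_tiles_entered, -task_reward).
# Tuples compare lexicographically, so earlier (higher-priority) entries dominate.
candidates = {
    "detour_misses_deadline": (1, 0, -5.0),
    "one_danger_tile": (0, 1, -8.0),
    "many_danger_tiles": (0, 3, -12.0),
}

best = min(candidates, key=lambda name: candidates[name])
print(best)  # one_danger_tile
```

`many_danger_tiles` earns the most task reward, but it loses on the second entry before task reward is ever consulted. This mirrors the conservative behavior after the single necessary violation.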

Why this is better than penalty tuning

ONRBs use MORL machinery to compute punishment magnitudes so that, if the agent learns an optimal policy, it will:

- minimize norm violations over time (when norms don’t conflict)

- minimize violations with respect to the specified priority order (when they do)

Instead of guessing whether a violation should cost “-3” or “-300,” you get an algorithmic route to values that enforce your intended hierarchy.
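For intuition on how computed magnitudes can enforce a hierarchy, here is one textbook-style construction. It is an assumption for illustration, not necessarily the algorithm the paper uses. It relies on two bounds: each norm can be violated at most `max_violations` times per episode, and total task reward can vary by at most `task_range`. Each norm then gets a weight larger than the biggest swing everything below it could ever produce. Under those bounds, the scalar score ranks episodes exactly as the lexicographic order would.

```python
from typing import List


def lexicographic_weights(num_norms: int, max_violations: int, task_range: float) -> List[float]:
    """Per-norm penalty weights, highest priority first. One extra violation of any
    norm outweighs the largest possible combined swing of all lower-priority terms."""
    weights: List[float] = []
    lower_total = task_range  # largest swing from task reward alone
    for _ in range(num_norms):  # build from the lowest-priority norm upward
        w = lower_total + 1.0   # strictly larger than everything below it
        weights.append(w)
        lower_total += w * max_violations
    return list(reversed(weights))


def scalar_score(task: float, violations: List[int], weights: List[float]) -> float:
    """Task reward minus weighted violation counts (higher is better)."""
    return task - sum(w * v for w, v in zip(weights, violations))


weights = lexicographic_weights(num_norms=2, max_violations=3, task_range=10.0)
print(weights)  # [44.0, 11.0]
```

The weights grow quickly with the number of norms and the per-episode violation bound. Keeping that growth in view helps explain why a systematic procedure is preferable to picking constants by hand.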

Operational bonus: you can activate or deactivate norms

Real deployments change:

- peak season routes in logistics (December surge planning)

- temporary restricted areas in hospitals (infection control)

- safety protocols during maintenance

ONRBs support deactivating/reactivating norms—a practical feature for robotics teams that need policy behavior to change when rules change, without rewriting the whole reward function from scratch.
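A minimal way to picture the bookkeeping side of activation: keep an `active` flag per norm and let only active norms contribute to the violation vector. The `NormRegistry` class, norm names, and state keys are hypothetical. Whether a trained policy adapts correctly after a toggle depends on the learning method, which is what ONRBs are designed to address, not on this registry alone.

```python
from typing import Callable, Dict, List

Check = Callable[[dict], bool]


class NormRegistry:
    """Hypothetical registry of ordered norms that can be switched on or off."""

    def __init__(self) -> None:
        self._checks: Dict[str, Check] = {}  # insertion order = priority order
        self._active: Dict[str, bool] = {}

    def add(self, name: str, check: Check) -> None:
        self._checks[name] = check
        self._active[name] = True  # norms start active

    def set_active(self, name: str, active: bool) -> None:
        self._active[name] = active

    def violations(self, state: dict) -> List[int]:
        """Violation vector in priority order; inactive norms always report 0."""
        return [
            int(self._active[name] and check(state))
            for name, check in self._checks.items()
        ]


norms = NormRegistry()
norms.add("infection_control_zone", lambda s: s["zone"] == "ward_b")
norms.add("quiet_hours", lambda s: s["speaker_on"] and s["hour"] >= 22)

state = {"zone": "ward_b", "hour": 23, "speaker_on": True}
print(norms.violations(state))  # [1, 1]
norms.set_active("infection_control_zone", False)  # temporary restriction lifted
print(norms.violations(state))  # [0, 1]
```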

Where this fits in AI in Robotics & Automation

This research is especially relevant to the “AI in Robotics & Automation” stack because it bridges three things that usually live in separate meetings:

1. Robotics performance requirements (KPIs like picks/hour, on-time delivery, utilization)

2. Safety engineering (hard constraints, hazard analysis, fault handling)

3. Compliance and human factors (norms, etiquette, legal/ethical expectations)

ONRBs offer a way to encode (3) without breaking (1) and (2), and without relying on vague “be nice” reward shaping.

Practical applications worth caring about

Warehouse & manufacturing automation

- AMRs that respect “right-of-way” norms in shared aisles

- Robots that avoid blocking emergency exits even if it reduces path optimality

- Explicit priority: human safety norms > regulatory zones > throughput targets

Healthcare robotics

- Mobile assistants that treat patient proximity as a norm objective (comfort distance)

- Priority handling: patient privacy norms > delivery speed in sensitive areas

- Exceptions and CTDs: if a robot enters a restricted hallway due to emergency rerouting, it must immediately reduce exposure (e.g., move to the nearest compliant waiting zone)

Service robotics in public spaces

- Delivery robots that obey local etiquette like yielding at crosswalks

- Conflict resolution: don’t block pedestrian flow vs. meet delivery deadlines

How to adopt norm-aware RL without getting stuck in theory

Robotics leaders tend to ask the same implementation questions. Here are the ones I consider “make or break,” with practical answers.

What should count as a “norm” in your system?

Treat a norm as a rule that:

- you can observe (or infer) from state and events

- you can detect violations of

- you can justify to a stakeholder

Good starting norms in robotics & automation are behavioral and local:

- yielding behavior at intersections

- maximum time blocking shared choke points

- minimum distance to humans

- restrictions by zone and time window
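The three criteria above map naturally onto a small data structure: a violation test computed from observable state, plus a one-line justification a stakeholder can read. The sketch below encodes two of the starting norms. The field names, observation keys, and thresholds (30 s of blocking, a 06:00 to 18:00 window) are placeholder assumptions to replace with site-specific values.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Norm:
    name: str
    violated: Callable[[dict], bool]  # computed from observable state and events
    justification: str                # the sentence you would say to ops or safety


NORMS = [
    Norm(
        name="max_chokepoint_blocking",
        # assumes perception reports how long the robot has occupied a choke point
        violated=lambda obs: obs["chokepoint_dwell_s"] > 30.0,
        justification="Robots must not block a shared choke point for more than 30 s.",
    ),
    Norm(
        name="zone_c_daytime_only",
        violated=lambda obs: obs["zone"] == "C" and not (6 <= obs["hour"] < 18),
        justification="Zone C is open to robots only between 06:00 and 18:00.",
    ),
]

obs = {"chokepoint_dwell_s": 42.0, "zone": "C", "hour": 20}
for norm in NORMS:
    if norm.violated(obs):
        print(f"{norm.name}: {norm.justification}")
```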

How do you set priorities without endless debate?

You need a priority order that matches how your organization already behaves under pressure. A workable template is:

1. Human safety norms (physical safety, hazardous areas)

2. Legal/regulatory norms (restricted zones, compliance protocols)

3. Operational norms (right-of-way, noise limits, blocking rules)

4. Business objectives (throughput, energy, utilization)

Write it down. Then test it with real conflict scenarios your ops team recognizes.

How do you evaluate “normative behavior” in pilots?

Don’t just measure collisions and task time. Add violation accounting that ops teams can interpret:

- violations per hour (by norm)

- percentage of missions with any high-priority violation

- time-to-recover after a violation (for CTD-like rules)
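Those three metrics are straightforward to compute from a violation log. The log schema below is an assumption, so adapt it to whatever your fleet telemetry already records. Each entry holds:

- mission id
- norm name
- priority, where 1 is highest
- start and end timestamps in seconds

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Violation:
    mission_id: str
    norm: str
    priority: int   # 1 = highest priority
    start_s: float  # when the violation began
    end_s: float    # when the robot was back in compliance


def violations_per_hour(log: List[Violation], hours: float) -> Dict[str, float]:
    counts = Counter(v.norm for v in log)
    return {norm: n / hours for norm, n in counts.items()}


def pct_missions_with_high_priority(log: List[Violation], missions: List[str],
                                    max_priority: int = 1) -> float:
    flagged = {v.mission_id for v in log if v.priority <= max_priority}
    return 100.0 * len(flagged & set(missions)) / len(missions)


def mean_time_to_recover(log: List[Violation], norm: str) -> float:
    """Average violation duration for one norm; returns 0.0 if it never occurred."""
    durations = [v.end_s - v.start_s for v in log if v.norm == norm]
    return sum(durations) / len(durations) if durations else 0.0


log = [
    Violation("m1", "min_human_distance", 1, 100.0, 103.0),
    Violation("m2", "chokepoint_blocking", 3, 50.0, 95.0),
    Violation("m2", "chokepoint_blocking", 3, 300.0, 315.0),
]
missions = ["m1", "m2", "m3", "m4"]
print(violations_per_hour(log, hours=2.0))  # {'min_human_distance': 0.5, 'chokepoint_blocking': 1.0}
print(pct_missions_with_high_priority(log, missions))  # 25.0
print(mean_time_to_recover(log, "chokepoint_blocking"))  # 30.0
```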

A strong sign you’re on track: you can point to a log and say, “We broke this low-priority norm once to satisfy the deadline norm, and the robot immediately returned to compliance.”

If you can’t explain a violation in one sentence, you don’t have a norm system—you have a mystery penalty function.

What this changes for robotics teams in 2026

Robotics is entering a phase where “it works in our facility” isn’t the bar anymore. Multi-site deployments, mixed human-robot workflows, and stricter governance mean robots need to behave consistently across contexts.

The ONRB idea pushes the field toward something I like because it’s honest: robots will sometimes violate rules, because the world forces trade-offs. The win is making those trade-offs explicit, ordered, and measurable.

For teams building autonomous robots for warehouses, hospitals, and public spaces, multi-objective norm compliance is becoming a core capability—right next to navigation, perception, and fleet orchestration.

If you’re evaluating AI for robotics & automation projects next quarter, ask your vendor or internal team one blunt question: when goals and norms conflict, which one wins—and how do you prove it?