Multi-goal reinforcement learning builds automation that adapts across tasks. See how robotics research maps to U.S. digital services and smarter workflows.

Multi-Goal Reinforcement Learning for Smarter Automation

Most automation projects fail for a boring reason: the systems are built for one goal in one environment, then we act surprised when real life changes the rules.

That’s why multi-goal reinforcement learning (RL) matters—especially in robotics, and surprisingly, in U.S. digital services too. When a system can learn to pursue many goals, in many conditions, it stops being a fragile demo and starts looking like something you can run in production.

Multi-goal reinforcement learning in challenging environments is one of the clearest bridges between frontier robotics research and the day-to-day automation that drives customer support, logistics, and marketing operations across the United States.

Why multi-goal reinforcement learning matters (beyond the lab)

Multi-goal reinforcement learning is about training an agent to reach different outcomes on demand—not just “do one task well,” but “do lots of tasks when asked.” In robotics, that could mean: pick up the mug, open the drawer, move to the charging dock, or avoid obstacles—all using the same underlying policy.

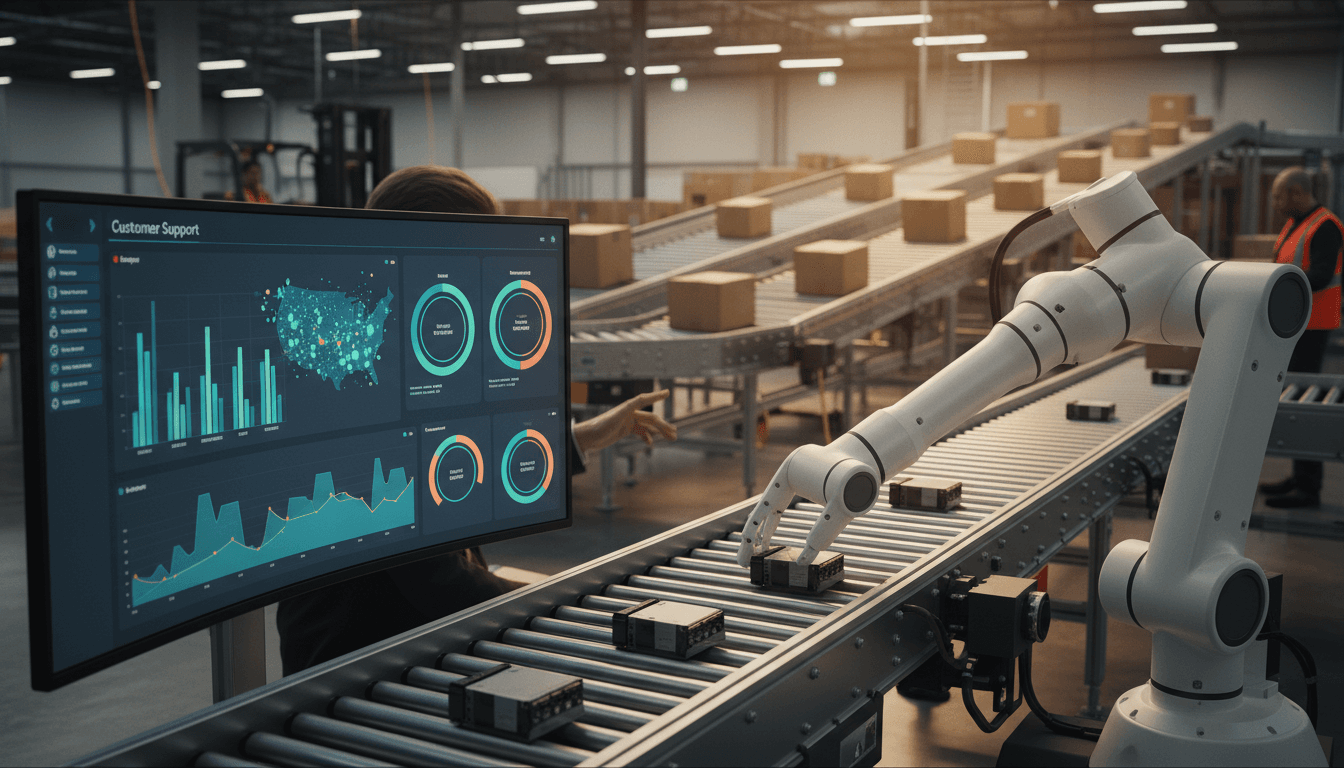

In U.S. businesses, the equivalent is a system that can adapt its “behavior” depending on the goal:

- A contact center AI that can resolve a billing issue, de-escalate an angry customer, or route to compliance depending on context

- A warehouse automation stack that can prioritize same-day shipments during a holiday surge, then switch back to cost-optimized batching in January

- A marketing automation system that can optimize for trial sign-ups, retention, or upsells without rebuilding the whole workflow

Here’s the thing about multi-goal training: it tends to produce agents that learn general skills (navigation, manipulation, planning, correction) that transfer across tasks. That transfer is exactly what most companies want when they say, “We need automation that doesn’t break every time the script changes.”

Single-goal RL is brittle by design

Traditional RL setups often reward one outcome. That leaves room for “reward hacking”: behaviors that technically maximize the reward without doing what the designer intended, and that rarely generalize.

Multi-goal RL pushes the system to learn goal-conditioned behavior. Done right, it reduces brittleness because the agent can’t overfit as easily to one narrow success path.

A useful mental model: multi-goal RL trains capabilities; single-goal RL trains tricks.

What “challenging environments” actually test

When robotics researchers say “challenging environments,” they usually mean conditions that break naive training:

- Sparse rewards: success feedback only at the end (e.g., “object placed correctly”), making learning slow

- Long-horizon tasks: actions must be correct for many steps in a row

- Partial observability: the agent can’t see everything (occlusions, sensor noise)

- Stochasticity: outcomes vary even with the same action

- Distribution shift: test-time scenarios differ from training

These aren’t just robotics problems. They’re the same problems you hit in digital automation:

- Your “reward” might be a customer staying subscribed months later (sparse, delayed)

- A successful resolution might require 8–20 steps across tools (long horizon)

- Customer context is incomplete or messy (partial observability)

- User behavior varies across regions, seasons, and channels (stochasticity)

- New products, pricing changes, and policy updates create constant shift (distribution shift)

In other words: robotics research isn’t “separate” from SaaS automation. Robotics just forces the issues to show up faster.
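To make the sparse-reward point concrete, here is a minimal sketch of a goal-conditioned reward. The function names, the tolerance value, and the dense variant shown for contrast are illustrative assumptions, not taken from any particular benchmark.

```python
import math


def sparse_reward(achieved, goal, tolerance=0.05):
    """Return 1.0 only when the achieved state is within `tolerance` of the goal.

    `tolerance` is an arbitrary illustrative value.
    """
    return 1.0 if math.dist(achieved, goal) <= tolerance else 0.0


def dense_reward(achieved, goal):
    """Contrast case: negative distance to the goal, informative on every step."""
    return -math.dist(achieved, goal)


# A hypothetical 2-D trajectory that only reaches the goal on its last step.
goal = (1.0, 1.0)
trajectory = [(0.0, 0.0), (0.4, 0.5), (0.8, 0.9), (1.0, 1.0)]

sparse_signal = [sparse_reward(p, goal) for p in trajectory]
dense_signal = [dense_reward(p, goal) for p in trajectory]
```

With the sparse version, every step before the last one returns zero, so the agent gets no hint about whether it is getting closer. That is the same shape as a retention metric that only reports months later.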

How multi-goal RL maps to U.S. digital services

Multi-goal RL can sound like a robotics-only topic until you translate “goals” into the way businesses actually operate.

Goal-conditioned behavior = intent-conditioned automation

In multi-goal RL, the agent gets a goal g (like a target state) and learns a policy π(a | s, g): a distribution over actions a conditioned on both the current state s and the goal g.

In digital services, g might be:

- “Reduce handle time”

- “Maximize customer satisfaction”

- “Reduce churn risk”

- “Collect missing compliance info”

- “Book a qualified demo”

This matters because most automation systems are built as fixed flows. Multi-goal framing encourages a different approach: build an agent that can choose actions depending on the objective and context.
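As a rough illustration of what "actions based on state and goal" means, the sketch below uses a tiny lookup table as a stand-in for π(a | s, g). The class, the state label, and the action names are all hypothetical, and a real system would use a learned model rather than a table.

```python
from collections import defaultdict


class GoalConditionedPolicy:
    """Tabular stand-in for pi(a | s, g): one preference score per (state, goal, action)."""

    def __init__(self, actions):
        self.actions = list(actions)
        # Unseen (state, goal, action) entries default to a score of 0.0.
        self.scores = defaultdict(float)

    def update(self, state, goal, action, delta):
        """Nudge the preference for `action` when in `state` pursuing `goal`."""
        self.scores[(state, goal, action)] += delta

    def act(self, state, goal):
        """Pick the highest-scoring action for this state and goal."""
        return max(self.actions, key=lambda a: self.scores[(state, goal, a)])


# Hypothetical action and goal names, for illustration only.
policy = GoalConditionedPolicy(["apply_discount", "request_documents", "offer_demo"])
policy.update("customer_hesitant", "reduce_churn_risk", "apply_discount", 1.0)
policy.update("customer_hesitant", "collect_compliance_info", "request_documents", 1.0)

# Same state, different goal, different action.
print(policy.act("customer_hesitant", "reduce_churn_risk"))        # apply_discount
print(policy.act("customer_hesitant", "collect_compliance_info"))  # request_documents
```

The point of the design is that the goal is an input, not a separate workflow. Adding a new objective means adding data, not rebuilding the flow.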

Robotics has the discipline SaaS automation needs

Robotics teams obsess over:

- safe exploration

- constraints

- monitoring

- rollback

- sim-to-real transfer

U.S. digital services teams often skip those disciplines until something breaks publicly. If you’re putting AI into customer communication, billing, or healthcare scheduling, you need the same seriousness—because the “robot” is now making decisions inside systems that affect people’s money, time, and trust.

Practical patterns: what to borrow from multi-goal RL right now

Not every company should train RL agents from scratch. Many shouldn’t. But you can borrow the patterns and get real value.

1) Write goals as measurable outcomes (not tasks)

A common mistake is setting goals like “respond to customers” or “automate outbound.” Those are tasks.

Multi-goal thinking pushes you to express goals as outcomes:

- “Resolve within 1 interaction”

- “Reduce refund rate by X% without lowering CSAT”

- “Increase trial-to-paid conversion by X% while keeping complaint rate below Y%”

You don’t need RL to benefit from this. You just need the discipline.
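One way to enforce that discipline is to write each goal down as a metric plus a threshold. The sketch below assumes a hypothetical `OutcomeGoal` type, and the target values are placeholders rather than recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OutcomeGoal:
    """A goal stated as a measurable outcome instead of a task."""
    name: str
    metric: str
    target: float
    higher_is_better: bool = True

    def is_met(self, observed: float) -> bool:
        """Compare an observed metric value against the target."""
        if self.higher_is_better:
            return observed >= self.target
        return observed <= self.target


# Placeholder targets; substitute your own X and Y.
first_contact = OutcomeGoal(
    "resolve_within_one_interaction", "first_contact_resolution_rate", 0.80
)
complaints = OutcomeGoal(
    "keep_complaints_low", "complaint_rate", 0.02, higher_is_better=False
)

print(first_contact.is_met(0.85))  # True
print(complaints.is_met(0.05))     # False
```

If a goal cannot be expressed this way, that is usually a sign it is still a task description.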

2) Treat your tools like an action space

In robotics, actions are torques, velocities, or gripper commands. In digital services, actions are tool calls:

- create ticket

- query CRM

- send message

- apply discount

- schedule follow-up

- request identity verification

If you want adaptive automation, define:

- what actions are allowed

- what data each action can use

- what constraints apply (privacy, policy, budget)

Once that’s explicit, you can start building systems that choose actions dynamically rather than following a rigid script.
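Here is a minimal sketch of what an explicit action space could look like in code. The `ToolAction` type, the field names, and the budget figures are assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolAction:
    """One allowed tool call, with the data it may read and its constraints."""
    name: str
    allowed_fields: frozenset          # data the action is permitted to use
    max_cost_usd: float = 0.0          # budget ceiling per call
    requires_verified_identity: bool = False


# Hypothetical action space; names and limits are illustrative.
ACTION_SPACE = {
    action.name: action
    for action in [
        ToolAction("query_crm", frozenset({"customer_id", "plan"})),
        ToolAction("send_message", frozenset({"customer_id", "email"})),
        ToolAction(
            "apply_discount",
            frozenset({"customer_id", "plan"}),
            max_cost_usd=25.0,
            requires_verified_identity=True,
        ),
    ]
}


def is_allowed(action_name, fields_used, cost_usd, identity_verified):
    """Check a proposed tool call against the declared action space."""
    action = ACTION_SPACE.get(action_name)
    if action is None:
        return False  # not in the action space at all
    if not set(fields_used) <= action.allowed_fields:
        return False  # touches data the action may not use
    if cost_usd > action.max_cost_usd:
        return False  # exceeds the budget constraint
    if action.requires_verified_identity and not identity_verified:
        return False  # policy constraint
    return True
```

Whatever chooses the action (a script, a model, an RL policy) goes through `is_allowed` first, so the constraints live in one place and every rejected call can be logged.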

3) Design reward signals you can defend

Reward design is where RL systems succeed or fail. In business automation, the “reward” is your KPI—and KPIs can be dangerous if they’re too narrow.

A better approach is multi-objective rewards with guardrails. For example:

- Primary: successful resolution

- Secondary: low handle time

- Constraints: zero policy violations, low escalation regret, protected-class fairness checks

This is how you keep “optimize the metric” from becoming “break the customer experience.”
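A rough sketch of that structure, assuming a hypothetical `episode_reward` function with illustrative weights: the constraint is checked first and overrides everything, and the secondary term is capped so it can never outweigh the primary one.

```python
def episode_reward(resolved, handle_time_min, policy_violations,
                   time_weight=0.01, max_time_min=60.0):
    """Multi-objective reward with a hard constraint.

    The weights are illustrative assumptions, chosen so the handle-time
    penalty (at most 0.6) can never exceed the value of a resolution (1.0).
    """
    # Constraint: any policy violation overrides every other signal.
    if policy_violations > 0:
        return -1.0
    # Primary objective: was the issue actually resolved?
    primary = 1.0 if resolved else 0.0
    # Secondary objective: a small, capped penalty for handle time.
    secondary = -time_weight * min(handle_time_min, max_time_min)
    return primary + secondary


fast_but_unresolved = episode_reward(resolved=False, handle_time_min=2, policy_violations=0)
slow_but_resolved = episode_reward(resolved=True, handle_time_min=45, policy_violations=0)
resolved_with_violation = episode_reward(resolved=True, handle_time_min=5, policy_violations=1)
```

Under these assumptions a slow resolution still scores above a fast non-resolution, and a violation scores below both. That ordering is the part you should be able to defend.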

4) Build evaluation suites, not one-off demos

Robotics research uses benchmark environments because single demos lie.

For digital automation, build a test suite that includes:

- edge cases (angry customer, missing info, contradictory records)

- policy-sensitive flows (refunds, identity, medical scheduling)

- seasonal variation (holiday shipping, end-of-year insurance changes)

- channel variation (email vs chat vs voice)

If you can’t measure robustness, you can’t ship responsibly.
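A test suite along these lines can start very small. The sketch below assumes a hypothetical `Scenario` record and a toy rule-based agent standing in for whatever system you are evaluating. It reports a pass rate per category, so a weak spot cannot hide behind a good overall average.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Scenario:
    name: str
    tag: str               # e.g. "edge_case", "policy_sensitive", "channel"
    request: dict
    expected_action: str


def run_suite(agent: Callable[[dict], str], scenarios):
    """Return the pass rate for each tag."""
    totals, passes = {}, {}
    for s in scenarios:
        totals[s.tag] = totals.get(s.tag, 0) + 1
        if agent(s.request) == s.expected_action:
            passes[s.tag] = passes.get(s.tag, 0) + 1
    return {tag: passes.get(tag, 0) / totals[tag] for tag in totals}


def toy_agent(request):
    """Stand-in agent: verifies identity when needed, otherwise just replies."""
    if request.get("identity_verified") is False:
        return "request_identity_verification"
    return "send_message"


# Hypothetical scenarios; a real suite would have many per tag.
SCENARIOS = [
    Scenario("refund without verification", "policy_sensitive",
             {"intent": "refund", "identity_verified": False},
             "request_identity_verification"),
    Scenario("angry customer over chat", "edge_case",
             {"intent": "complaint", "channel": "chat", "identity_verified": True},
             "create_ticket"),
    Scenario("simple question by email", "channel",
             {"intent": "question", "channel": "email", "identity_verified": True},
             "send_message"),
]

report = run_suite(toy_agent, SCENARIOS)
print(report)
```

Here the toy agent passes the policy-sensitive and channel cases but fails the edge case, which is exactly the kind of gap a single demo would not surface.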

A concrete scenario: holiday logistics meets multi-goal learning

Late December in the U.S. is peak stress for operations: returns, shipping delays, inventory miscounts, and customer support surges.

A single-goal automation system often collapses here because the goal effectively changes daily:

- One day: “Ship as fast as possible.”

- Next day: “Reduce refunds from late deliveries.”

- Next day: “Prioritize VIP customers and minimize complaints.”

Multi-goal RL as a research direction is essentially built for this: the same agent should handle multiple objectives with different priorities depending on context.

Even if you’re not training RL, you can mimic the structure:

- Define the goal vector (speed, cost, satisfaction, compliance)

- Build a policy layer that selects actions based on goal priority

- Monitor outcomes and adjust priorities when conditions shift

This is how automation stops being a brittle workflow and starts acting like an operations teammate.
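The three steps above can be sketched in a few lines. Everything here is an assumption for illustration: the action names, the per-action effect estimates, the priority weights, and the late-delivery threshold.

```python
GOALS = ("speed", "cost", "satisfaction", "compliance")

# Hypothetical estimates of how each action moves each goal, in [-1, 1].
ACTION_EFFECTS = {
    "expedite_shipping": {"speed": 0.9, "cost": -0.6, "satisfaction": 0.5, "compliance": 0.0},
    "batch_shipping": {"speed": -0.4, "cost": 0.8, "satisfaction": -0.1, "compliance": 0.0},
    "proactive_refund": {"speed": 0.0, "cost": -0.5, "satisfaction": 0.8, "compliance": 0.0},
}


def select_action(priorities):
    """Policy layer: pick the action with the best priority-weighted effect."""
    def score(action):
        effects = ACTION_EFFECTS[action]
        return sum(priorities.get(g, 0.0) * effects[g] for g in GOALS)
    return max(ACTION_EFFECTS, key=score)


def adjust_priorities(priorities, late_delivery_rate, threshold=0.05):
    """Monitoring hook: raise the speed priority when late deliveries spike."""
    updated = dict(priorities)
    if late_delivery_rate > threshold:
        updated["speed"] = 1.0
    return updated


# Goal vectors for two different operating conditions.
holiday_peak = {"speed": 1.0, "cost": 0.2, "satisfaction": 0.6, "compliance": 1.0}
january = {"speed": 0.2, "cost": 1.0, "satisfaction": 0.4, "compliance": 1.0}

print(select_action(holiday_peak))                      # expedite_shipping
print(select_action(january))                           # batch_shipping
print(select_action(adjust_priorities(january, 0.10)))  # expedite_shipping
```

The action logic never changes between December and January. Only the goal vector does, and that is the part operations staff can inspect and adjust.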

“People also ask” answers (the useful version)

Is multi-goal reinforcement learning only for robotics?

No. Robotics is where the failures are obvious, but the same approach fits any domain where systems must choose sequences of actions toward changing objectives—customer support, IT operations, fraud workflows, and marketing orchestration.

Do you need reinforcement learning to get multi-goal benefits?

Not always. Many teams can get much of the value by adopting goal-conditioned design: explicit objectives, explicit action spaces, strong evaluation, and constraint-first thinking.

What’s the biggest risk when applying RL-style automation to customer workflows?

Reward misalignment. If you optimize for speed alone, you’ll create worse outcomes (bad refunds, poor explanations, policy violations). The solution is multi-objective measurement plus hard constraints.

Where this fits in the “AI in Robotics & Automation” series

In this series, we’ve been tracking a pattern: the ideas that make robots reliable—robust perception, planning under uncertainty, safety constraints—also make digital services automation more trustworthy.

Multi-goal reinforcement learning is one of the most direct examples. It’s research aimed at getting agents to handle variety: different tasks, different priorities, different environments. That’s exactly what U.S. companies need as they move from “AI pilots” to automation that touches revenue, service quality, and compliance.

If you’re building AI-powered customer communication, marketing automation, or operational workflows, the best next step is to audit your system like a robotics team would:

- Are the goals explicit and measurable?

- Is the action space constrained and logged?

- Do you have a robustness test suite?

- Do you know what “good” looks like across multiple objectives?

Answer those honestly, and you’ll see where adaptive automation is realistic—and where it’s still just a demo.

Where do you need multi-goal behavior most right now: customer support, sales operations, or back-office workflows? That answer usually points to the highest-leverage place to start.