Multi-agent AI helps automation handle handoffs, conflicts, and customer comms at scale. See patterns, guardrails, and use cases for U.S. digital services.

Multi-Agent AI for Smarter Automation in US Services

Most automation breaks the moment work stops being “one user, one task.” The real world has handoffs, approvals, conflicts, and competing priorities—especially in U.S. digital services where customer expectations are high and margins are tight.

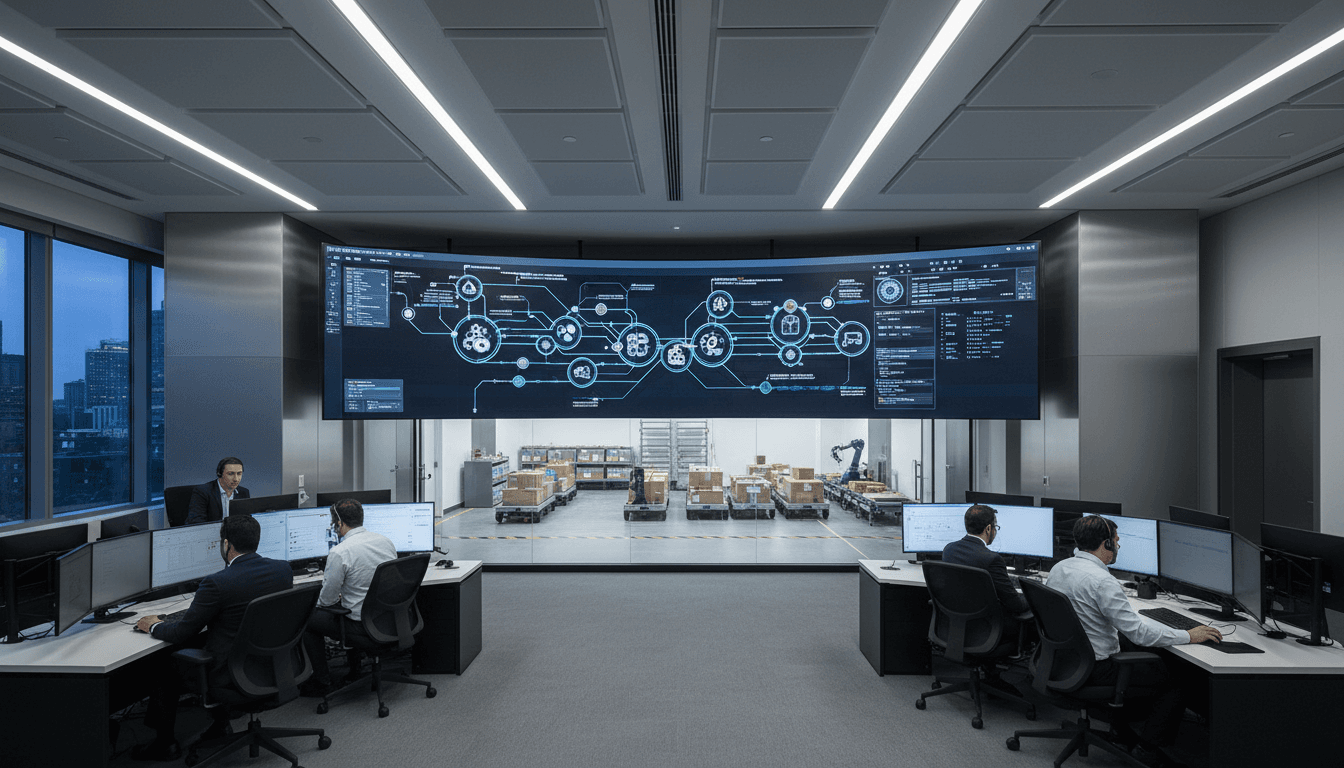

That’s why AI systems that can cooperate, compete, and communicate matter. They draw on a fast-moving area of research: multi-agent AI and reinforcement learning (RL) methods that train agents to coordinate, negotiate, and share information. In the AI in Robotics & Automation series, this is the missing bridge between “a helpful chatbot” and end-to-end, team-based automation that can run a contact center, a fulfillment operation, or a SaaS back office.

Here’s the practical angle: if your business workflows resemble a team sport, you should be thinking in agents, not single models.

Cooperation, competition, and communication: what it means for automation

The core idea is simple: multi-agent automation works when different AI components have clear roles, shared goals, and controlled incentives. Cooperation handles coordination, competition prevents complacency (or encourages specialization), and communication is how the system avoids duplicated work and missed handoffs.

In a typical U.S. digital services company, one “automation” actually contains multiple jobs:

- Collecting customer context (CRM, billing, product usage)

- Interpreting policy (refund rules, compliance checks)

- Taking action (issuing credits, resetting accounts, dispatching field techs)

- Logging, auditing, and notifying humans

Trying to do all of that with one agent often produces brittle behavior: it overreaches, misses edge cases, or gets stuck when it needs information it can’t safely access.

A multi-agent system splits this into cooperating roles (for example, a “policy agent,” “customer comms agent,” and “actions agent”). Done well, it’s closer to how high-performing ops teams actually work.

The stance I take: single-agent automation is overused

Single-agent tools can be great for narrow tasks. But once a workflow has:

- multiple stakeholders,

- multiple tools,

- competing goals (speed vs. risk), and

- real consequences,

…you’re better off designing for agent-to-agent coordination and human-in-the-loop checkpoints.

Why multi-agent AI fits U.S. SaaS and customer operations right now

The U.S. market rewards speed and responsiveness, but it also punishes mistakes. That tension is exactly where multi-agent design helps.

Customer communication automation is a prime example. A single model drafting a response can sound good—but it may not know the latest policy, the customer’s contract tier, or whether an outage is active. Multi-agent setups let you separate responsibilities:

- A retrieval agent fetches authoritative data (status, policy, account entitlements)

- A risk agent checks for compliance red flags (PII, regulated claims)

- A messaging agent writes in-brand responses and adapts tone

- A resolver agent decides the next action (refund, replacement, escalation)

This mirrors what many teams already do manually—except the handoffs become fast, consistent, and measurable.
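
To make the separation of responsibilities concrete, here is a minimal sketch of the four roles as plain Python functions passed along a shared ticket. Everything in it is a hypothetical stand-in: the `Ticket` fields, the `ACCOUNTS` lookup, and the keyword-based risk check are assumptions for illustration, not a real CRM or compliance API. I also run the resolver before the messaging agent so the draft reflects the chosen action; that ordering is a design choice, not a rule.

```python
from dataclasses import dataclass, field

@dataclass
class Ticket:
    customer_id: str
    text: str
    facts: dict = field(default_factory=dict)       # filled by the retrieval agent
    risk_flags: list = field(default_factory=list)  # filled by the risk agent
    next_action: str = ""                           # filled by the resolver agent
    draft: str = ""                                 # filled by the messaging agent

# Hypothetical in-memory stand-in for CRM and status-page lookups.
ACCOUNTS = {"c-1": {"tier": "pro", "outage_active": True}}

def retrieval_agent(t: Ticket) -> Ticket:
    # Copy authoritative account data onto the ticket.
    t.facts = dict(ACCOUNTS.get(t.customer_id, {}))
    return t

def risk_agent(t: Ticket) -> Ticket:
    # Toy compliance check: flag tickets that mention an SSN.
    if "ssn" in t.text.lower():
        t.risk_flags.append("pii")
    return t

def resolver_agent(t: Ticket) -> Ticket:
    # Decide the next action from flags and facts only.
    if t.risk_flags:
        t.next_action = "escalate"
    elif t.facts.get("outage_active"):
        t.next_action = "send_outage_notice"
    else:
        t.next_action = "reply"
    return t

def messaging_agent(t: Ticket) -> Ticket:
    # Write the customer-facing text for the action already chosen.
    templates = {
        "escalate": "A specialist will follow up with you shortly.",
        "send_outage_notice": "We are working on an active outage affecting your account.",
        "reply": "Thanks for reaching out. Here is what we found.",
    }
    t.draft = templates[t.next_action]
    return t

PIPELINE = [retrieval_agent, risk_agent, resolver_agent, messaging_agent]

def handle(t: Ticket) -> Ticket:
    for agent in PIPELINE:
        t = agent(t)
    return t
```

Each function touches only its own fields on the ticket, which is what makes the handoffs easy to measure and audit.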

Seasonal relevance (December): peak load exposes weak automation

Late December is a stress test in the U.S.: holiday shipping issues, subscription churn after promotions, and staffing gaps. If your automation fails under surge conditions, it’s often because it doesn’t coordinate well. Multi-agent patterns help because they:

- reduce single points of failure (one agent going “off the rails”)

- create explicit checks before taking irreversible actions

- allow parallelism (different agents work simultaneously)
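
A rough sketch of the parallelism and failure-isolation points, assuming each agent is just a function that takes a customer ID and returns a dict. The agent names and return values are made up for illustration; the thread pool comes from Python's standard `concurrent.futures` module.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical agents; real ones would call a status page, policy store, or CRM.
def status_agent(customer_id):
    return {"outage_active": False}

def policy_agent(customer_id):
    return {"refund_window_days": 30}

def gather_context(customer_id, agents):
    """Run agents in parallel; a failing agent is recorded, not fatal."""
    context, failed = {}, []
    with ThreadPoolExecutor(max_workers=len(agents)) as pool:
        futures = {pool.submit(agent, customer_id): agent.__name__ for agent in agents}
        for future, name in futures.items():
            try:
                context.update(future.result(timeout=5))
            except Exception:
                # One agent going off the rails does not block the others.
                failed.append(name)
    return context, failed
```

Whatever consumes `failed` can then decide whether the missing context is serious enough to stop before an irreversible action.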

Reinforcement learning: how agents learn to work as a team

Multi-agent behavior doesn’t appear by magic. It’s usually shaped through reinforcement learning (RL) or RL-adjacent training where agents are rewarded for outcomes (resolution rate, time-to-resolution, customer satisfaction proxies) rather than just “sounding right.”

A useful definition you can share internally:

Reinforcement learning trains AI by rewarding decisions that improve outcomes, not just predictions that match past text.

In customer ops or workflow automation, RL-style thinking translates to:

- Define the goal precisely (e.g., resolve in one touch while minimizing policy violations)

- Define the penalties (e.g., unauthorized refunds, PII exposure, incorrect claims)

- Run controlled simulations (sandbox systems, synthetic tickets, staged outages)

- Gradually increase autonomy as the system proves reliability
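
Here is one way the goal and penalties above could be written down as a reward function. This is a sketch of the scoring idea only, not a training loop, and the weights are illustrative assumptions you would tune against your own risk tolerance. The `Episode` fields and the `may_increase_autonomy` helper are hypothetical names.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    resolved: bool
    touches: int
    unauthorized_refund: bool = False
    pii_exposed: bool = False
    incorrect_claim: bool = False

def reward(ep: Episode) -> float:
    """Score one simulated ticket: reward outcomes, penalize violations."""
    r = 0.0
    if ep.resolved:
        r += 1.0
        if ep.touches == 1:
            r += 0.5  # bonus for one-touch resolution
    # Penalties are deliberately larger than any achievable bonus.
    r -= 5.0 * ep.unauthorized_refund
    r -= 10.0 * ep.pii_exposed
    r -= 2.0 * ep.incorrect_claim
    return r

def may_increase_autonomy(episodes, min_mean_reward=1.0) -> bool:
    """Gate autonomy on a sandbox batch: no serious violations, good average."""
    if not episodes:
        return False
    serious = any(e.unauthorized_refund or e.pii_exposed for e in episodes)
    mean = sum(reward(e) for e in episodes) / len(episodes)
    return not serious and mean >= min_mean_reward
```

Making the penalties outweigh the bonuses means an agent cannot "buy" a policy violation with a fast resolution.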

Cooperation vs. competition: both belong in production systems

“Cooperate” sounds safe, but competition is useful when you want robustness.

Examples that work in practice:

- Two independent agents propose solutions; a third “judge” agent selects or merges them.

- A “red team agent” tries to find policy loopholes or unsafe outputs before messages go out.

- A “cost agent” pushes for cheaper actions (self-serve flows), while a “retention agent” pushes for customer-saving actions—then you arbitrate.

The result is less groupthink and fewer silent failures.
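
The propose, challenge, and judge patterns can be combined in a few lines. The sketch below assumes each proposer returns a dict with a `cost` and a `retention_value`; those fields, the `max_credit` cap, and the simple "value minus cost" score are all assumptions chosen to keep the example small.

```python
def cost_agent(case):
    # Pushes for the cheapest action.
    return {"action": "self_serve_link", "cost": 0.0, "retention_value": 5.0}

def retention_agent(case):
    # Pushes for the customer-saving action.
    return {"action": "goodwill_credit", "cost": 20.0, "retention_value": 40.0}

def red_team(proposal, case):
    """Return True if the proposal survives the policy check."""
    return proposal["cost"] <= case["max_credit"]

def judge(case, proposers):
    """Collect independent proposals, drop unsafe ones, pick the best."""
    candidates = [propose(case) for propose in proposers]
    safe = [c for c in candidates if red_team(c, case)]
    if not safe:
        # Nothing passed review, so hand off instead of guessing.
        return {"action": "escalate_to_human", "cost": 0.0, "retention_value": 0.0}
    return max(safe, key=lambda c: c["retention_value"] - c["cost"])
```

With a `max_credit` of 25 the judge picks the goodwill credit; drop the cap to 10 and the red-team check removes that option, so the self-serve link wins.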

Real-world multi-agent workflows (beyond chat)

If your mental image is “multiple chatbots talking,” you’re underselling it. In AI-driven automation, agents are often services with narrow permissions.

Below are three patterns I’ve seen map cleanly to U.S. digital services and to robotics/automation thinking.

Pattern 1: The “dispatch team” for field service and logistics

Answer first: Multi-agent dispatch improves throughput by separating planning, constraints, and communication.

A field service workflow (telecom installs, appliance repairs, medical device maintenance) can be decomposed into:

- Scheduler agent: builds the route plan

- Constraints agent: enforces SLAs, technician skills, inventory, driving limits

- Customer comms agent: sends arrival windows and updates

- Exception agent: re-plans when cancellations or delays occur

This resembles robotics task allocation: assign jobs, respect constraints, re-plan under uncertainty.
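
As a small illustration of keeping planning and constraints separate, here is a greedy assignment sketch. The `Job` and `Tech` shapes are hypothetical, only two constraints are checked (skill match and remaining hours), and a production scheduler would use a proper optimizer rather than first-fit.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Job:
    job_id: str
    skill: str
    hours: float

@dataclass
class Tech:
    name: str
    skills: set
    hours_left: float

def constraints_agent(job: Job, tech: Tech) -> bool:
    """Only answers yes or no; it never builds the plan itself."""
    return job.skill in tech.skills and job.hours <= tech.hours_left

def scheduler_agent(jobs, techs):
    """Greedy first-fit, longest jobs first. Mutates techs' hours_left."""
    plan, unassigned = {}, []
    for job in sorted(jobs, key=lambda j: -j.hours):
        tech = next((t for t in techs if constraints_agent(job, t)), None)
        if tech is None:
            unassigned.append(job.job_id)  # left for the exception agent
        else:
            tech.hours_left -= job.hours
            plan[job.job_id] = tech.name
    return plan, unassigned
```

Anything that lands in `unassigned` is exactly the input an exception agent would pick up for re-planning or a customer update.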

Pattern 2: The “back office pod” for billing, refunds, and disputes

Answer first: Multi-agent back office automation reduces errors by forcing explicit validation steps.

A billing dispute isn’t just “reply nicely.” It involves:

- verifying identity

- checking ledger events

- applying policy

- executing a credit

- logging an audit trail

Give each step its own agent with minimal permissions. Then require consensus or a gate before money moves.
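
A gate like that can be very small. In this sketch the three checking agents are stubs that read pre-computed flags from a hypothetical `Dispute` record; in practice each would call its own system with its own credentials. The gate requires unanimous agreement, which is one possible consensus rule among several.

```python
from dataclasses import dataclass, field

@dataclass
class Dispute:
    customer_id: str
    amount: float
    identity_verified: bool
    ledger_has_charge: bool
    within_policy: bool
    audit_log: list = field(default_factory=list)

# Stub agents: each answers one question and nothing else.
def identity_agent(d): return d.identity_verified
def ledger_agent(d): return d.ledger_has_charge
def policy_agent(d): return d.within_policy

CHECKS = [("identity", identity_agent), ("ledger", ledger_agent), ("policy", policy_agent)]

def credit_gate(d: Dispute) -> bool:
    """Issue the credit only if every check passes; log either way."""
    results = {name: agent(d) for name, agent in CHECKS}
    d.audit_log.append(("checks", results))
    if all(results.values()):
        d.audit_log.append(("credit_issued", d.amount))
        return True
    failed = [name for name, ok in results.items() if not ok]
    d.audit_log.append(("credit_blocked", failed))
    return False
```

The audit log records the blocked attempts as well as the successful ones, which is usually what an auditor asks for first.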

Pattern 3: The “content supply chain” for marketing ops

Answer first: Multi-agent marketing automation scales output without sacrificing governance.

Marketing teams want more content, faster. Legal wants fewer risks. Product wants accuracy. A multi-agent workflow can look like:

- Research agent: summarizes internal docs and product notes

- Claims agent: flags risky statements and unsupported metrics

- SEO agent: proposes keywords and structure

- Brand agent: enforces tone and terminology

- Publisher agent: formats, tags, and schedules

This maps directly onto the broader point of this piece: AI communication and decision-making processes can automate marketing and customer communication at scale.

How to design multi-agent AI that doesn’t create chaos

The failure mode of multi-agent systems is predictable: too many agents, unclear authority, circular debates, and no auditability.

Here’s the approach that actually works.

Start with a workflow map, not a model choice

Answer first: Your best multi-agent design comes from your handoffs, not your prompts.

Pick one high-volume workflow and map:

- inputs (tickets, events, calls, web forms)

- decisions (approve/deny, refund/escalate)

- actions (API calls, emails, updates)

- required evidence (policy sections, account facts)

Then assign agents to roles based on where humans currently specialize.
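
If it helps, the map can live as plain data before any model is involved. The sketch below uses a made-up refund workflow with hypothetical step and agent names, plus a small helper that lists steps nobody owns yet.

```python
# Hypothetical workflow map for a single high-volume workflow.
WORKFLOW_MAP = {
    "workflow": "refund_request",
    "inputs": ["ticket", "web_form"],
    "decisions": ["approve_or_deny", "refund_or_escalate"],
    "actions": ["billing_api_call", "customer_email", "crm_update"],
    "evidence": ["refund_policy_section", "account_facts"],
}

# Roles assigned where humans currently specialize (illustrative only).
ROLE_ASSIGNMENTS = {
    "retrieval_agent": ["ticket", "web_form", "account_facts"],
    "policy_agent": ["approve_or_deny", "refund_policy_section"],
    "resolver_agent": ["refund_or_escalate"],
    "actions_agent": ["billing_api_call", "crm_update"],
    "comms_agent": ["customer_email"],
}

def unowned_steps(workflow_map, assignments):
    """Return every mapped step that no agent is responsible for."""
    owned = {step for steps in assignments.values() for step in steps}
    sections = ("inputs", "decisions", "actions", "evidence")
    all_steps = [s for key in sections for s in workflow_map[key]]
    return [s for s in all_steps if s not in owned]
```

An empty result from `unowned_steps` is a cheap sanity check that every handoff has an owner before you write a single prompt.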

Give agents “least privilege” tool access

Answer first: Permissions are the safety boundary in AI automation.

A messaging agent shouldn’t have refund permissions. A refund agent shouldn’t improvise policy language to customers. Keep capabilities narrow and composable.

Practical guardrails:

- allow-list tools per agent

- restrict which fields can be read/written

- require “approval tokens” for sensitive actions

- log every tool call with a reason string
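
A minimal sketch of three of these guardrails (allow-lists, approval tokens, and logged reasons) wrapped around a single `call_tool` function. The agent names, tool names, and log format are hypothetical, and the token check here only tests for presence; a real system would verify the token against whatever issued it. Field-level read/write restrictions are left out to keep the example short.

```python
# Hypothetical allow-list: which tools each agent may call.
ALLOWED_TOOLS = {
    "messaging_agent": {"send_email"},
    "refund_agent": {"read_ledger", "issue_refund"},
}
SENSITIVE_TOOLS = {"issue_refund"}  # these also need an approval token
TOOL_LOG = []

class ToolDenied(Exception):
    pass

def call_tool(agent, tool, reason, approval_token=None):
    """Check permissions, log the attempt with its reason, then run the tool."""
    allowed = tool in ALLOWED_TOOLS.get(agent, set())
    approved = tool not in SENSITIVE_TOOLS or approval_token is not None
    TOOL_LOG.append({
        "agent": agent,
        "tool": tool,
        "reason": reason,
        "allowed": allowed and approved,
    })
    if not allowed:
        raise ToolDenied(f"{agent} may not call {tool}")
    if not approved:
        raise ToolDenied(f"{tool} requires an approval token")
    return f"{tool} executed"  # placeholder for the real side effect
```

Denied attempts are logged too, so a messaging agent that keeps asking for refund access shows up in the audit trail instead of failing silently.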

Add an arbiter when goals conflict

Answer first: Conflict resolution must be designed, not hoped for.

When speed conflicts with risk, decide who wins:

- Arbiter agent chooses based on a utility score

- Human checkpoint triggers above thresholds (refund > $X, regulated accounts)

- Escalation policy handles uncertainty (“when in doubt, ask”)—yes, this is still valuable automation
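
The three rules above fit in one function. In this sketch, `refund_limit` stands in for the unspecified $X, and both it and `min_confidence` are placeholder values, not recommendations. The utility score is an assumed "speed gain minus risk" on a 0 to 1 scale.

```python
def arbitrate(options, refund_amount, regulated, confidence,
              refund_limit=100.0, min_confidence=0.7):
    """Pick an action, or route to a human when thresholds are crossed.

    refund_limit and min_confidence are illustrative placeholders.
    """
    # Human checkpoint: large refunds and regulated accounts always stop here.
    if regulated or refund_amount > refund_limit:
        return "human_checkpoint"
    # Escalation policy: when in doubt, ask.
    if confidence < min_confidence:
        return "ask_human"
    # Arbiter: highest utility wins (speed gain minus risk).
    best = max(options, key=lambda o: o["speed_gain"] - o["risk"])
    return best["action"]

options = [
    {"action": "auto_refund", "speed_gain": 0.9, "risk": 0.6},
    {"action": "offer_credit", "speed_gain": 0.6, "risk": 0.1},
]
```

Calling `arbitrate(options, refund_amount=40, regulated=False, confidence=0.9)` selects the credit offer, because its lower risk outweighs the faster refund under this scoring.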

Measure outcomes, not vibes

Multi-agent systems invite the illusion of progress because they “talk” a lot. Track metrics that reflect business value:

- first-contact resolution rate

- time-to-resolution

- escalation rate by category

- policy violation rate

- customer satisfaction proxy (survey, sentiment, churn)

- cost per resolved case
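
Most of these metrics fall out of a simple pass over closed cases. The sketch below assumes each case is a dict with hypothetical keys (`category`, `resolved`, `touches`, `hours`, `escalated`, `violation`, `cost`); the satisfaction proxy is omitted because it depends on your survey or sentiment source.

```python
from collections import defaultdict

def summarize(cases):
    """Compute outcome metrics from a non-empty list of case dicts."""
    if not cases:
        raise ValueError("need at least one case")
    n = len(cases)
    resolved = [c for c in cases if c["resolved"]]

    # Escalation counts per category: [escalated, total].
    by_cat = defaultdict(lambda: [0, 0])
    for c in cases:
        by_cat[c["category"]][0] += c["escalated"]
        by_cat[c["category"]][1] += 1

    return {
        "first_contact_resolution_rate":
            sum(c["resolved"] and c["touches"] == 1 for c in cases) / n,
        "mean_hours_to_resolution":
            sum(c["hours"] for c in resolved) / len(resolved) if resolved else None,
        "escalation_rate_by_category":
            {cat: esc / total for cat, (esc, total) in by_cat.items()},
        "policy_violation_rate": sum(c["violation"] for c in cases) / n,
        # Total spend divided by resolved cases, so failed work still counts.
        "cost_per_resolved_case":
            sum(c["cost"] for c in cases) / len(resolved) if resolved else None,
    }
```

Dividing total cost by resolved cases, rather than all cases, keeps the number honest when agents burn effort on tickets they never close.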

People also ask: practical questions before you adopt multi-agent automation

Do I need multi-agent AI if I already use an AI chatbot?

If your chatbot only drafts replies, maybe not. If it’s expected to take actions across systems (CRM, billing, provisioning) and coordinate approvals, multi-agent architecture is usually safer and easier to scale.

What’s the biggest risk in multi-agent systems?

Unclear authority and permissions. If multiple agents can act on the same system without a clean policy for who decides, you’ll see inconsistent outcomes and messy audits.

How does this relate to robotics and automation?

Robotics has decades of work on task allocation, coordination, and planning under uncertainty. Multi-agent AI for digital services borrows the same concepts—just applied to software actions instead of physical actuators.

Where this is headed in 2026: agent teams as the new workflow engine

By 2026, I expect many U.S. SaaS platforms to treat multi-agent orchestration like today’s workflow automation: standard, configurable, and deeply integrated. The differentiator won’t be who has the flashiest model. It’ll be who can deploy reliable agent teams with strong governance.

If you’re building or buying AI-powered automation right now, prioritize systems that:

- support role-based agent design

- provide auditable tool usage

- handle conflict and escalation predictably

- improve through feedback loops (including RL-style evaluation)

That’s the path to scaling customer communication and operational workflows without scaling headcount at the same rate.

The open question worth debating internally: which of your workflows already behave like a team sport—and what would happen if you redesigned them as an agent team instead of a single AI assistant?