Meta-learning helps robots adapt fast—even against stronger opponents. See what robot wrestling teaches U.S. teams building scalable AI automation.

Meta-Learning Robots That Adapt Under Pressure

A robot that only performs well in perfect conditions isn’t “intelligent”—it’s fragile. Most automation failures in the real world look like this: a slightly different layout in a warehouse aisle, an unexpected object on a conveyor, a camera that’s smudged, a gripper that’s wearing down, a competitor’s bot that plays defense differently than yesterday.

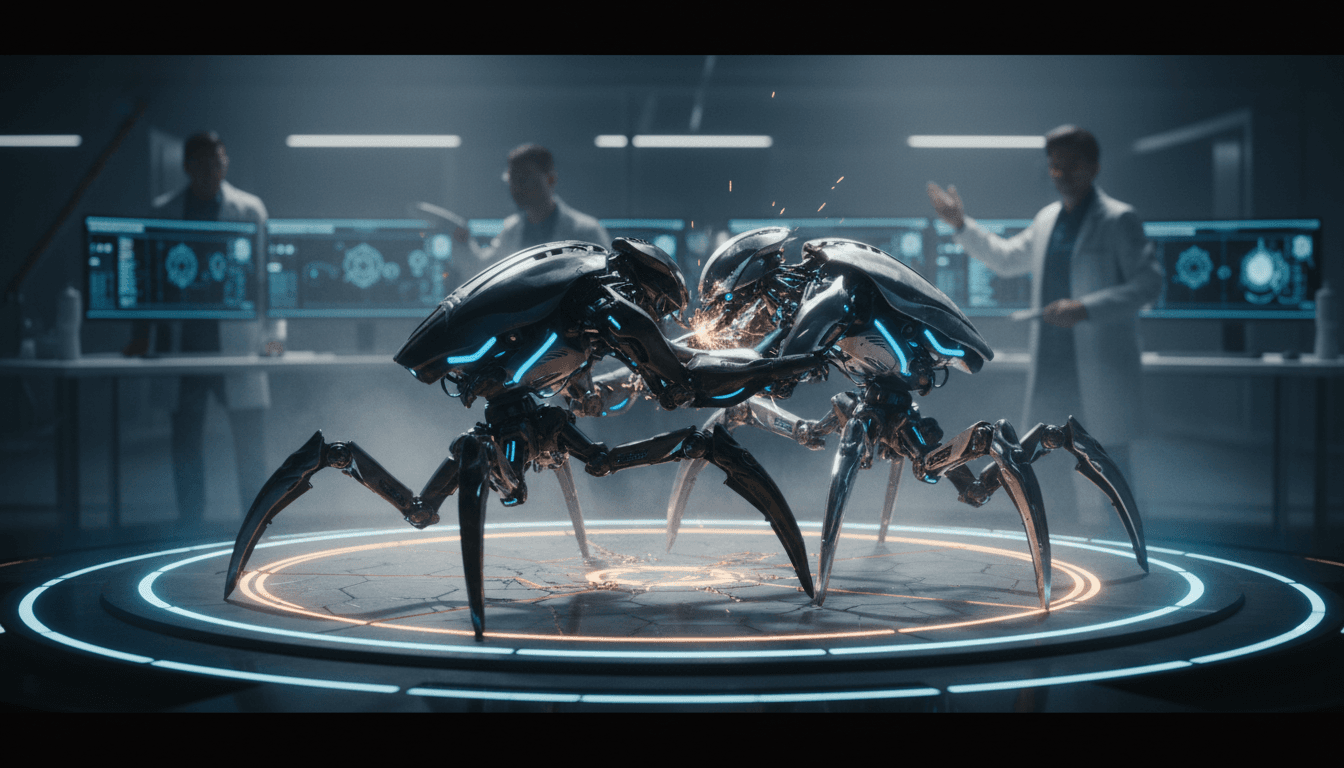

OpenAI’s 2017 “meta-learning for wrestling” research is a useful case study because it is essentially a stress test for adaptation. The setup is playful (simulated robot bodies trying to shove each other off a platform), but the lesson is serious: training an AI system to learn quickly during deployment is one of the most practical paths to reliable robotics and scalable digital services.

This post is part of our AI in Robotics & Automation series, where we track the ideas that turn “cool demos” into systems U.S. companies can actually ship, support, and scale.

Meta-learning: training robots to learn fast, not just perform

Meta-learning is about speed of adaptation. Instead of producing a single policy that tries to handle every situation, the goal is to train a policy that starts from a strong default and then improves rapidly with a small amount of new experience.

In the wrestling work, OpenAI built on Model-Agnostic Meta-Learning (MAML). The key intuition of MAML is straightforward: you don't train parameters to be good on average. Instead, you train an initialization chosen so that a few gradient updates on a small amount of fresh experience from a new task produce a strong policy for that task.
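Here is a minimal Python sketch of MAML's two-level structure. It uses a hypothetical toy problem, not OpenAI’s robot setup, which used reinforcement learning on simulated bodies.

- **Tasks and model:** each task is 1-D regression y = a·x with its own slope a, and the model is a single weight w.
- **Inner loop:** takes one gradient step on a task’s support data.
- **Outer loop:** updates the shared starting point w using the loss on held-out query data after that step. It differentiates through the inner update to do so.

All function names and hyperparameters are illustrative assumptions.

```python
import random

# Hypothetical toy task family: y = a * x, with a different slope `a` per task.
# The "model" is a single weight w that predicts w * x.


def sample_task(rng, n=10):
    """Return a task slope plus separate support (adaptation) and query (evaluation) inputs."""
    a = rng.uniform(1.0, 3.0)
    support = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    query = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return a, support, query


def loss(w, a, xs):
    """Mean squared error of w * x against the task target a * x."""
    return sum(((w - a) * x) ** 2 for x in xs) / len(xs)


def grad(w, a, xs):
    """dL/dw of the loss above."""
    return sum(2 * (w - a) * x * x for x in xs) / len(xs)


def adapt(w, a, xs, inner_lr):
    """Inner loop: one gradient step on a task's support data."""
    return w - inner_lr * grad(w, a, xs)


def meta_grad(w, a, support, query, inner_lr):
    """Gradient of the post-adaptation query loss with respect to the initial w.

    Chain rule through the inner step: dL_query(w')/dw = L_query'(w') * (1 - inner_lr * H),
    where H = d2L_support/dw2 (a constant here because the loss is quadratic in w).
    """
    w_adapted = adapt(w, a, support, inner_lr)
    hessian = sum(2 * x * x for x in support) / len(support)
    return grad(w_adapted, a, query) * (1 - inner_lr * hessian)


def meta_train(steps=500, tasks_per_step=4, inner_lr=0.1, outer_lr=0.05, seed=0):
    """Outer loop: move the shared initialization so one inner step works well on new tasks."""
    rng = random.Random(seed)
    w = 0.0
    for _ in range(steps):
        batch = [sample_task(rng) for _ in range(tasks_per_step)]
        g = sum(meta_grad(w, a, s, q, inner_lr) for a, s, q in batch) / tasks_per_step
        w -= outer_lr * g
    return w


# Usage: meta-train, then adapt to an unseen task with a single inner step.
w0 = meta_train()
a, support, query = sample_task(random.Random(42))
print("query loss before adaptation:", loss(w0, a, query))
print("query loss after one step:  ", loss(adapt(w0, a, support, 0.1), a, query))
```

Because the loss is quadratic in w, the second-order factor (1 - inner_lr * H) is exact here. Practical MAML implementations usually obtain it from automatic differentiation instead. The second printed loss should be lower than the first, since one inner step moves w toward the new task's slope.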

Here’s the business translation I use when explaining it to teams:

A non–meta-learning system ships with “one brain.” A meta-learning system ships with “a brain plus a playbook for updating itself.”

That playbook matters in robotics and automation because you never control all the variables:

- The environment changes (lighting, friction, clutter, traffic patterns)

- The “rules” change (new SKUs, new packaging, different human workflows)

- The robot changes (battery sag, sensor drift, motor wear, partial failures)

A model that can adapt during execution has a better chance of staying useful between maintenance windows and software releases.

What OpenAI changed: learning across pairs of environments

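The research extended stock MAML by optimizing against pairs of environments rather than single environments. Put simply, the agent does not learn “how to get good at Task A after a few updates.” Instead, it learns “how to use experience from one environment to do well in the next, related one.” That is exactly the skill needed when the task shifts from round to round.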

That matters because real operations rarely look like independent tasks. They look like continuous variation—today’s warehouse is basically yesterday’s warehouse, but not identical.
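Below is a minimal sketch of the pairwise idea. It rests on several stated assumptions:

- **Environment:** a hypothetical stream of toy regression rounds whose slope drifts slightly between rounds.
- **Adaptation:** the policy for round i+1 is one gradient step from a meta-learned starting point, using round i’s data.
- **Meta-objective:** the loss on round i+1.

Meta-learning the inner step size `alpha` alongside the starting point is an illustrative choice for this sketch. It is not a claim about the paper’s exact configuration.

```python
import random

# Hypothetical nonstationary toy: each round is a regression task y = a_t * x whose
# slope takes a small random-walk step between rounds, so consecutive tasks are related.


def mse(w, a, xs):
    return sum(((w - a) * x) ** 2 for x in xs) / len(xs)


def mse_grad(w, a, xs):
    return sum(2 * (w - a) * x * x for x in xs) / len(xs)


def drifting_rounds(rng, n_rounds, drift=0.05, n_points=10):
    """Return [(a_t, xs_t), ...] where a_{t+1} = a_t + a small random step."""
    a = rng.uniform(1.0, 3.0)
    rounds = []
    for _ in range(n_rounds):
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n_points)]
        rounds.append((a, xs))
        a += rng.uniform(-drift, drift)
    return rounds


def adapted_weight(w, alpha, prev_round):
    """Policy for the next round: one gradient step from w on the previous round's data."""
    a_prev, xs_prev = prev_round
    return w - alpha * mse_grad(w, a_prev, xs_prev)


def pair_grads(w, alpha, prev_round, next_round):
    """Gradients of L_next(adapted_weight(w, alpha, prev_round)) w.r.t. w and alpha."""
    a_prev, xs_prev = prev_round
    a_next, xs_next = next_round
    g_prev = mse_grad(w, a_prev, xs_prev)
    g_next = mse_grad(w - alpha * g_prev, a_next, xs_next)
    h_prev = sum(2 * x * x for x in xs_prev) / len(xs_prev)  # d(g_prev)/dw
    return g_next * (1 - alpha * h_prev), -g_next * g_prev


def meta_train_on_pairs(episodes=200, n_rounds=6, lr=0.05, seed=0):
    rng = random.Random(seed)
    w, alpha = 0.0, 0.1
    for _ in range(episodes):
        rounds = drifting_rounds(rng, n_rounds)
        # Train on consecutive pairs (T_i, T_{i+1}) instead of on single tasks.
        for prev_round, next_round in zip(rounds, rounds[1:]):
            d_w, d_alpha = pair_grads(w, alpha, prev_round, next_round)
            w -= lr * d_w
            alpha -= lr * d_alpha
    return w, alpha


w, alpha = meta_train_on_pairs()
print("meta-learned start:", w, "meta-learned step size:", alpha)
```

Because consecutive rounds are similar, the meta-gradient pushes `alpha` well above its initial 0.1. In effect, the learner discovers that the last round’s experience is worth trusting. If rounds were unrelated, that pressure would weaken.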

Why robot “wrestling” is a great proxy for real-world automation

Wrestling forces non-scripted interaction. Unlike many robotics benchmarks where the environment is passive (pick up object, move to bin), wrestling is an adversarial, dynamic setting. The opponent changes tactics, blocks strategies, and punishes predictable behavior.

In OpenAI’s setup, agents competed in multi-round matches and were allowed to update their policy parameters between rounds based on what they experienced. They tested three body types:

- Ant (4 legs)

- Bug (6 legs)

- Spider (8 legs)

The punchline: agents that could adapt between rounds outcompeted fixed-policy agents—even when facing stronger opponents.

That’s the part SaaS and automation leaders should care about. Most production failures aren’t about average performance. They’re about what happens when conditions drift, opponents respond, or inputs change.

The “stronger opponent” result is the real headline

The research shows a meta-learning agent can quickly defeat a stronger non-meta-learning agent.

If you’ve ever watched a rigid automation rule set get “figured out” by reality—operators taking shortcuts, edge cases piling up, upstream data drifting—you’ve seen the same phenomenon. A fixed policy can be strong in its comfort zone, but brittle when the game changes.

Meta-learning flips the advantage: even if the meta-learning agent starts out weaker, it improves faster once it’s on the field.

Continuous adaptation in practice: what it changes for U.S. tech teams

Continuous adaptation changes your operating model. It pushes you from “train, ship, pray” to “train, ship, learn safely.”

In U.S. technology and digital services, this matters because more companies are building AI into:

- Warehouse and logistics automation

- Healthcare operations (inventory, lab workflows, device handling)

- Manufacturing inspection and handling

- Field robotics (utilities, agriculture, construction)

- Customer-facing automation that depends on real-world inputs (images, speech, sensor feeds)

Here is a practical signal. The moment a company asks “How do we scale robotics across 20 sites without 20 different models?” you’re in meta-learning territory.

Where the wins show up (and where they don’t)

Where meta-learning helps most:

- Multi-site deployments: Each facility has its own quirks. Fast adaptation reduces site-by-site rework.

- Long-tail variability: Rare but costly edge cases (unexpected objects, unusual friction, new packaging).

- Adversarial or interactive settings: Any scenario where other agents (or humans) respond to the system.

- Hardware drift and partial faults: Wear-and-tear is predictable; failures aren’t.

Where it’s not a free lunch:

- Safety-critical constraints: You need guardrails so adaptation doesn’t “learn” unsafe actions.

- Wildly new environments: The original research notes adaptation works as long as the new environment doesn’t diverge too much from training.

- Evaluation complexity: Testing must cover not just performance, but how the model updates.

Adaptation to malfunction: the underappreciated robotics requirement

Robots break in boring ways. A joint gets sticky. A leg loses torque. A sensor starts lying. Cables loosen. In the OpenAI experiments, the meta-learning approach showed promise for adapting even when the agent’s own body changes—like limbs losing functionality.

That’s huge for real operations because it points to a practical reliability strategy: instead of failing hard when hardware degrades, the system degrades gracefully and compensates.

In an automation budget, that shows up as:

- Less downtime

- Fewer emergency interventions

- Longer intervals between recalibration

It also changes how you think about maintenance. You stop treating “model accuracy” as a static number and start treating model upkeep as a control loop: detect drift → adapt within bounds → escalate when bounds are exceeded.
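The detect → adapt within bounds → escalate loop can be sketched as a small state machine over a monitored error signal. This is a hypothetical illustration. The class name, the rolling-mean drift measure, and every threshold are assumptions chosen for readability. A production system would use a drift test suited to its own data.

```python
from collections import deque
from statistics import mean


class DriftControlLoop:
    """Hypothetical detect -> adapt within bounds -> escalate loop over an error signal.

    Window size and thresholds are illustrative placeholders, not recommended values.
    """

    def __init__(self, baseline_error, window=20, adapt_threshold=0.1, escalate_threshold=0.5):
        self.baseline_error = baseline_error
        self.recent = deque(maxlen=window)
        self.adapt_threshold = adapt_threshold
        self.escalate_threshold = escalate_threshold

    def step(self, error):
        """Record one observed error and return the action for this step."""
        self.recent.append(error)
        if len(self.recent) < self.recent.maxlen:
            return "warming_up"  # not enough evidence to judge drift yet
        drift = mean(self.recent) - self.baseline_error
        if drift > self.escalate_threshold:
            return "escalate"  # outside the envelope: hand off to people or retraining
        if drift > self.adapt_threshold:
            return "adapt"  # inside the envelope: allow a small, bounded update
        return "ok"


# Usage: a stable period, then moderate drift, then a large shift.
loop = DriftControlLoop(baseline_error=0.2, window=5)
for err in [0.2] * 5 + [0.4] * 5 + [1.0] * 5:
    action = loop.step(err)
print(action)
```

The design choice worth copying is the two-threshold envelope. Small drift triggers a bounded update, while large drift bypasses adaptation entirely and goes to a human or a retraining pipeline.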

A simple way to frame it for stakeholders

If you’re pitching AI robotics internally, here’s the line that tends to land:

The goal isn’t a robot that never encounters surprises. The goal is a robot that recovers from surprises faster than new ones arrive.

That’s what meta-learning is trying to buy you.

How this maps to SaaS and digital services (yes, really)

Robot wrestling might feel far from software platforms, but the core pattern is identical: systems that get better after deployment.

Consider a few digital-service analogs in the U.S. market:

- Fraud and abuse prevention: Attackers adapt. Fixed rules get outdated fast.

- Customer support automation: New products and policies appear constantly.

- Personalization: Preferences shift by season, region, and even week-to-week behavior.

- Security detection: Adversaries probe what your system flags and what it ignores.

The operational question is the same as in wrestling: are you shipping a fixed policy, or are you shipping a system that can update quickly from fresh experience?

What “learning on the fly” should look like in production

Teams get nervous about online learning because it can go wrong. That’s fair. The answer isn’t “don’t adapt,” it’s “adapt with controls.” In practice, I’ve found the most robust approach looks like this:

- Bounded adaptation: limit how far parameters can move within a window.

- Shadow evaluation: test updates on held-out or simulated scenarios before promoting.

- Rollback + auditability: every update is reversible and logged.

- Human-in-the-loop triggers: route uncertain or high-impact cases to review.

- Environment monitoring: detect when the world diverges beyond what the model was trained to handle.

Robotics adds extra guardrails (physical safety, collision constraints), but the philosophy carries over.
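The sketch below shows how the first four controls can wrap a single update step. It assumes two things:

- The model's state is a plain list of floats.
- The caller supplies a `shadow_eval` function that scores parameters on held-out or simulated scenarios (lower is better).

`GuardedModel` and its fields are hypothetical names for illustration, not any particular library’s API. Environment monitoring would sit upstream, deciding when `propose_update` gets called at all.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class GuardedModel:
    """Hypothetical wrapper: bounded, shadow-evaluated, reversible, logged parameter updates."""

    params: List[float]
    max_step: float = 0.05  # bounded adaptation: max per-parameter change per update
    tolerance: float = 0.0  # shadow evaluation: allowed score regression before rejecting
    history: List[List[float]] = field(default_factory=list)  # prior versions, for rollback
    audit_log: List[dict] = field(default_factory=list)  # every decision is recorded

    def propose_update(self, delta: List[float], shadow_eval: Callable[[List[float]], float]) -> bool:
        """Clip the update, score it on held-out scenarios, promote only if it is not worse."""
        clipped = [max(-self.max_step, min(self.max_step, d)) for d in delta]
        # Human-in-the-loop trigger: the update wanted to move further than allowed.
        # This sketch only records the flag; a real system would route it to a reviewer.
        needs_review = any(abs(d) > self.max_step for d in delta)
        candidate = [p + d for p, d in zip(self.params, clipped)]
        current_score = shadow_eval(self.params)
        candidate_score = shadow_eval(candidate)
        promoted = candidate_score <= current_score + self.tolerance
        self.audit_log.append({
            "delta": clipped,
            "current_score": current_score,
            "candidate_score": candidate_score,
            "promoted": promoted,
            "needs_review": needs_review,
        })
        if promoted:
            self.history.append(list(self.params))
            self.params = candidate
        return promoted

    def rollback(self) -> bool:
        """Restore the previous promoted version, if any, and log the rollback."""
        if not self.history:
            return False
        self.params = self.history.pop()
        self.audit_log.append({"rollback_to": list(self.params)})
        return True


def shadow_score(p: List[float]) -> float:
    """Hypothetical shadow metric: squared distance from a target value of 1.0."""
    return (p[0] - 1.0) ** 2


model = GuardedModel(params=[0.0])
model.propose_update([0.5], shadow_score)   # clipped to 0.05, improves the score: promoted
model.propose_update([-1.0], shadow_score)  # clipped to -0.05, worsens the score: rejected
model.rollback()                            # back to [0.0], and the rollback is logged
```

Every path through `propose_update` writes an audit entry, including rejected candidates. That makes it possible to reconstruct why the deployed parameters look the way they do.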

A practical checklist: is meta-learning worth exploring for your automation?

Meta-learning is worth a serious look if at least two of these are true:

- You deploy the same robotic or automation system across multiple locations.

- Your environment changes faster than you can retrain and revalidate models.

- You face interactive dynamics (humans, other robots, competitive behavior).

- Hardware drift or partial faults cause expensive downtime.

- You have enough simulation or historical data to represent “nearby worlds” the system will encounter.

And here’s the hard requirement many teams miss:

- You’re willing to invest in evaluation infrastructure—because adaptation without measurement is just improvisation.

Where the U.S. opportunity is heading in 2026

By late 2025, “AI in robotics” is no longer just about perception or path planning. The competitive edge is increasingly about adaptation, monitoring, and operational scalability—the unglamorous parts that make deployments stick.

Meta-learning research like the robot wrestling work points to a clear direction: robots and automation systems that can adjust to new opponents, new conditions, and even their own wear-and-tear without waiting for the next model release.

If you’re building AI-powered automation or digital services in the United States, the question to ask your team isn’t “Can our model do the task?” It’s: “How quickly can it get better when the task changes?”

If you’re exploring robotics automation, multi-site deployments, or AI agents that have to operate under messy real-world conditions, what part of your system changes most often: the environment, the users, or the hardware? That answer usually tells you whether meta-learning is a research curiosity—or a roadmap item.