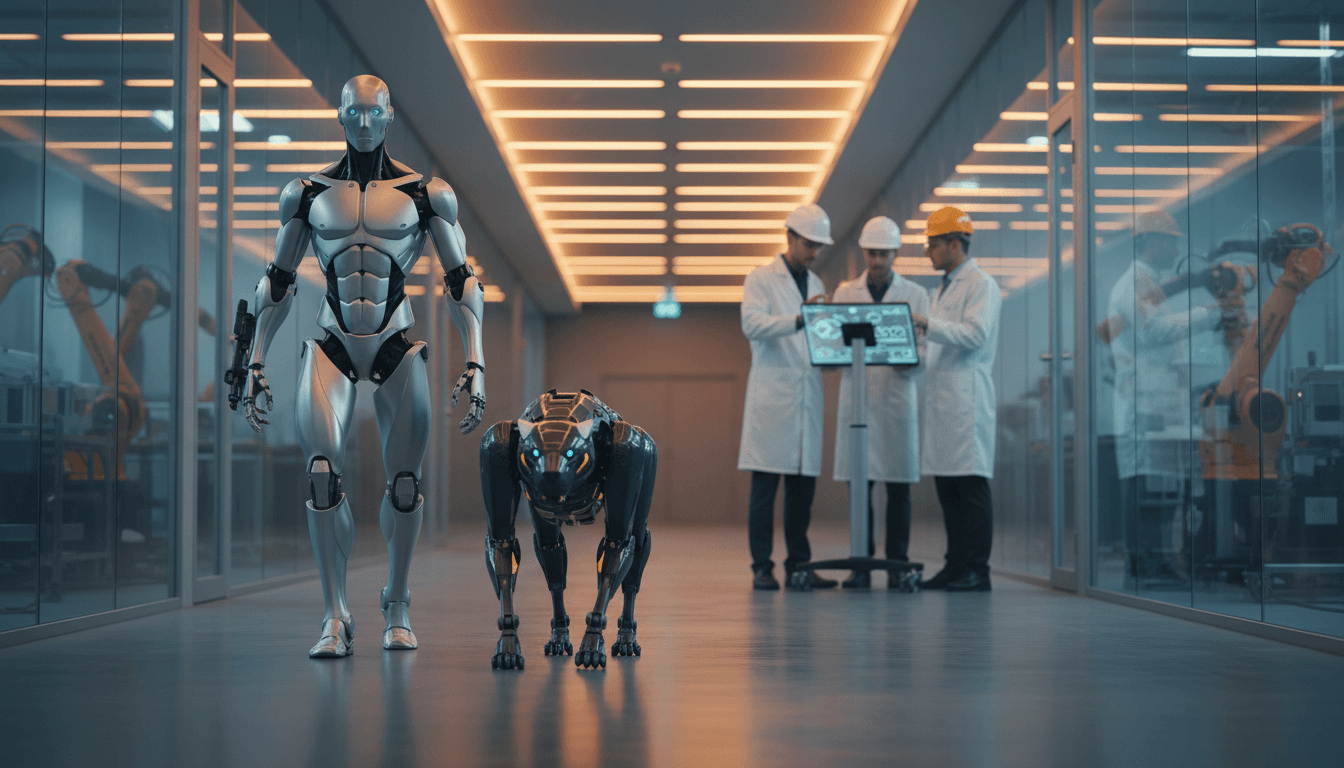

Humanoid robotics hiring is accelerating. Learn the AI + ROS 2 skills employers want in 2026—and how to prove you can ship legged autonomy.

Humanoid Robotics Hiring: Skills That Win in 2026

Humanoid and legged robots aren’t “research projects” anymore—they’re staffing plans. A recent Senior Robotics Engineer opening focused on humanoid, legged, and autonomous mobile robots (AMRs) in Chennai (onsite) is a small signal with a big message: companies are hiring for robots that must walk, balance, perceive, and decide in the messy physical world.

Most teams underestimate what that implies. It’s not just better motors or nicer CAD. The hard part is integrating AI with real-time robotics so a robot can handle uncertainty—slippery floors, shifting loads, sensor dropouts, and humans doing unpredictable human things.

This post is part of our AI in Robotics & Automation series, and I’m going to be blunt: if you’re building (or buying) humanoid/legged robots, your success will depend less on one flashy model and more on whether your team can ship a repeatable autonomy stack—from simulation to hardware—without the robot constantly face-planting in the lab.

What this job opening really tells us about the market

The clearest takeaway: demand is shifting from “robotics generalists” to “AI-integrated robotics engineers.” The role targets autonomous robots across humanoid, legged, and AMR platforms, which is a clue that employers want engineers who can reuse patterns across embodiments.

Here’s what that means in practice:

- Legged robotics is being productized. Companies don't open two senior onsite roles unless there's a delivery timeline and internal stakeholders waiting on the result.

- AI is now expected, not optional. For legged and humanoid robots, classic planning and control are necessary but insufficient. The real world is too variable.

- The stack matters as much as the robot. The posting's ties to the Open Robotics ecosystem (ROS, ros2_control, Gazebo) point to organizations that value integration, tooling, and repeatability.

Heading from late 2025 into 2026, the hiring pattern I keep seeing is this: companies want people who can combine learning-based components (perception, grasp and foot-placement priors, behavior policies) with deterministic systems (state estimation, MPC/QP control, safety constraints, fault handling). That hybrid mindset is where reliable robots come from.

Why AI is essential for humanoid and legged robots

Answer first: AI is essential because legged and humanoid robots operate in environments where rules-based autonomy fails—too many edge cases, too much uncertainty, and too many interactions.

The “physics tax” of legs

Wheels are forgiving; legs are not. A legged robot has:

- intermittent contacts

- underactuated dynamics during flight phases

- high sensitivity to friction and compliance

- rapid transitions (heel strike, toe-off, slips)

That makes pure hand-tuned behavior brittle outside the lab. Learning-based perception and adaptation help the robot estimate terrain properties and choose stable actions faster than you can write rules for every surface.
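To make the friction point concrete, here is a minimal sketch of the Coulomb friction-cone test that many legged controllers enforce on planned contact forces: a stance foot holds only if its tangential force is at most mu times its normal force. It assumes a flat contact whose normal is the world z-axis and a friction estimate mu supplied by some terrain estimator, learned or not. The function name and the margin parameter are hypothetical.

```python
import math


def inside_friction_cone(force, mu, margin=0.9):
    """Return True if a contact force (fx, fy, fz) satisfies Coulomb friction.

    Assumes the contact normal is the world z-axis and mu is an estimated
    friction coefficient. `margin` (hypothetical) shrinks the cone so the
    planner keeps a buffer against estimation error.
    """
    fx, fy, fz = force
    if fz <= 0.0:
        # A foot cannot pull on the ground: no normal force means no contact.
        return False
    tangential = math.hypot(fx, fy)
    return tangential <= margin * mu * fz


# Same planned force (newtons), two terrain estimates.
planned = (30.0, 0.0, 200.0)
print(inside_friction_cone(planned, mu=0.6))  # True: high-friction estimate
print(inside_friction_cone(planned, mu=0.1))  # False: low-friction estimate
```

The same planned step passes on a high-friction estimate and fails on a low-friction one. That is where a learned terrain estimate earns its keep: it changes which actions the controller treats as stable.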

Perception isn’t a nice-to-have—it’s the control input

A humanoid can’t walk well if it doesn’t see well. Modern stacks treat perception outputs (terrain class, obstacle affordances, traversability, contact candidates) as first-class inputs to planning and control.

Where AI fits cleanly:

- semantic perception (recognize stairs, curbs, pallets, cables)

- self-supervised depth and surface understanding in changing lighting

- contact prediction (where a foot will stick vs slip)

- behavior selection (when to step over vs go around)
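One way to wire these outputs into decisions is to keep the learned parts (obstacle height, detector confidence, whether a detour exists) as inputs and make the arbitration explicit. The sketch below uses hypothetical thresholds and type names. A learned behavior policy could replace select_behavior, but the interface and the conservative fallback would stay the same.

```python
from dataclasses import dataclass
from enum import Enum


class Behavior(Enum):
    STEP_OVER = "step_over"
    GO_AROUND = "go_around"
    STOP = "stop"


@dataclass
class ObstacleEstimate:
    height_m: float     # obstacle height estimated by perception
    confidence: float   # detector confidence in [0, 1]
    detour_free: bool   # planner found a collision-free path around it


# Hypothetical limits; real values depend on leg geometry and testing.
MAX_STEP_HEIGHT_M = 0.15
MIN_CONFIDENCE = 0.7


def select_behavior(obs: ObstacleEstimate) -> Behavior:
    """Turn perception outputs into a discrete behavior choice."""
    if obs.confidence < MIN_CONFIDENCE:
        # Perception is unsure: never commit to stepping over.
        return Behavior.GO_AROUND if obs.detour_free else Behavior.STOP
    if obs.height_m <= MAX_STEP_HEIGHT_M:
        return Behavior.STEP_OVER
    return Behavior.GO_AROUND if obs.detour_free else Behavior.STOP


# Usage: a confidently detected cable lying on the floor.
print(select_behavior(ObstacleEstimate(0.02, 0.9, True)).value)  # step_over
```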

You still need classical robotics (and teams forget this)

If you’re hiring for humanoid robotics and your plan is “just train a policy,” you’re going to burn months.

Reliable humanoid autonomy usually looks like:

- State estimation: tightly fused IMU + joint encoders + vision/LiDAR

- Whole-body control: constraints for joint limits, torque, balance

- Planning: footstep planning and obstacle-aware motion planning

- AI modules: perception, adaptation, recovery behaviors, policy priors

- Safety: monitors, fall detection, controlled shutdown, envelopes

AI makes the system robust; classical components make it safe and testable.
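A common pattern behind that sentence is a deterministic safety envelope between a learned policy and the actuators. This is a simplified sketch under stated assumptions: per-joint torque commands, symmetric torque limits, and the convention that positive torque drives a joint toward its upper position limit. It ignores gravity and dynamics, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class JointLimits:
    pos_min: float     # rad
    pos_max: float     # rad
    max_torque: float  # N*m, assumed symmetric


def apply_safety_envelope(policy_torques, joint_positions, limits):
    """Clamp learned torque commands to hardware limits.

    Simplified sketch: assumes positive torque drives a joint toward pos_max,
    and ignores gravity and dynamics.
    """
    if not (len(policy_torques) == len(joint_positions) == len(limits)):
        raise ValueError("need one torque, position, and limit entry per joint")
    safe = []
    for tau, q, lim in zip(policy_torques, joint_positions, limits):
        tau = max(-lim.max_torque, min(lim.max_torque, tau))
        # At a position limit, only allow torque that moves the joint back inside.
        if q >= lim.pos_max and tau > 0.0:
            tau = 0.0
        elif q <= lim.pos_min and tau < 0.0:
            tau = 0.0
        safe.append(tau)
    return safe


limits = [JointLimits(-1.0, 1.0, 50.0), JointLimits(-0.5, 0.5, 20.0)]
print(apply_safety_envelope([80.0, 5.0], [0.0, 0.0], limits))  # [50.0, 5.0]
```

Because the envelope is plain deterministic code, it can be unit-tested exhaustively, which the learned policy upstream of it cannot.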

The skills gap: what employers actually need (and how to prove it)

Answer first: To win these roles, you need to demonstrate you can ship autonomy—on hardware—under constraints: timing, safety, logging, testing, and deployment.

The job post itself is short, but the role title (Senior Robotics Engineer for humanoid & legged robots) and the platform mix (humanoid, legged, AMR) imply a practical skill set. If I were screening candidates for this exact need, here’s what I’d look for.

1) ROS 2 integration that’s production-minded

You don’t need to memorize every ROS 2 API. You need to show you can build a system that survives real usage:

- clean ros2_control hardware interfaces and controllers

- lifecycle nodes and deterministic startup/shutdown

- message and QoS choices that avoid silent lag

- rosbag workflows for reproducible debugging
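Silent lag usually shows up as messages that arrive on schedule but describe the past, for example when a deep queue keeps delivering old sensor data. Below is a framework-agnostic sketch of a staleness check. StalenessMonitor is a hypothetical helper, not a ROS 2 API. In a ROS 2 callback you would pass the message header stamp (its sec and nanosec fields) and the node clock's current time, both converted to seconds.

```python
from dataclasses import dataclass


@dataclass
class StalenessMonitor:
    """Flags messages older than a latency budget (hypothetical helper)."""
    max_age_s: float
    stale_count: int = 0
    worst_age_s: float = 0.0

    def check(self, stamp_s: float, now_s: float) -> bool:
        """Return True if the message is fresh enough to act on."""
        age = now_s - stamp_s
        self.worst_age_s = max(self.worst_age_s, age)
        if age > self.max_age_s:
            self.stale_count += 1
            return False
        return True


def stamp_to_seconds(sec: int, nanosec: int) -> float:
    # Mirrors the sec/nanosec split used by ROS 2 time stamps.
    return sec + nanosec * 1e-9


# Usage: a 20 ms budget for an IMU-driven controller (illustrative number).
monitor = StalenessMonitor(max_age_s=0.02)
print(monitor.check(stamp_to_seconds(10, 0), now_s=10.005))  # True
print(monitor.check(stamp_to_seconds(10, 0), now_s=10.050))  # False
```

A stale_count that keeps rising is a cue to revisit QoS depth and history settings, or to check that publisher and subscriber agree on sim time versus wall time.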

Proof idea: bring a repo (even a simplified one) showing a multi-node stack, launch files, parameters, and a recorded bag used to reproduce a bug.

2) Simulation-to-hardware discipline (Gazebo or equivalent)

For legged robots, simulation isn’t optional—hardware iteration is too slow and too risky.

Strong candidates can:

- build a reasonable URDF/SDF model with correct inertias

- validate contact parameters and joint limits

- write tests that compare expected vs observed trajectories

- use simulation to generate datasets for perception or policies
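A trajectory comparison test can be as simple as an RMS and worst-case error check on one joint, with both trajectories sampled at the same timestamps in simulation. A minimal sketch, written as a pytest-style test function that can also be called directly; the function names, data, and tolerances are illustrative, not values from any particular robot.

```python
import math


def tracking_errors(expected, observed):
    """RMS and worst-case absolute error between two equally sampled trajectories."""
    if len(expected) != len(observed) or not expected:
        raise ValueError("trajectories must be non-empty and the same length")
    diffs = [e - o for e, o in zip(expected, observed)]
    rms = math.sqrt(sum(d * d for d in diffs) / len(diffs))
    worst = max(abs(d) for d in diffs)
    return rms, worst


def test_knee_tracking():
    # Hypothetical data: planner reference vs simulated joint positions (rad).
    expected = [0.0, 0.1, 0.2, 0.3]
    observed = [0.0, 0.11, 0.19, 0.3]
    rms, worst = tracking_errors(expected, observed)
    assert rms <= 0.02, f"RMS tracking error too high: {rms:.4f} rad"
    assert worst <= 0.05, f"worst-case error too high: {worst:.4f} rad"


test_knee_tracking()
```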

Proof idea: show a “sim-to-real checklist” and one example where simulation caught an integration issue (frame mismatch, latency, wrong inertia, controller instability).
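For the wrong-inertia case, a cheap pre-simulation check catches many bad URDF values. A real rigid body has positive principal moments that satisfy the triangle inequality (each is at most the sum of the other two), and a solid-box approximation gives an order-of-magnitude reference for a link. The sketch assumes the URDF inertia is expressed in principal axes (ixy = ixz = iyz = 0); the function names and the factor-of-3 band are hypothetical.

```python
def box_inertia(mass, x, y, z):
    """Principal moments (kg*m^2) of a solid box about its center of mass."""
    return (
        mass * (y * y + z * z) / 12.0,
        mass * (x * x + z * z) / 12.0,
        mass * (x * x + y * y) / 12.0,
    )


def inertia_is_plausible(ixx, iyy, izz):
    """Check principal moments; assumes ixy = ixz = iyz = 0 in the URDF."""
    if min(ixx, iyy, izz) <= 0.0:
        return False
    # Triangle inequality holds for every real rigid body's principal moments.
    return ixx + iyy >= izz and iyy + izz >= ixx and ixx + izz >= iyy


def within_factor(value, reference, factor=3.0):
    """Hypothetical sanity band: flag moments far from the box approximation."""
    return reference / factor <= value <= reference * factor


# Usage: hypothetical CAD-exported moments for a 2 kg link of roughly 0.1 x 0.2 x 0.3 m.
cad = (0.021, 0.017, 0.0085)
approx = box_inertia(2.0, 0.1, 0.2, 0.3)
print(inertia_is_plausible(*cad), all(within_factor(c, a) for c, a in zip(cad, approx)))
```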

3) State estimation and timing fundamentals

Humanoids punish sloppy estimation. A few milliseconds of jitter or a biased IMU can turn into a fall.

You should be comfortable with:

- EKF/UKF concepts and practical tuning

- frame conventions and transforms

- latency compensation and timestamp hygiene

- diagnosing drift and bias
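These concepts fit in a very small example: a two-state linear Kalman filter that tracks one tilt angle and the gyro bias, predicting with the gyro rate and correcting with an accelerometer-derived angle. It is a teaching sketch, not a humanoid estimator (those also fuse leg kinematics and contact), and the noise parameters are illustrative, untuned values.

```python
class TiltKalmanFilter:
    """Two-state linear Kalman filter: tilt angle (rad) and gyro bias (rad/s)."""

    def __init__(self, q_angle=1e-3, q_bias=3e-3, r_meas=3e-2):
        self.q_angle = q_angle  # process noise density for the angle
        self.q_bias = q_bias    # process noise density for the bias random walk
        self.r_meas = r_meas    # variance of the accelerometer angle measurement
        self.angle = 0.0
        self.bias = 0.0
        # Covariance P as a 2x2 nested list, starting uncertain about both states.
        self.P = [[1.0, 0.0], [0.0, 1.0]]

    def predict(self, gyro_rate, dt):
        """Integrate the bias-corrected gyro rate and grow the covariance."""
        self.angle += dt * (gyro_rate - self.bias)
        p00, p01 = self.P[0]
        p10, p11 = self.P[1]
        # P = F P F^T + Q with F = [[1, -dt], [0, 1]] and Q = diag(q_angle, q_bias) * dt.
        self.P = [
            [p00 - dt * (p01 + p10) + dt * dt * p11 + self.q_angle * dt, p01 - dt * p11],
            [p10 - dt * p11, p11 + self.q_bias * dt],
        ]

    def update(self, accel_angle):
        """Correct with an accelerometer angle (H = [1, 0]); returns the innovation."""
        p00, p01 = self.P[0]
        p10, p11 = self.P[1]
        innovation = accel_angle - self.angle
        s = p00 + self.r_meas          # innovation variance
        k0, k1 = p00 / s, p10 / s      # Kalman gain
        self.angle += k0 * innovation
        self.bias += k1 * innovation
        # P = (I - K H) P
        self.P = [
            [p00 - k0 * p00, p01 - k0 * p01],
            [p10 - k1 * p00, p11 - k1 * p01],
        ]
        return innovation


# Usage: stationary robot tilted 0.2 rad, gyro reading only its 0.05 rad/s bias.
kf = TiltKalmanFilter()
for _ in range(2000):  # 20 s at 100 Hz, noise-free for clarity
    kf.predict(gyro_rate=0.05, dt=0.01)
    kf.update(accel_angle=0.2)
print(round(kf.angle, 3), round(kf.bias, 3))
```

Logging the innovation returned by update is a cheap drift and bias diagnostic: a persistent nonzero mean suggests an unmodeled bias or a frame or timestamp problem.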

Proof idea: a short write-up (or internal doc excerpt) explaining a real estimation failure and how you fixed it.

4) AI that fits robotics constraints

Employers want AI, but they want AI that behaves. Useful capabilities:

- deploying perception models with predictable runtime

- understanding failure modes (domain shift, lighting, motion blur)

- building fallbacks when the model is uncertain

- integrating learned outputs into planners/controllers safely

A snippet-worthy stance: A robotics AI model is only “good” if its uncertainty is measurable and its failures are handled.
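One hedged way to make uncertainty measurable is to gate a classifier's output on the normalized entropy of its class probabilities and fall back to a cautious default when entropy is high. The threshold, labels, and fallback name below are hypothetical. Softmax probabilities can be poorly calibrated under domain shift, so treat entropy as one signal rather than a guarantee.

```python
import math


def normalized_entropy(probs):
    """Entropy divided by its maximum: 0 means certain, 1 means uniform."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0.0)
    return h / math.log(len(probs))


def gated_label(class_probs, max_entropy=0.5, fallback="unknown_cautious"):
    """Return the top label only when the distribution is confident enough.

    class_probs maps label -> probability (assumed to sum to 1).
    max_entropy and the fallback label are hypothetical choices.
    """
    h = normalized_entropy(list(class_probs.values()))
    if h > max_entropy:
        return fallback, h
    return max(class_probs, key=class_probs.get), h


print(gated_label({"floor": 0.95, "stairs": 0.03, "cable": 0.02})[0])  # floor
print(gated_label({"floor": 0.34, "stairs": 0.33, "cable": 0.33})[0])  # unknown_cautious
```

The fallback label gives downstream planning a defined case to handle (for example, slowing down), instead of silently acting on a guess.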

5) Debugging and observability (the senior differentiator)

Senior engineers don’t just write code—they shorten time-to-fix.

Look for habits like:

- structured logs with event IDs

- metrics for controller stability and CPU budget

- traceable data paths (sensor → filter → planner → controller)

- incident-style postmortems after failures
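Structured logs with event IDs can be as plain as JSON lines where a record keeps the same ID as its data moves from sensor to controller, which makes the data path traceable after an incident. A minimal sketch; the field names and the make_event and emit helpers are hypothetical.

```python
import io
import json
import time
import uuid


def make_event(component, event, event_id=None, **fields):
    """Build one structured record. Reuse event_id to link records along a data path."""
    record = {
        "ts": time.time(),
        "event_id": event_id or uuid.uuid4().hex,
        "component": component,  # e.g. "sensor", "filter", "planner", "controller"
        "event": event,
    }
    record.update(fields)
    return record


def emit(record, stream):
    """Write one JSON object per line so logs stay grep- and replay-friendly."""
    stream.write(json.dumps(record, sort_keys=True) + "\n")


# Usage: one IMU sample traced through to the torque command it produced.
buf = io.StringIO()
sample = make_event("sensor", "imu_sample", gyro_z=0.01)
emit(sample, buf)
emit(make_event("controller", "torque_cmd", event_id=sample["event_id"], loop_ms=0.8), buf)
records = [json.loads(line) for line in buf.getvalue().splitlines()]
```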

If you can explain your debugging workflow clearly, you’ll stand out fast.

Where humanoid and legged robots are headed in 2026

Answer first: Near-term growth is in service and industrial settings where mobility beats manipulation—and AI makes the mobility reliable.

Despite all the attention on humanoid hands, many deployments start with “boring” wins:

- patrol and inspection with better mobility than wheels

- logistics support in facilities with ramps, thresholds, and clutter

- retrieval and transport tasks where stairs or uneven floors exist

Humanoid form factors matter most when environments are built for humans—stairs, door handles, tight corridors. But plenty of teams will ship legged platforms first because manipulation adds complexity.

A practical example: recovery behaviors

A legged robot that can walk until it can’t is not commercially useful. A legged robot that can recover from near-falls is.

AI helps here in two ways:

- Predicting instability early (learned slip detection from IMU + joint signals)

- Choosing the right recovery action (widen stance, step, lower CoM, stop)

This is exactly the kind of “AI + control” integration companies quietly pay for—because it reduces downtime and hardware damage.
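The two roles can be sketched as a slip score feeding a recovery selector. Here a hand-written score stands in for the learned slip detector, using stance-foot speed from leg kinematics and IMU roll rate. All thresholds, weights, and names are hypothetical; on a real robot they would come from logged data.

```python
from dataclasses import dataclass
from enum import Enum


class Recovery(Enum):
    NONE = "none"
    WIDEN_STANCE = "widen_stance"
    STEP = "step"
    LOWER_COM = "lower_com"
    STOP = "stop"


@dataclass
class StanceSample:
    foot_speed_mps: float  # stance-foot speed from leg kinematics (should be near 0)
    roll_rate_rps: float   # trunk roll rate from the IMU
    in_contact: bool


def slip_score(s: StanceSample) -> float:
    """Hand-written stand-in for a learned slip classifier; returns a value in [0, 1]."""
    if not s.in_contact:
        return 0.0
    # Hypothetical normalizers: 0.2 m/s foot speed or 1.0 rad/s roll rate saturate a term.
    foot_term = min(s.foot_speed_mps / 0.2, 1.0)
    roll_term = min(abs(s.roll_rate_rps) / 1.0, 1.0)
    return 0.6 * foot_term + 0.4 * roll_term


def choose_recovery(score: float, can_step: bool) -> Recovery:
    """Escalate the recovery action with slip severity (hypothetical bands)."""
    if score < 0.3:
        return Recovery.NONE
    if score < 0.6:
        return Recovery.WIDEN_STANCE
    if score < 0.85:
        return Recovery.STEP if can_step else Recovery.LOWER_COM
    return Recovery.STOP


sample = StanceSample(foot_speed_mps=0.15, roll_rate_rps=0.4, in_contact=True)
print(choose_recovery(slip_score(sample), can_step=True).value)  # step
```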

The open robotics ecosystem as the default substrate

The presence of Open Robotics community channels and tooling around this job post is a reminder: ROS 2 and simulation tools have become the common language across employers.

That matters for buyers and builders:

- Buyers benefit from a larger talent pool and reusable integrations.

- Builders benefit from faster iteration and better interoperability.

If your organization is still treating its robotics stack as a one-off project, you’ll feel the hiring pain first.

People also ask: quick answers for candidates and hiring managers

What’s the most important skill for a senior humanoid robotics engineer?

Systems integration under constraints. If you can’t make perception, planning, control, and hardware play nicely with real-time limits, the robot won’t be reliable.

Is ROS 2 mandatory for humanoid robotics jobs?

Not universally, but it’s increasingly the default. Even teams with custom middleware often expect ROS 2 literacy because of tooling, packages, and hiring availability.

How much AI do you need to know for legged robots?

Enough to deploy models responsibly: runtime profiling, dataset hygiene, uncertainty handling, and integration into safety envelopes. “I trained a model” isn’t the bar.
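Runtime profiling for a model that feeds a control loop means looking at tail latency rather than the mean, because one slow inference can miss a control deadline. A minimal sketch using time.perf_counter and a rounded-index percentile; the helper names and the 5 ms budget are hypothetical, and a real measurement should run on the target hardware.

```python
import time


def profile_latency(fn, inputs, warmup=5):
    """Time fn on each input and return latency percentiles in milliseconds."""
    if not inputs:
        raise ValueError("need at least one input to profile")
    for x in inputs[:warmup]:
        fn(x)  # warm caches and lazy initialization before measuring
    samples = []
    for x in inputs:
        start = time.perf_counter()
        fn(x)
        samples.append((time.perf_counter() - start) * 1e3)
    samples.sort()

    def percentile(p):
        # Rounded index into the sorted samples (a simple percentile estimate).
        idx = min(len(samples) - 1, round(p / 100.0 * (len(samples) - 1)))
        return samples[idx]

    return {"p50_ms": percentile(50), "p99_ms": percentile(99), "max_ms": samples[-1]}


def within_budget(stats, budget_ms):
    """Judge the model by its tail latency against a per-cycle budget."""
    return stats["p99_ms"] <= budget_ms


# Usage with a stand-in for model inference.
stats = profile_latency(lambda n: sum(range(n)), [10_000] * 200)
print(stats, within_budget(stats, budget_ms=5.0))
```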

What kind of projects impress recruiters for these roles?

Projects that show end-to-end delivery: a simulated robot, a controller, a perception module, logged test runs, and a written postmortem when something broke.

Where to go from here (if you’re hiring or applying)

Humanoid robotics hiring is a leading indicator for where automation is headed: more mobility, more autonomy, and more expectation that robots operate around people. If you’re building a team, prioritize engineers who can connect AI to real-time robotics and still sleep at night because the safety story is solid.

If you’re applying, don’t over-index on buzzwords. Show evidence that you can:

- build a stable robotics stack (ROS 2, control, estimation)

- integrate AI modules with clear failure handling

- test in simulation, then validate on hardware

- debug fast using logs, metrics, and replayable data

This series focuses on AI in Robotics & Automation for real-world deployment. The organizations hiring for humanoid and legged robots right now are effectively saying: “We need people who can ship physical AI.”

So here’s the question I’d ask yourself before your next application or hiring cycle: if your robot falls tomorrow, do you know exactly which signal you’d inspect first—and do you have the data recorded to prove it?