Human-robot interaction design is the adoption bottleneck for AI robotics. Learn a practical HRI roadmap for manufacturing, healthcare, and logistics.

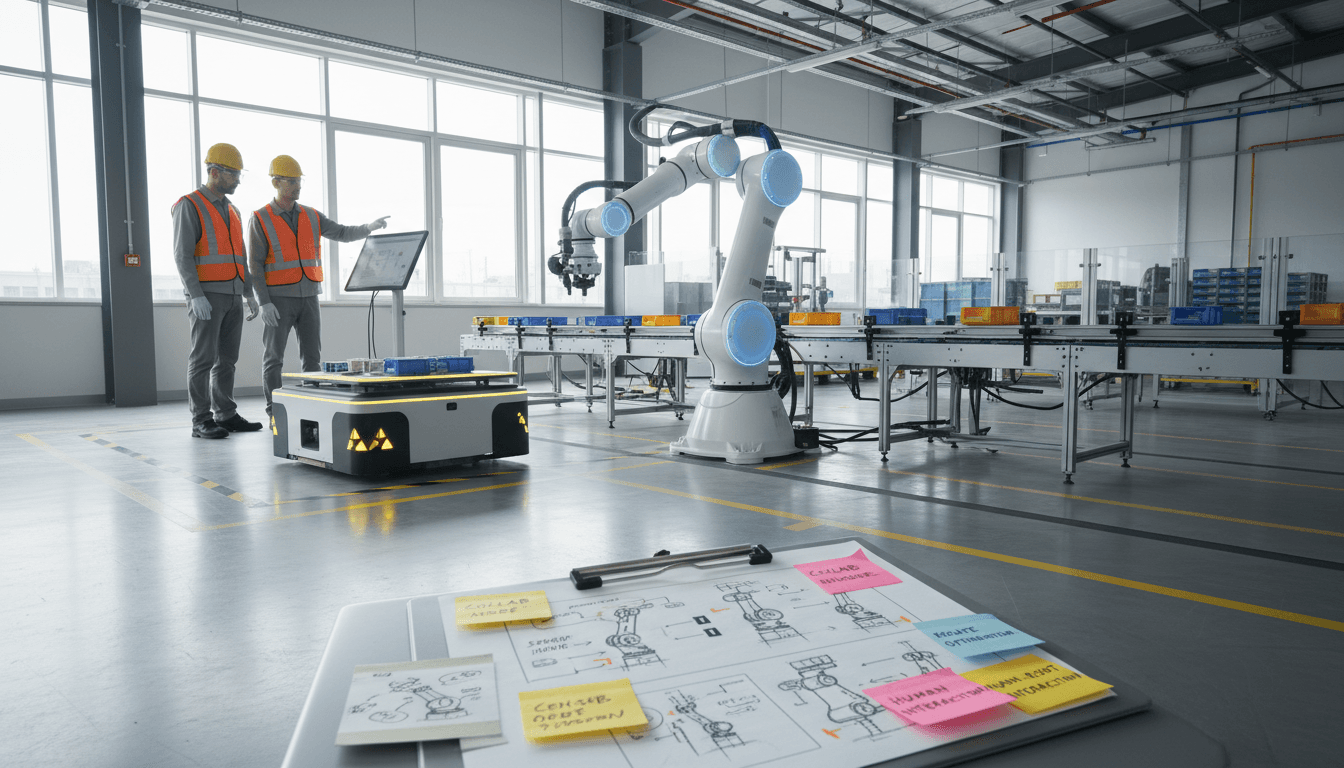

Designing Human-Robot Interaction That Works on the Floor

A lot of robot programs fail for a boring reason: people don’t want to work with the machines.

Not because the robot is “bad,” or because AI isn’t powerful enough. It fails because human-robot interaction (HRI) design is treated like polish—something you add after perception, planning, and control are “done.” The result shows up fast in manufacturing, healthcare, and logistics: stalled pilots, low trust, workarounds, safety concerns, and robots that technically function but don’t fit the job.

That’s why the recent Human-Robot Interaction Design Retreat caught my attention. The retreat brought together practitioners from academia and industry to do something the robotics world still doesn’t do enough: treat interaction design as a first-class engineering problem, and build a roadmap for the next 5–10 years of HRI.

This post is part of our AI in Robotics & Automation series. The core idea is simple: AI-enabled robots only create value when humans can predict, guide, and recover them in real workplaces. Let’s talk about what that actually takes.

Why HRI design is the bottleneck for AI robotics adoption

The fastest way to kill ROI in robotics is to ignore the human workflow. Even strong autonomy can look weak when the interaction layer makes everyday operations awkward.

In real deployments, the “interaction” isn’t just voice or a touchscreen. It’s everything around the robot’s behavior that answers a worker’s constant questions:

- What is it doing right now?

- What will it do next?

- Is it safe to step in?

- How do I correct it without calling an engineer?

- Who is responsible if it makes a mistake?

Here’s the stance I’ll take: If a robot can’t clearly communicate state, intent, and limits, it isn’t production-ready—no matter how good its AI stack is.

The HRI Design Retreat matters because it framed the future of HRI as something you shape deliberately, not something that “emerges” after shipping.

The hidden cost of “interaction debt”

Teams often accumulate interaction debt—the HRI version of technical debt:

- Operators learn tribal knowledge (“If it jitters, reboot it twice”).

- Supervisors create shadow processes to avoid edge cases.

- Safety teams restrict usage until the robot becomes irrelevant.

- Engineers spend their time on urgent usability fixes instead of capability improvements.

You can see the pattern across sectors:

- Warehouses: autonomous mobile robots (AMRs) that don’t signal intent well create near-miss events and traffic jams.

- Manufacturing: cobots that require fiddly mode switching get bypassed during peak throughput.

- Hospitals and care: social or assistive robots lose trust quickly when they interrupt at the wrong moment or can’t gracefully hand control back to staff.

Good HRI design reduces this friction, which is why a roadmap exercise like the retreat’s has real operational implications.

What a design retreat gets right (that most robot programs miss)

A retreat forces cross-functional alignment before product decisions calcify. That’s the big win.

The Human-Robot Interaction Design Retreat used hands-on, interactive activities to help participants explore the future of HRI design and map priorities for the next 5–10 years. That format matters. Workshops and design sprints create shared language—crucial when your stakeholders include roboticists, designers, safety leaders, domain experts, and end users who don’t speak in the same abstractions.

In industry, I’ve found that the single most valuable output of events like this isn’t a slide deck. It’s agreement on:

- Who the robot is for (operator, nurse, technician, shopper, patient)

- What “success” looks like (throughput, injury reduction, response time, satisfaction)

- What failure looks like (and how the robot should fail safely and understandably)

The 5–10 year HRI shift: from interfaces to relationships

HRI used to be treated as a UI problem: buttons, prompts, and screens.

The next phase is more demanding. With AI in the loop, the relationship becomes dynamic:

- The robot adapts to changing conditions.

- Humans adapt their expectations based on experience.

- The organization adapts policies for oversight, training, and accountability.

So the interaction question isn’t just “how do I control it?” It’s also “how do we collaborate over weeks and months in messy environments?”

That’s a big reason retreats are useful: they help teams tackle long-horizon questions that product backlogs rarely create time for.

A practical HRI roadmap for AI-powered automation teams

If you’re building or buying AI-enabled robots, your roadmap should include HRI milestones that are as concrete as autonomy milestones. Below is a pragmatic roadmap you can apply to manufacturing, healthcare, and logistics.

1) Make robot intent legible (before you add more autonomy)

Intent legibility means a person can infer what the robot will do next with minimal attention.

In practice, that’s achieved with a combination of:

- Motion cues (slowing, pausing, yielding patterns)

- Light/sound cues (but only when they’re standardized and meaningful)

- On-device displays (state, next action, estimated completion)

- Facility-level cues (map signage, right-of-way norms)

If you’re deploying mobile robots in shared spaces, legibility is not optional. It’s how you prevent both accidents and “soft failures” like people blocking routes because they don’t trust the robot.

Design test: Show a 10-second video clip of the robot mid-task to a new operator and ask what it will do next. If they can’t answer confidently, you have legibility work to do.
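If you run the design test with several new operators, it helps to score it the same way every time. Here's a minimal sketch, assuming you record each operator's predicted next action and whether they answered confidently. The `Response` record, the `legibility_score` function, and the action labels are hypothetical, not part of any standard HRI toolkit.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One new operator's answer after watching a 10-second clip (hypothetical record)."""
    predicted_action: str  # what the operator says the robot will do next
    confident: bool        # whether they answered confidently


def legibility_score(responses: list[Response], actual_next_action: str) -> float:
    """Fraction of operators who predicted the next action correctly AND confidently."""
    if not responses:
        raise ValueError("need at least one response")
    hits = sum(
        1 for r in responses
        if r.confident and r.predicted_action == actual_next_action
    )
    return hits / len(responses)


responses = [
    Response("yield_left", True),
    Response("yield_left", False),  # right answer, but hesitant
    Response("stop", True),         # confident, but wrong
    Response("yield_left", True),
]
print(legibility_score(responses, "yield_left"))  # 0.5
```

Counting a correct but hesitant answer as a miss follows the test as described: the question is whether people can answer confidently, not just whether they can guess. What score counts as "good enough" is a local policy choice, not an established benchmark.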

2) Build for recovery, not perfection

AI systems will be wrong sometimes. What separates successful automation from stalled pilots is recovery design: how quickly a human can get the system back on track.

Recovery design includes:

- Clear “pause / safe stop / resume” semantics

- Guided troubleshooting that uses plain language

- Fast handoff to teleoperation or supervisor control

- Audit trails that explain why the robot did what it did
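Clear pause / safe stop / resume semantics amount to a small state machine with explicit allowed transitions. The sketch below is illustrative only and assumes a hypothetical policy: a safe stop must be cleared before work resumes, and clearing lands in PAUSED so someone restarts motion deliberately. The mode names, command strings, and `RobotControl` class are invented for the example. It also keeps a plain-language audit trail of accepted and rejected commands.

```python
from dataclasses import dataclass, field
from enum import Enum


class Mode(Enum):
    RUNNING = "running"
    PAUSED = "paused"              # holding position; resume continues the task
    SAFE_STOPPED = "safe-stopped"  # motion halted; must be cleared before resuming


# Hypothetical policy: (current mode, command) -> next mode. Anything else is rejected.
TRANSITIONS = {
    (Mode.RUNNING, "pause"): Mode.PAUSED,
    (Mode.RUNNING, "safe_stop"): Mode.SAFE_STOPPED,
    (Mode.PAUSED, "resume"): Mode.RUNNING,
    (Mode.PAUSED, "safe_stop"): Mode.SAFE_STOPPED,
    (Mode.SAFE_STOPPED, "clear"): Mode.PAUSED,  # clearing never restarts motion by itself
}


@dataclass
class RobotControl:
    mode: Mode = Mode.RUNNING
    audit: list[str] = field(default_factory=list)

    def command(self, cmd: str, who: str) -> Mode:
        nxt = TRANSITIONS.get((self.mode, cmd))
        if nxt is None:
            # Plain-language rejection that a frontline worker can act on.
            msg = f"'{cmd}' is not allowed while the robot is {self.mode.value}."
            self.audit.append(f"REJECTED {cmd} by {who}: {msg}")
            raise ValueError(msg)
        self.audit.append(f"{self.mode.value} -> {nxt.value} ({cmd} by {who})")
        self.mode = nxt
        return nxt


robot = RobotControl()
robot.command("safe_stop", "operator")
try:
    robot.command("resume", "operator")
except ValueError as err:
    print(err)  # 'resume' is not allowed while the robot is safe-stopped.
robot.command("clear", "supervisor")
robot.command("resume", "operator")
print(robot.mode.value)  # running
```

The key design choice is that there is no direct path from SAFE_STOPPED to RUNNING. A worker who tries it gets a readable reason, and the rejection still lands in the audit trail.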

In warehouses, for example, the difference between a 2-minute recovery and a 20-minute recovery determines whether the robot fleet feels like help or hassle.

Rule I like: If a frontline worker can’t resolve the top 5 failure modes without calling engineering, you don’t have an HRI layer—you have a lab demo.

3) Treat trust as a measurable system output

Trust isn’t vibes. Operational trust is measurable.

You can track:

- Intervention rate (how often humans take over)

- Near-miss events (safety and traffic)

- Task abandonment (robots left idle due to frustration)

- Training time to proficiency (hours to independent operation)

- Preference data (which robot modes people choose under pressure)
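Most of these metrics reduce to simple ratios over shift logs. Here's a minimal sketch, assuming a hypothetical `ShiftLog` record with per-shift counts and a separate list of hours-to-proficiency for each trainee. Preference data is left out because it usually comes from mode-selection logs or surveys rather than counts. The field names and numbers are illustrative, not real deployment data.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class ShiftLog:
    """Hypothetical per-shift counts pulled from robot and safety logs."""
    robot_hours: float    # hours the robot was in service
    tasks_started: int
    tasks_abandoned: int  # tasks left idle or abandoned by staff
    interventions: int    # times a human took over
    near_misses: int      # safety or traffic near-miss reports


def trust_metrics(logs: list[ShiftLog], hours_to_proficiency: list[float]) -> dict[str, float]:
    hours = sum(s.robot_hours for s in logs)
    started = sum(s.tasks_started for s in logs)
    if hours <= 0 or started <= 0 or not hours_to_proficiency:
        raise ValueError("need operating hours, started tasks, and training data")
    return {
        "interventions_per_hour": sum(s.interventions for s in logs) / hours,
        "near_misses_per_100h": 100 * sum(s.near_misses for s in logs) / hours,
        "abandonment_rate": sum(s.tasks_abandoned for s in logs) / started,
        "median_hours_to_proficiency": median(hours_to_proficiency),
    }


logs = [ShiftLog(8, 100, 5, 4, 1), ShiftLog(8, 120, 6, 4, 0)]
print(trust_metrics(logs, [6, 10, 8]))
```

Normalizing by robot hours and started tasks lets you compare shifts and sites of different sizes, and the trend over weeks matters more than any single value.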

Trust grows when the robot is predictable and honest about uncertainty. This is where AI design choices matter: if your robot uses learning-based perception, your interaction design should expose confidence in a way that supports decisions, not confusion.

Example in manufacturing QA: instead of “PASS/FAIL,” show “PASS (high confidence)” or “REVIEW (low confidence)” and make the review action quick.
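A minimal sketch of that labeling, assuming the inspection model emits a calibrated confidence between 0 and 1. The 0.9 threshold and the `FAIL (high confidence)` label are assumptions added for completeness. The text above only names the PASS and REVIEW labels.

```python
def qa_label(passed: bool, confidence: float, threshold: float = 0.9) -> str:
    """Map a model verdict plus confidence to an operator-facing label.

    Assumes `confidence` is calibrated; the default threshold is a placeholder.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence < threshold:
        # Low confidence goes to a human regardless of the verdict.
        return "REVIEW (low confidence)"
    return "PASS (high confidence)" if passed else "FAIL (high confidence)"


print(qa_label(True, 0.97))   # PASS (high confidence)
print(qa_label(True, 0.62))   # REVIEW (low confidence)
print(qa_label(False, 0.95))  # FAIL (high confidence)
```

This sketch sends every low-confidence verdict to REVIEW, not only low-confidence passes, because an uncertain FAIL that triggers scrap or rework deserves a human look too. The threshold only helps if the confidence scores are actually calibrated on your parts.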

4) Design for the whole system: policy, training, and accountability

Robots don’t get deployed into empty rooms. They land inside policies, unions, safety standards, staffing constraints, and IT rules.

Strong HRI programs account for:

- Training design: microlearning, refreshers, and skill checks

- Role clarity: operator vs. supervisor vs. maintenance ownership

- Escalation paths: what happens when the robot can’t proceed

- Accountability: who signs off on behavior changes after updates

As AI capabilities expand, this becomes more important. A robot that updates its models monthly needs a change-management process that’s closer to enterprise software than traditional machinery.
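One way to make “who signs off on behavior changes after updates” concrete is a deployment gate. This sketch assumes a hypothetical policy: safety, operations, and engineering must each sign off, and every update carries a plain-language summary of behavior changes for frontline staff. The role names and the `ModelUpdate` class are illustrative only.

```python
from dataclasses import dataclass, field

# Hypothetical policy: every one of these roles must sign off before rollout.
REQUIRED_SIGNOFFS = frozenset({"safety", "operations", "engineering"})


@dataclass
class ModelUpdate:
    version: str
    behavior_changes: list[str]  # plain-language notes for frontline staff
    signoffs: set[str] = field(default_factory=set)

    def sign(self, role: str) -> None:
        if role not in REQUIRED_SIGNOFFS:
            raise ValueError(f"unknown sign-off role: {role}")
        self.signoffs.add(role)

    def ready_to_deploy(self) -> bool:
        # Behavior changes must be documented AND every required role must have signed.
        return bool(self.behavior_changes) and REQUIRED_SIGNOFFS <= self.signoffs


update = ModelUpdate("model-update-01", ["Yields earlier at aisle intersections"])
update.sign("safety")
print(update.ready_to_deploy())  # False
update.sign("operations")
update.sign("engineering")
print(update.ready_to_deploy())  # True
```

Requiring a non-empty summary means an update with no visible change still needs an explicit entry such as “no operator-visible behavior change,” which keeps the record honest for the people who work next to the robot.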

5) Validate HRI with field-ready experiments, not just demos

The retreat emphasized shaping the future of HRI design. In industry, shaping the future means choosing validation methods that survive real constraints.

A field-ready HRI validation plan typically includes:

- Wizard-of-Oz trials to test interaction concepts before autonomy is mature

- Shadow mode (robot observes and predicts, humans execute) to evaluate intent and recommendations

- Limited autonomy pilots with strict recovery flows

- Scale tests focused on fleet behavior, not single-robot performance
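In shadow mode, the core measurement is how often the robot's prediction matches what the human actually did, plus where the two diverged. Here's a minimal sketch, assuming both are logged as aligned sequences of action labels. The labels and the `shadow_agreement` function are hypothetical.

```python
def shadow_agreement(robot_predictions: list[str], human_actions: list[str]) -> tuple[float, list[int]]:
    """Return (agreement rate, indices where robot and human disagreed)."""
    if not human_actions or len(robot_predictions) != len(human_actions):
        raise ValueError("need two non-empty sequences of equal length")
    mismatches = [
        i for i, (predicted, actual) in enumerate(zip(robot_predictions, human_actions))
        if predicted != actual
    ]
    rate = 1 - len(mismatches) / len(human_actions)
    return rate, mismatches


predicted = ["pick_A", "pick_B", "wait", "pick_C"]
actual = ["pick_A", "pick_C", "wait", "pick_C"]
print(shadow_agreement(predicted, actual))  # (0.75, [1])
```

The mismatch indices matter more than the headline rate. They point to the specific moments worth reviewing with operators before autonomy is switched on, which is exactly the late-workflow discovery this step is meant to prevent.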

This approach prevents a common trap: investing heavily in autonomy only to discover late that the workflow is wrong.

Sector examples: where HRI design decides success or failure

The same HRI principles show up differently depending on the domain. Here are three concrete patterns.

Manufacturing: cobots that respect pace and space

On a manufacturing line, the robot’s job isn’t just “pick and place.” It must synchronize with human rhythm.

HRI success looks like:

- Fast, consistent cycle times that humans can anticipate

- Simple mode switching (setup vs production vs maintenance)

- Clear safe zones and predictable yielding behavior

- Minimal cognitive overhead during peak throughput

Failure looks like a cobot that’s technically safe but constantly interrupts flow—so operators route around it.

Healthcare: assistive robots that reduce coordination cost

In healthcare, the robot often becomes another teammate that staff must coordinate with. If it adds coordination cost, it won’t last.

HRI success looks like:

- Respectful timing (no interruptions during critical care moments)

- Clear prioritization and escalation (“I can’t enter this area; please confirm reroute”)

- Easy handoff to staff with minimal steps

- Privacy-aware behaviors (where it goes, what it records, how it signals)

The hard truth: a robot that saves 3 minutes but consumes 10 minutes of nurse attention is a net loss.
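The arithmetic behind that claim is simple but worth making explicit when comparing robots or workflows. This sketch assumes you can estimate minutes saved per task and minutes of staff attention consumed per task (handoffs, interruptions, escalations). The function name and inputs are hypothetical.

```python
def net_staff_minutes(minutes_saved: float, attention_minutes: float, tasks: int = 1) -> float:
    """Net staff time freed across `tasks` tasks; a negative result means the robot costs time."""
    if tasks < 0:
        raise ValueError("tasks must be non-negative")
    return (minutes_saved - attention_minutes) * tasks


# The example from the text: saves 3 minutes, consumes 10 minutes of nurse attention.
print(net_staff_minutes(3, 10))            # -7
print(net_staff_minutes(3, 10, tasks=20))  # -140
```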

Logistics: robots that behave like good traffic participants

In logistics, humans judge robots like drivers judge other drivers. Smoothness matters.

HRI success looks like:

- Consistent right-of-way behaviors

- Communication that works at a distance (not just up close)

- Fleet-level coordination that prevents bottlenecks

- Rapid exception handling when aisles are blocked

If workers feel they’re constantly negotiating space with machines, adoption drops—even if the robots meet their technical KPIs.

People also ask: common HRI questions from automation teams

What’s the difference between HRI and UX design?

HRI is UX plus physical behavior and safety-critical decision-making. UX focuses on screens and flows; HRI also covers motion, spacing, timing, and shared control in real environments.

When should HRI start in a robotics project?

Day one. If you wait until autonomy is “done,” you’ll end up redesigning workflows late—when changes are expensive and political.

How do you evaluate HRI quickly?

Use a mix of task success rate, time-to-recovery, intervention rate, and training time. Pair that with short operator interviews focused on moments of confusion or hesitation.

What to do next if you’re deploying AI-enabled robots in 2026

The Human-Robot Interaction Design Retreat is a reminder that the next wave of AI robotics won’t be won by models alone. It will be won by teams that make robots easy to understand, easy to correct, and easy to integrate into human systems.

If you’re planning Q1–Q2 rollouts for 2026, here are three next steps that pay off quickly:

- Run an HRI workshop with operators, safety, IT, and engineering in the same room.

- Document your top 10 “confusion moments” (where humans hesitate, override, or improvise).

- Set HRI KPIs alongside autonomy KPIs (recovery time, intervention rate, training time).
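Confusion moments are easier to rank if each one is logged with a short location or step label and the kind of event. Here's a minimal sketch, assuming a hypothetical event format of `(moment, kind)` pairs whose kinds match the three named above (hesitate, override, improvise). The labels are illustrative.

```python
from collections import Counter

CONFUSION_KINDS = {"hesitate", "override", "improvise"}


def top_confusion_moments(events, n=10):
    """Count confusion events per moment and return the n most frequent.

    `events` is an iterable of (moment, kind) pairs; kinds outside
    CONFUSION_KINDS are ignored rather than counted.
    """
    counts = Counter(moment for moment, kind in events if kind in CONFUSION_KINDS)
    return counts.most_common(n)


events = [
    ("dock 3 handoff", "hesitate"),
    ("aisle 7 merge", "override"),
    ("dock 3 handoff", "override"),
    ("dock 3 handoff", "improvise"),
    ("aisle 7 merge", "hesitate"),
    ("charger queue", "improvise"),
    ("badge scan", "idle"),  # not a confusion kind, so it is skipped
]
print(top_confusion_moments(events, n=2))  # [('dock 3 handoff', 3), ('aisle 7 merge', 2)]
```

A raw count is a starting point. In practice you may want to weight overrides above hesitations, since an override usually signals a bigger trust gap.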

The broader theme of this AI in Robotics & Automation series is practical: automation should feel like relief, not extra work. HRI design is where that promise either becomes real—or dies quietly in the pilot stage.

If your robots had to earn trust from a brand-new shift team next Monday, what would they change about how they communicate intent and handle mistakes?