Host a Robotics Fellow to accelerate AI robotics delivery—ROS 2 integration, simulation, tooling, and demos. Partially funded, low-risk collaboration.

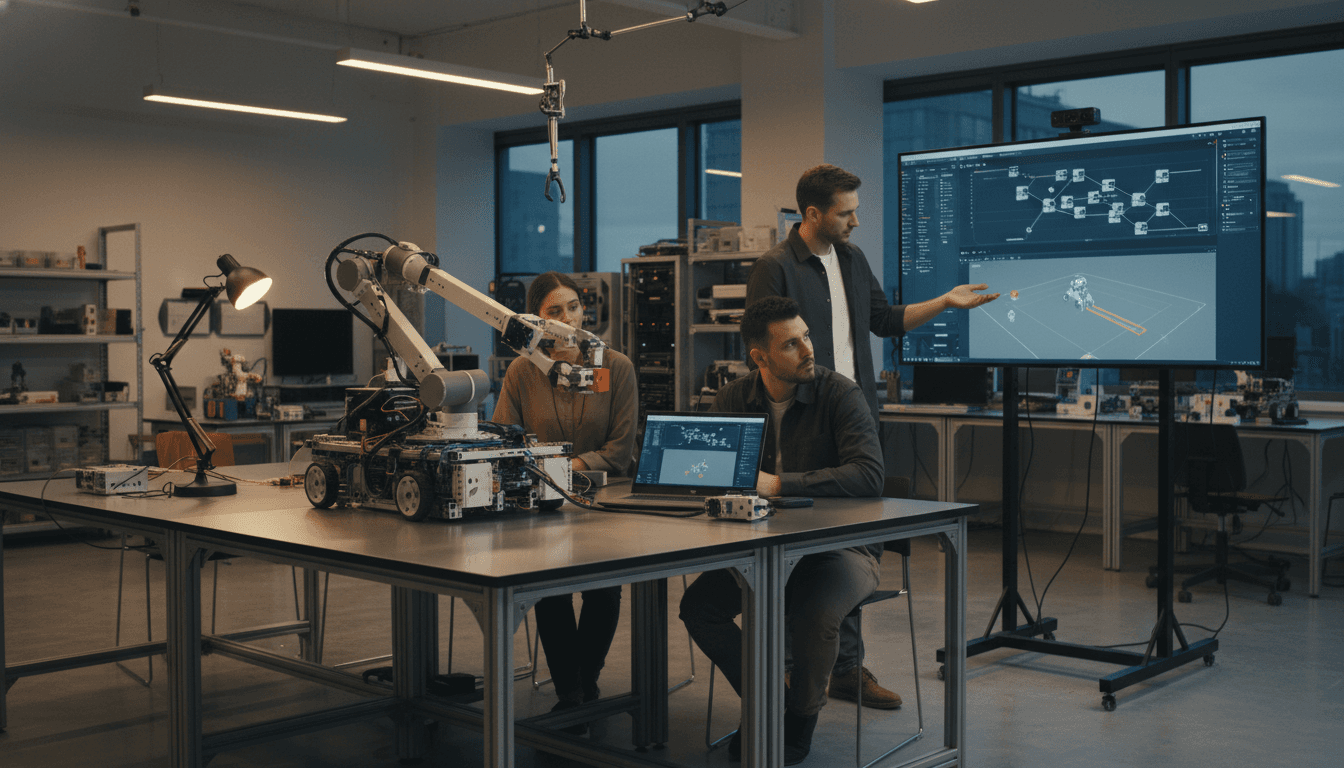

Host a Robotics Fellow to Ship AI Automation Faster

Most robotics teams don’t fail because they lack ideas—they fail because the backlog never stops growing.

If you’re building AI-enabled robots, you’re probably juggling perception experiments, ROS 2 integration, simulation validation, fleet tooling, and the unglamorous work (docs, tests, packaging) that actually makes a system deployable. Meanwhile, leadership wants “a demo in January,” customers want “a pilot next quarter,” and your senior engineers are the bottleneck for everything.

That’s why a partially funded “host a Robotics Fellow” model is interesting right now. A post in the Open Robotics community announced a Robotics Collaboration Fellowship where vetted robotics/software fellows can be hosted by robotics companies and startups, with partial funding for the first months to reduce risk. Done well, this kind of program isn’t charity or internship theater—it’s a practical way to accelerate AI in robotics and automation while keeping your core team focused on the hardest problems.

Why hosting a Robotics Fellow works (when it’s set up correctly)

A fellow is most valuable when you treat them like a short-cycle product team member, not an extra pair of hands. The whole point is to turn a backlog item into a shipped artifact: a working ROS package, a repeatable simulation benchmark, an integration demo, or a deployment-ready pipeline.

Here’s the reason this model maps so well to AI-enabled robotics:

- AI prototypes are cheap; AI products are expensive. Training a model or testing a new policy is often the easy part. The costly part is integration: data capture, labeling strategy, model serving on-device, fallbacks, observability, and the boring safety checks.

- Open-source robotics frameworks already standardize the plumbing. ROS 2, Gazebo, and supporting tooling reduce the “reinvent everything” tax—so a capable fellow can contribute quickly with well-scoped deliverables.

- Robotics progress is constrained by iteration speed. Every extra cycle of “simulate → test → fix → redeploy” compounds. Extra engineering capacity aimed at iteration tooling pays back fast.

A good fellowship engagement behaves like a de-risked micro-collaboration: you get momentum on a real deliverable, and both sides learn whether a deeper partnership (integration, services, training) makes sense.

The best projects for a fellow: high-leverage, bounded, shippable

The sweet spot is work that’s important, specific, and hard to prioritize—yet doesn’t require deep ownership of your entire stack. In practice, that usually means “one layer below the ‘AI magic’.”

1) ROS 2 packages that make AI features deployable

AI robotics teams commonly have promising code living in notebooks, internal repos, or half-finished nodes. A fellow can help turn that into something your system can actually run.

Strong candidate deliverables:

- A ROS 2 node that wraps an inference engine (GPU or CPU) with clear parameters, launch files, and performance metrics

- A ros2_control integration for a new actuator/sensor that unblocks real-world data collection

- A robust message pipeline (sync, compression, throttling) for high-rate cameras or point clouds

- A containerized build workflow for repeatable deployments across dev machines and robots

If you’re serious about AI integration, packaging and interfaces are where you win (or lose) months.
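
To make the "throttling" deliverable concrete, here is a minimal, framework-agnostic sketch of a rate throttle for a high-rate stream. The class name `RateThrottle` and its method are hypothetical, and nothing here calls ROS 2 itself; in a real system this logic would probably sit inside a node's subscription callback, using the message header stamp as input.

```python
from typing import Optional


class RateThrottle:
    """Forward at most `max_hz` messages per second and drop the rest."""

    def __init__(self, max_hz: float) -> None:
        if max_hz <= 0:
            raise ValueError("max_hz must be positive")
        # Integer nanoseconds avoid floating-point drift when comparing stamps.
        self.min_interval_ns = int(1e9 / max_hz)
        self._last_forwarded_ns: Optional[int] = None

    def should_forward(self, stamp_ns: int) -> bool:
        """Return True if the message stamped `stamp_ns` should be forwarded."""
        if (
            self._last_forwarded_ns is None
            or stamp_ns - self._last_forwarded_ns >= self.min_interval_ns
        ):
            self._last_forwarded_ns = stamp_ns
            return True
        return False


# Example: one second of a 50 Hz camera stream, throttled to 10 Hz.
throttle = RateThrottle(max_hz=10.0)
stamps_ns = [i * 20_000_000 for i in range(50)]  # 20 ms between frames
forwarded = [s for s in stamps_ns if throttle.should_forward(s)]
print(f"forwarded {len(forwarded)} of {len(stamps_ns)} frames")
```

The point of a deliverable like this is less the ten lines of logic and more the packaging around it: a parameter for the rate, a launch file, and a test that proves it behaves.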

2) Simulation and benchmarking that prevents expensive field failures

A fellow is a great fit for “prove it in sim” work—especially when your team keeps postponing it because field testing feels more urgent.

High ROI tasks include:

- Building a Gazebo scenario that mirrors your real environment (lighting, reflectivity, terrain constraints)

- Creating regression tests for navigation and manipulation behaviors

- Designing repeatable benchmark suites for perception accuracy vs. latency

The outcome you want is simple: a button you can press that tells you if today’s changes broke yesterday’s capability.
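
One way to picture that button is a small regression gate that compares today's benchmark metrics to a stored baseline. This is a sketch under assumptions: the metric names, tolerances, and the `find_regressions` helper are all hypothetical, and the numbers are made up for illustration. A real pipeline would load both dictionaries from simulation run outputs.

```python
from typing import Dict, List, NamedTuple


class MetricSpec(NamedTuple):
    higher_is_better: bool
    tolerance: float  # allowed absolute worsening before it counts as a regression


def find_regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    specs: Dict[str, MetricSpec],
) -> List[str]:
    """Return one description per metric that got worse than its tolerance allows."""
    failures = []
    for name, spec in specs.items():
        if name not in current:
            failures.append(f"{name}: missing from current run")
            continue
        delta = current[name] - baseline[name]
        # Normalize so that a positive value always means "got worse".
        worsening = -delta if spec.higher_is_better else delta
        if worsening > spec.tolerance:
            failures.append(f"{name}: {baseline[name]} -> {current[name]}")
    return failures


# Illustrative values only.
specs = {
    "nav_success_rate": MetricSpec(higher_is_better=True, tolerance=0.02),
    "mean_latency_ms": MetricSpec(higher_is_better=False, tolerance=5.0),
}
baseline = {"nav_success_rate": 0.95, "mean_latency_ms": 40.0}
current = {"nav_success_rate": 0.90, "mean_latency_ms": 42.0}

failures = find_regressions(baseline, current, specs)
for line in failures:
    print("REGRESSION", line)
```

Run nightly, a check like this turns "it felt fine in sim" into a pass/fail signal with a paper trail.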

3) Data and tooling for robot learning (the work everyone avoids)

If you’re pushing robot learning, your biggest limiter is usually not model architecture—it’s data quality and feedback loops.

A fellow can ship:

- Data logging conventions (topics, rate, compression) and “golden run” datasets

- Automatic dataset slicing (events, failure modes, edge cases)

- Labeling/annotation workflow improvements (even lightweight ones)

- Drift detection and basic monitoring dashboards for production robots

Here’s my stance: robot learning without disciplined data operations is just expensive guessing. A fellow can bring discipline fast.
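
As an illustration of automatic dataset slicing, the sketch below cuts time windows around low-confidence events and keeps only the records inside them. Everything here is an assumption for the example: records are plain `(timestamp_s, confidence)` tuples, the 0.5 threshold is arbitrary, and the helper names are hypothetical. Real logs would more likely come from rosbag files.

```python
from typing import List, Sequence, Tuple

Record = Tuple[float, float]  # (timestamp in seconds, detection confidence)
Window = Tuple[float, float]  # (start, end) in seconds, inclusive


def event_windows(event_times_s: Sequence[float], before_s: float, after_s: float) -> List[Window]:
    """Build a window around each event and merge windows that overlap."""
    windows = sorted((t - before_s, t + after_s) for t in event_times_s)
    merged: List[Window] = []
    for start, end in windows:
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def slice_records(records: Sequence[Record], windows: Sequence[Window]) -> List[Record]:
    """Keep only records whose timestamp falls inside any window."""
    return [r for r in records if any(s <= r[0] <= e for s, e in windows)]


# Illustrative log: 20 seconds at 1 Hz with three low-confidence frames.
confidences = [0.9] * 20
confidences[5], confidences[6], confidences[15] = 0.3, 0.2, 0.4
records = [(float(t), c) for t, c in enumerate(confidences)]

events = [t for t, c in records if c < 0.5]
windows = event_windows(events, before_s=2.0, after_s=1.0)
sliced = slice_records(records, windows)
print(windows, len(sliced))
```

Slices like these are what make failure review and targeted labeling tractable, because nobody has to scrub through hours of uneventful driving.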

4) Demo apps and docs that turn engineering into sales enablement

For a leads-driven robotics company, demos and documentation aren’t “nice to have.” They shorten sales cycles.

Great fellow outcomes:

- A polished demo app that shows a complete autonomy loop (perception → planning → control)

- Integration guides for partners (“here’s how to connect your system to ours”)

- Internal runbooks that reduce on-call burden

If you’re running pilots, this work pays back immediately.

How to scope a fellowship so it doesn’t become a time sink

The fastest way to waste this opportunity is vague scope. “Help us with AI” is not a project. “Deliver a ROS 2 package that runs YOLOv8 at 30 FPS on our target Jetson with monitoring and a fallback” is a project.

Use a 30-60-90 day deliverable plan

A practical structure:

- First 30 days (integration + environment): build access, compile/run, establish coding standards, define acceptance criteria

- Next 30 days (ship v1): deliver the first working artifact end-to-end

- Final 30 days (harden): tests, docs, performance profiling, CI, handoff

If you can’t define “done” in 2–3 measurable checks, the project is too fuzzy.

Write acceptance criteria like you’re buying a product

Examples that work:

- “Runs on hardware X with latency under 50 ms at 720p, publishes confidence scores, logs frames on low confidence.”

- “Gazebo scenario reproduces 5 real failure cases; pipeline runs nightly; failures create artifacts and reports.”

- “Package passes colcon test and has a README that a new engineer can follow in 30 minutes.”

These criteria keep everyone honest.
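
Criteria like the first one can be written as executable checks. The sketch below is one possible reading, not a standard: it assumes "latency under 50 ms" means the 95th percentile (nearest-rank) and that "low confidence" means below 0.5. Both thresholds and all function names are hypothetical, and the sample numbers are invented.

```python
import math
from typing import List, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the value at rank ceil(pct/100 * n) in sorted order."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100.0))
    return ordered[rank - 1]


def meets_latency_budget(latencies_ms: Sequence[float], budget_ms: float = 50.0, pct: float = 95.0) -> bool:
    """True if the chosen percentile of measured latency is under the budget."""
    return percentile(latencies_ms, pct) < budget_ms


def frames_to_log(confidences: Sequence[float], threshold: float = 0.5) -> List[int]:
    """Indices of frames whose confidence is below the threshold."""
    return [i for i, c in enumerate(confidences) if c < threshold]


# Illustrative measurements in milliseconds.
good_run = [32.0, 35.0, 31.0, 48.0, 36.0, 33.0, 34.0, 30.0, 37.0, 38.0]
bad_run = good_run[:-1] + [61.0]  # one slow frame pushes p95 over budget

print(meets_latency_budget(good_run))
print(meets_latency_budget(bad_run))
print(frames_to_log([0.9, 0.4, 0.7, 0.2]))
```

Agreeing on details like the percentile before work starts avoids an argument in week 10 about whether one slow frame counts as a failure.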

Assign a single technical owner (not a committee)

A fellow needs one person who can answer questions quickly and unblock decisions. If ownership is spread across three staff engineers, you’ll get slow replies and soft scope creep.

I’ve found the best pattern is:

- 1 technical owner (your team)

- 1 product sponsor (who cares about outcomes)

- 1 weekly review cadence (30 minutes)

That’s it.

Where this fits in “AI in Robotics & Automation” (and why it’s timely)

AI in robotics and automation has matured in a very particular way: the hype moved from “robots will learn everything” to “how do we ship reliable autonomy in messy environments?” That shift rewards teams that can integrate, test, and deploy.

A fellowship model supports exactly that:

- Open-source robotics frameworks are the backbone. ROS 2 and related tooling let contributions land in a predictable structure.

- Real-world testing needs real collaborations. Fellows working on practical deliverables force your AI stack to touch the constraints that matter: compute, sensors, safety, and uptime.

- Partnerships matter more as systems get more complex. Multi-vendor stacks (robot base + sensors + AI software + fleet orchestration) are normal. Short, bounded collaborations help you find the partners worth scaling with.

And yes, December timing is not accidental: many teams are planning Q1 roadmaps, and a partially funded start makes it easier to justify experimentation without turning it into a hiring freeze debate.

“Is this like hiring?” Common questions teams ask

No—treat it more like contracting with a built-in trial and clearer learning goals. Here are the questions I hear most.

How is a Robotics Fellow different from an intern?

An intern is primarily a learning role. A fellow should be delivery-oriented, with enough experience to ship artifacts your team relies on. The bar should be: “Would we merge their work into production?”

What’s a reasonable time to see value?

If scope is tight, you should see a working end-to-end prototype in 30–45 days. If nothing is demo-able by week 6, the project is likely over-scoped (too big) or under-specified (too vague).

What if they need a lot of hand-holding?

Then the project selection was wrong. Pick work that’s:

- bounded

- testable

- supported by existing interfaces (ROS 2 nodes, clear message types, documented APIs)

Also, make sure onboarding isn’t neglected. A fellow can’t contribute if they can’t build your workspace.

How do we protect IP while working in open source?

Decide upfront what’s open and what’s proprietary. A clean split is common:

- Open: generic tooling, ROS 2 wrappers, simulation assets without proprietary geometry

- Closed: customer data, maps, product-specific policies, deployment secrets

Clarity prevents awkward conversations later.

A practical checklist to start a fellowship collaboration next month

If you’re considering hosting a Robotics Fellow, use this as your internal readiness check:

- Pick one deliverable that matters to revenue or pilot readiness.

- Define “done” with 2–3 measurable acceptance criteria.

- Prepare a minimal onboarding kit: build instructions, robot/sim access, coding standards.

- Set a weekly demo cadence (not just status updates).

- Plan the handoff: docs, tests, and ownership transfer.

Do these five things and the odds of success jump dramatically.

The short version: extra engineering capacity only helps if it reduces cycle time. Fellowship work should shorten your “idea to robot behavior” loop.

What to do if you want to host a Robotics Fellow

The Robotics Collaboration Fellowship shared in the Open Robotics community is explicitly looking for robotics companies and robotics-focused startups with project-based work—ROS tooling, simulation, demo apps, and documentation were called out as good fits, and the first months are partially funded to reduce risk.

If you’ve got a robotics backlog and you’re trying to ship AI automation faster, don’t overthink it: pick one project that has been stuck for months, scope it to a 90-day outcome, and run the collaboration like you care about shipping.

The broader “AI in Robotics & Automation” trend is heading toward teams that can execute reliably, not just experiment loudly. Hosting a Robotics Fellow is one of the more pragmatic ways to build that execution muscle—without committing to a full hire before you’ve proven the match.

Where could your team be by the end of Q1 if one long-stalled integration was finally done and tested?