Hindsight Experience Replay turns RL failures into training signal. Learn how HER boosts sparse-reward robotics and automation used in U.S. digital services.

Hindsight Experience Replay

Sparse rewards are why most reinforcement learning (RL) projects stall out. If your robot only gets a “success” signal when it finally grasps the object just right—or your customer-service agent only gets rewarded when a conversation ends in a paid upgrade—you can run millions of attempts and still learn almost nothing.

Hindsight Experience Replay (HER) fixes that bottleneck by turning failures into useful training data. It’s one of those research ideas that sounds almost too simple: after an episode ends, you pretend the agent was trying to achieve what it actually achieved, then learn from that. For robotics and digital automation teams in the U.S., HER is a practical way to cut training time, reduce data costs, and ship systems that improve with less hand-holding.

This post breaks down how HER works, why it matters for “AI in Robotics & Automation,” and how the same learning pattern shows up in modern U.S. digital services—from warehouse robots to customer-support automation.

What Hindsight Experience Replay actually does

HER makes sparse-reward RL learn from almost every episode by rewriting goals after the fact. Instead of treating an episode as “success or useless failure,” it creates extra training examples where the goal is swapped to something the agent did reach.

Here’s the core setup:

- You have goal-conditioned reinforcement learning: the policy and value function take the current state s and a goal g.

- The environment gives a reward based on whether the goal was achieved (often 0/1, or -1/0).

- In sparse tasks, random exploration rarely hits the intended goal, so the replay buffer fills with mostly “no reward” transitions.

HER changes what goes into the replay buffer. For a trajectory that failed to reach the intended goal g, it samples one or more alternative goals g' from states the agent actually visited (often the final state). Then it recomputes the reward as if the goal was g'.

Snippet-worthy version: HER trains the agent on “what happened” as if that outcome was the goal all along.

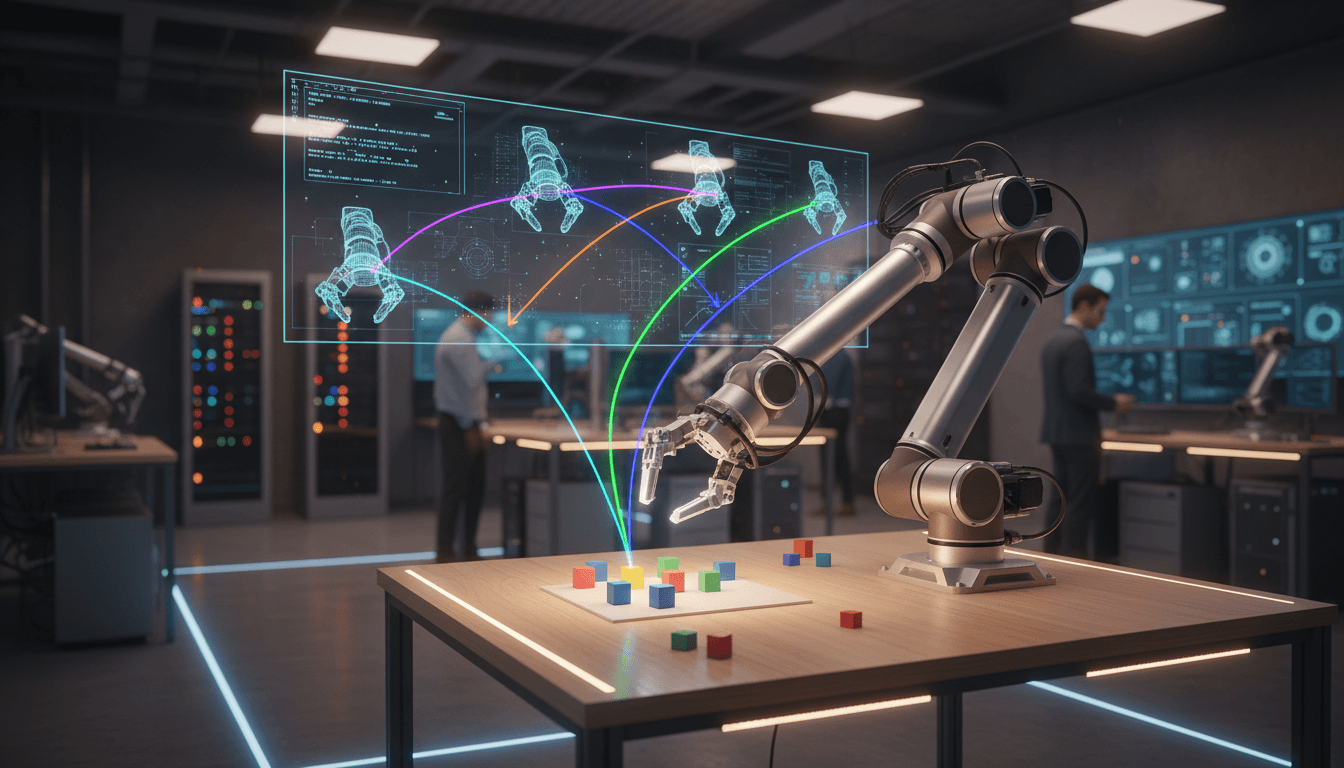

A concrete robotics example

Say a robot arm is supposed to place a cube at position A.

- Attempt #1: it drops the cube at position B.

- Classic sparse reward: reward = 0 (failure) and that episode is mostly wasted.

- With HER: you add additional transitions where the goal is re-labeled as “place the cube at position B.” Now the same experience becomes a “success,” and the policy learns behaviors that reliably reach some goals.

Over time, those learned behaviors become reusable building blocks to reach harder goals (like the original position A), especially when paired with goal sampling strategies and curriculum-like training.
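
To make the cube example concrete, here is a minimal sketch in Python. The coordinates, the 5 cm tolerance, and the function name `sparse_reward` are illustrative assumptions, not taken from any particular library or robot setup.

```python
import numpy as np

def sparse_reward(achieved, goal, tol=0.05):
    """Return 1.0 if the cube is within `tol` metres of the goal, else 0.0."""
    dist = np.linalg.norm(np.asarray(achieved) - np.asarray(goal))
    return 1.0 if dist <= tol else 0.0

# Hypothetical table coordinates (metres), assumed for illustration only.
position_a = np.array([0.60, 0.20])  # intended goal A
position_b = np.array([0.45, 0.35])  # where the cube actually landed (B)

# Same final state, two different goals.
original_reward = sparse_reward(position_b, position_a)   # goal stays A
relabeled_reward = sparse_reward(position_b, position_b)  # goal relabeled to B
```

The experience is identical in both calls. Only the goal attached to it changes, and that is enough to turn a zero-reward episode into a rewarded one.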

Why HER matters for robots and automation systems

HER is a productivity hack for RL teams: fewer environment interactions for the same skill level. In robotics, those interactions are expensive—either because you’re running physics simulation at scale or because real-world trials cost time, wear, and safety risk.

Sparse rewards are the default in the real world

In manufacturing, healthcare robotics, and logistics, rewards tend to be:

- Delayed: you only know success after a full sequence (navigate → align → grasp → place).

- Binary: either the package is in the tote or it isn’t.

- Rare: random behavior almost never completes the full chain.

HER is built for exactly this reality.

It pairs well with off-policy algorithms

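HER is typically used with off-policy RL methods like DDPG, TD3, and SAC. Relabeled transitions pair actions chosen under one goal with a different goal, so they are off-policy by construction, and these algorithms are built to learn efficiently from that kind of replayed data. That matters in automation because you often want: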

- data reuse (every trajectory helps multiple updates),

- stable learning from logged episodes,

- training that can continue while the robot is operating in controlled settings.

If you’re building “always improving” automation—warehouse picking, mobile manipulation, lab automation—HER is one of the cleanest ways to get more learning signal without redesigning rewards.

The stance: if you’re doing sparse-reward RL without HER, you’re paying extra

Most teams try to “fix” sparse rewards by engineering dense reward functions (“+0.1 if closer to target, -0.2 if bumping, +0.05 if aligned…”). That can work, but it’s fragile:

- Agents learn to exploit shaping (optimize the proxy, not the task).

- Reward design becomes a full-time job.

- Small environment changes can break the incentive structure.

HER doesn’t eliminate reward design, but it reduces your dependence on intricate shaping. You can keep the reward aligned with the actual objective and still train efficiently.

How HER works under the hood (without the math headache)

HER is “goal relabeling + replay,” and the details that matter are surprisingly operational. If you implement it poorly, you can get slow or unstable gains.

The HER loop in practical terms

A typical training loop looks like:

- Run an episode with intended goal g.

- Store transitions (s, a, s_next, g) in a replay buffer.

- Sample minibatches from replay.

- For some sampled transitions, replace g with an achieved goal g' pulled from the same episode.

- Recompute reward r(s, a, s_next, g').

- Train Q/value and policy networks on the mixture of original and relabeled data.
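
Here is a minimal sketch of the relabeling part of that loop, assuming a toy 1-D environment. The dict keys, the function names, and the -1/0 reward with a 0.05 tolerance are all illustrative assumptions. For simplicity it relabels when the episode is stored; relabeling at minibatch-sampling time is an equally valid design.

```python
import numpy as np

def sparse_reward(achieved, goal, tol=0.05):
    """0.0 when the achieved goal is within `tol` of the goal, else -1.0."""
    return 0.0 if np.linalg.norm(achieved - goal) <= tol else -1.0

def relabel_episode(episode, k=4, rng=None):
    """Return the original transitions plus k hindsight copies of each.

    `episode` is a list of dicts with keys s, a, s_next, achieved_next, g,
    where achieved_next is s_next projected into goal space (assumed layout).
    """
    if rng is None:
        rng = np.random.default_rng()
    out = []
    T = len(episode)
    for t, tr in enumerate(episode):
        # Keep the original transition with its intended goal.
        out.append({**tr, "r": sparse_reward(tr["achieved_next"], tr["g"])})
        for _ in range(k):
            # "future" strategy: use an outcome reached at step t or later.
            future_t = rng.integers(t, T)
            g_new = episode[future_t]["achieved_next"]
            # Recompute the reward against the relabeled goal.
            out.append({**tr, "g": g_new,
                        "r": sparse_reward(tr["achieved_next"], g_new)})
    return out

# Toy episode: a point moves +0.1 per step and never reaches the goal at 5.0.
goal = np.array([5.0])
episode, pos = [], np.array([0.0])
for _ in range(10):
    nxt = pos + 0.1
    episode.append({"s": pos, "a": np.array([0.1]), "s_next": nxt,
                    "achieved_next": nxt, "g": goal})
    pos = nxt

buffer = relabel_episode(episode, k=4, rng=np.random.default_rng(0))
```

Every original transition here carries the failure reward, but some relabeled copies carry the success reward, which is the signal an off-policy learner would then train on.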

Choosing the relabeled goal: “future” is often best

Common strategies include:

- Final: use the final achieved state as the goal.

- Future: sample a state the agent reaches later in the same episode.

- Episode: sample any state from the same episode.

In robotics manipulation, “future” often provides a strong training signal because it ties actions to attainable outcomes within the same behavior segment.
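
The three strategies differ only in which time step supplies the relabeled goal. A small sketch of that choice follows; the function name and the indexing convention (index t refers to the state reached by transition t) are assumptions made for illustration.

```python
import numpy as np

def sample_hindsight_index(strategy, t, episode_len, rng):
    """Pick the time index whose achieved state becomes the relabeled goal.

    t is the 0-based index of the transition being relabeled.
    """
    if strategy == "final":
        # Always the last achieved state of the episode.
        return episode_len - 1
    if strategy == "future":
        # Any step from t to the end of the episode.
        return int(rng.integers(t, episode_len))
    if strategy == "episode":
        # Any step in the episode, before or after t.
        return int(rng.integers(0, episode_len))
    raise ValueError(f"unknown strategy: {strategy}")

rng = np.random.default_rng(0)
picks = {s: sample_hindsight_index(s, t=6, episode_len=10, rng=rng)
         for s in ("final", "future", "episode")}
```

Note that "episode" can pick a state the agent had already passed before step t, which is one reason it tends to give a weaker link between the action and the goal than "future" does.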

How many HER samples per real transition?

Teams often use a ratio like 4 relabeled goals per 1 original transition. There’s no magic constant, but there is a practical rule:

- Too few relabels → you don’t solve the sparse reward problem.

- Too many relabels → you may overfit to “accidental” goals and reduce focus on the real objective.

I’ve found it’s better to start with a moderate relabel ratio (like 4:1), watch success rates, then adjust based on whether learning plateaus early.
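
It helps to translate the ratio into a share of the training data. A quick sketch of that arithmetic (the function name is an assumption):

```python
def relabeled_fraction(k):
    """Share of training samples that carry a hindsight goal at ratio k:1."""
    return k / (k + 1)

# Compare a few candidate ratios before committing to one.
for k in (1, 4, 8):
    print(f"{k}:1 ratio -> {relabeled_fraction(k):.0%} relabeled")
```

At 4:1, four out of every five training samples carry a hindsight goal. Some implementations apply this same fraction as a per-sample relabeling probability when drawing minibatches, rather than storing extra copies in the buffer.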

Where U.S. tech companies feel HER’s impact in digital services

HER isn’t just a robotics trick; it’s a blueprint for making learning systems more data-efficient in digital operations. The “hindsight” idea—relabeling outcomes to create more learning signal—shows up across U.S.-based AI products, especially in automation-heavy SaaS.

Customer support automation: learning from “partial wins”

Consider an AI agent handling customer chat:

- Intended goal: “resolve the issue and retain the customer.”

- Sparse reward: you only learn after a long conversation and maybe a churn/renewal outcome.

A HER-like framing is to treat intermediate, achieved outcomes as goals:

- “Customer provided account ID.”

- “Customer confirmed the device model.”

- “User accepted a troubleshooting step.”

If your system is goal-conditioned (explicitly or implicitly), those achieved subgoals can be used to train better policies from the same conversation logs. The broader point for digital services: stop throwing away interactions that didn’t end in the final KPI.
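
As a rough illustration of that framing, here is a sketch that turns one conversation log into several goal-labeled training examples. The log format, event names, and function name are hypothetical, and a real system would need its own definition of what counts as an achieved subgoal.

```python
def hindsight_examples(conversation, intended_goal):
    """Return (actions_so_far, goal, reward) tuples from one chat log.

    `conversation` is a list of (agent_action, achieved_event_or_None) turns
    (an assumed log format, not a standard one).
    """
    actions = [action for action, _ in conversation]
    achieved = {event for _, event in conversation if event is not None}
    # Original label: rewarded only if the intended goal was actually achieved.
    examples = [(actions, intended_goal,
                 1.0 if intended_goal in achieved else 0.0)]
    # Hindsight labels: each achieved event becomes the goal for the turns
    # that led up to it.
    for i, (_, event) in enumerate(conversation):
        if event is not None and event != intended_goal:
            examples.append((actions[: i + 1], event, 1.0))
    return examples

# Hypothetical conversation that never reaches the final KPI.
chat = [
    ("ask_for_account_id", "account_id_provided"),
    ("ask_for_device_model", "device_model_confirmed"),
    ("suggest_restart", None),
    ("offer_renewal", None),
]
examples = hindsight_examples(chat, intended_goal="issue_resolved_and_retained")
```

The conversation failed against its intended goal, yet it still yields two rewarded examples for the subgoals it did reach.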

Content workflows: goal relabeling as a training strategy

In content creation pipelines—common in U.S. marketing SaaS—outcomes are also sparse:

- A campaign is “successful” only after performance results arrive.

- But you still want the system to learn from drafts, edits, and approvals.

A HER-like approach is to relabel training examples around achieved editorial goals (“compliant tone,” “correct product name,” “fits length constraint,” “approved by reviewer”), which increases useful signal without waiting for downstream metrics.

Why this connects to the U.S. AI ecosystem

HER itself came out of OpenAI (Andrychowicz et al., 2017), and other U.S.-based research orgs helped popularize replay-based methods and practical RL ideas that industry teams could implement. The real value isn’t name-dropping; it’s this: U.S. digital platforms scale because they learn efficiently from interaction data. HER is one of the cleanest examples of turning raw interaction logs into better policies faster.

Implementation playbook: using HER in real robotics and automation projects

If you want HER to pay off, treat it like an engineering system, not a paper. Here’s what’s worked in production-minded RL teams.

1) Start with goal design that matches how you’ll evaluate

Your goal representation g should reflect what “success” means operationally.

Examples:

- Mobile robot: g = (x, y, yaw) pose within tolerance.

- Manipulation: g = object position + maybe orientation.

- Warehouse pick: g = object in bin plus a “secure grasp” condition.

If the goal is ambiguous, HER will happily train on weird proxies.
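
For the mobile robot case, "within tolerance" has one easy-to-miss detail: heading wraps around. A minimal sketch of a success check, where the tolerances and the function name are assumptions chosen for illustration:

```python
import math

def pose_reached(achieved, goal, pos_tol=0.10, yaw_tol=math.radians(10)):
    """True if (x, y, yaw) is within position and heading tolerance of goal."""
    ax, ay, ayaw = achieved
    gx, gy, gyaw = goal
    pos_err = math.hypot(ax - gx, ay - gy)
    # Wrap the heading difference into [-pi, pi] so 359 deg and 1 deg are close.
    yaw_err = math.atan2(math.sin(ayaw - gyaw), math.cos(ayaw - gyaw))
    return pos_err <= pos_tol and abs(yaw_err) <= yaw_tol

# Headings of 359 deg and 1 deg differ by 2 deg, not 358 deg.
near = pose_reached((1.0, 2.0, math.radians(359)), (1.05, 2.0, math.radians(1)))
far = pose_reached((1.0, 2.0, 0.0), (1.5, 2.0, 0.0))
```

Whatever check you use here should be the same one your evaluation uses, so relabeled successes mean the same thing as real ones.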

2) Use a reward that’s easy to recompute offline

HER requires recomputing reward after relabeling. So design the reward as a function you can compute from stored states:

- distance threshold,

- contact/grasp flags,

- object-in-region checks.

Avoid rewards that depend on hidden simulator internals you don’t log.
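
A sketch of what such a reward might look like, assuming each logged transition stores the achieved object position and a grasp flag. The argument names, the -1/0 convention, and the tolerance are illustrative assumptions.

```python
import numpy as np

def compute_reward(achieved_pos, desired_pos, grasp_flag, tol=0.05):
    """Vectorised sparse reward: 0.0 on success, -1.0 otherwise.

    Uses only quantities assumed to be logged with each transition, so it
    can be re-run offline against any relabeled goal.
    """
    achieved_pos = np.asarray(achieved_pos, dtype=float)
    desired_pos = np.asarray(desired_pos, dtype=float)
    dist = np.linalg.norm(achieved_pos - desired_pos, axis=-1)
    success = (dist <= tol) & np.asarray(grasp_flag, dtype=bool)
    return np.where(success, 0.0, -1.0)

# Hypothetical logged batch of three transitions with relabeled goals.
achieved = [[0.50, 0.20, 0.10], [0.50, 0.20, 0.10], [0.30, 0.20, 0.10]]
relabeled_goals = [[0.50, 0.20, 0.12], [0.50, 0.20, 0.12], [0.50, 0.20, 0.12]]
grasped = [True, False, True]
rewards = compute_reward(achieved, relabeled_goals, grasped)
```

Because the function takes whole batches, relabeling a minibatch is one call rather than a round trip to the simulator.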

3) Combine HER with domain randomization and safety constraints

For robotics automation, you usually train in simulation first.

- Domain randomization improves transfer to reality.

- Safety constraints (action limits, collision penalties, termination conditions) prevent learning unsafe “shortcuts.”

HER helps with learning signal; it doesn’t automatically make behaviors safe.

4) Monitor the metric that matters: success rate on the original goal

A common failure mode is celebrating training curves while the real task doesn’t improve.

Track:

- success on intended goal g (not relabeled goals),

- time-to-success (steps),

- constraint violations (collisions, drops),

- robustness across randomized starts.

If relabeled success skyrockets while intended-goal success stays flat, your goal sampling strategy is likely off.
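
One way to catch that divergence early is to report both rates side by side. The sketch below assumes each record has two boolean fields with hypothetical names, and the 0.5 gap threshold is an arbitrary starting point rather than a recommended value.

```python
def success_report(records, gap_threshold=0.5):
    """Summarise intended-goal vs. relabeled-goal success rates.

    Each record is a dict with boolean keys 'intended_success' and
    'relabeled_success' (hypothetical field names).
    """
    n = len(records)
    intended = sum(r["intended_success"] for r in records) / n
    relabeled = sum(r["relabeled_success"] for r in records) / n
    return {
        "intended_success_rate": intended,
        "relabeled_success_rate": relabeled,
        # Flag runs where hindsight success far outpaces real success.
        "warn_divergence": (relabeled - intended) > gap_threshold,
    }

# Hypothetical run: relabeled goals always succeed, the real goal rarely does.
records = ([{"intended_success": False, "relabeled_success": True}] * 9
           + [{"intended_success": True, "relabeled_success": True}])
report = success_report(records)
```

A warning here is a prompt to look at goal sampling, not proof of a bug: early in training, a large gap is expected and should shrink as the policy improves.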

5) Know when HER isn’t the right tool

HER shines when:

- goals are well-defined and state-based,

- rewards are sparse and binary-ish,

- tasks are multi-goal or can be meaningfully relabeled.

HER is less helpful when:

- the objective is not naturally goal-conditioned,

- success depends on long-horizon, non-Markov signals (e.g., user satisfaction over weeks),

- the “achieved goals” aren’t meaningful stepping stones.

In those cases, you may need hierarchical RL, preference learning, or better problem framing.

The bigger story for AI in Robotics & Automation

Hindsight Experience Replay is a reminder that data efficiency beats brute force. In 2025, compute is still expensive, robotics trials are still messy, and automation teams are still asked to do more with less. HER’s contribution is practical: it squeezes more learning out of each attempt.

For U.S. companies building robots, contact centers, and digital services, the takeaway is consistent: instrument your systems so you can relabel outcomes into training signal. Whether that’s a robot arm missing a placement target or a support agent failing to close a renewal, the interaction is rarely useless—it’s often just mislabeled.

If you’re evaluating RL for automation in manufacturing, logistics, or service robotics, start by asking: are rewards sparse, and do we have clear goals we can relabel? If yes, HER should be on your shortlist. If not, it’s a sign you need to rethink the objective, not just the algorithm.

Where do you have “failed” interactions in your operation that could become training data if you changed the label of what success meant?