Gemini Robotics motor skills signal a shift toward practical AI manipulation. See what it means for manufacturing, logistics, and healthcare automation.

Gemini Robotics: Better Motor Skills, Real ROI

Most robotics teams don’t fail because their robot can’t “think.” They fail because the robot can’t do—reliably, repeatedly, and safely.

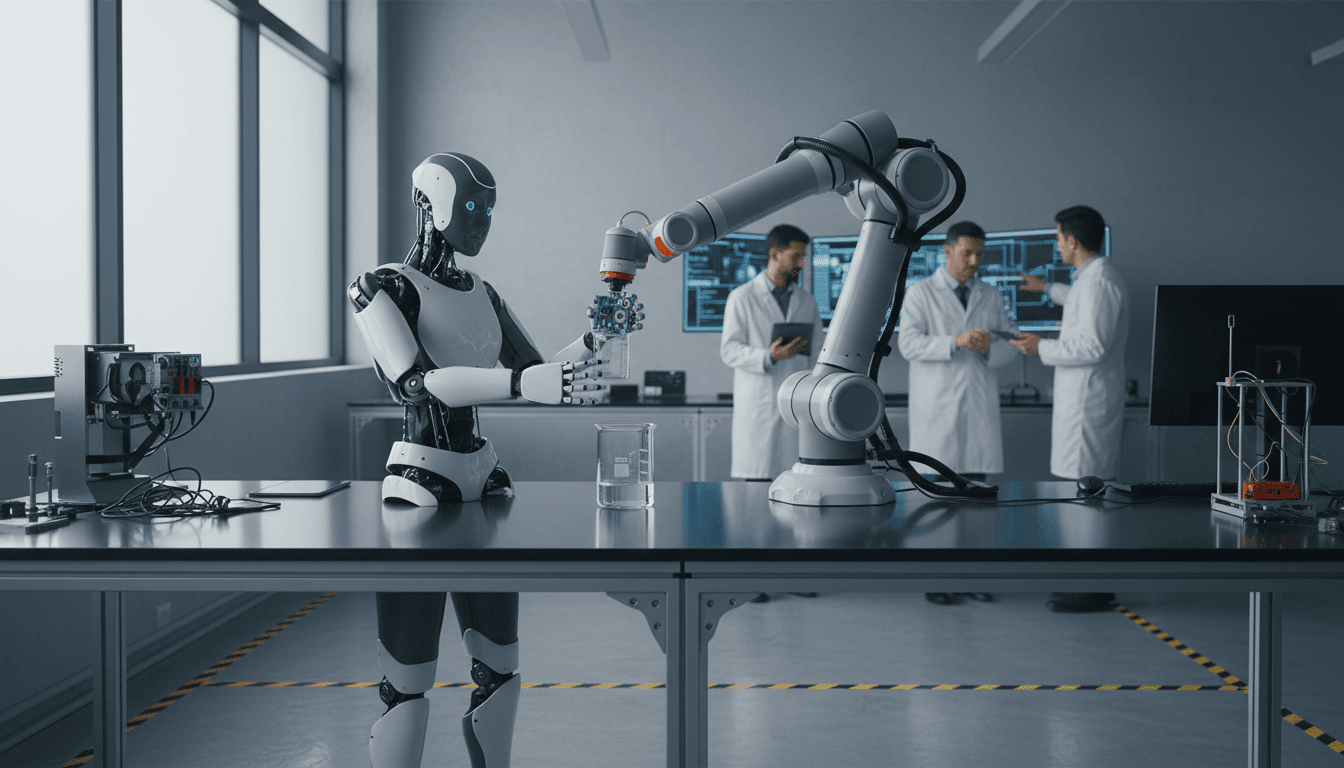

That’s why the recent attention around Google DeepMind’s Gemini Robotics (and its focus on improved motor skills via a vision-language-action approach) matters for anyone building automation in manufacturing, healthcare, or logistics. The demo videos are the flashy part. The practical story is simpler: better motor control shrinks the gap between a cool prototype and a robot that earns its keep on a real floor with real variability.

In this installment of our AI in Robotics & Automation series, I’ll translate what’s showing up in the latest robot video roundups—Gemini Robotics and a handful of other notable systems—into what it means for deployments, budgets, and the next 12–24 months of automation decisions.

Why “motor skills” are the real bottleneck in robot automation

Motor skills are the business bottleneck because they determine whether a robot can handle variation without endless fixturing, re-teaching, and downtime.

In classic industrial automation, we cheated (in a good way) by controlling the environment:

- Parts arrive in the same pose

- Lighting is fixed

- Paths are preplanned

- Tools are rigid and repeatable

That works brilliantly—until you want flexibility: mixed SKUs, smaller batches, frequent changeovers, human collaboration, or any kind of unstructured environment. At that point, motor control stops being “just robotics” and becomes an AI problem.

Here’s what motor skill improvements unlock in practice:

- Higher successful grasp rates on varied objects (less operator intervention)

- Lower cycle-time variance (predictable throughput, easier scheduling)

- Reduced need for custom fixtures (capex reduction and faster line changes)

- Safer human-robot interaction (more graceful failure modes)

If you’re evaluating AI robotics, don’t get distracted by whether it can describe what it sees. The value arrives when it can act on what it sees.

What Gemini Robotics changes: Vision-Language-Action that “thinks before moving”

Gemini Robotics is part of the shift from robots following scripted trajectories to robots executing policies conditioned on perception and instructions.

In the video roundup, Gemini Robotics 1.5 is described as a vision-language-action (VLA) model that converts visual inputs and natural-language instructions into robot motor commands. Two specific ideas in that description are worth pausing on:

1) Turning instructions into motor commands (not just plans)

Many systems can interpret a command like “pick up the grape without squishing it.” Fewer systems can produce stable motor output—grasp, force modulation, micro-adjustments—without a custom behavior tree written by an expert.

A VLA approach aims to compress that integration work. For buyers, the promise is:

- Less bespoke programming per task

- Faster redeployment across workcells

- A path to more “general” manipulation skills
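To make that input/output contract concrete, here is a minimal Python sketch of the loop a VLA policy would sit in. Everything in it is hypothetical: `Observation`, `MotorCommand`, `VLAPolicy`, and the stub policy are illustrative names, not the Gemini Robotics API, and a real policy would be a learned model rather than a hard-coded rule.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass
class Observation:
    image_ref: str                # handle to the current camera frame (hypothetical)
    joint_positions: list[float]


@dataclass
class MotorCommand:
    joint_deltas: list[float]     # small per-joint position changes
    gripper_force_n: float


class VLAPolicy(Protocol):
    """Anything that maps (observation, instruction) to a motor command."""

    def act(self, obs: Observation, instruction: str) -> MotorCommand: ...


class StubPolicy:
    """Stand-in for a learned model: nudges every joint, grips gently if asked to."""

    def act(self, obs: Observation, instruction: str) -> MotorCommand:
        force = 2.0 if "without squishing" in instruction else 10.0
        return MotorCommand([0.01] * len(obs.joint_positions), force)


def control_step(policy: VLAPolicy, obs: Observation, instruction: str,
                 max_force_n: float) -> MotorCommand:
    """One perception-to-action step, with a hard force cap applied outside the model."""
    cmd = policy.act(obs, instruction)
    cmd.gripper_force_n = min(cmd.gripper_force_n, max_force_n)
    return cmd


obs = Observation("frame_0001", [0.0, 0.5, -0.3])
cmd = control_step(StubPolicy(), obs, "pick up the grape without squishing it", max_force_n=5.0)
print(cmd)
```

The design point is the cap in `control_step`: whatever the model proposes, limits are enforced in plain code you can audit.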

2) “Thinking before taking action” and showing its process

The phrase reads like marketing, but the operational implication is important: transparent decision-making reduces commissioning risk.

When a robot fails in production, you need to answer three questions fast:

- What did it perceive?

- Why did it choose that action?

- What should we change—data, constraints, tooling, or environment?

If an AI system can expose intermediate reasoning or structured action selection (even imperfectly), you get faster debugging, safer validation, and clearer sign-off paths.

My stance: if you’re deploying AI-driven manipulation on a 2026 budget, observability is a buying criterion, not a nice-to-have. Ask vendors what you can log, replay, and audit.

The hidden theme in the video roundup: embodiment is diversifying

The near-term market isn’t “one robot to rule them all.” It’s specialized bodies connected by shared AI skills.

The roundup spans:

- Humanoids that are claimed to be entering mass production

- Quadrupeds improving stability and recovery

- Mobile manipulators emphasizing intuitive interaction

- Soft robots that climb using origami-inspired mechanisms

- Lightweight haptic exosuits designed for rapid don/doff

This matters because it signals a practical direction for AI in robotics and automation: skill transfer across embodiments.

If a policy learned on one arm can accelerate learning on another (different reach, payload, end-effector), you lower total training cost. In factories, that could mean standardized AI “skills” across:

- Small cobots for light assembly

- Heavier arms for case packing

- Mobile manipulators for kitting

In healthcare, it could mean shared manipulation primitives across:

- Assistive robots in patient rooms

- Pharmacy automation arms

- Materials handling robots in sterile supply

The value is consistency: one operational model for monitoring, safety, and updates—even if the bodies differ.

Where improved motor skills pay off first (manufacturing, logistics, healthcare)

The first ROI wins will come from tasks that are already valuable but currently brittle: pick-and-place with variability, handling delicate items, and recovery from errors.

Manufacturing: flexible handling beats perfect precision

Factories don’t need robots that can do everything. They need robots that can do a handful of tasks across many SKUs.

High-payoff targets:

- End-of-line case packing with mixed products and changing carton sizes

- Machine tending where part presentation varies slightly

- Kitting and line feeding with imperfect bins and human handoffs

What improves with better motor skills:

- Fewer engineered part trays

- Less manual “rescue” work

- Shorter changeover windows

Logistics: “messy” environments are the point

Warehouses are built for flow, not perfect poses. Even a small boost in manipulation robustness can shift economics.

Best-fit scenarios:

- Each picking (single-unit picks) across varied items

- Returns processing (the most unstructured corner of many operations)

- Depalletizing loads with crushed boxes and random gaps

If you’re running peak-season playbooks (and December is when this is most obvious), flexibility is one of the few ways to add capacity without hiring spikes. Better motor control supports that flexibility.

Healthcare: safety + gentleness are non-negotiable

Healthcare manipulation is often lower speed but higher consequence. Handling soft packaging, small tools, or patient-adjacent tasks requires force control and graceful failure.

Near-term opportunities:

- Supply room automation (restocking, picking consumables)

- Pharmacy and lab workflows (packaging, sorting)

- Assistive handling where the robot must yield safely

The win isn’t “replace nurses.” It’s reducing the time clinicians spend hunting supplies and moving materials.

A practical checklist: how to evaluate VLA robotics for real deployment

You can tell quickly whether an AI robotics approach is production-minded by how it handles constraints, failure, and measurement.

Here’s what I’d ask in an RFP or a pilot kickoff.

1) What’s the failure behavior?

- Does it stop safely when uncertain?

- Can it back out and retry?

- Does it escalate to a human with a clear prompt (“object slipped,” “occluded,” “unexpected contact”)?
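Those three questions map onto a small decision rule. Here is a minimal Python sketch of one, assuming the system reports a confidence score and a failure reason per attempt. The names (`AttemptResult`, `decide_next_step`) and the thresholds are illustrative assumptions, not any vendor's API.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    PROCEED = "proceed"
    RETRY = "retry"
    ESCALATE = "escalate"


@dataclass
class AttemptResult:
    confidence: float          # self-reported confidence in [0, 1] (assumed available)
    succeeded: bool
    failure_reason: str = ""   # e.g. "object slipped", "occluded"


def decide_next_step(result: AttemptResult, attempts_so_far: int,
                     min_confidence: float = 0.6,
                     max_attempts: int = 3) -> tuple[Outcome, str]:
    """Return the next step and a message for the operator (empty on success)."""
    if result.succeeded:
        return Outcome.PROCEED, ""
    # Stop and ask for help when uncertain or when retries are used up.
    if result.confidence < min_confidence or attempts_so_far >= max_attempts:
        reason = result.failure_reason or "low confidence"
        return Outcome.ESCALATE, f"Operator needed: {reason} (attempt {attempts_so_far})"
    # Otherwise back out and try again, carrying the reason forward for the log.
    return Outcome.RETRY, result.failure_reason


print(decide_next_step(AttemptResult(0.9, False, "object slipped"), attempts_so_far=1))
print(decide_next_step(AttemptResult(0.3, False, "occluded"), attempts_so_far=1))
```

In a pilot, I'd want the vendor to show where the equivalent of `min_confidence` and `max_attempts` lives and who is allowed to change it.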

2) What’s the observability stack?

- Can you record camera streams, action commands, and state estimates?

- Can you replay episodes offline?

- Can you label and retrain from your own failures?
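As a rough picture of what "record and replay" can mean at the data level, here is a small Python sketch that logs one JSON line per control step and reads an episode back offline. The `StepRecord` fields and the JSON Lines layout are assumptions for illustration. Real stacks will store more (and store camera frames separately).

```python
import io
import json
from dataclasses import dataclass, asdict


@dataclass
class StepRecord:
    episode_id: str
    step: int
    frame_ref: str               # pointer to the stored camera frame, not the pixels
    action: list[float]          # commanded joint or end-effector deltas
    state_estimate: list[float]
    label: str = ""              # filled in later during failure review


def write_steps(stream, records):
    """Append one JSON object per line so logs can be streamed and tailed."""
    for rec in records:
        stream.write(json.dumps(asdict(rec)) + "\n")


def replay_episode(stream, episode_id):
    """Yield the recorded steps of one episode, in the order they were logged."""
    for line in stream:
        if not line.strip():
            continue
        data = json.loads(line)
        if data["episode_id"] == episode_id:
            yield StepRecord(**data)


log = io.StringIO()  # stands in for a file on disk
write_steps(log, [
    StepRecord("ep-1", 0, "frames/ep-1/0.png", [0.01, 0.0], [0.10, 0.20]),
    StepRecord("ep-2", 0, "frames/ep-2/0.png", [0.00, 0.02], [0.30, 0.10]),
    StepRecord("ep-1", 1, "frames/ep-1/1.png", [0.02, 0.0], [0.11, 0.20], label="object slipped"),
])
log.seek(0)
for rec in replay_episode(log, "ep-1"):
    print(rec.step, rec.action, rec.label)
```

The `label` field is what closes the loop: once failures are tagged, the same records can feed retraining.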

3) What are the constraints you can set?

- No-go zones

- Force/torque limits

- Speed limits near humans

- Tooling constraints (gripper open width, vacuum limits)

A system that can’t express constraints cleanly becomes a safety and QA nightmare.
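One way to picture "expressing constraints cleanly" is as plain data that every proposed action is checked against. The Python sketch below assumes exactly that. The class names are hypothetical and the default limits are placeholders, not safety guidance. Real limits come from your risk assessment.

```python
from dataclasses import dataclass, field

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned no-go volume in the cell's base frame (metres)."""
    lo: Point
    hi: Point

    def contains(self, p: Point) -> bool:
        return all(l <= x <= h for l, x, h in zip(self.lo, p, self.hi))


@dataclass
class CellConstraints:
    no_go_zones: list[Box] = field(default_factory=list)
    max_force_n: float = 30.0                # placeholder value
    max_speed_mps: float = 1.0               # placeholder value
    max_speed_near_human_mps: float = 0.25   # placeholder value
    max_gripper_width_m: float = 0.08        # placeholder value


@dataclass
class ProposedAction:
    target_xyz: Point
    expected_force_n: float
    speed_mps: float
    gripper_width_m: float


def violations(action: ProposedAction, c: CellConstraints, human_nearby: bool) -> list[str]:
    """Return every constraint the action would break (empty list means allowed)."""
    found = []
    if any(zone.contains(action.target_xyz) for zone in c.no_go_zones):
        found.append("target inside no-go zone")
    if action.expected_force_n > c.max_force_n:
        found.append("force limit exceeded")
    speed_cap = c.max_speed_near_human_mps if human_nearby else c.max_speed_mps
    if action.speed_mps > speed_cap:
        found.append("speed limit exceeded")
    if action.gripper_width_m > c.max_gripper_width_m:
        found.append("gripper width exceeded")
    return found


limits = CellConstraints(no_go_zones=[Box((0.0, 0.0, 0.0), (0.2, 0.2, 0.5))])
action = ProposedAction((0.1, 0.1, 0.1), expected_force_n=12.0, speed_mps=0.5, gripper_width_m=0.05)
print(violations(action, limits, human_nearby=True))
```

Keeping constraints as data rather than buried in model behavior is what makes them reviewable by safety and QA.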

4) How much “environment fixing” is still required?

VLA systems reduce fixturing needs, but they don’t eliminate physics.

Ask:

- What lighting range is supported?

- How much background clutter is acceptable?

- What object reflectivity or transparency breaks it?

5) What’s the unit economics?

Don’t accept vague answers. Get numbers:

- Expected cycle time range (p10/p50/p90)

- Success rate with your object set

- Interventions per hour

- Training and commissioning days per cell
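The first three of those numbers are easy to compute from pilot logs. Here is a minimal Python sketch, assuming each attempt is logged with a cycle time, a success flag, and an intervention flag. The `Attempt` record and the nearest-rank percentile method are my own choices for illustration. Other percentile definitions give slightly different values.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    cycle_time_s: float
    succeeded: bool
    needed_intervention: bool


def percentile(values: list[float], p: int) -> float:
    """Nearest-rank percentile for an integer p in 1..100."""
    ordered = sorted(values)
    rank = max(1, (p * len(ordered) + 99) // 100)  # integer ceiling of p*n/100
    return ordered[rank - 1]


def summarize(attempts: list[Attempt], hours_observed: float) -> dict[str, float]:
    times = [a.cycle_time_s for a in attempts]
    return {
        "p10_s": percentile(times, 10),
        "p50_s": percentile(times, 50),
        "p90_s": percentile(times, 90),
        "success_rate": sum(a.succeeded for a in attempts) / len(attempts),
        "interventions_per_hour": sum(a.needed_intervention for a in attempts) / hours_observed,
    }


# Ten made-up attempts: cycle times of 1..10 s, two failures that each needed a rescue.
attempts = [Attempt(float(t), t not in (4, 9), t in (4, 9)) for t in range(1, 11)]
print(summarize(attempts, hours_observed=0.5))
```

Reporting p90 alongside p50 matters because scheduling is driven by the slow cycles, not the typical ones.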

The uncomfortable truth: demos aren’t the hard part—operations are

The biggest risk in AI-driven robotics is operational drift: the world changes, the robot’s performance slowly degrades, and nobody notices until throughput collapses.

If you’re serious about AI in robotics & automation, plan for operations from day one:

- Weekly review of failed episodes

- Continuous data collection from “near misses”

- A clear update process (what changes require re-validation?)

- A fallback mode that keeps the line running

This is where “robots that think before acting” becomes more than a tagline. It’s about building systems you can monitor and improve without shutting everything down.
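A simple version of that monitoring is a rolling success rate compared against the rate measured at commissioning. The Python sketch below assumes that approach. `DriftMonitor`, the window size, and the tolerance are illustrative choices, and a production system would likely track more signals than success alone.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling success rate falls below baseline minus tolerance."""

    def __init__(self, baseline_success_rate: float, window: int = 200,
                 tolerance: float = 0.05):
        self.baseline = baseline_success_rate
        self.tolerance = tolerance
        self._recent = deque(maxlen=window)  # oldest results drop off automatically

    def record(self, succeeded: bool) -> None:
        self._recent.append(succeeded)

    def rolling_rate(self):
        """Success rate over the current window, or None before any data arrives."""
        if not self._recent:
            return None
        return sum(self._recent) / len(self._recent)

    def drifting(self) -> bool:
        # Wait for a full window so a few early failures do not trigger an alarm.
        if len(self._recent) < self._recent.maxlen:
            return False
        return self.rolling_rate() < self.baseline - self.tolerance


monitor = DriftMonitor(baseline_success_rate=0.95, window=10, tolerance=0.05)
for _ in range(10):
    monitor.record(True)
print(monitor.drifting())   # full window of successes
monitor.record(False)
monitor.record(False)
print(monitor.rolling_rate(), monitor.drifting())
```

The weekly review of failed episodes is where an alert like this gets turned into a decision: retrain, adjust constraints, or fix the environment.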

What to do next if you’re exploring AI robotics in 2026 planning

If you’re building your 2026 automation roadmap right now (which many teams do in December), use the momentum around Gemini Robotics-style motor improvements as a filter:

- Pick one workflow with high labor pain and moderate variability (not the hardest problem you have).

- Run a 6–10 week pilot with success metrics that include interventions/hour and p90 cycle time, not just average speed.

- Require observability and replay as deliverables.

- Design the human-in-the-loop process (who rescues, how often, and how the system learns from it).

This is also the right time to offer your stakeholders a concrete next step: a scoped assessment of candidate tasks, sensor stack, and safety constraints, before anyone buys hardware.

Better robot motor skills aren’t a hype cycle detail. They’re the difference between “we tried robotics” and “we scaled robotics.” What’s the one task in your operation where a 20% reduction in manual interventions would pay for the pilot within a quarter?
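One rough way to answer that question is a back-of-the-envelope payback calculation. The Python sketch below is illustrative only: the function name and every input number are made-up placeholders, so swap in your own pilot cost, intervention counts, and labor rate.

```python
def payback_weeks(pilot_cost: float,
                  interventions_per_week: float,
                  reduction_fraction: float,
                  minutes_per_intervention: float,
                  labor_rate_per_hour: float) -> float:
    """Weeks until labor saved from fewer interventions covers the pilot cost."""
    saved_hours = interventions_per_week * reduction_fraction * minutes_per_intervention / 60
    weekly_savings = saved_hours * labor_rate_per_hour
    if weekly_savings <= 0:
        return float("inf")  # no savings means the pilot never pays back
    return pilot_cost / weekly_savings


# Placeholder inputs: 1,500 interventions a week, 4 minutes each, 20% reduction.
weeks = payback_weeks(pilot_cost=6000, interventions_per_week=1500,
                      reduction_fraction=0.20, minutes_per_intervention=4,
                      labor_rate_per_hour=30)
print(f"Payback in about {weeks:.1f} weeks; within a quarter: {weeks <= 13}")
```

This counts only rescue labor. Throughput gains and avoided downtime would shorten the payback, and integration or maintenance costs would lengthen it.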