Evolved policy gradients make reinforcement learning more stable—critical for robotics and SaaS automation. Learn where it fits and how to apply it.

Evolved Policy Gradients: Smarter RL for Automation

Most teams trying reinforcement learning for real-world automation hit the same wall: the algorithm works in a demo, then collapses under messy reality—noisy sensors, shifting demand, safety limits, and “you can’t just try random things in production.” That gap between lab success and operational reliability is where policy gradient methods either shine… or disappoint.

OpenAI’s “Evolved Policy Gradients” (EPG) points in a pragmatic direction: stop treating the policy gradient update rule as sacred. Instead, treat it like engineering, something you can search over, test, and improve. The idea is well established in modern RL research: use automated discovery (in EPG’s case, evolution strategies searching over a learned loss function) to find better update rules than the default, hand-designed ones.

This matters directly to the “AI in Robotics & Automation” conversation in the U.S. because many of the highest-ROI automation problems—warehouse picking, dynamic routing, call-center workflows, fraud interventions, energy optimization—share the same requirement: learn good actions from feedback without breaking the system. Policy gradient improvements aren’t academic polish; they can be the difference between an automation pilot and an automation platform.

What “evolved policy gradients” actually changes

Answer first: Evolved policy gradients aim to improve how an RL policy learns by automatically discovering update rules (or combinations of existing ones) that train faster, more stably, or with fewer samples.

Traditional policy gradient RL uses a fairly standard recipe: collect trajectories, estimate an advantage, then update the policy parameters in the direction that increases expected reward. Over the years, the community added stabilizers—clipped objectives, value-function baselines, entropy bonuses, normalization tricks, learning rate schedules. Most of these were designed by researchers through iteration and intuition.
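That standard recipe fits in a few lines. The sketch below is a minimal illustration, not a production algorithm. It assumes a state-free softmax policy over discrete actions (a bandit) and uses the batch-mean reward as the baseline. The function names are hypothetical. Real systems condition the policy on state and usually learn a value function as the baseline.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D array of logits."""
    z = np.exp(logits - logits.max())
    return z / z.sum()


def policy_gradient_step(theta, actions, rewards, lr=0.1):
    """One REINFORCE-style update for a state-free softmax policy.

    theta:   logits, one per discrete action (the policy parameters)
    actions: index of the action taken in each sampled episode
    rewards: reward observed after each of those actions
    """
    theta = np.asarray(theta, dtype=float)
    probs = softmax(theta)
    rewards = np.asarray(rewards, dtype=float)
    # Advantage: how much better than the batch average each outcome was.
    # The batch mean is the simplest possible baseline.
    advantages = rewards - rewards.mean()
    grad = np.zeros_like(theta)
    for a, adv in zip(actions, advantages):
        # d log softmax(theta)[a] / d theta = one_hot(a) - probs
        one_hot = np.zeros_like(theta)
        one_hot[a] = 1.0
        grad += adv * (one_hot - probs)
    grad /= len(actions)
    # Gradient ascent: nudge the logits toward higher expected reward.
    return theta + lr * grad


theta = np.zeros(3)  # 3 actions, initially uniform
new_theta = policy_gradient_step(theta, actions=[0, 1, 2, 0], rewards=[1, 0, 0, 1])
print(softmax(new_theta))  # action 0 is now slightly more likely
```

Every stabilizer mentioned above (clipping, entropy bonuses, normalization) is a modification of how `grad` is computed or applied in a loop like this one.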

EPG-style research asks a blunt question: what if we search the space of possible update rules instead? That search might combine components like:

- Different ways to compute or normalize advantages

- Different loss terms (or weights) on policy loss, value loss, entropy

- Different clipping or trust-region-like constraints

- Different gradient transformations (e.g., scaling, momentum-like behavior)

- Different schedules that react to training signals rather than time

The key shift is mindset: the optimizer becomes a discoverable object. If your target is a robust controller for automation, “discovering” an update rule can be more productive than debating hyperparameters for weeks.
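One way to make the update rule a searchable object is to express it as a small configuration whose fields map to the components listed above. This is a simplified stand-in. EPG evolves the parameters of a learned loss function rather than flipping a handful of switches. The `Recipe` fields and `surrogate_loss` helper are hypothetical. The clipping branch follows PPO’s clipped surrogate objective, used here as one familiar ingredient.

```python
from dataclasses import dataclass
from typing import Optional

import numpy as np


@dataclass(frozen=True)
class Recipe:
    """Hypothetical knobs that together define one policy-gradient update rule."""
    normalize_advantages: bool = True
    clip_eps: Optional[float] = 0.2  # None disables PPO-style clipping
    value_coef: float = 0.5
    entropy_coef: float = 0.01


def surrogate_loss(recipe, ratio, advantages, values, returns, entropy):
    """Scalar loss to *minimize* for one batch under a given recipe.

    ratio:      pi_new(a|s) / pi_old(a|s) for each sampled action
    advantages: advantage estimate for each sampled action
    values:     value-function predictions
    returns:    empirical returns (value-function targets)
    entropy:    policy entropy at each sampled state
    """
    adv = np.asarray(advantages, dtype=float)
    if recipe.normalize_advantages:
        adv = (adv - adv.mean()) / (adv.std() + 1e-8)
    ratio = np.asarray(ratio, dtype=float)
    unclipped = ratio * adv
    if recipe.clip_eps is None:
        policy_obj = unclipped.mean()
    else:
        clipped = np.clip(ratio, 1 - recipe.clip_eps, 1 + recipe.clip_eps) * adv
        # Pessimistic (minimum) bound, as in PPO's clipped objective.
        policy_obj = np.minimum(unclipped, clipped).mean()
    value_loss = np.mean((np.asarray(values, dtype=float) - np.asarray(returns, dtype=float)) ** 2)
    # Maximize the policy objective and entropy, minimize value error.
    return -policy_obj + recipe.value_coef * value_loss - recipe.entropy_coef * np.mean(entropy)


batch = dict(ratio=[1.5, 0.7], advantages=[1.0, -1.0],
             values=[0.2, 0.1], returns=[0.5, 0.0], entropy=[0.9, 0.8])
for recipe in (Recipe(), Recipe(clip_eps=None), Recipe(normalize_advantages=False)):
    print(recipe, surrogate_loss(recipe, **batch))
```

Once the rule is data, "which update rule is best?" becomes a search problem over `Recipe` values instead of a debate.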

Why this is a big deal for robotics and automation

Robotics and automation systems punish instability. A robot arm that thrashes for a few minutes can damage equipment. A workflow agent that “explores” too aggressively can tank customer experience. A routing optimizer that oscillates can spike labor costs.

Policy gradient instability typically shows up as:

- High variance gradients (learning jumps around)

- Policy collapse (agent becomes overly deterministic too early)

- Reward hacking (agent finds loopholes that violate intent)

- Non-stationarity (the world changes, so an update that helped yesterday can hurt today)

Evolved approaches are compelling because they’re often searching for training dynamics that are calmer and more repeatable, not just higher peak scores.
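EPG’s outer loop is an evolution-strategies search. The sketch below is heavily simplified. Instead of evolving a loss network, it evolves a single scalar (the log learning rate) for a toy bandit learner. Each candidate is scored by mean final performance minus a penalty on the spread across seeds, so “calm and repeatable” is literally part of the objective. The bandit, penalty weight, ES settings, and all function names are hypothetical.

```python
import numpy as np


def train_run(log_lr: float, seed: int, steps: int = 100) -> float:
    """Toy inner loop: REINFORCE on a 2-armed Bernoulli bandit.

    Arm 1 pays off 80% of the time, arm 0 20% (illustrative numbers).
    Returns the final probability the policy assigns to the better arm.
    """
    rng = np.random.default_rng(seed)
    success = np.array([0.2, 0.8])
    theta = np.zeros(2)
    lr = float(np.exp(log_lr))
    for _ in range(steps):
        probs = np.exp(theta - theta.max())
        probs /= probs.sum()
        a = int(rng.random() < probs[1])       # sample an arm
        r = float(rng.random() < success[a])   # Bernoulli reward
        grad = -probs                          # d log pi(a) / d theta ...
        grad[a] += 1.0                         # ... = one_hot(a) - probs
        theta += lr * (r - 0.5) * grad         # 0.5 = fixed baseline
    probs = np.exp(theta - theta.max())
    return float(probs[1] / probs.sum())


def fitness(log_lr: float, seeds=range(5), penalty: float = 1.0) -> float:
    """Score a candidate: mean outcome minus a penalty on spread across seeds."""
    scores = np.array([train_run(log_lr, s) for s in seeds])
    return float(scores.mean() - penalty * scores.std())


def evolve(x0: float, iterations: int = 10, pop: int = 6,
           sigma: float = 0.3, step: float = 0.2, seed: int = 0) -> float:
    """Minimal evolution-strategies search over one scalar (log learning rate)."""
    rng = np.random.default_rng(seed)
    x = x0
    for _ in range(iterations):
        eps = rng.standard_normal(pop)
        scores = np.array([fitness(x + sigma * e) for e in eps])
        # Standardize so the step size does not depend on the fitness scale.
        z = (scores - scores.mean()) / (scores.std() + 1e-8)
        # ES gradient estimate: noise directions weighted by their scores.
        x += step * float(np.mean(z * eps)) / sigma
    return x


best_log_lr = evolve(x0=np.log(0.05))
print(f"evolved learning rate ~ {np.exp(best_log_lr):.3g}")
```

In this toy, a very large learning rate can lock onto the worse arm after one unlucky early reward on some seeds, which the spread penalty punishes. That is the kind of instability a peak-score objective would miss.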

From research to U.S. digital services: where it shows up

Answer first: Better policy gradient training shows up as smarter automation inside SaaS platforms—systems that decide what to do next based on feedback loops.

A lot of people hear “reinforcement learning” and think only of robots or games. In U.S. tech and digital services, RL is often hidden behind product terms like “optimization,” “automation,” or “decisioning.” If your system chooses actions and learns from outcomes, it’s in RL territory.

Here are concrete places policy gradients matter:

Dynamic workflow automation in SaaS

Consider a customer support automation system that chooses whether to:

- Auto-resolve a ticket

- Ask a clarifying question

- Route to a specialist queue

- Offer a credit

The reward isn’t immediate. It might arrive as a later CSAT rating, churn risk reduction, or reduced handle time. Policy gradient RL is a natural fit for these delayed outcomes, but only if training is stable and safe.
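Delayed rewards are handled by credit assignment: each action is scored by the discounted sum of the rewards that follow it. The following is a minimal sketch. It assumes a single CSAT-style reward at the end of a ticket episode and a discount factor of 0.9. Both values and the action labels are illustrative.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float = 0.9) -> List[float]:
    """Return-to-go for each step: G_t = r_t + gamma * G_{t+1}.

    A reward that only arrives at the end (e.g. a CSAT score) is propagated
    back to every earlier action, shrinking by a factor of gamma per step.
    """
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Hypothetical ticket episode: the only reward is a positive CSAT at the end.
episode_actions = ["ask_clarifying_question", "offer_credit", "auto_resolve"]
episode_rewards = [0.0, 0.0, 1.0]
for action, g in zip(episode_actions, discounted_returns(episode_rewards)):
    print(f"{action}: {g:.2f}")
```

The earlier actions still receive credit, but less of it. That uncertainty about which step actually mattered is one source of the gradient variance that better update rules try to tame.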

An evolved update rule that reduces variance or improves credit assignment can translate to:

- Fewer “weird” actions during training

- Faster convergence to acceptable policies

- Less reliance on massive data volumes

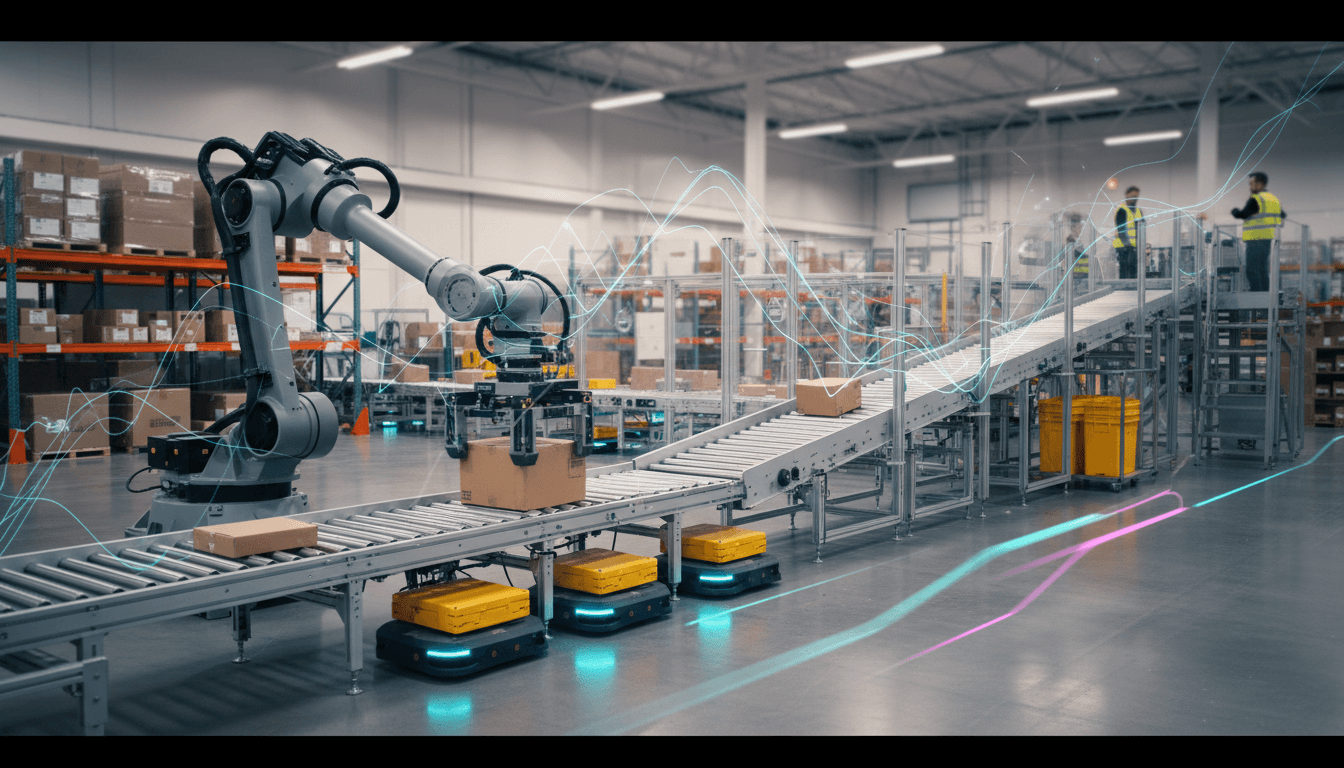

Logistics and warehouse automation

Routing, slotting, picking, replenishment, and labor planning are decision problems with constraints. RL is attractive because the system can learn policies that outperform hand-tuned heuristics—especially when demand changes seasonally.

Seasonality matters right now. Late December in the U.S. is peak season for returns and post-holiday inventory reshuffling. Automation systems face shifting priorities: speed, cost, and customer promises all move at once.

In these environments, policy gradients that train reliably under distribution shift are valuable. Evolved methods are often motivated by exactly that: not perfect performance on one benchmark day, but solid performance across conditions.

Robotics: sim-to-real and safety constraints

Robotics teams frequently train in simulation and transfer to real hardware. The bottleneck isn’t just realism; it’s that the learning algorithm must be stable under mismatch.

If an evolved update rule produces policies that are less sensitive to small modeling errors, you get:

- Fewer fragile behaviors

- More predictable fine-tuning on real robots

- Lower risk during deployment

For U.S. manufacturers and healthcare robotics providers, that translates to shorter commissioning cycles and fewer “operator babysitting” hours.

How evolved policy gradients can reduce the cost of RL (the part buyers care about)

Answer first: The business value is usually one of three things—less data needed, fewer failed training runs, or faster iteration—all of which lower the cost of building automation.

RL has a reputation for being expensive because it often is:

- It needs a lot of interaction data

- Training runs can be brittle

- Debugging is slow because the “bug” may be the learning dynamics

EPG-style improvements target those pain points. Even modest stability gains can compound when you’re training many policies across many customers, facilities, or product lines.

A practical way to think about ROI

If you’re building an “AI automation” feature in a SaaS product, you rarely train one policy once. You train:

- Multiple policies (for segments, regions, or use cases)

- Multiple versions (for experiments, regressions, and compliance)

- Multiple environments (staging, simulated, customer-specific constraints)

So the real cost is roughly: number of successful training runs you need × cost per run ÷ (1 − failure rate), because every failed run has to be repeated.
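A quick worked example makes the failure-rate term concrete. It is a sketch under one simplifying assumption: failures are independent and each failed run is retried until it succeeds. Under that assumption, a successful run takes 1 / (1 − failure rate) attempts on average, and the spend lost to failed runs is that total times the failure rate. The project size and per-run cost are made-up numbers.

```python
from typing import Tuple


def expected_training_cost(runs_needed: int, cost_per_run: float,
                           failure_rate: float) -> Tuple[float, float]:
    """Expected spend to obtain `runs_needed` successful training runs.

    Assumes independent failures with each failed run retried, so each
    success takes 1 / (1 - failure_rate) attempts on average.
    Returns (total_cost, cost_spent_on_failed_runs).
    """
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure_rate must be in [0, 1)")
    total = runs_needed * cost_per_run / (1.0 - failure_rate)
    wasted = total * failure_rate
    return total, wasted


# Hypothetical project: 40 policies to train, $500 of compute per run.
for rate in (0.5, 0.25):
    total, wasted = expected_training_cost(40, 500.0, rate)
    print(f"failure rate {rate:.0%}: total ${total:,.0f}, wasted ${wasted:,.0f}")
```

Under these assumptions, cutting the failure rate from 50% to 25% lowers expected spend by a third (from $40,000 to about $26,667) without any change in reward.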

Reducing failure rate is underrated. In my experience, one fewer week of chasing an unstable RL run is often worth more than a small bump in benchmark reward.

What to copy into your own RL stack (without doing a full research project)

Answer first: You can borrow the spirit of evolved policy gradients by systematically searching training recipes and standardizing evaluation, instead of “tuning until it looks good.”

You don’t need a bespoke evolutionary search system to benefit. Start with disciplined experimentation and a few algorithmic guardrails.

1) Define “success” as stability, not just reward

Teams often optimize mean reward and ignore variance. Don’t.

Track:

- Median and worst-case reward across seeds

- Rate of catastrophic episodes (violations, unsafe actions)

- Policy entropy over time (collapse detection)

- Sensitivity to hyperparameters (robustness)

If you’re deploying automation in digital services, add product metrics:

- Intervention rate (how often humans had to override)

- SLA violations

- Customer-visible error rate
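The training-side metrics can be rolled into one small report per sweep. The following is a minimal sketch. It assumes you already log a final evaluation reward per seed, a count of unsafe episodes, and policy entropy over training. The entropy floor of 0.1 and the sample numbers are made up for illustration.

```python
import numpy as np


def stability_report(final_reward_by_seed, unsafe_episodes, total_episodes,
                     entropy_by_step, entropy_floor=0.1):
    """Summarize a training sweep by stability, not just mean reward.

    final_reward_by_seed: final evaluation reward for each random seed
    unsafe_episodes:      count of episodes with a violation or unsafe action
    total_episodes:       total episodes evaluated
    entropy_by_step:      policy entropy logged over training
    entropy_floor:        hypothetical threshold below which we flag collapse
    """
    rewards = np.asarray(final_reward_by_seed, dtype=float)
    entropy = np.asarray(entropy_by_step, dtype=float)
    below = np.flatnonzero(entropy < entropy_floor)
    return {
        "median_reward": float(np.median(rewards)),
        "worst_seed_reward": float(rewards.min()),
        "seed_std": float(rewards.std()),
        "catastrophic_rate": unsafe_episodes / total_episodes,
        # First logged step where entropy fell below the floor (None = never).
        "collapse_step": int(below[0]) if below.size else None,
    }


report = stability_report(
    final_reward_by_seed=[0.92, 0.88, 0.95, 0.41, 0.90],  # one bad seed
    unsafe_episodes=3,
    total_episodes=1000,
    entropy_by_step=[1.1, 0.9, 0.6, 0.3, 0.08, 0.05],
)
print(report)
```

The mean of these five seeds looks respectable, but the worst-seed figure exposes the run you would not want in production. Product metrics such as intervention rate or SLA violations can be added as further ratios in the same dictionary.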

2) Use offline and constrained RL patterns where possible

Many U.S. businesses can’t “explore” online. That’s fine—use safer patterns:

- Train from logged data (offline RL), then do limited online fine-tuning

- Add action constraints (“never exceed X,” “must satisfy Y”)

- Use reward shaping that encodes business guardrails

Policy gradients can work in these setups, but they’re sensitive to how you regularize and constrain. This is exactly where improved update rules help.
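Two of those guardrails are straightforward to express in code. The following is a minimal sketch that assumes a discrete action space. A hard constraint becomes an action mask, so disallowed actions get zero probability and can never be sampled. A business guardrail becomes a reward penalty. The action names, the credit-limit rule, and the penalty size are hypothetical.

```python
import numpy as np


def masked_policy(logits, allowed):
    """Softmax over only the allowed actions; disallowed ones get probability 0.

    `allowed` is a boolean mask produced by business rules
    (e.g. a hypothetical "no more credits once the limit is reached").
    """
    logits = np.asarray(logits, dtype=float)
    allowed = np.asarray(allowed, dtype=bool)
    if not allowed.any():
        raise ValueError("no allowed actions; use a safe default instead")
    masked = np.where(allowed, logits, -np.inf)
    z = np.exp(masked - masked[allowed].max())  # exp(-inf) == 0
    return z / z.sum()


def shaped_reward(outcome_reward, sla_breached, penalty=1.0):
    """Hypothetical guardrail: subtract a fixed penalty when an SLA is breached."""
    return outcome_reward - (penalty if sla_breached else 0.0)


actions = ["auto_resolve", "ask_clarifying_question", "route_to_specialist", "offer_credit"]
probs = masked_policy([2.0, 0.5, 0.1, 3.0], allowed=[True, True, True, False])
for name, p in zip(actions, probs):
    print(f"{name}: {p:.3f}")
```

Masking enforces the rule exactly, while shaping only discourages the behavior. The penalty size therefore becomes one more knob that interacts with the update rule.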

3) Search over training recipes like a product feature

Create a small “recipe grid” that varies:

- Advantage normalization strategy

- Entropy coefficient schedule

- Clipping thresholds

- Value loss weighting

- Learning rate schedule

Then evaluate across multiple seeds and scenarios. This is the low-budget version of “evolving” your policy gradient.
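A low-budget version of that search can be a plain grid plus a ranking rule. The following is a minimal sketch. It assumes you can wrap one training-and-evaluation run in a function `train_and_score(recipe, seed, scenario)` that returns a scalar score. That function, the grid values, and the scenario names are hypothetical, and the stub here stands in for a real pipeline. Ranking by worst case first is one way to prefer boring learning curves over lucky peaks.

```python
import itertools
import statistics

# Hypothetical recipe grid; the values are illustrative, not recommendations.
GRID = {
    "advantage_norm": ["none", "batch"],
    "entropy_schedule": ["constant", "linear_decay"],
    "clip_eps": [0.1, 0.2, 0.3],
    "value_coef": [0.25, 0.5],
    "lr_schedule": ["constant", "cosine"],
}


def iter_recipes(grid):
    """Yield every combination in the grid as a dict."""
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        yield dict(zip(keys, values))


def evaluate(recipe, seeds, scenarios, train_and_score):
    """Run every (seed, scenario) pair; keep the worst and the median score."""
    scores = [train_and_score(recipe, seed, scenario)
              for seed in seeds for scenario in scenarios]
    return {"worst": min(scores), "median": statistics.median(scores)}


def rank_recipes(grid, seeds, scenarios, train_and_score):
    """Sort recipes by worst-case score first, then median score."""
    results = [(recipe, evaluate(recipe, seeds, scenarios, train_and_score))
               for recipe in iter_recipes(grid)]
    return sorted(results, key=lambda item: (item[1]["worst"], item[1]["median"]),
                  reverse=True)


def fake_train_and_score(recipe, seed, scenario):
    """Stand-in for a real training run; replace with your own pipeline."""
    score = 1.0 - abs(recipe["clip_eps"] - 0.2)
    score -= 0.05 * seed
    if scenario == "peak_season":
        score -= 0.1
    return score


ranked = rank_recipes(GRID, seeds=[0, 1, 2], scenarios=["normal", "peak_season"],
                      train_and_score=fake_train_and_score)
best_recipe, best_stats = ranked[0]
print(best_recipe, best_stats)
```

This grid has 48 recipes, so with 3 seeds and 2 scenarios it costs 288 runs. That is why the grid should stay small and the evaluation rule should be fixed before the sweep starts.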

A reliable RL system is usually the one with the most boring learning curves.

4) Bake in deployment realities early

For robotics and automation, training isn’t the finish line. Plan for:

- Monitoring drift (sensor changes, demand changes)

- Safe fallback behaviors

- Human-in-the-loop escalation

- Audit logs for why actions were chosen

The more stable your policy training, the easier these production requirements become.
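Safe fallbacks, human escalation, and audit logs can share one small wrapper around the policy. The following is a minimal sketch that assumes the policy outputs action probabilities. If the top action’s probability falls below a threshold, the wrapper escalates to a human queue instead. Every decision is recorded with its reason. The threshold, the `route_to_human` action, and the JSON log format are hypothetical choices. Treating the top probability as “confidence” is itself a heuristic.

```python
import json
import time

import numpy as np

SAFE_DEFAULT = "route_to_human"  # hypothetical fallback action


def choose_action(probs, actions, min_confidence=0.6, log=None):
    """Pick the policy's top action, or fall back when it isn't confident.

    Every decision is appended to `log` (if given) as a JSON line for auditing.
    """
    probs = np.asarray(probs, dtype=float)
    best = int(np.argmax(probs))
    confident = bool(probs[best] >= min_confidence)
    action = actions[best] if confident else SAFE_DEFAULT
    record = {
        "ts": time.time(),
        "action": action,
        "policy_choice": actions[best],
        "policy_prob": round(float(probs[best]), 4),
        "reason": "policy" if confident else "low_confidence_fallback",
    }
    if log is not None:
        log.append(json.dumps(record))
    return action


audit = []
options = ["auto_resolve", "ask_clarifying_question", "route_to_specialist"]
print(choose_action([0.7, 0.2, 0.1], options, log=audit))
print(choose_action([0.4, 0.35, 0.25], options, log=audit))
print(audit[-1])
```

A policy that trains stably tends to produce fewer borderline probabilities, so fallbacks fire less often and the audit log stays readable.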

People also ask: policy gradients in automation

Are policy gradients only for continuous control robots?

No. They’re common in continuous control, but they also apply to discrete action decisions like routing, triage, scheduling, and pricing. If you can parameterize a policy and score outcomes, policy gradients can fit.

Why not just use supervised learning?

Supervised learning copies past decisions. RL optimizes outcomes, even when the right action isn’t labeled. For automation systems where the outcome is delayed (retention, cost, safety), RL is often the more direct tool.

What’s the risk of using RL in digital services?

The main risks are unsafe exploration, feedback loops, and reward misspecification. Stable policy gradient training helps, but you still need constraints, monitoring, and human override paths.

Where this fits in the “AI in Robotics & Automation” series

This series is about the real machinery of automation: not flashy demos, but systems that work week after week in U.S. factories, warehouses, hospitals, and software operations centers. Evolved policy gradients belong in that story because they target a core blocker for RL in automation: training reliability.

If your organization is building AI-driven automation—whether it’s a robot that grasps, a scheduler that allocates labor, or a SaaS agent that handles workflows—policy gradients are one of the engines under the hood. Making that engine easier to tune and less prone to failure is how research turns into durable digital services.

The next step I’d take: audit your current decisioning or automation backlog and identify one place where outcomes are delayed and rules are brittle. That’s a strong candidate for a policy-gradient RL prototype—designed with stability metrics and constraints from day one.

What would happen if your automation system optimized for the outcome you actually care about, not the proxy you settled for last quarter?