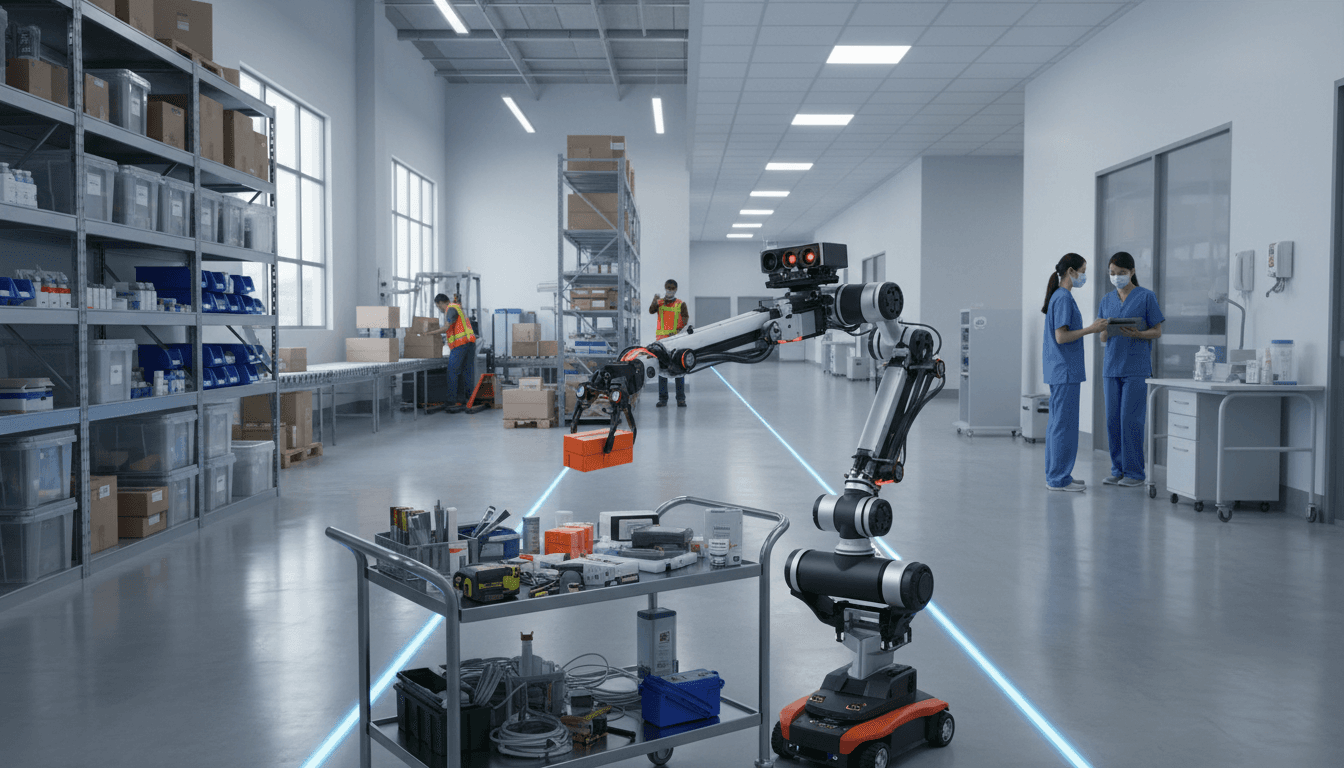

Embodied AI connects perception and action so robots can handle real-world variability. See what it means for manufacturing, healthcare, and service automation.

Embodied AI Robots: From Perception to Real Work

Robots don’t fail in factories because they can’t move. They fail because the real world refuses to sit still.

A bin’s contents shift. A reflective surface confuses a camera. A nurse interrupts a delivery run to ask the robot to help with a quick pickup. In robotics, perception and action aren’t separate steps in a pipeline—they’re a continuous loop that has to hold up under mess, noise, and surprises.

That’s why the recent conversation on AAAI’s Generations in Dialogue podcast with Professor Roberto Martín-Martín (UT Austin) lands at exactly the right moment for this AI in Robotics & Automation series. Embodied AI, the practice of building AI that learns to act in the physical world, has moved from “cool demo” territory to a practical roadmap for manufacturing automation, healthcare robotics, and service robots.

Embodied AI, explained in one sentence (and why it matters)

Embodied AI is AI that learns and makes decisions through a body—sensors, motors, and real-world feedback—rather than only through static data.

This matters because most automation bottlenecks in 2025 aren’t about computing power. They’re about robustness: the ability to perform tasks reliably when the environment changes.

Professor Martín-Martín’s research lens—spanning pick-and-place, navigation, cooking, and mobile manipulation—highlights a blunt reality: the “last mile” of robotics is not a mile at all. It’s thousands of tiny edge cases.

If you’re building or buying automation, embodied AI is how you turn those edge cases from a constant firefight into an engineering discipline.

Perception + action isn’t a feature—it’s the product

Most companies still evaluate robotics like it’s 2015:

- Can it detect objects?

- Can it grasp?

- Can it navigate?

That checklist approach is exactly how you end up with a robot that performs well in a lab and struggles on your floor.

In embodied AI, the product is the loop:

- Perceive (what’s happening right now?)

- Decide (what should I do next, given constraints?)

- Act (execute with uncertainty)

- Learn (update behavior from outcomes)
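To make the loop concrete, here is a minimal simulated sketch in Python. Everything in it is an assumption for illustration: the `LoopState` fields, the random-number stand-in for a perception stack, and the threshold-nudging rule in `learn` are hypothetical and not taken from any particular robot or from Martín-Martín’s work. The point is only that the four steps share state and run continuously.

```python
import random
from dataclasses import dataclass


@dataclass
class LoopState:
    """What the loop has learned so far (hypothetical fields)."""
    grasp_threshold: float = 0.5  # minimum confidence before attempting a grasp
    attempts: int = 0
    successes: int = 0


def perceive(rng: random.Random) -> float:
    """Stand-in for a perception stack: returns a confidence in [0, 1)."""
    return rng.random()


def decide(confidence: float, state: LoopState) -> str:
    """Attempt the grasp only when confidence clears the learned threshold."""
    return "grasp" if confidence >= state.grasp_threshold else "reposition"


def act(confidence: float, rng: random.Random) -> bool:
    """Simulated execution: higher confidence makes success more likely."""
    return rng.random() < confidence


def learn(state: LoopState, succeeded: bool) -> None:
    """Become more cautious after a failure, slightly bolder after a success."""
    state.attempts += 1
    if succeeded:
        state.successes += 1
        state.grasp_threshold = max(0.3, state.grasp_threshold - 0.01)
    else:
        state.grasp_threshold = min(0.9, state.grasp_threshold + 0.05)


def run_loop(steps: int, seed: int = 0) -> LoopState:
    """Run perceive -> decide -> act -> learn for a fixed number of steps."""
    rng = random.Random(seed)
    state = LoopState()
    for _ in range(steps):
        confidence = perceive(rng)
        if decide(confidence, state) == "grasp":
            learn(state, act(confidence, rng))
        # On "reposition" a real robot would move its camera; the simulation
        # simply perceives again on the next iteration.
    return state


if __name__ == "__main__":
    final = run_loop(steps=200, seed=1)
    print(final)
```

A real system would replace each function with a far richer component, but the shape stays the same: outcomes from `act` feed back into the state that `decide` reads.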

A robot that can grasp a screwdriver is useful. A robot that can adapt when the screwdriver is partially occluded, oily, or in an unexpected orientation is the one that delivers ROI.

Why “making robots for everyone” is the right goal

A point that comes through strongly from Martín-Martín’s trajectory—and the podcast’s generational framing—is that robotics shouldn’t be treated as a specialist craft reserved for a handful of PhDs.

The next growth wave in automation comes from lowering the integration burden. If deploying a robot still requires months of tuning, custom fixtures, or rewriting perception logic every time packaging changes, you don’t have a scalable solution—you have a bespoke project.

Here’s the stance I’ll take: robots won’t spread because they’re smarter; they’ll spread because they’re easier to deploy, teach, and trust.

That means:

- Interfaces that match how operators already think

- Policies that generalize across sites and SKUs

- Clear failure modes (and graceful recovery)

- Human-robot collaboration designed around real workflows

Embodied AI pushes all four.

The generational angle is practical, not philosophical

The “Generations in Dialogue” concept isn’t just a feel-good theme. It hints at something operationally important: different generations have different mental models of technology.

- Some users want full control and visible settings.

- Others expect systems to learn from minimal instruction.

- Many frontline workers value predictability over novelty.

Embodied AI teams that ignore these realities build robots people avoid.

Perception that survives the real world: what to prioritize

Real-world robot perception is about reliability under variability, not maximum accuracy on a benchmark.

That shifts priorities in a way many organizations miss.

1) Robustness beats precision for automation ROI

In industrial and healthcare environments, a perception system that’s 99.9% accurate in clean conditions but collapses under glare, dust, or clutter creates downtime. In practice, you often want:

- Stable performance across lighting changes

- Occlusion handling (partially hidden objects)

- Uncertainty awareness (knowing when it doesn’t know)

One of the most valuable design patterns is to make uncertainty actionable: when confidence drops, the robot changes viewpoint, slows down, asks for confirmation, or triggers a safe recovery.
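One way to picture “actionable uncertainty” is a small policy that maps perception confidence to a response. The sketch below is illustrative only: the thresholds (0.9, 0.7, 0.4), the `views_tried` counter, and the `Response` names are assumptions chosen for the example, and a real deployment would tune them per task and per safety case.

```python
from enum import Enum


class Response(Enum):
    PROCEED = "proceed"
    SLOW_DOWN = "slow_down"
    CHANGE_VIEWPOINT = "change_viewpoint"
    ASK_OPERATOR = "ask_operator"
    SAFE_RECOVERY = "safe_recovery"


def respond_to_confidence(
    confidence: float, views_tried: int = 0, max_views: int = 2
) -> Response:
    """Map a perception confidence in [0, 1] to a robot response.

    All thresholds are hypothetical placeholders.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.9:
        return Response.PROCEED
    if confidence >= 0.7:
        return Response.SLOW_DOWN
    # Below 0.7: first try to gather better evidence from another viewpoint.
    if views_tried < max_views:
        return Response.CHANGE_VIEWPOINT
    # Viewpoints exhausted: ask a person if the estimate is at least plausible,
    # otherwise fall back to a safe recovery behavior.
    if confidence >= 0.4:
        return Response.ASK_OPERATOR
    return Response.SAFE_RECOVERY


if __name__ == "__main__":
    for conf, views in [(0.95, 0), (0.8, 0), (0.5, 0), (0.5, 2), (0.2, 2)]:
        print(conf, views, respond_to_confidence(conf, views).value)
```

The design choice worth noting is the ordering: the robot spends its own effort (a new viewpoint) before it spends a human’s attention.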

2) Multimodal sensing is underrated

If you’re only using RGB cameras, you’re leaving reliability on the table. For embodied AI systems that must act, combinations like these tend to hold up better:

- RGB + depth for geometry

- Vision + force/torque for contact-rich tasks

- Tactile sensing for grasp verification

- Proprioception for detecting slips or collisions early

Perception is not “seeing.” In robotics, perception is interpreting enough of the world to act safely and successfully.

3) Data strategy matters more than model selection

Teams fixate on architectures. The bigger differentiator is whether you can:

- Capture real failure cases (not just successes)

- Label efficiently (or avoid labeling via self-supervision)

- Re-train regularly without breaking deployed behavior

Embodied AI encourages feedback-driven data collection: every run is a potential training signal.
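“Re-train regularly without breaking deployed behavior” can be enforced with a simple promotion gate: replay a stored set of cases through the candidate model and block the rollout if it fails anything the deployed model handles. The sketch below assumes results are available as plain dictionaries keyed by a case ID; the function names and the `max_regressions` parameter are hypothetical.

```python
from typing import Dict, List


def find_regressions(
    deployed: Dict[str, bool], candidate: Dict[str, bool]
) -> List[str]:
    """Return case IDs the deployed model passes but the candidate fails.

    A case missing from the candidate's results counts as a failure.
    """
    return sorted(
        case_id
        for case_id, passed in deployed.items()
        if passed and not candidate.get(case_id, False)
    )


def safe_to_promote(
    deployed: Dict[str, bool],
    candidate: Dict[str, bool],
    max_regressions: int = 0,
) -> bool:
    """Allow promotion only if regressions stay within the allowed budget."""
    return len(find_regressions(deployed, candidate)) <= max_regressions


if __name__ == "__main__":
    deployed = {"glare_bin_03": True, "oily_part_11": False, "occluded_07": True}
    candidate = {"glare_bin_03": True, "oily_part_11": True, "occluded_07": False}
    print(find_regressions(deployed, candidate))
    print(safe_to_promote(deployed, candidate))
```

In this example the candidate fixes one old failure but breaks a case that used to work, so the gate holds it back until someone looks at the regression.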

Action and learning: where embodied AI becomes automation

Action is where robotics stops being AI research and becomes business value.

Martín-Martín’s work spans low-level skills like pick-and-place and navigation up to complex tasks like cooking and mobile manipulation. That spectrum maps neatly onto what companies are trying to automate right now.

Manufacturing: from fixed automation to adaptive manipulation

Traditional industrial robots excel when:

- Parts are fixtured

- Motions are scripted

- The environment is stable

But modern manufacturing is trending toward:

- Higher SKU counts

- Faster changeovers

- More mixed-model production

Embodied AI supports this shift by enabling robots to:

- Handle variation in part presentation

- Recover from near-misses (re-grasp, re-plan)

- Learn new tasks with fewer engineering hours

If you’re evaluating AI-driven automation, ask vendors a blunt question: “What happens when the grasp fails?” A good answer includes detection, recovery, and learning—not “we’ll tune it on-site.”
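A good answer to the grasp-failure question has roughly this shape: verify the grasp, retry a bounded number of times, escalate when retries run out, and keep a record of every failure for later retraining. The sketch below is a hedged illustration; `try_grasp` is a hypothetical callback standing in for whatever execution and verification a real system uses (for example a force or tactile check), and the log format is invented for the example.

```python
from typing import Callable, Dict, List, Optional


def grasp_with_recovery(
    try_grasp: Callable[[int], bool],
    max_attempts: int = 3,
    failure_log: Optional[List[Dict[str, object]]] = None,
) -> bool:
    """Attempt a grasp, re-grasping on detected failure.

    try_grasp(attempt) should return True only when the grasp was verified.
    Each failure is appended to failure_log so it can feed retraining.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    if failure_log is None:
        failure_log = []
    for attempt in range(1, max_attempts + 1):
        if try_grasp(attempt):
            return True
        # Detection happened inside try_grasp; record what we do about it.
        next_step = "regrasp" if attempt < max_attempts else "escalate"
        failure_log.append({"attempt": attempt, "recovery": next_step})
    return False


if __name__ == "__main__":
    log: List[Dict[str, object]] = []
    # Hypothetical part that slips once and is held on the second try.
    ok = grasp_with_recovery(lambda attempt: attempt >= 2, failure_log=log)
    print(ok, log)
```

The bounded retry count matters as much as the retry itself: a robot that re-grasps forever is as costly as one that stops at the first miss.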

Logistics and warehouses: perception-action loops at scale

Warehouse robotics isn’t just navigation anymore. The high-impact tasks involve manipulation:

- Picking from cluttered bins

- Placing into variable containers

- Handling deformable packaging

Embodied AI shines when robots must combine “where am I?” with “what am I touching?” and “what should I do next?”—all under time pressure.

A practical KPI to track is intervention rate: how many human assists per 1,000 picks or per shift. Embodied AI systems should drive that number down over time.
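The arithmetic behind intervention rate is simple enough to compute from any pick log. The sketch below assumes you can count human assists and total picks over the same period; the function name and the example figures are made up for illustration.

```python
def intervention_rate_per_1000(assists: int, picks: int) -> float:
    """Human assists per 1,000 picks over the same period."""
    if picks <= 0:
        raise ValueError("picks must be positive")
    if assists < 0:
        raise ValueError("assists cannot be negative")
    return 1000.0 * assists / picks


if __name__ == "__main__":
    # Hypothetical shift: 12 assists across 4,800 picks.
    print(intervention_rate_per_1000(assists=12, picks=4800))  # 2.5
```

Tracking the same number per shift or per week, with the same definition of “assist” each time, is what makes the downward trend meaningful.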

Healthcare: safe autonomy with humans in the loop

Healthcare robotics is where hype goes to die unless you respect constraints.

Hospitals are crowded, workflows are dynamic, and safety expectations are absolute. Embodied AI helps not by making robots “independent,” but by making them situationally aware:

- Navigate around people who stop unpredictably

- Handle handoffs (deliveries, pickups) with clear signaling

- Adapt tasks when environments change (a hallway becomes blocked)

Here’s the key: the best healthcare robots are polite and reliable, not clever. Embodied AI enables that reliability by continuously integrating perception and action.

Service industries: mobile manipulation is the missing piece

Service robotics has historically been split:

- Delivery robots that move but don’t manipulate

- Manipulators that work at benches but don’t move

Mobile manipulation, an area Martín-Martín helps lead through the IEEE RAS Technical Committee on Mobile Manipulation, closes that gap. It’s the prerequisite for robots that can actually help in hotels, campuses, eldercare facilities, and commercial kitchens.

Human-robot interaction: the “trust layer” for automation

Human-robot interaction (HRI) is how automation becomes acceptable in real operations.

Even if your long-term goal is autonomy, near-term deployments succeed when humans can:

- Predict robot behavior

- Interrupt safely

- Teach new variations quickly

- Understand what the robot will do next

A useful rule: if your robot needs a manual, your deployment plan is already at risk.

What “robots for everyone” looks like in practice

If you want embodied AI to translate into real deployments (not just pilots), build for these realities:

- Teach mode: operators can demonstrate a task variant in minutes

- Explain mode: robot can communicate intent (“repositioning for a better view”)

- Fallback mode: safe degradation instead of full stop

- Metrics mode: you can measure interventions, cycle time, and failure types

These aren’t nice-to-haves. They’re the difference between a robot that’s adopted and a robot that’s tolerated.

Common questions teams ask before buying embodied AI robotics

“Do we need fully autonomous robots to get value?”

No. The fastest ROI often comes from partial autonomy with strong recovery and handoff. You can automate 70% of a workflow and still win big if the remaining 30% is smooth to manage.

“How do we know if a system will generalize to our site?”

Ask for evidence of:

- Performance across multiple environments

- Variation in lighting, clutter, and object sets

- A plan for continuous learning without downtime

Also ask what changes invalidate the model (new packaging, new carts, new floor reflectivity). The honest answers are the useful ones.

“What’s the biggest risk?”

The biggest risk is integration debt—a robot that works only with hidden assumptions (fixtures, staging, perfect labeling) that aren’t sustainable operationally.

Embodied AI reduces integration debt when it’s paired with strong deployment engineering: monitoring, retraining workflows, and human-facing controls.

Where to go next in your automation roadmap

Embodied AI is quickly becoming the practical foundation for robots that operate in manufacturing, healthcare, logistics, and service settings—because it treats perception and action as one system, not two departments.

If you’re planning 2026 automation budgets right now, I’d focus on one question: Which workflows fail today because variability is too expensive to engineer around? Those are the workflows where embodied AI tends to pay off first.

If you’re exploring embodied AI robotics for your operation, start small but measure aggressively: intervention rate, recovery success, cycle time drift, and how fast the system improves after deployment. Then scale the winners.
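Two of those measurements are easy to pin down in code once you agree on definitions. The sketch below uses definitions that are assumptions for this example, not industry standards: recovery success is the share of detected failures the robot resolved without human help, and cycle time drift is the percentage change between the mean of the first `window` cycles and the mean of the most recent `window` cycles.

```python
from statistics import mean
from typing import Optional, Sequence


def recovery_success_rate(recovered: int, failures: int) -> Optional[float]:
    """Fraction of detected failures resolved without human help.

    Returns None when there were no failures, since the rate is undefined.
    """
    if failures < 0 or recovered < 0 or recovered > failures:
        raise ValueError("need 0 <= recovered <= failures")
    if failures == 0:
        return None
    return recovered / failures


def cycle_time_drift_pct(cycle_times: Sequence[float], window: int) -> float:
    """Percent change from the first `window` cycles to the last `window`."""
    if window <= 0 or len(cycle_times) < 2 * window:
        raise ValueError("need at least 2 * window cycle times")
    baseline = mean(cycle_times[:window])
    recent = mean(cycle_times[-window:])
    return 100.0 * (recent - baseline) / baseline


if __name__ == "__main__":
    # Hypothetical cycle times in seconds.
    times = [10.0, 10.0, 10.0, 10.0, 11.0, 11.0, 11.0, 11.0]
    print(cycle_time_drift_pct(times, window=4))
    print(recovery_success_rate(recovered=18, failures=20))
```

A positive drift means cycles are getting slower, which is often the first visible sign that the environment has changed in a way the system has not yet adapted to.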

Where could a robot that learns from the floor—instead of needing the floor to be redesigned—make the biggest dent in your throughput or service levels next quarter?