Deep reinforcement learning workshops help U.S. teams ship safer automation. Learn where RL fits, how to deploy it, and what to build first.

Deep Reinforcement Learning Workshops for U.S. Automation

Most teams don’t fail at deep reinforcement learning because the math is too hard. They fail because they treat it like a magic model you “train” once—then bolt onto a robot or digital service and hope it behaves.

That’s why educational programs like OpenAI’s “Spinning Up in Deep RL” workshops matter to the U.S. tech ecosystem. The idea behind them is clear and useful: teach engineers and product teams how to build RL systems that actually work in production, especially for robotics and automation, where real-world constraints punish sloppy experimentation.

This post is part of our AI in Robotics & Automation series, and it’s written for U.S. founders, automation leaders, and engineering teams who want RL to do something practical: improve decision-making, reduce manual operations, and create smarter digital services.

What deep RL workshops actually change (and why it matters)

Deep RL workshops change one thing fast: they replace “RL as hype” with RL as an engineering discipline.

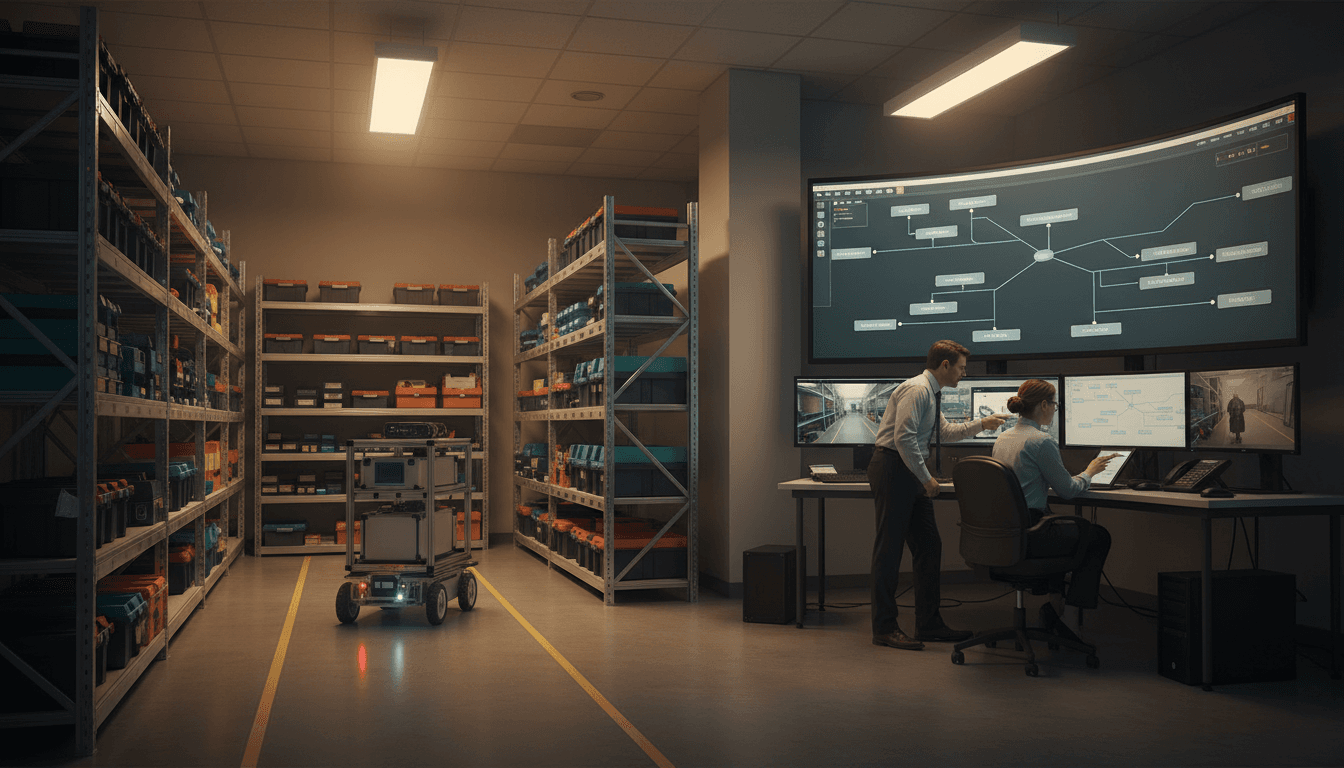

Reinforcement learning (RL) is the branch of machine learning where an agent learns by taking actions, getting feedback (rewards), and improving over time. Deep RL adds neural networks to handle complex states like images, sensor streams, or messy operational data. In automation settings—warehouse routing, robotic picking, call-center triage, fraud response, dynamic pricing—RL maps cleanly to a core business need: make a sequence of decisions under uncertainty.
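To make the act, observe, reward loop concrete, here is a minimal sketch. It assumes a made-up two-queue ticket-routing environment; `ToyQueueEnv`, `run_episode`, and `shortest_queue_policy` are hypothetical names for illustration, not part of any library or of the Spinning Up materials.

```python
import random


class ToyQueueEnv:
    """Hypothetical environment: route each arriving ticket to queue 0 or 1."""

    def __init__(self, episode_length=20, seed=0):
        self.episode_length = episode_length
        self.rng = random.Random(seed)
        self.queues = [0, 0]
        self.t = 0

    def reset(self):
        self.queues = [0, 0]
        self.t = 0
        return tuple(self.queues)  # state: current queue lengths

    def step(self, action):
        self.queues[action] += 1  # the routed ticket joins the chosen queue
        for i in (0, 1):  # each non-empty queue may finish one ticket
            if self.queues[i] > 0 and self.rng.random() < 0.5:
                self.queues[i] -= 1
        self.t += 1
        reward = -max(self.queues)  # penalize the longest backlog
        done = self.t >= self.episode_length  # fixed-horizon episode
        return tuple(self.queues), reward, done


def run_episode(env, policy):
    """Run one episode and return the total (undiscounted) reward."""
    state = env.reset()
    total, done = 0.0, False
    while not done:
        action = policy(state)
        state, reward, done = env.step(action)
        total += reward
    return total


def shortest_queue_policy(state):
    """Hand-written baseline: send the ticket to the shorter queue."""
    return 0 if state[0] <= state[1] else 1


episode_return = run_episode(ToyQueueEnv(seed=1), shortest_queue_policy)
```

A learned policy would replace `shortest_queue_policy`; keeping a hand-written baseline like this around gives every later experiment something to beat.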

The educational value isn’t just conceptual. Workshops force teams to confront the hard parts early:

- Problem framing: what is the state, action, reward, and episode?

- Stability and safety: how do you prevent the agent from “learning” unsafe shortcuts?

- Evaluation: how do you know improvement is real and not a lucky run?

- Deployment reality: what happens when the environment changes next week?
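One way to approach the evaluation question is to run the baseline and the candidate on the same set of random seeds and look at the paired differences. The sketch below is a rough illustration under stated assumptions: `paired_comparison` and `looks_real` are hypothetical helpers, and the interval uses a normal approximation that is optimistic for a small number of seeds (a t-quantile or a bootstrap would be more conservative).

```python
import math
import statistics


def paired_comparison(returns_a, returns_b, z=1.96):
    """Mean paired difference (b - a) with an approximate 95% interval.

    Assumes returns_a[i] and returns_b[i] come from the same seed.
    """
    diffs = [b - a for a, b in zip(returns_a, returns_b)]
    mean = statistics.mean(diffs)
    half_width = z * statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean, (mean - half_width, mean + half_width)


def looks_real(returns_a, returns_b):
    """True only if the whole interval for (b - a) sits above zero."""
    _, (low, _high) = paired_comparison(returns_a, returns_b)
    return low > 0


# Illustrative numbers only, not measured results.
baseline = [10, 12, 11, 13, 9, 10, 12, 11]
candidate = [12, 13, 13, 15, 10, 12, 13, 13]
consistent_gain = looks_real(baseline, candidate)
```

A single good seed never passes this check on its own, which is the point: the comparison only counts when the gain shows up across runs.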

RL isn’t a model you “finish.” It’s a feedback system you maintain.

For U.S. startups and mid-market companies, this is a practical advantage. Talent is expensive, iteration cycles are short, and customers won’t tolerate unpredictable behavior—especially in regulated industries or physical automation.

Where deep reinforcement learning fits in robotics & automation

Deep RL fits best when you’re optimizing a policy (a behavior) rather than predicting a static label.

If you’re building in the AI in Robotics & Automation space, you’ve probably already used supervised learning for perception (detect the box, find the defect) and maybe classical controls for motion. Deep RL sits in the middle layer: decision-making.

High-ROI use cases in U.S. digital services

Many “robotics” problems are really automation and operations problems—software agents deciding what happens next.

Common RL-shaped targets:

- Contact center automation: routing, escalation policies, deflection strategies, and staffing decisions

- Fraud and risk systems: when to step up verification, when to block, when to allow with monitoring

- Cloud cost optimization: scheduling batch jobs, autoscaling policies, and queue prioritization

- Logistics and last-mile operations: dynamic dispatch, slotting, and route replanning

In each case, you’re not just minimizing error—you’re balancing competing goals: cost, latency, customer satisfaction, risk, and capacity.

Physical automation: why RL is attractive (and dangerous)

For robotics, RL can learn behaviors that are difficult to hand-code—grasp strategies, navigation policies, or adaptive control in changing conditions.

But physical RL can go wrong fast:

- Training on real robots is slow, expensive, and can damage equipment.

- Agents can exploit reward loopholes in ways that are unsafe.

- Distribution shift (new floor friction, lighting, payloads) can break a learned policy.

A good workshop mindset pushes teams toward safer patterns: simulation-first, strict evaluation gates, conservative rollout, and continuous monitoring.

The “Spinning Up” approach: the practical curriculum your team needs

A strong deep RL workshop doesn’t just list algorithms. It teaches how to think and how to ship.

OpenAI’s “Spinning Up” brand is widely associated with making RL less mystical and more actionable: clear baselines, strong intuitions, and implementation details that prevent wasted months.

Here’s the curriculum that tends to make the biggest difference for teams building automation products.

Start with the right algorithms for production constraints

You don’t pick RL algorithms the way you pick a favorite programming language. You pick them based on data availability, safety constraints, and how costly exploration is.

A practical mental model:

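- Value-based methods (DQN-style): useful for discrete action spaces; can be unstable and sensitive to hyperparameters when observations are noisy or rewards are sparse.

- Policy gradient methods: optimize the policy directly, so they handle continuous control; the trade-off is noisier, higher-variance updates.

- Actor-critic families (like PPO-style approaches): pair a policy with a learned value estimate; popular because they’re relatively stable and work across many domains.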
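Part of the reason PPO-style methods have a reputation for stability is the clipped surrogate objective, which limits how far one update can move the policy away from the one that collected the data. The sketch below computes that objective in plain Python for a small batch; the function name and argument layout are assumptions for illustration, and a real implementation would use an autodiff framework and add value and entropy terms.

```python
import math


def ppo_clipped_objective(new_logp, old_logp, advantages, clip_eps=0.2):
    """Average clipped surrogate objective over a batch (to be maximized).

    new_logp / old_logp: log-probabilities of the taken actions under the
    current policy and the data-collecting policy.
    advantages: advantage estimates for the same actions.
    """
    terms = []
    for nl, ol, adv in zip(new_logp, old_logp, advantages):
        ratio = math.exp(nl - ol)  # probability ratio pi_new / pi_old
        clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Taking the minimum removes any incentive to push the ratio
        # outside [1 - clip_eps, 1 + clip_eps] in the favorable direction.
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


# A ratio of 2 with a positive advantage is credited only up to 1 + clip_eps.
capped = ppo_clipped_objective([math.log(2.0)], [0.0], [1.0])
```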

Workshops typically emphasize stability and repeatability over fancy novelty. That’s the correct bias for U.S. businesses shipping AI-powered digital services.

Reward design is product design

Reward functions aren’t a detail—they are the product spec.

If your reward says “minimize handle time,” your system will learn to end calls quickly, whether or not the customer’s issue is resolved. If your reward says “maximize throughput,” it may sacrifice quality. This is why RL education belongs in product and ops conversations, not just research.

A reward checklist I’ve found useful:

- Does the reward encode long-term outcomes (retention, rework rate, SLA breaches), not just immediate clicks?

- Is the reward robust to gaming?

- Are there hard constraints that should be modeled as constraints (or safety filters), not soft penalties?
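The checklist is easier to apply with a concrete comparison. The sketch below contrasts a naive handle-time reward with one that also scores resolution and reopen rate. The `CallOutcome` fields and every weight are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class CallOutcome:
    handle_minutes: float
    resolved: bool
    reopened_within_7d: bool  # delayed signal: did the issue come back?


def naive_reward(outcome):
    """Rewards short calls only, so it is easy to game by hanging up early."""
    return -outcome.handle_minutes


def shaped_reward(outcome, minute_cost=0.5, resolve_bonus=10.0, reopen_penalty=15.0):
    """Still charges for time, but pays for resolution and penalizes rework."""
    reward = -minute_cost * outcome.handle_minutes
    if outcome.resolved:
        reward += resolve_bonus
    if outcome.reopened_within_7d:
        reward -= reopen_penalty
    return reward


rushed = CallOutcome(handle_minutes=1.0, resolved=False, reopened_within_7d=True)
thorough = CallOutcome(handle_minutes=12.0, resolved=True, reopened_within_7d=False)

naive_prefers_rushed = naive_reward(rushed) > naive_reward(thorough)
shaped_prefers_thorough = shaped_reward(thorough) > shaped_reward(rushed)
```

Under the naive reward the rushed call scores higher; under the shaped reward the ordering flips. Writing a handful of such test cases with product and ops stakeholders is a cheap way to catch gaming before training starts.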

Offline RL and simulation: the safer on-ramp

For many U.S. companies, the best first step isn’t online RL—it’s offline reinforcement learning (learning from logged data) or simulation-based training.

If you already have event logs (support tickets, routing choices, warehouse scans), you can often build an RL-style dataset and prototype policies without risky exploration in production.
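As a rough illustration of what an “RL-style dataset” means, the sketch below turns flat event logs into per-episode transitions of the form (state, action, reward, next state, done). The field names (`episode_id`, `ts`, `state`, `action`, `reward`) are assumptions about a hypothetical log schema; real logs usually need extra work to reconstruct state and attribute delayed rewards.

```python
from collections import defaultdict


def logs_to_transitions(events):
    """Convert logged events into (state, action, reward, next_state, done) tuples.

    Events are grouped by episode and ordered by timestamp; the last event
    of each episode is marked done and has no next state.
    """
    by_episode = defaultdict(list)
    for event in events:
        by_episode[event["episode_id"]].append(event)

    transitions = []
    for episode_events in by_episode.values():
        ordered = sorted(episode_events, key=lambda e: e["ts"])
        for i, event in enumerate(ordered):
            is_last = i == len(ordered) - 1
            next_state = None if is_last else ordered[i + 1]["state"]
            transitions.append(
                (event["state"], event["action"], event["reward"], next_state, is_last)
            )
    return transitions


# Illustrative log rows for one support ticket, deliberately out of order.
example_logs = [
    {"episode_id": "t1", "ts": 2, "state": "tier1", "action": "escalate", "reward": 1.0},
    {"episode_id": "t1", "ts": 1, "state": "new", "action": "route_tier1", "reward": 0.0},
]
example_transitions = logs_to_transitions(example_logs)
```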

For robotics teams, simulation helps you iterate quickly, generate diverse scenarios, and reduce real-world wear-and-tear. The workshop value here is learning where sim helps—and where it lies.
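One common way to get diverse scenarios out of a simulator is to randomize its physical parameters per episode, so a policy never trains against a single idealized world. The sketch below only samples the parameters; `SimParams` and the ranges are illustrative placeholders, and passing them into an actual simulator is left out.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    floor_friction: float
    payload_kg: float
    light_level: float


def sample_sim_params(rng):
    """Draw one randomized scenario (ranges are illustrative, not calibrated)."""
    return SimParams(
        floor_friction=rng.uniform(0.3, 0.9),
        payload_kg=rng.uniform(0.0, 5.0),
        light_level=rng.uniform(0.2, 1.0),
    )


def randomized_scenarios(n, seed=0):
    """Reproducible list of n scenarios, one per training episode."""
    rng = random.Random(seed)
    return [sample_sim_params(rng) for _ in range(n)]


scenarios = randomized_scenarios(100, seed=42)
```

Randomization widens what the policy sees, but it does not prove the ranges cover reality; that still has to be checked against measurements from the real system.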

Simulation speeds learning. Validation earns trust.

A deployment playbook for RL in U.S. tech companies

If you want RL to generate leads, revenue, or operational savings, treat it like a controlled product rollout, not a research demo.

Step 1: Pick a decision that repeats a lot

RL pays off when decisions happen frequently.

Good first targets:

- Assigning work items (tickets, orders, inspections)

- Prioritizing queues

- Scheduling resources

- Recommending next-best action for agents

Avoid “one-off” strategic decisions. RL needs repetition to learn and to justify the engineering cost.

Step 2: Define success with business metrics (not reward alone)

Your reward is internal. Your business metrics are the scoreboard.

Examples that work:

- Cost per resolution

- SLA compliance rate

- Rework/returns

- Fraud loss rate

- Customer satisfaction (or complaint rate)

Make sure you can measure these weekly, not quarterly.

Step 3: Add guardrails before you add autonomy

In automation and robotics, guardrails are the product.

Practical guardrails:

- Action constraints (never exceed speed/force limits; never approve beyond risk thresholds)

- Human-in-the-loop approvals for edge cases

- “Safe fallback” policies if confidence drops

- Canary rollouts (1% traffic, then 5%, then 20%)
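The guardrails above can be sketched as a thin wrapper around the learned policy. Everything here is a hypothetical illustration: the function names, the 0.8 confidence threshold, and the assumption that the policy returns an `(action, confidence)` pair are placeholders to adapt to your own system.

```python
import hashlib


def in_canary(unit_id, percent):
    """Deterministically assign a unit (user, robot, ticket) to the canary group.

    Hashing keeps the assignment stable across requests, and raising
    `percent` only adds units to the group; it never removes any.
    """
    digest = hashlib.sha256(unit_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < percent


def clamp_speed(requested, max_speed):
    """Hard action constraint: never command a speed outside [0, max_speed]."""
    return max(0.0, min(requested, max_speed))


def choose_action(unit_id, state, learned_policy, fallback_policy,
                  canary_percent, min_confidence=0.8):
    """Return (action, source), using the learned policy only inside the canary."""
    if not in_canary(unit_id, canary_percent):
        return fallback_policy(state), "fallback:not_in_canary"
    action, confidence = learned_policy(state)
    if confidence < min_confidence:
        return fallback_policy(state), "fallback:low_confidence"
    return action, "learned"
```

Logging the returned `source` alongside each decision makes it straightforward to audit how often the learned policy was actually in control at each rollout stage.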

Step 4: Treat drift as normal

Real environments drift: holidays, weather, promotions, new SKUs, policy changes. In the U.S., the week between Christmas and New Year’s is a classic example: volumes spike, staffing changes, and operational data shifts.

If your RL system doesn’t have monitoring and retraining plans, it’s not ready for production.

Minimum monitoring:

- Reward trends vs. business KPIs

- Action distribution shifts (agent behavior changing)

- Constraint violations and near-misses

- Segment performance (new users vs. returning, regions, device types)
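Of these checks, action distribution shift is the easiest to automate. A minimal sketch, assuming discrete actions and using total variation distance between a reference window and a recent window; the 0.2 alert threshold is an arbitrary placeholder that would need tuning against your own history.

```python
from collections import Counter


def action_distribution(actions):
    """Empirical frequency of each action in a window of logged decisions."""
    counts = Counter(actions)
    total = len(actions)
    return {action: count / total for action, count in counts.items()}


def total_variation(p, q):
    """Total variation distance between two discrete distributions (0 to 1)."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def drift_alert(reference_actions, recent_actions, threshold=0.2):
    """Return (distance, alert) comparing recent behavior to a reference window."""
    distance = total_variation(
        action_distribution(reference_actions),
        action_distribution(recent_actions),
    )
    return distance, distance > threshold


# Illustrative windows: the agent escalates far more often than it used to.
last_month = ["resolve"] * 8 + ["escalate"] * 2
this_week = ["resolve"] * 5 + ["escalate"] * 5
distance, alert = drift_alert(last_month, this_week)
```

An alert here does not say whether the change is good or bad, only that behavior moved; pair it with the KPI and constraint checks before deciding to retrain or roll back.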

People also ask: practical RL questions (answered directly)

Is deep reinforcement learning overkill for most automation?

Often, yes. If a rule system or supervised model solves it with fewer risks, start there. RL becomes worth it when you need sequential decisions, trade-offs, and adaptation.

What’s the biggest reason RL projects stall?

Bad problem framing. Teams pick an RL algorithm before they’ve defined the environment, constraints, and what “winning” means.

Do you need robotics hardware to benefit from RL?

No. Many of the strongest ROI cases are in digital services automation—routing, scheduling, resource allocation, and policy optimization.

How long does it take to see value?

A realistic timeline for a first production-grade pilot is 8–16 weeks if you already have usable logs and clear metrics. Physical robotics tends to take longer due to simulation, safety validation, and integration.

Why AI education is a growth strategy (not a nice-to-have)

For U.S. tech companies, workshops and structured learning aren’t “extra.” They’re how you avoid building fragile systems and calling it innovation.

Deep reinforcement learning is powerful for automation because it turns messy, multi-step operations into something you can optimize. But it also raises the bar: you need careful reward design, disciplined evaluation, and safe deployment practices.

If you’re building in AI in Robotics & Automation, the smartest move is to treat RL capability as a team skill—not a single hire, not a one-time model training run, and not a slide deck.

The next question to ask your team is simple: which operational decision would you trust an agent to make tomorrow if you had the right guardrails and training in place?