Deep inverse dynamics helps close the simulation-to-real gap by learning how to turn desired motion into real actuator commands. Practical steps inside.

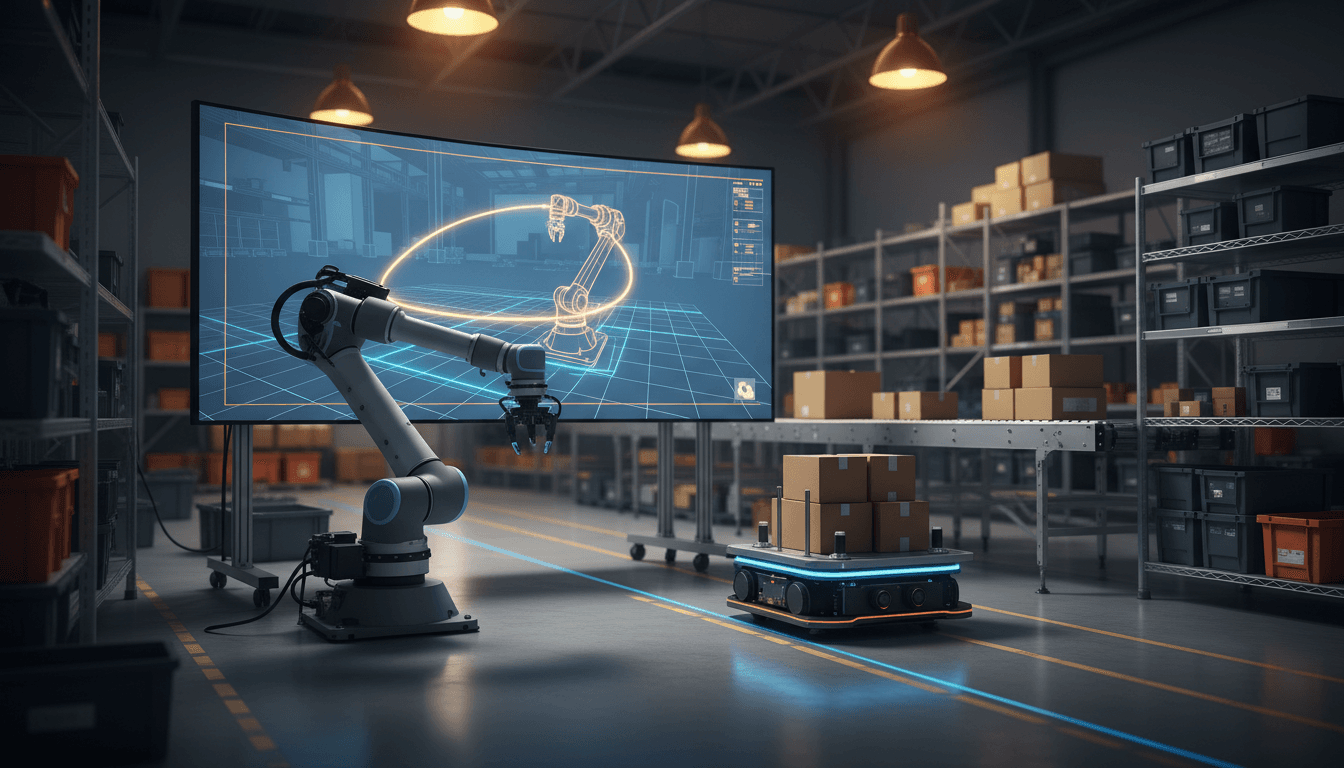

Deep Inverse Dynamics: Getting Robots Out of Simulation

Most robotics teams don’t fail because their simulation is “bad.” They fail because the real world refuses to behave like their simulator.

If you’re building automation for U.S. logistics, healthcare, manufacturing, or field service, you’ve felt this gap: policies that look stable in a physics engine become jittery on a real arm, drift on real wheels, or break the moment friction, payload, latency, or wear changes. Deep inverse dynamics is one of the more practical research directions for closing that gap—because it focuses on the missing piece most teams underestimate: mapping desired motion to real actuator commands under messy, changing conditions.

This post sits in our “AI in Robotics & Automation” series, and it’s written for people who have to ship: robotics engineers, automation leads, and SaaS teams selling automation as a product. We’ll cover what a deep inverse dynamics model is, why it helps with simulation-to-real transfer, and how to apply the idea to real-world tech and digital services—where “actuators” might even be APIs and workflows, not motors.

Why simulation-to-real breaks (and why it’s not just “noise”)

Simulation-to-real breaks because the same action doesn’t create the same effect. In simulation, a torque command produces a predictable change in joint angle. In the real world, the effect of that same torque command depends on temperature, gearbox backlash, battery voltage, payload variation, cable routing, ground contact, and control loop delays.

Most teams respond with one of two strategies:

- Domain randomization: make simulation messy so the policy becomes robust.

- System identification: tune simulator parameters so it matches the physical system.

Both help, but they have limits. Randomization can make learning slower and still miss the right failure modes. System ID can be expensive, fragile over time, and rarely captures the long tail of “Friday afternoon” problems (wear, miscalibration, swapped parts).

Here’s the stance I’ll take: the fastest path to reliable real-world automation often isn’t a smarter policy—it’s a better model of how to command the machine. That’s where inverse dynamics learning earns its keep.

Deep inverse dynamics, explained like you’ll actually use it

An inverse dynamics model predicts the action required to produce a desired motion.

- Forward dynamics: “If I apply action u, what motion happens?”

- Inverse dynamics: “If I want motion x → x', what action u should I apply?”

A deep inverse dynamics model uses a neural network to learn that mapping from data. Instead of relying on perfect equations (which you rarely have), it learns from trajectories:

- Inputs: current state (joint positions/velocities, robot pose), target next state or desired acceleration, and sometimes context (payload estimate, contact mode)

- Output: control command (torques, motor currents, wheel speeds)
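To make the input/output framing concrete, here is a minimal sketch. It assumes a toy one-joint system where mass times acceleration equals command minus viscous friction; the mass, friction, and time step values are invented for illustration. A linear least-squares fit stands in for the neural network so the example stays dependency-free, and every function name is hypothetical.

```python
import random

DT = 0.01  # control period in seconds (assumed value)

def forward_step(v, u, mass=2.0, friction=0.5):
    """Toy forward dynamics: velocity after applying command u for DT seconds."""
    accel = (u - friction * v) / mass
    return v + accel * DT

def collect_samples(n=500, seed=0):
    """Log (velocity, next velocity, command) triples from random commands."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        v = rng.uniform(-1.0, 1.0)
        u = rng.uniform(-5.0, 5.0)
        samples.append((v, forward_step(v, u), u))
    return samples

def fit_inverse_model(samples):
    """Least-squares fit of u ~ w_v * v + w_a * accel via 2x2 normal equations."""
    s_vv = s_va = s_aa = s_vu = s_au = 0.0
    for v, v_next, u in samples:
        a = (v_next - v) / DT  # acceleration implied by the observed transition
        s_vv += v * v
        s_va += v * a
        s_aa += a * a
        s_vu += v * u
        s_au += a * u
    det = s_vv * s_aa - s_va * s_va
    w_v = (s_vu * s_aa - s_au * s_va) / det
    w_a = (s_au * s_vv - s_vu * s_va) / det
    return w_v, w_a

def inverse_model(v, v_target, weights):
    """Inverse dynamics: command predicted to move velocity v to v_target in one step."""
    w_v, w_a = weights
    return w_v * v + w_a * (v_target - v) / DT

weights = fit_inverse_model(collect_samples())
```

On this noiseless toy data the fit recovers the friction and mass terms, so `forward_step(v, inverse_model(v, v_target, weights))` lands on `v_target`. On real hardware the mapping is nonlinear and context-dependent, which is the case for swapping the linear fit for a neural network.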

Why inverse dynamics is a strong sim-to-real bridge

It learns the “translation layer” between intent and actuation. Your planner or policy can stay relatively stable, while the inverse dynamics model adapts to the physical quirks.

Think of it as a learned controller that compensates for:

- unmodeled friction and stiction

- backlash and compliance

- contact dynamics (gripping, pushing, rolling)

- actuator saturation

- timing and latency differences

This is especially useful when your sim policy outputs “ideal” actions that don’t exist on hardware. The inverse dynamics model can map those idealized goals into commands that hardware can actually execute.

A concrete mental model

If you have a simulated arm that can follow a smooth end-effector path, the real arm might overshoot, vibrate, or lag.

A learned inverse dynamics model can be trained so that:

- when the system is lagging, it outputs slightly stronger commands

- when it detects conditions that cause overshoot, it damps commands

- when payload changes, it adjusts torque patterns

That’s not magic. It’s supervised learning applied to control.

How teams train deep inverse dynamics models in practice

You train inverse dynamics from trajectory data—then use it as a controller or as a correction layer.

There are a few workable pipelines; the right one depends on how much real-world data you can afford.

Option A: Train in simulation, fine-tune on a small real dataset

This is the common “get 80% in sim, finish on hardware” route.

- Generate trajectories in simulation (from a planner, a policy, or even hand-designed motions).

- Collect pairs: (state, desired next state) → action.

- Train the neural inverse dynamics model.

- Fine-tune on real data collected with safety constraints.

Why it works: simulation gives coverage; real data corrects the bias.
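As a rough sketch of the fine-tuning step, assume the simulation-trained model is a two-weight linear map from (velocity, desired acceleration) to command, and that the real joint has more friction and inertia than the simulator assumed. The numbers, the linear model, and the plain gradient descent loop are all illustrative stand-ins for a real network and optimizer.

```python
def predict(weights, v, accel):
    """Linear inverse dynamics: command for velocity v and desired acceleration."""
    return weights[0] * v + weights[1] * accel

def mean_squared_error(weights, data):
    return sum((predict(weights, v, a) - u) ** 2 for v, a, u in data) / len(data)

def fine_tune(weights, real_data, lr=0.05, epochs=200):
    """Full-batch gradient descent on squared command error, starting from sim weights."""
    w = list(weights)
    n = len(real_data)
    for _ in range(epochs):
        grad = [0.0, 0.0]
        for v, a, u in real_data:
            err = predict(w, v, a) - u
            grad[0] += 2.0 * err * v / n
            grad[1] += 2.0 * err * a / n
        w[0] -= lr * grad[0]
        w[1] -= lr * grad[1]
    return w

# Weights learned in simulation (assumed: friction 0.5, mass 2.0).
sim_weights = [0.5, 2.0]

# A small real dataset; the "true" hardware values 0.9 and 2.2 are made up.
real_data = [
    (v, a, 0.9 * v + 2.2 * a)
    for v in (-1.0, -0.5, 0.5, 1.0)
    for a in (-2.0, -1.0, 1.0, 2.0)
]

tuned_weights = fine_tune(sim_weights, real_data)
```

Sixteen real samples are enough here only because the toy model has two parameters. The point is the shape of the workflow: start from the simulation weights rather than from scratch, then let a small real dataset correct the bias.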

Option B: Use real-world logs you already have

If you’re running robots in production, you likely have logs of states and motor commands. That’s training data.

- You can reconstruct “desired next state” from short-horizon targets (from your controller) or from smoothed future states.

- Then train a model to imitate the command that succeeded (and down-weight segments that failed).
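One way this relabeling might look, assuming a log of scalar states with a per-step success flag. The log schema (`state`, `command`, `ok`), the averaging window used as the "smoothed future state", and the failure weight are assumptions for illustration, not a standard format.

```python
def build_training_set(log, horizon=3, fail_weight=0.1):
    """Turn a command log into weighted (state, target) -> command examples.

    The target is the mean of the next `horizon` logged states, a crude
    smoothing of what the robot actually achieved after the command.
    """
    examples = []
    for t in range(len(log) - horizon):
        future_states = [log[t + k]["state"] for k in range(1, horizon + 1)]
        examples.append({
            "state": log[t]["state"],
            "target": sum(future_states) / horizon,
            "command": log[t]["command"],
            # Segments flagged as failed still contribute, but with less weight.
            "weight": 1.0 if log[t]["ok"] else fail_weight,
        })
    return examples

# Hypothetical five-step log from one joint.
log = [
    {"state": 0.0, "command": 1.0, "ok": True},
    {"state": 0.1, "command": 1.0, "ok": True},
    {"state": 0.3, "command": 0.5, "ok": False},
    {"state": 0.4, "command": 0.2, "ok": True},
    {"state": 0.5, "command": 0.0, "ok": True},
]
examples = build_training_set(log, horizon=2)
```

The `weight` field is meant to be passed to whatever loss you train with, so failed segments nudge the model less than successful ones.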

Strong opinion: if you have deployed robots and you’re not turning logs into control improvements, you’re leaving reliability on the table.

Option C: Train a residual model on top of a classical controller

This is often the safest route in regulated or high-uptime environments.

- Keep your baseline controller (PID, MPC, impedance control).

- Train a neural network to output a residual correction Δu.

This gives you:

- predictable baseline behavior

- learned compensation for hard-to-model effects

- easier safety validation (you can clamp residual magnitude)

Rule of thumb: Use learning to correct the last 10–20% of behavior, not replace the 80–90% you already trust.
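A minimal sketch of the residual pattern, assuming a proportional baseline controller and a placeholder function where the trained network would go. The gain, the clamp limit, and the `residual_model` body are all hypothetical.

```python
def clamp(value, limit):
    """Restrict value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))

def baseline_controller(error, kp=4.0):
    """Classical controller you already trust (a bare P term for brevity)."""
    return kp * error

def residual_model(state, target):
    """Placeholder for a trained network that predicts the correction delta_u."""
    return 0.8 * (target - state) + 0.3

def command(state, target, max_residual=0.2):
    """Baseline command plus a bounded learned correction."""
    u_base = baseline_controller(target - state)
    delta_u = clamp(residual_model(state, target), max_residual)
    return u_base + delta_u
```

Because the correction is clamped, the worst case is the baseline command plus or minus `max_residual`, which is the property that makes safety validation tractable.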

Where this shows up in U.S. automation (and in SaaS)

Deep inverse dynamics is a pattern: translate “desired outcomes” into “effective actions” under real constraints. That’s robotics. It’s also digital operations.

Robotics examples that map cleanly to business outcomes

- Warehouse picking and packing: grasp success depends on contact, compliance, and payload. Inverse dynamics helps maintain stable motion when bins, SKUs, and gripper wear vary.

- AMRs in fulfillment centers: floor friction changes by zone (dust, tape, worn concrete). An inverse dynamics layer can reduce slip and improve path tracking without rewriting navigation.

- Healthcare automation: assistive arms and mobile carts need smooth, predictable motion near people. Residual inverse dynamics can reduce jerky behavior when loads change.

The SaaS analogy: inverse dynamics for workflows

If you sell “automation” as software, your actuators are things like:

- API calls that rate-limit or behave differently by tenant

- RPA steps that break when UI elements shift

- message delivery that changes with carrier filtering

- customer communication steps where timing and wording affect outcomes

Your “desired motion” is the business intent (e.g., verify identity, schedule a delivery, collect payment). Your “action” is the concrete sequence of steps across brittle systems.

A robotics-grade way to think about this:

- Policy/planner: decides what should happen next (intent)

- Inverse dynamics layer: decides how to make it happen reliably (execution)

That bridge matters because U.S. tech companies are investing in AI that adapts automation to real conditions, not just perfect sandbox environments. The teams that win don’t just automate; they keep automation working when reality changes.

What to measure: reliability metrics that actually reflect sim-to-real

If you can’t measure the gap, you can’t close it. Inverse dynamics work is easiest to justify when you track operational metrics tied to “motion quality.”

For robots, the most useful metrics are usually:

- Tracking error (position/orientation error along a path)

- Settling time (how fast the system stabilizes after a move)

- Energy/effort (torque/current usage; proxy for wear and battery)

- Failure rate by context (payload bins, floor zones, temperature bands)
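The first two metrics can be computed from a reference trajectory and a logged one. The sketch below assumes scalar, equally sampled signals and defines settling time as the moment after which the signal stays inside a tolerance band; both function names are illustrative.

```python
import math

def rms_tracking_error(reference, measured):
    """Root-mean-square difference between reference and measured samples."""
    squared = [(r - m) ** 2 for r, m in zip(reference, measured)]
    return math.sqrt(sum(squared) / len(squared))

def settling_time(measured, target, tolerance, dt):
    """Time after which the signal stays within tolerance of target.

    Returns None if the final sample is still outside the band.
    """
    last_outside = -1
    for i, value in enumerate(measured):
        if abs(value - target) > tolerance:
            last_outside = i
    if last_outside == len(measured) - 1:
        return None
    return (last_outside + 1) * dt

# Hypothetical step response sampled every 0.1 s.
step_response = [0.0, 0.6, 1.2, 0.9, 1.02, 1.0, 1.0]
settle = settling_time(step_response, target=1.0, tolerance=0.05, dt=0.1)
```

Computing these per move, then aggregating by payload class or floor zone, is what turns "it feels jittery" into a number you can compare before and after a model change.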

For SaaS automation (the digital parallel), map the same ideas:

- Tracking error → intent mismatch rate (wrong status, wrong field, wrong next step)

- Settling time → time-to-resolution for automated cases

- Energy/effort → API cost and retry volume

- Failure rate by context → tenant-specific or channel-specific failure segmentation

Rule of thumb: Segment failures by context or you’ll “fix” the average and ship regressions to your most valuable edge cases.
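Segmenting is a few lines of code once each run carries a context label. This sketch assumes run records are dictionaries with an `ok` flag and a context field such as `zone`; the field names and the sample data are made up.

```python
from collections import defaultdict

def failure_rate_by_context(runs, key):
    """Return {context value: failure rate} for the given context field."""
    counts = defaultdict(lambda: [0, 0])  # context -> [failures, total]
    for run in runs:
        bucket = counts[run[key]]
        if not run["ok"]:
            bucket[0] += 1
        bucket[1] += 1
    return {ctx: fails / total for ctx, (fails, total) in counts.items()}

# 18 clean runs in zone A, 2 runs in zone B with one failure.
runs = [{"zone": "A", "ok": True} for _ in range(18)]
runs += [{"zone": "B", "ok": True}, {"zone": "B", "ok": False}]

overall = sum(1 for r in runs if not r["ok"]) / len(runs)
by_zone = failure_rate_by_context(runs, "zone")
```

In this invented data the overall failure rate is 5%, which looks healthy, while zone B fails half the time. The same function works for tenants or channels on the SaaS side.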

Implementation checklist: adopting inverse dynamics without breaking production

You don’t need a moonshot to use this idea. You need a controlled rollout.

1) Start with the most expensive instability

Pick one:

- overshoot causing dropped items

- oscillation causing wear

- slip causing navigation drift

- contact tasks failing under payload variation

In SaaS terms: choose a workflow with high retry volume, high support tickets, or high churn risk.

2) Decide on the role: replacement vs residual

For most teams, residual learning is the right starting point:

- keep classical controller

- add bounded neural correction

- add safety clamps and monitoring

3) Build a dataset that reflects reality

A model trained on “clean” data will behave cleanly—until it doesn’t.

- include real operational conditions (worn wheels, varied payloads, mixed floors)

- label context features (payload class, zone ID, temperature band, gripper type)

- keep a holdout set from the newest hardware revision (this catches brittleness)
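The holdout rule in the last bullet can be as simple as splitting on a revision field. The record layout and the `hw_rev` field name are assumptions; the idea is that the newest revision never appears in training, so the evaluation tests generalization to hardware the model has not seen.

```python
def split_by_newest_revision(records):
    """Hold out every record from the highest hardware revision."""
    newest = max(r["hw_rev"] for r in records)
    train = [r for r in records if r["hw_rev"] != newest]
    holdout = [r for r in records if r["hw_rev"] == newest]
    return train, holdout

# Hypothetical records labeled with context features.
records = [
    {"hw_rev": 1, "payload_class": "light", "zone": "A"},
    {"hw_rev": 1, "payload_class": "heavy", "zone": "B"},
    {"hw_rev": 2, "payload_class": "light", "zone": "A"},
    {"hw_rev": 3, "payload_class": "heavy", "zone": "C"},
]
train, holdout = split_by_newest_revision(records)
```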

4) Validate like an engineer, not a demo artist

Before you let it touch production:

- run replay tests on logged sequences

- run canary deployments (one robot, one site, one tenant)

- set abort thresholds (max torque, max tracking error, max retries)
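A replay test can be sketched as running the candidate controller offline over logged steps and flagging the first step where it would have violated a limit. The log fields, the limit names, and the choice to compare against the logged command are assumptions. An offline replay cannot measure tracking error for the new controller, so deviation from the command that was actually sent is used as a proxy.

```python
from dataclasses import dataclass

@dataclass
class AbortLimits:
    max_command: float            # e.g. torque limit
    max_command_deviation: float  # allowed difference from the logged command

def replay_check(logged_steps, controller, limits):
    """Return (step index, reason) for the first violation, or None if clean."""
    for index, step in enumerate(logged_steps):
        u = controller(step["state"], step["target"])
        if abs(u) > limits.max_command:
            return index, "command_limit"
        if abs(u - step["command"]) > limits.max_command_deviation:
            return index, "deviation_from_log"
    return None

def candidate_controller(state, target):
    """Hypothetical controller under test."""
    return 4.0 * (target - state)

logged_steps = [
    {"state": 0.0, "target": 0.1, "command": 0.4},
    {"state": 0.1, "target": 1.0, "command": 3.0},
]
result = replay_check(logged_steps, candidate_controller, AbortLimits(3.5, 0.5))
```

The same `AbortLimits` values can then be reused as runtime abort thresholds during the canary deployment, so the offline and online checks agree on what "too much" means.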

5) Monitor drift and retrain on a schedule

A learned inverse dynamics model is sensitive to drift because it models the actuation path itself, and that path changes as hardware wears and firmware updates.

- monitor error distributions weekly

- retrain when the distribution shifts (new payload mix, new floor coating, new firmware)
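A simple way to turn "the distribution shifts" into a trigger is to compare the mean of this week's tracking errors against a baseline window. The z-score test and the threshold of 3 below are assumptions chosen for brevity; a production monitor would likely compare full distributions and segment by context.

```python
import math
import statistics

def drift_detected(baseline_errors, recent_errors, z_threshold=3.0):
    """Flag drift when the recent mean error is far from the baseline mean.

    Distance is measured in standard errors of the recent window's mean.
    """
    baseline_mean = statistics.fmean(baseline_errors)
    baseline_std = statistics.stdev(baseline_errors)
    recent_mean = statistics.fmean(recent_errors)
    if baseline_std == 0.0:
        return recent_mean != baseline_mean
    standard_error = baseline_std / math.sqrt(len(recent_errors))
    return abs(recent_mean - baseline_mean) / standard_error > z_threshold

# Hypothetical tracking errors in meters.
baseline = [0.010, 0.012, 0.011, 0.009, 0.010, 0.011, 0.012, 0.009]
after_new_floor_coating = [0.020] * 8
```

Here `drift_detected(baseline, baseline)` is false and `drift_detected(baseline, after_new_floor_coating)` is true, which would be the cue to collect fresh data and retrain.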

This is where AI-powered digital services get real: model maintenance becomes part of operations, not a one-time build.

People also ask: quick answers that help teams ship

Does inverse dynamics replace reinforcement learning?

No. It complements it. You can use RL or planning to choose goals, and inverse dynamics to produce effective commands on real hardware.

Is this only for robot arms?

No. It applies to wheeled robots, drones, humanoids, and any actuator-driven system. The concept also maps to digital automation where actions have uncertain effects.

What’s the biggest risk?

Overconfidence. If the model is allowed to output unbounded commands, it can create unsafe behavior. Residual learning with clamps is a practical safeguard.

Where this goes next for AI in Robotics & Automation

Deep inverse dynamics is part of a broader shift in the “AI in Robotics & Automation” story: AI is increasingly the reliability layer, not just the brains. It’s what helps automation survive variability—whether that variability is a warehouse floor, a hospital corridor, or a messy enterprise software stack.

If you’re building automation in the U.S. market, this matters for a simple reason: customers don’t buy demos. They buy uptime. A learned inverse dynamics model is one of the clearest technical paths from “works in simulation” to “works on Tuesday in Q4 when volumes spike.”

If you’re evaluating where to invest next, start by asking: where is your system translating intent into action poorly—on motors, on APIs, or on customer communications? That translation layer is often the highest-ROI place to apply AI.