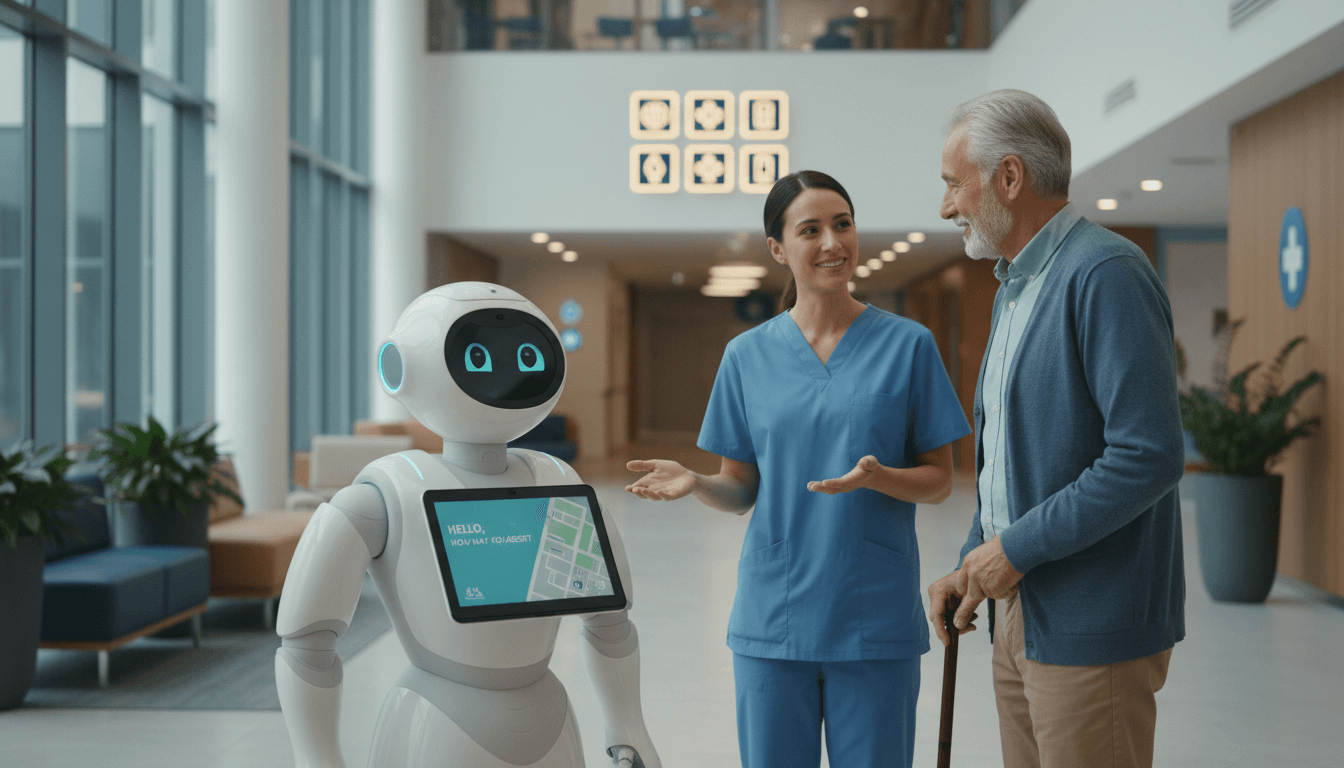

Natural conversation is becoming the deciding factor for robots in healthcare and service. Learn what it takes to deploy conversational AI robots that people trust.

Conversational AI Robots: Natural Talk in Care & Service

A lot of robotics pilots fail for a boring reason: the robot can do the task, but people don’t trust the handoff. When a service robot rolls up to a hospital room or a hotel lobby and says something stilted—or stays silent when it shouldn’t—humans get uneasy fast.

That’s why the conversation layer is becoming a deciding factor in whether robots stick around after the demo. In Robot Talk Episode 125, Claire speaks with Gabriel Skantze (KTH Royal Institute of Technology), a professor known for his work in conversational systems and human-robot interaction. The short version of his message is one I strongly agree with: if we want robots in healthcare and service environments to be useful at scale, they need to handle face-to-face conversation the way humans actually use it—messy, interruptible, contextual, and full of social signals.

This post expands on that idea with practical examples and a blueprint you can use to evaluate (or build) a conversational AI robot that works outside the lab.

Natural conversation is a robotics capability, not a “nice-to-have”

Natural language isn’t just a UI. In service and healthcare, it’s how work gets coordinated.

A robot in a hospital wing might need to: confirm identity, explain what it’s doing, ask for consent, coordinate with staff, and adjust when someone is confused or distressed. A robot in a hotel might need to: guide guests, answer policy questions, handle complaints, and escalate smoothly to a human.

When the robot can’t converse naturally, teams compensate, often unconsciously. Staff start “speaking robot,” avoid complex requests, or skip the robot altogether. The operational result is brutal: utilization drops, exceptions spike, and leadership concludes the robot “wasn’t ready.” Many teams get this wrong by treating conversation as the final coat of paint.

The takeaway: in real deployments, conversational ability is often the difference between “technically works” and “actually adopted.”

Why face-to-face is harder than chat

Text chat is forgiving. Face-to-face interaction is not.

In person, humans rely on:

- Turn-taking (knowing when to speak, when to stop, when to wait)

- Timing (micro-pauses, interruptions, overlap)

- Grounding (checking shared understanding: “You mean room 312, right?”)

- Nonverbal signals (gaze, head nods, posture)

- Context (location, who’s present, what just happened)

Skantze’s research focus—speech communication and conversational AI for embodied agents—sits right at this intersection: building systems that can manage the rhythm and social expectations of in-person talk.

What conversational AI adds to robots in healthcare and service

Conversational AI in robotics isn’t about making robots “chattier.” It’s about making robots clearer, safer, and easier to work with.

Here are three concrete ways it changes outcomes.

1) Fewer failed handoffs and fewer “dead ends”

The fastest way to lose trust is to corner a person into a dead end:

- “I didn’t understand that.”

- “Please repeat.”

- “Error.”

A well-designed conversational robot does repair, not just rejection. It can narrow options (“Did you mean X or Y?”), paraphrase what it heard, and confirm before acting.

In healthcare, this matters because dead ends create safety workarounds. If nurses stop asking the robot for help because it’s unpredictable, you’ve lost the whole efficiency play.
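Here’s a minimal sketch of repair-over-rejection logic. It assumes the speech and language-understanding stack returns a ranked list of hypotheses with roughly calibrated confidence scores; the `Hypothesis` class, the thresholds, and the prompt wording are all hypothetical and would need tuning on real recordings.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Hypothesis:
    label: str         # paraphrase of what the robot thinks it heard, e.g. "room 312"
    confidence: float  # 0.0-1.0 score from the speech/NLU stack (assumed calibrated)


# Hypothetical thresholds; real values would be tuned on pilot recordings.
ACT_THRESHOLD = 0.85      # confident enough to act with a lightweight confirmation
CONFIRM_THRESHOLD = 0.55  # plausible: paraphrase and ask before acting
NARROW_FLOOR = 0.30       # a runner-up this strong is worth offering as an option
AMBIGUITY_MARGIN = 0.15   # top two closer than this = genuinely ambiguous

FALLBACK = "Sorry, I missed that. You can tell me the room number, or point on the map."


def choose_repair(hyps: List[Hypothesis]) -> Tuple[str, str]:
    """Pick a repair strategy and utterance instead of a bare 'I didn't understand'."""
    ranked = sorted(hyps, key=lambda h: h.confidence, reverse=True)
    if not ranked:
        return "reprompt", FALLBACK
    top = ranked[0]
    runner_up = ranked[1] if len(ranked) > 1 else None
    # Two close candidates: narrow the options rather than guess.
    if (
        runner_up is not None
        and runner_up.confidence >= NARROW_FLOOR
        and top.confidence - runner_up.confidence < AMBIGUITY_MARGIN
    ):
        return "narrow", f"Did you mean {top.label} or {runner_up.label}?"
    if top.confidence >= ACT_THRESHOLD:
        return "act", f"Okay, {top.label}."
    if top.confidence >= CONFIRM_THRESHOLD:
        # Paraphrase what was heard and confirm before acting.
        return "confirm", f"I heard {top.label}. Is that right?"
    # Still unclear: reprompt with a structured hint and a second modality.
    return "reprompt", FALLBACK
```

The order of the checks is the design choice: ambiguity is handled before confidence, so two near-equal readings (room 312 vs. room 321) trigger a narrowing question even when both scores are high.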

2) Better human-robot teamwork through shared context

Human teams don’t coordinate with perfect commands. They coordinate with partial information and quick confirmations.

A practical conversational AI robot should be able to:

- Track task state (“I’m delivering medication to Bay 4; do you want me to stop at Bay 6 after?”)

- Reference the shared environment (“The elevator is busy; I can take the stairs route, but it’s slower.”)

- Use lightweight confirmations (“Okay—heading to Radiology. I’ll notify you when I arrive.”)

This is where human-robot interaction becomes operational, not academic: conversation is the glue that turns a robot from a gadget into a teammate.
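Task-state tracking can start as simply as a queue plus one pending question. The sketch below is illustrative, not a reference design: `Task`, `TaskTracker`, and the status names are hypothetical, and a real robot would sync this state with its fleet or task-management system.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    QUEUED = "queued"
    EN_ROUTE = "en route"
    DONE = "done"


@dataclass
class Task:
    activity: str     # e.g. "delivering medication" (hypothetical task vocabulary)
    destination: str  # e.g. "Bay 4"
    requester: str    # who gets notified on arrival
    status: Status = Status.QUEUED


@dataclass
class TaskTracker:
    queue: List[Task] = field(default_factory=list)
    pending: Optional[Task] = None  # a request waiting for the user's yes/no

    def current(self) -> Optional[Task]:
        return self.queue[0] if self.queue else None

    def request(self, task: Task) -> str:
        cur = self.current()
        if cur is None:
            task.status = Status.EN_ROUTE
            self.queue.append(task)
            return f"Okay, heading to {task.destination}. I'll notify {task.requester} when I arrive."
        # Busy: state what it's doing and ask before changing the plan.
        self.pending = task
        return f"I'm {cur.activity} to {cur.destination}; do you want me to stop at {task.destination} after?"

    def answer(self, yes: bool) -> str:
        task, self.pending = self.pending, None
        if task is None:
            return "I don't have a question waiting for you."
        if not yes:
            return f"Okay, I won't stop at {task.destination}."
        self.queue.append(task)
        if len(self.queue) == 1:  # the earlier task finished while we were waiting
            task.status = Status.EN_ROUTE
            return f"Okay, heading to {task.destination}."
        return f"Okay, I'll stop at {task.destination} after {self.queue[-2].destination}."

    def arrive(self) -> List[str]:
        """Mark the current task done; return the notification and the next status line."""
        if not self.queue:
            return []
        done = self.queue.pop(0)
        done.status = Status.DONE
        messages = [f"Notify {done.requester}: arrived at {done.destination}."]
        nxt = self.current()
        if nxt is not None:
            nxt.status = Status.EN_ROUTE
            messages.append(f"Heading to {nxt.destination} next.")
        return messages
```

For example, calling `request()` while a delivery is under way returns “I'm delivering medication to Bay 4; do you want me to stop at Bay 6 after?” and changes nothing until `answer(True)` arrives.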

3) More inclusive interfaces for real users

Hospitals and public-facing services involve people with different accents, languages, hearing levels, cognitive loads, and stress levels.

A conversation-first robot can offer:

- Multimodal fallback (speech + on-screen choices + gesture)

- Short, structured prompts under stress (“Tell me the room number, or point on the map.”)

- Adaptive pacing (slower speech, repeat confirmations)

If your deployment includes older adults (common in care settings), conversation quality isn’t a feature—it’s accessibility.
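One way to make the fallback and pacing ideas concrete is a small prompt planner that escalates from open speech to structured, multimodal prompts as failures pile up. This is a sketch under assumptions: the speech-rate values stand in for whatever rate control your TTS engine exposes, and the prompt wording and cut-offs are hypothetical.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical TTS rate multipliers: 1.0 = the engine's default speaking rate.
DEFAULT_RATE = 1.0
SLOW_RATE = 0.8


@dataclass
class PromptPlan:
    text: str
    speech_rate: float
    screen_choices: List[str]  # shown on the touchscreen; empty = speech only
    confirm_back: bool         # repeat the answer back before acting


def plan_prompt(failed_attempts: int, prefers_slow: bool, choices: List[str]) -> PromptPlan:
    """Escalate from open speech to structured, multimodal prompts as trouble accumulates."""
    rate = SLOW_RATE if prefers_slow else DEFAULT_RATE
    if failed_attempts == 0:
        return PromptPlan("Where would you like to go?", rate, [], confirm_back=prefers_slow)
    if failed_attempts == 1:
        # Short, structured prompt plus a second modality; slow down slightly.
        return PromptPlan(
            "Tell me the room number, or point on the map.",
            min(rate, 0.9),
            choices[:4],
            confirm_back=True,
        )
    # Repeated trouble: lean on the screen and offer a person instead of looping.
    return PromptPlan(
        "You can tap a choice on my screen, or I can call a staff member.",
        min(rate, 0.85),
        choices[:4] + ["Call staff"],
        confirm_back=True,
    )
```

Capping the on-screen list at four options keeps the fallback readable under stress; the exact number is a judgment call to validate with real users.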

The technical reality: what it takes to “chat naturally” with a robot

Natural conversation is an engineering stack. If you’re evaluating vendors—or scoping an internal build—these are the pieces that determine whether the robot will work on day 1 and still work on day 100.

Speech isn’t enough: the robot needs turn-taking and grounding

Classic voice assistants focus on single-shot commands. Face-to-face interaction needs continuous coordination.

Look for capabilities like:

- Incremental ASR (automatic speech recognition): the robot can start interpreting before the user finishes

- Barge-in handling: the robot stops talking when interrupted

- Backchannels: “mm-hmm,” “okay,” nods—signals that it’s following

- Grounding strategies: confirmations, paraphrases, clarifying questions

If a robot can’t do these, it will feel slow, rude, or clueless—no matter how good the language model is.
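Barge-in, backchannels, and end-of-turn detection can be prototyped as a small state machine over voice-activity-detection (VAD) frames. The sketch assumes a VAD that emits one speech/no-speech decision every 20 ms, and the thresholds are hypothetical. Silence thresholds are the crudest option; more sophisticated turn-taking models also use prosody and the words themselves.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed VAD frame size and hypothetical timing thresholds.
FRAME_MS = 20
BARGE_IN_MS = 200           # this much continuous user speech during robot speech = interruption
BACKCHANNEL_PAUSE_MS = 300  # short pause mid-turn: a chance for "mm-hmm" or a nod
END_OF_TURN_MS = 700        # longer pause: the robot may take the turn


@dataclass
class TurnTaker:
    robot_speaking: bool = False
    user_speech_ms: int = 0
    silence_ms: int = 0
    user_has_floor: bool = False
    backchanneled: bool = False

    def start_speaking(self) -> None:
        self.robot_speaking = True
        self.user_speech_ms = self.silence_ms = 0
        self.user_has_floor = False

    def on_frame(self, user_voice: bool) -> Optional[str]:
        """Consume one VAD frame; return 'stop_tts', 'backchannel', 'take_turn', or None."""
        if user_voice:
            self.user_speech_ms += FRAME_MS
            self.silence_ms = 0
            self.backchanneled = False
            if self.robot_speaking:
                if self.user_speech_ms >= BARGE_IN_MS:
                    # Barge-in: yield the floor immediately.
                    self.robot_speaking = False
                    self.user_has_floor = True
                    return "stop_tts"
                return None  # too short to count (a cough, an "uh")
            self.user_has_floor = True
            return None
        self.user_speech_ms = 0
        if self.robot_speaking or not self.user_has_floor:
            return None
        self.silence_ms += FRAME_MS
        if self.silence_ms >= END_OF_TURN_MS:
            self.user_has_floor = False
            self.silence_ms = 0
            return "take_turn"
        if self.silence_ms >= BACKCHANNEL_PAUSE_MS and not self.backchanneled:
            self.backchanneled = True
            return "backchannel"
        return None
```

In this sketch the robot stops talking after 200 ms of sustained user speech, offers one backchannel per pause, and only takes the turn after a longer silence.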

Embodiment changes everything (and that’s the point)

A robot has a body, a location, and a job. That’s an advantage.

A language model can answer questions. An embodied conversational robot can:

- Coordinate speech with navigation (“Follow me,” then actually lead)

- Use gaze and orientation to indicate attention and turn-taking

- Reference objects and people in the scene (“The wheelchair by the door”)

Skantze’s work on conversational AI for human-robot interaction highlights that embodiment isn’t decoration. It’s signal: it’s how humans decide whether the robot is paying attention and behaving appropriately.

LLMs help, but guardrails decide whether you can deploy

By late 2025, large language models are broadly capable of generating fluent dialogue. But fluency is not the deployment bar.

If you’re putting conversational AI robots into healthcare or high-traffic service environments, you need:

- Constrained action policies (the robot can’t “talk itself” into unsafe actions)

- Retrieval-based grounding for factual answers (policies, schedules, facility info)

- Refusal behavior tuned to context (helpful, not obstructive)

- Auditability (logs, intent traces, escalation triggers)

Here’s my stance: if a vendor can’t explain exactly how they prevent hallucinated instructions and unsafe task execution, they’re not ready for real care settings.
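A constrained action policy can be as blunt as an allowlist that sits between the language model and the robot’s controllers. In this minimal sketch the model is assumed to propose actions as JSON, and the action names, parameters, and destinations are hypothetical. Anything that isn’t a well-formed, allowed action with a known destination is rejected, sensitive actions are routed to a human for confirmation, and every decision is logged for audit.

```python
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical allowlist: action -> (required parameters, needs human confirmation first)
ALLOWED_ACTIONS: Dict[str, Tuple[Set[str], bool]] = {
    "navigate": ({"destination"}, False),
    "notify_staff": ({"message"}, False),
    "deliver_item": ({"item", "destination"}, True),
}
# Destinations the robot can actually reach, e.g. loaded from the facility map (assumed).
KNOWN_DESTINATIONS = {"Radiology", "Bay 4", "Bay 6", "Front desk"}

AUDIT_LOG: List[dict] = []  # in practice: durable, append-only storage


def _parse(llm_output: str) -> Optional[Tuple[str, dict]]:
    try:
        proposal = json.loads(llm_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(proposal, dict):
        return None
    name, params = proposal.get("action"), proposal.get("params", {})
    if not isinstance(name, str) or not isinstance(params, dict):
        return None
    return name, params


def vet_action(llm_output: str) -> dict:
    """Turn a model-proposed action into execute / confirm / reject, and log the decision."""
    decision = {"proposal": llm_output, "time": datetime.now(timezone.utc).isoformat()}
    parsed = _parse(llm_output)
    if parsed is None:
        decision.update(verdict="reject", reason="not a structured action")
    else:
        name, params = parsed
        if name not in ALLOWED_ACTIONS:
            decision.update(verdict="reject", reason="action not allowed")
        else:
            required, needs_confirmation = ALLOWED_ACTIONS[name]
            missing = required - set(params)
            if missing:
                decision.update(verdict="reject", reason=f"missing {sorted(missing)}")
            elif "destination" in params and params["destination"] not in KNOWN_DESTINATIONS:
                decision.update(verdict="reject", reason="unknown destination")
            elif needs_confirmation:
                decision.update(verdict="confirm", reason="requires staff confirmation")
            else:
                decision.update(verdict="execute", reason="allowed")
    AUDIT_LOG.append(decision)
    return decision
```

The key property is that free text like “Sure, heading to the pharmacy now!” can never move the robot: only a parsed, allowlisted action can.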

Practical examples: where natural robot conversation pays off

These are scenarios where conversational ability translates directly into measurable value—shorter task times, fewer escalations, and better user experience.

Healthcare: patient-facing support without adding staff workload

A conversational robot can handle “small but constant” interaction that burns staff time:

- Wayfinding to departments and clinics

- Pre-visit intake reminders (“Your appointment is at 2:10; do you need wheelchair assistance?”)

- Comfort check-ins in waiting areas (“I can update you on the estimated wait time.”)

- Basic room logistics (“Where’s the bathroom?” “How do I call the nurse?”)

The win isn’t replacing clinicians. It’s reducing repeated interruptions so clinicians can focus.

Elder care: companionship that’s structured and safe

“Companion robots” can go wrong when they’re treated like open-ended friends. The better approach is structured, supportive conversation:

- Medication reminders with confirmation loops

- Routine building (“Let’s do the 3-minute mobility exercise now.”)

- Mood check-ins with escalation paths to caregivers

Natural conversation here means the robot can be warm and responsive while still staying within safety boundaries.
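A medication reminder with a confirmation loop and an escalation path might look like the sketch below. The vocabularies, the three-attempt limit, and the policy of escalating on any decline are hypothetical placeholders for rules the care team would set; `escalate` stands in for whatever caregiver-notification channel the facility uses.

```python
from typing import Callable, Iterable, List, Tuple

MAX_ATTEMPTS = 3  # hypothetical; in practice agreed with the care team

# Tiny hypothetical vocabularies; a real system would use a proper NLU model.
YES = {"yes", "yep", "done", "i took it", "already did"}
NO = {"no", "not yet", "later", "i don't want it"}


def classify(reply: str) -> str:
    text = reply.strip().lower().rstrip(".!")
    if text in YES:
        return "yes"
    if text in NO:
        return "no"
    return "unclear"  # includes silence, represented here as ""


def medication_reminder(
    med: str, replies: Iterable[str], escalate: Callable[[str], None]
) -> Tuple[str, List[Tuple[str, str]]]:
    """Ask, confirm, and escalate to a caregiver instead of looping forever."""
    prompts = [
        f"It's time for your {med}. Have you taken it?",
        f"Just checking: have you taken your {med}? You can say yes or not yet.",
        f"Have you taken your {med}? If you're not sure, I can ask your caregiver to stop by.",
    ]
    reply_stream = iter(replies)
    transcript: List[Tuple[str, str]] = []
    for attempt in range(MAX_ATTEMPTS):
        prompt = prompts[min(attempt, len(prompts) - 1)]
        reply = next(reply_stream, "")  # no reply is treated as silence
        transcript.append((prompt, reply))
        answer = classify(reply)
        if answer == "yes":
            return "confirmed", transcript
        if answer == "no":
            escalate(f"{med}: resident declined or deferred the dose.")
            return "escalated", transcript
    escalate(f"{med}: no clear answer after {MAX_ATTEMPTS} reminders.")
    return "escalated", transcript
```

The loop is bounded on purpose: the robot stays warm and patient for a few turns, then hands the situation to a person rather than nagging.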

Hospitality and retail: service recovery and triage

A lobby robot that can only greet people is a gimmick. A robot that can triage requests is useful:

- “I can help with directions, late checkout, or calling the front desk—what do you need?”

- Handling simple policy questions consistently

- Routing edge cases to humans with a good summary (“Guest reports missing item; last seen near gym.”)

This is where conversational AI improves service quality without pretending the robot is the whole solution.
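Triage plus a good handoff summary is mostly plumbing. In this hypothetical sketch, an upstream language-understanding step has already produced an intent and slots; the robot resolves a small set of routine intents itself and sends everything else to the front desk with a one-line summary and the guest’s own words.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical split: requests the lobby robot resolves itself vs. ones it hands off.
SELF_SERVICE = {"directions", "late_checkout_policy", "wifi_password"}


@dataclass
class GuestRequest:
    intent: str                                          # assumed output of upstream NLU
    slots: Dict[str, str] = field(default_factory=dict)
    guest_words: str = ""                                # verbatim utterance, kept for the human


@dataclass
class Route:
    handler: str                   # "robot" or "front_desk"
    summary: Optional[str] = None  # only filled in for handoffs


def route(req: GuestRequest) -> Route:
    """Resolve routine requests; hand everything else to a person with a usable summary."""
    if req.intent in SELF_SERVICE:
        return Route("robot")
    details = "; ".join(f"{k.replace('_', ' ')}: {v}" for k, v in req.slots.items())
    summary = f"Guest reports {req.intent.replace('_', ' ')}"
    if details:
        summary += f" ({details})"
    if req.guest_words:
        summary += f'. Guest said: "{req.guest_words}"'
    return Route("front_desk", summary)
```

Keeping the guest’s verbatim words in the summary lets staff catch anything the intent label flattened away.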

How to evaluate a conversational robot (a quick deployment checklist)

If you’re responsible for automation in a hospital, clinic, hotel, or large facility, use this to pressure-test a solution before you buy or build.

The “5-minute lobby test”

Put the robot in a moderately noisy public area for five minutes and see if it can:

- Handle interruptions without freezing

- Ask clarifying questions instead of repeating “I didn’t understand”

- Keep responses short and task-focused

- Escalate to a human with context when it’s stuck

- Respect privacy norms (lower voice, avoid repeating sensitive info)

If it fails here, it will fail during peak hours.

The “handoff quality” score (what I’d measure)

Track these metrics in your pilot:

- Task completion rate (end-to-end, not just “intent detected”)

- Average turns per task (too many turns = friction)

- Escalation rate and escalation usefulness (did the robot provide a summary?)

- Recovery success rate after misrecognition

- User trust signals (repeat use, voluntary re-engagement)

A conversational AI robot that’s improving should show fewer turns, fewer escalations, and higher repeat use over time.
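The pilot metrics above can be computed from a simple per-interaction log. The `Interaction` fields are an assumed logging schema, and repeat use presumes you can recognize returning users (for example, staff badges or opt-in IDs), which may not be possible or appropriate in every public setting.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Interaction:
    user_id: str                  # pseudonymous ID; assumes repeat users are recognizable
    completed: bool               # task finished end-to-end, not just "intent detected"
    turns: int
    escalated: bool
    escalation_had_summary: bool
    misrecognitions: int


def _rate(num: float, den: int) -> Optional[float]:
    return num / den if den else None


def handoff_scores(log: List[Interaction]) -> Dict[str, Optional[float]]:
    escalated = [i for i in log if i.escalated]
    misheard = [i for i in log if i.misrecognitions > 0]
    uses = Counter(i.user_id for i in log)
    return {
        "task_completion_rate": _rate(sum(i.completed for i in log), len(log)),
        "avg_turns_per_task": _rate(sum(i.turns for i in log), len(log)),
        "escalation_rate": _rate(len(escalated), len(log)),
        # Useful escalation = the human received a summary
        "escalation_usefulness": _rate(sum(i.escalation_had_summary for i in escalated), len(escalated)),
        # Recovery = the task still completed after at least one misrecognition
        "recovery_success_rate": _rate(sum(i.completed for i in misheard), len(misheard)),
        # Trust proxy: share of users who came back for another interaction
        "repeat_user_share": _rate(sum(1 for n in uses.values() if n > 1), len(uses)),
    }
```

Rates return `None` rather than zero when there is nothing to measure, so an empty week doesn’t masquerade as a perfect or failed one.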

Where this fits in the AI in Robotics & Automation series

Within the broader AI in Robotics & Automation landscape, conversational AI is the piece that connects autonomy to operations. Navigation, manipulation, and perception matter—but conversation is how robots get assigned work, explain actions, and earn permission to operate near people.

I’ve found that teams who treat dialogue as part of the robot’s safety system—not as a marketing feature—ship faster and get fewer late-stage surprises.

The next year of robotics deployment won’t be decided by who has the flashiest demo voice. It’ll be decided by who can deliver reliable, context-aware conversation that holds up during noise, interruptions, stress, and edge cases.

If you’re exploring conversational AI robots for healthcare or service environments, the best next step is simple: pick one high-frequency workflow (wayfinding, delivery coordination, intake triage) and run a pilot where you measure handoffs and recovery—not just response quality.

Where do you see the biggest conversation bottleneck today: the front desk, the hallway, or the handoff between staff and automation?