ChatGPT Enterprise is reshaping manufacturing work—faster research, safer design, and better training. See the playbook and steps to scale it.

ChatGPT Enterprise in Manufacturing: The Real Playbook

80% of pilot employees reporting “significant workflow improvements” isn’t a cute pilot result; it’s a signal that AI in manufacturing is shifting from experiment to operating standard. ENEOS Materials rolled out ChatGPT Enterprise company-wide and ended up with more than 1,000 custom GPTs, 90%+ weekly usage, and roughly a 90% cut in HR data aggregation and analysis time.

Here’s why that matters for U.S. manufacturers (and the digital service providers that support them): the same pressure ENEOS faced—labor constraints, rising input costs, and specialized know-how trapped in a few experts—is hitting plants across the United States too. The difference in 2025 is that the “AI in robotics & automation” story isn’t only about cobots and vision systems anymore. It’s also about AI-powered workforce solutions that turn tribal knowledge, standards, and multilingual information into something every operator, engineer, and analyst can use.

This post breaks down what ENEOS did, what’s transferable to U.S. operations, and a practical implementation plan you can copy—without treating generative AI like a toy or a silver bullet.

What ENEOS proves about AI in manufacturing

The core lesson: adoption beats ambition. ENEOS didn’t win because they had the flashiest prototype; they won because they made AI usable, safe, and routine for regular employees.

Their measurable outcomes are the kind that actually change budgets and headcount plans:

- 80% of employees reported significant workflow improvements in the pilot

- 90% reduction in HR data aggregation and analysis time

- Technical and market investigations that took months now take minutes using deep research

- 1,000+ custom GPTs created internally

- 90%+ weekly usage across employees

For U.S. manufacturers, this should reset expectations. The fastest ROI often comes from knowledge work wrapped around the factory—engineering change, maintenance planning, EHS documentation, training, quality investigations—because it’s text-heavy, high-friction, and chronically under-automated.

AI isn’t replacing the plant floor—it’s compressing the “office latency” around it

Most plants don’t lose productivity because people can’t run a machine. They lose productivity because:

- Specs take days to confirm

- A corrosion risk gets rediscovered every few months

- Root-cause investigations stall waiting for someone who “knows that system”

- Training feedback sits in spreadsheets no one has time to analyze

Generative AI doesn’t weld or cut material. It reduces the coordination and analysis drag that slows everything else.

Secure adoption is the real differentiator (especially in the U.S.)

If your AI plan doesn’t satisfy security, it won’t ship. ENEOS explicitly chose ChatGPT Enterprise because they needed a secure environment for proprietary information and outputs accurate enough to meet internal expectations.

That maps cleanly to U.S. realities: aerospace, automotive, chemicals, medical devices, defense-adjacent supply chains—nearly all of them operate under strict customer requirements, cyber frameworks, and contractual controls.

A practical security model that works

If you’re implementing ChatGPT Enterprise (or a comparable enterprise AI stack), anchor the rollout in three rules:

- Define “allowed data” by category, not vibes
  - Public: safe for general prompts
  - Internal: OK with Enterprise controls
  - Restricted: only via approved workflows (retrieval, redaction, role-based access)
  - Regulated: requires legal/compliance review and audit logging

- Use internal knowledge sources intentionally
  - The goal isn’t “let the model browse everything.”
  - The goal is controlled retrieval from standards, SOPs, specs, and lessons learned, so answers are consistent and traceable.

- Treat outputs as draft work unless the workflow proves otherwise
  - Engineering: draft + human verification
  - HR analytics: automated summaries + spot checks
  - Safety: draft recommendations + EHS sign-off
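To make the three rules concrete, here is a minimal Python sketch of a policy check that could sit in front of an AI workflow. The four category names mirror the list above; the workflow names (`standards_retrieval`, `redacted_summary`), the function names, and the boolean compliance flag are hypothetical placeholders, not ChatGPT Enterprise features.

```python
from enum import Enum


class DataCategory(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"
    REGULATED = "regulated"


# Hypothetical names for workflows approved to touch restricted data.
APPROVED_RESTRICTED_WORKFLOWS = {"standards_retrieval", "redacted_summary"}

# Rule 3: the review each output type needs before anyone acts on it.
REVIEW_POLICY = {
    "engineering": "draft + human verification",
    "hr_analytics": "automated summary + spot checks",
    "safety": "draft + EHS sign-off",
}


def handling_rule(category: DataCategory, workflow: str, compliance_approved: bool = False) -> dict:
    """Rule 1: decide whether a request may proceed and which controls apply."""
    if category is DataCategory.PUBLIC:
        return {"allowed": True, "controls": []}
    if category is DataCategory.INTERNAL:
        return {"allowed": True, "controls": ["enterprise_workspace"]}
    if category is DataCategory.RESTRICTED:
        approved = workflow in APPROVED_RESTRICTED_WORKFLOWS
        return {"allowed": approved, "controls": ["role_based_access", "redaction"]}
    # Regulated data stays blocked until legal/compliance signs off, and is always logged.
    return {"allowed": compliance_approved, "controls": ["compliance_review", "audit_log"]}


def required_review(domain: str) -> str:
    """Unknown domains fall back to human verification rather than no review."""
    return REVIEW_POLICY.get(domain, "draft + human verification")
```

For example, `handling_rule(DataCategory.RESTRICTED, "free_chat")` comes back with `allowed` set to False, because free-form chat is not on the approved list. Keeping the policy in one table makes it easy for security and compliance teams to review.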

My take: companies that skip this governance step end up with “AI theatre”—lots of excitement, no scale.

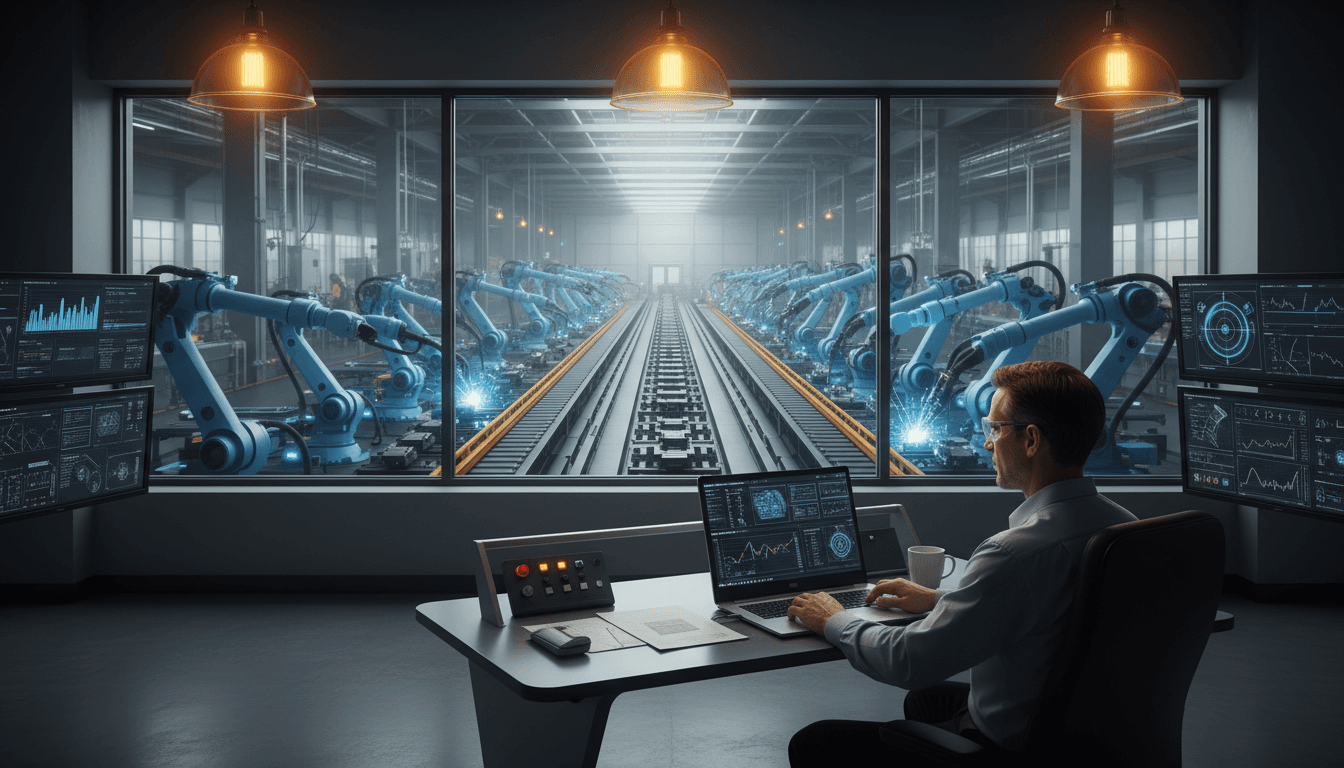

Where ChatGPT fits in robotics & automation (and where it doesn’t)

ChatGPT Enterprise is a force multiplier for robotics & automation programs because it speeds up the parts humans still own. Even highly automated plants have human bottlenecks: changeovers, exceptions, documentation, compliance, troubleshooting, training.

Think of the modern automation stack like this:

- Robots/PLCs/SCADA: execute actions

- Sensors/vision systems: observe

- MES/QMS/EAM/CMMS: coordinate

- Generative AI: explains, summarizes, drafts, compares, translates, and recommends

ENEOS’s vision of eventually controlling equipment through natural language is compelling, but the near-term win is more grounded: use AI to make engineers and operators faster, not to bypass controls. In U.S. plants, you’ll still want deterministic control layers (PLC logic, safety interlocks) regardless of how “conversational” the interface becomes.

The highest-ROI use cases look boring on paper

ENEOS’s most valuable examples weren’t flashy demos. They were repeatable workflows:

- Plant design guidance based on company standards

- Corrosion/material selection checks

- Cross-language technical research

- HR training analysis and aggregation

Those are exactly the kinds of use cases that scale across multiple facilities.

Three use cases worth copying in U.S. operations

Start with use cases that have (1) clear owners, (2) repeat frequency, and (3) existing text/data. ENEOS hit that trifecta.

1) “Standards GPT” for engineering and maintenance

ENEOS built a custom GPT for plant design grounded in company standards. Given inputs such as fluid type, flow rate, pipe diameter, pressure loss, and materials, it quickly produced optimized specs and flagged risks.

How to translate that to U.S. manufacturing:

- Create a Design Standards Assistant that references:
  - piping/material standards
  - equipment libraries
  - preferred vendors
  - internal lessons learned
  - failure reports

- Build prompt templates for:
  - corrosion risk screening
  - spec comparison for substitutes
  - change request drafts
  - MOC (management of change) summaries
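For spec comparison on substitutes, one option is to compute field-level differences deterministically and hand only those to the model to explain, so the list of what changed never depends on generation. A minimal sketch, assuming specs arrive as flat dictionaries; the field names and the `FIELD_CONCERNS` mapping are hypothetical and would come from your own standards.

```python
from __future__ import annotations

# Hypothetical mapping from spec fields to the concern a change would affect.
FIELD_CONCERNS = {
    "material": "safety",
    "max_pressure_bar": "safety",
    "max_temp_c": "safety",
    "surface_finish_ra_um": "quality",
    "certification": "compliance",
}


def compare_specs(current: dict, substitute: dict) -> list[tuple[str, str, object, object]]:
    """Return (concern, field, current_value, substitute_value) for every field that differs.

    Fields missing from one spec appear as None; unmapped fields are tagged "review".
    """
    diffs = []
    for name in sorted(set(current) | set(substitute)):
        old, new = current.get(name), substitute.get(name)
        if old != new:
            diffs.append((FIELD_CONCERNS.get(name, "review"), name, old, new))
    return diffs
```

The resulting tuples can be pasted into a spec-comparison prompt, so the model's job narrows to explaining why each difference matters.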

Snippet-worthy rule: If it’s written in a standard, it should be searchable in seconds.

2) Deep research for multilingual supply chains and compliance

ENEOS operates a plant in Hungary and used deep research to compress investigations from months to minutes, translating Hungarian sources into Japanese.

U.S. parallel: suppliers, regulations, and technical publications span languages and jurisdictions. A research workflow can support:

- supplier due diligence packets

- regulatory change monitoring (state-by-state and international)

- competitive benchmarking

- incident/recall research

The trick is to standardize outputs so the work is reusable:

- one-page brief

- key claims with citations from internal documents

- assumptions clearly listed

- “what would change my mind” section
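The standard brief format can also be checked in code before a brief is shared. This is a sketch only; `ResearchBrief`, `Claim`, and the check rules are hypothetical, and citations are modeled as plain strings.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Claim:
    text: str
    source: str = ""  # citation to a document; empty means uncited


@dataclass
class ResearchBrief:
    title: str
    summary: str
    claims: list[Claim] = field(default_factory=list)
    assumptions: list[str] = field(default_factory=list)
    would_change_my_mind: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """List every way this brief falls short of the standard format."""
        issues = []
        if not self.summary.strip():
            issues.append("missing summary")
        if not self.claims:
            issues.append("no key claims")
        issues += [f"uncited claim: {c.text}" for c in self.claims if not c.source]
        if not self.assumptions:
            issues.append("no assumptions listed")
        if not self.would_change_my_mind:
            issues.append("missing 'what would change my mind'")
        return issues

    def render(self) -> str:
        """Render the brief as plain text in a fixed section order."""
        lines = [self.title, "", self.summary, "", "Key claims:"]
        lines += [f"- {c.text} [{c.source or 'UNCITED'}]" for c in self.claims]
        lines += ["", "Assumptions:"] + [f"- {a}" for a in self.assumptions]
        lines += ["", "What would change my mind:"] + [f"- {w}" for w in self.would_change_my_mind]
        return "\n".join(lines)
```

Because every brief has the same sections in the same order, briefs from different teams can be compared and reused without reformatting.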

3) Training quality and workforce enablement (the sleeper ROI)

ENEOS HR used a custom GPT for training analysis: tasks that took 1–2 hours dropped to about 20 seconds. After building an internal tool with no prior coding experience, the team also cut data aggregation time by roughly 90%.

U.S. manufacturers should pay attention because in 2025 the labor market for many skilled trades is still tight, and onboarding is expensive.

Practical applications:

- auto-summarize training feedback by role, site, and shift

- generate targeted refresher micro-lessons from incidents/near-misses

- draft skill matrices and individualized learning plans

- create “explain it like I’m new” versions of SOPs
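The aggregation half of training-feedback analysis doesn't need a model at all. Grouping by role, site, and shift can be done deterministically, which leaves the model to summarize the grouped comments. A minimal sketch, assuming feedback rows are dictionaries with hypothetical `role`, `site`, `shift`, `rating`, and `comment` fields:

```python
from collections import defaultdict
from statistics import mean


def summarize_feedback(rows, keys=("role", "site", "shift")):
    """Group feedback rows by role/site/shift; return counts, average rating, and comments."""
    groups = defaultdict(list)
    for row in rows:
        groups[tuple(row[k] for k in keys)].append(row)
    return {
        group: {
            "responses": len(items),
            "avg_rating": round(mean(r["rating"] for r in items), 2),
            # Non-empty comments are what you would pass to the model to summarize.
            "comments": [r["comment"] for r in items if r.get("comment")],
        }
        for group, items in groups.items()
    }
```

Splitting the work this way keeps the numbers auditable and limits the model to the part it is actually good at: reading free text.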

Opinion: If you’re investing in automation but not investing in faster training, you’re building a plant only your most experienced people can run.

Implementation plan: how to scale without chaos

A working rollout has three tracks: people, process, and platform. ENEOS used a cross-functional volunteer team first, then scaled. That sequencing matters.

Step 1: Pick a narrow pilot with real pain

Choose 1–2 workflows that have:

- high frequency (weekly or daily)

- measurable cycle time

- clear owners

- existing documents (standards, SOPs, templates)

Examples: EHS report drafts, maintenance troubleshooting notes, quality deviation summaries.
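The four pilot criteria can be written down as an explicit filter, so candidate workflows are screened the same way at every site. In this sketch, the thresholds (at least one run per week, at least one source document) and the tie-break of ranking by frequency are assumptions, and the field names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    runs_per_week: float       # how often the workflow happens
    cycle_time_tracked: bool   # can before/after cycle time be measured?
    owner: str                 # accountable person or team ("" if none)
    source_docs: int           # standards, SOPs, templates already on hand


def qualifies(c: Candidate) -> bool:
    """Apply the four pilot criteria; the thresholds are assumptions."""
    return (
        c.runs_per_week >= 1
        and c.cycle_time_tracked
        and bool(c.owner.strip())
        and c.source_docs > 0
    )


def pick_pilots(candidates: list[Candidate], limit: int = 2) -> list[Candidate]:
    """Keep qualifying workflows, most frequent first, capped at 1-2 pilots."""
    eligible = [c for c in candidates if qualifies(c)]
    return sorted(eligible, key=lambda c: c.runs_per_week, reverse=True)[:limit]
```

With illustrative numbers (not measured data), a workflow like quality deviation summaries would drop out if nobody tracks its cycle time yet, which is itself a useful finding before the pilot starts.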

Step 2: Build 5–10 “golden prompts” and two custom GPTs

Don’t let everyone start from scratch. Give them strong defaults:

- “Summarize this incident report into: what happened, why, corrective actions, prevention.”

- “Compare these two specs and list differences that affect safety, quality, or compliance.”

- “Draft an operator-facing one-page SOP from this engineering procedure.”
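One lightweight way to keep golden prompts consistent is a shared registry that refuses to render when a required input is missing. The sketch below uses Python's `string.Template`, whose `substitute` method raises `KeyError` for any unfilled placeholder; the registry keys and placeholder names are hypothetical.

```python
from string import Template

# Hypothetical shared registry built from the golden prompts listed above.
GOLDEN_PROMPTS = {
    "incident_summary": Template(
        "Summarize this incident report into: what happened, why, "
        "corrective actions, prevention.\n\n$report"
    ),
    "spec_compare": Template(
        "Compare these two specs and list differences that affect "
        "safety, quality, or compliance.\n\nSpec A:\n$spec_a\n\nSpec B:\n$spec_b"
    ),
    "operator_sop": Template(
        "Draft an operator-facing one-page SOP from this engineering "
        "procedure.\n\n$procedure"
    ),
}


def render_prompt(name: str, **fields: str) -> str:
    """Fill a golden prompt; Template.substitute raises KeyError if a field is missing."""
    return GOLDEN_PROMPTS[name].substitute(**fields)
```

Failing loudly on a missing field is the point: a spec comparison sent with only one spec attached is worse than no comparison.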

Then build custom GPTs around stable knowledge:

- Standards GPT (engineering/maintenance)

- Training Analytics GPT (HR/operations)

Step 3: Measure outcomes like an operations leader

Track metrics that executives care about:

- time-to-first-draft (minutes)

- cycle time reduction (hours/days)

- rework rate (how often outputs are unusable)

- adoption (% weekly active users)

- safety/quality indicators (near-miss analysis completion rate, deviation closure time)
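Most of these metrics reduce to simple arithmetic over pilot logs. A minimal sketch, assuming you record draft times in minutes, cycle times in hours, a count of drafts reviewers rejected as unusable, and weekly active versus enabled users; the function name and inputs are hypothetical, and safety/quality indicators would come from your existing EHS and QMS reporting.

```python
from __future__ import annotations

from statistics import mean, median


def pilot_metrics(
    draft_minutes: list[float],
    cycle_hours_before: list[float],
    cycle_hours_after: list[float],
    drafts_total: int,
    drafts_unusable: int,
    weekly_active_users: int,
    enabled_users: int,
) -> dict:
    """Summarize a pilot with operations-style metrics."""
    return {
        # Median keeps a few unusually slow drafts from skewing the number.
        "time_to_first_draft_min": median(draft_minutes),
        "cycle_time_reduction_pct": round(
            100 * (1 - mean(cycle_hours_after) / mean(cycle_hours_before)), 1
        ),
        "rework_rate_pct": round(100 * drafts_unusable / drafts_total, 1),
        "weekly_active_pct": round(100 * weekly_active_users / enabled_users, 1),
    }
```

With illustrative inputs, `pilot_metrics([12, 5, 9], [8, 10], [2, 2.5], 40, 6, 90, 120)` reports a 9-minute time-to-first-draft, a 75.0% cycle-time reduction, a 15.0% rework rate, and 75.0% weekly active use.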

ENEOS shared adoption numbers and time reductions. That’s the play.

Step 4: Put guardrails where they belong

Guardrails shouldn’t be “don’t use AI.” They should be:

- role-based access to internal knowledge

- approved templates for regulated docs

- mandatory human review for safety-critical decisions

- auditability for who queried what and why
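Two of these guardrails, role-based access and an audit trail of who queried what and why, can be sketched together. The substring match stands in for a real retrieval system, and `Doc`, `retrieve`, and the in-memory `AUDIT_LOG` are hypothetical; a production system would write to durable, access-controlled storage instead of a list.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]


# In-memory stand-in for an audit store.
AUDIT_LOG: list[dict] = []


def retrieve(user: str, role: str, purpose: str, query: str, corpus: list[Doc]) -> list[Doc]:
    """Return only documents the role may see, and record every query with its purpose."""
    if not purpose.strip():
        raise ValueError("a stated purpose is required for auditability")
    visible = [
        d for d in corpus
        if role in d.allowed_roles and query.lower() in d.text.lower()
    ]
    AUDIT_LOG.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "purpose": purpose,
        "query": query,
        "returned": [d.doc_id for d in visible],
    })
    return visible
```

Filtering before the model sees anything means access control doesn't depend on the model following instructions.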

If you want AI on the shop floor, you’ll need this discipline anyway.

What digital service providers should take from this

This is a services opportunity, not just a software story. U.S. manufacturers rarely fail to buy tools; they fail to operationalize them.

If you provide technology and digital services, the ENEOS pattern points to high-demand offerings:

- custom GPT design for standards and SOPs

- knowledge base cleanup and taxonomy (the unglamorous work that makes AI accurate)

- secure rollout playbooks (access control, logging, policy)

- change management and training for frontline supervisors

- integration with MES/QMS/EAM systems so AI outputs land where work happens

Done well, this becomes a compounding advantage: each new GPT, prompt template, and validated workflow reduces future friction.

The direction of travel for AI in robotics & automation

The next phase is “natural language over operations,” not “chatbots on the side.” ENEOS’s long-term view—internal models embedded in equipment and conversational control—matches where industrial automation is headed. But the winners will be the teams that earn trust through boring reliability first.

If you’re a U.S. manufacturer planning 2026 initiatives, I’d bet on this order:

- Standardize knowledge workflows (specs, SOPs, training, investigations)

- Connect AI to validated internal sources

- Introduce AI-assisted decision support for maintenance and quality

- Only then consider conversational interfaces for machines—with deterministic safety layers intact

AI in manufacturing doesn’t need hype. It needs reps.

If you’re considering ChatGPT Enterprise for manufacturing, the question to ask isn’t “Can it write a good answer?” It’s: Can we turn our best practices into a system the whole workforce can use every week?