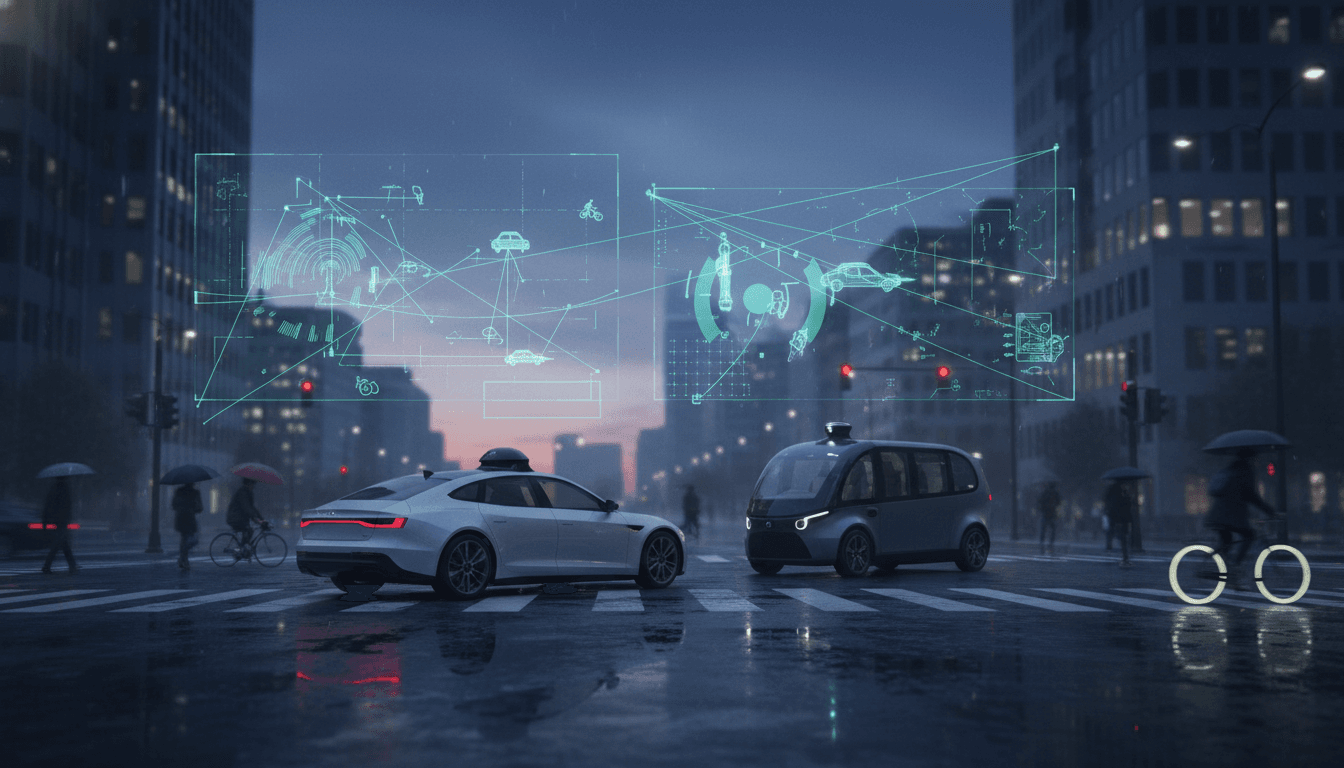

AV crash data can improve AI safety, but companies hoard it as IP. Here’s how scenario sharing and better incentives raise trust without exposing secrets.

AV Crash Data Sharing: Safer AI Without Giving Away IP

Most companies get this wrong: they treat autonomous vehicle crash data like a treasure chest that must stay locked—then act surprised when public trust stalls and regulators tighten the screws.

Crash and “near-miss” data is the fuel that makes AI-driven robotics safer, whether the robot is a self-driving taxi in San Francisco or an autonomous forklift in a distribution center. The problem is that the strongest incentives today push companies to hoard that fuel, because the same data that improves safety also exposes how their systems work.

Cornell researchers recently put words (and a roadmap) to what many AV safety teams already feel in their bones: the market rewards secrecy, not transparency. Their work doesn’t argue that AV companies should hand competitors raw logs and video. It argues something more practical: we can separate safety knowledge from proprietary implementation and design data-sharing mechanisms that raise the safety floor for everyone.

Why companies don’t share AV crash data

The simplest answer: crash data is a competitive advantage. If your model fails in a weird edge case (say, a pedestrian appears from behind a van while a cyclist swerves into your lane), your team gets a highly specific training signal. Fix it once and you've bought yourself a lead.

Cornell’s team interviewed 12 employees working on AV safety and found something that should worry anyone building AI in robotics and automation: there isn’t a shared playbook. Each company has its own “homegrown” safety datasets and internal practices, which means the industry repeatedly learns the same painful lessons in parallel.

Barrier #1: Safety data reveals the secret sauce

Crash and incident datasets aren’t just “what happened.” They often implicitly encode:

- Sensor placement and calibration decisions

- Perception and tracking behavior (what the system noticed or missed)

- Planning assumptions (what it believed other road users would do)

- Testing workflows and tooling

Even if a company wants to collaborate, it’s hard to share without exposing the machine learning pipeline and operational details that differentiate the product.

Barrier #2: The default mindset is “private knowledge”

The interviewees described safety knowledge as contested territory—something that makes their employer safer and more profitable. That framing matters because it shapes everything downstream: legal review, executive incentives, and what safety teams are allowed to publish.

If you’re running AI in robotics, you’ve seen this movie before. The same tension shows up when warehouse robotics vendors hesitate to share collision data, or when healthcare robotics teams keep adverse-event logs internal.

Why this matters beyond autonomous vehicles

AVs are the loudest example, but they aren’t unique. Autonomy scales risk: as systems take on more real-world responsibility, the cost of each unknown edge case goes up.

In the broader AI in Robotics & Automation world, the parallels are direct:

- Industrial robots: near-miss and contact events teach safer motion planning and human-aware navigation.

- Mobile robots in logistics: collision and obstruction logs improve perception in messy environments (holiday peak season chaos is basically a stress test).

- Service robots: user-interaction failures teach better intent recognition and safer behaviors around children, older adults, and crowded public spaces.

A blunt stance: if safety learning stays siloed, the industry will keep paying for it twice—first in incidents, then in delays from public pushback and reactive regulation.

What regulators require today (and why it’s not enough)

Current U.S. and European reporting requirements tend to focus on surface-level fields: date/month, manufacturer, injury status, and other high-level descriptors. That’s useful for counting incidents, but it’s weak for preventing the next one.

Safety teams need context that typical reporting doesn’t capture:

- Pre-incident scene dynamics (occlusions, unusual trajectories)

- Behavioral violations by human drivers (running a red light, illegal turns)

- Environment anomalies (construction signage, debris, lost cargo, glare)

- System state (what the AV believed was happening vs. reality)

If the only shared data is a thin incident summary, every company still has to rediscover the same scenario patterns through its own fleet.

The practical middle ground: share safety knowledge, not raw data

The Cornell roadmap lands on a key idea that’s widely applicable in robotics: untangle public safety knowledge from private technical artifacts.

Here’s how that looks in practice.

1) Publish structured “scenario reports” instead of raw logs

A scenario report is a standardized description of the event that avoids exposing proprietary infrastructure.

For example, rather than sharing raw camera feeds and internal annotations, an AV company could share:

- Road type (multi-lane arterial, residential, freeway on-ramp)

- Actor layout (pedestrian emerging from occlusion at 8–12 m, cyclist crossing from right)

- Timing and triggers (lead vehicle hard brake, occlusion clears at T-0.7 s)

- Weather/lighting (night, wet road, headlight glare)

- Outcome class (hard braking, near miss, low-speed contact)

This is “safety knowledge” another team can use to create tests and improve policies—without copying your stack.
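To make the split between shareable and proprietary data concrete, here is a minimal sketch, assuming a hypothetical internal record (`InternalIncident`) that bundles a shareable `ScenarioReport` with proprietary artifacts such as log URIs and model versions. All class, field, and function names are illustrative, not a published schema. The export function serializes only the scenario fields listed above, so raw logs and calibration details never reach the output.

```python
import json
from dataclasses import asdict, dataclass, field
from enum import Enum


class OutcomeClass(str, Enum):
    # Outcome classes from the list above; a real taxonomy would be larger.
    HARD_BRAKING = "hard_braking"
    NEAR_MISS = "near_miss"
    LOW_SPEED_CONTACT = "low_speed_contact"


@dataclass
class ScenarioReport:
    """Shareable safety knowledge: what happened, not how the stack works."""
    road_type: str
    actor_layout: list[str]
    timing_and_triggers: list[str]
    weather_lighting: str
    outcome: OutcomeClass


@dataclass
class InternalIncident:
    """Hypothetical internal record that mixes shareable and proprietary data."""
    report: ScenarioReport
    # Proprietary artifacts: these stay inside the company.
    raw_log_uri: str
    sensor_calibration_id: str
    model_version: str
    perception_annotations: dict = field(default_factory=dict)


def export_scenario(incident: InternalIncident) -> str:
    """Serialize only the ScenarioReport; proprietary fields are never read."""
    payload = asdict(incident.report)
    payload["outcome"] = incident.report.outcome.value
    return json.dumps(payload, sort_keys=True)


# Example values are illustrative, not taken from a real incident.
incident = InternalIncident(
    report=ScenarioReport(
        road_type="multi-lane arterial",
        actor_layout=["pedestrian emerging from occlusion at 8-12 m",
                      "cyclist crossing from right"],
        timing_and_triggers=["lead vehicle hard brake",
                             "occlusion clears at T-0.7 s"],
        weather_lighting="night, wet road, headlight glare",
        outcome=OutcomeClass.NEAR_MISS,
    ),
    raw_log_uri="internal://logs/example",
    sensor_calibration_id="cal-example",
    model_version="planner-example",
)
print(export_scenario(incident))
```

The design choice is an allowlist: export reads from the shareable sub-record only, so adding a new proprietary field internally cannot leak it by accident.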

Robotics translation: factories can share “contact event scenarios” (human steps into shared zone during pallet handoff) without uploading full video of the facility layout.

2) Use “exam questions” that every autonomy stack must pass

One of the most actionable proposals is to treat safety like certification exams: define scenario-based tests that systems must pass to operate.

A good safety test is an exam question: clear setup, measurable pass/fail criteria, and repeatable evaluation.

In AVs, that might be “pedestrian enters crosswalk from behind a stopped truck while adjacent lane traffic flows.” In mobile robotics, it might be “human approaches robot from rear while robot reverses out of a charging bay.”

The win is that companies can contribute scenarios they’ve learned from—while still keeping their data and implementation private.
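An exam question needs a setup and a pass/fail rule that anyone can re-run. The sketch below encodes the mobile-robotics example, assuming a hypothetical 0.5 m minimum-separation criterion and a simulator that emits time-stamped robot and human positions. Both the threshold and the trace format are assumptions for illustration; real criteria would come out of the shared standard.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ExamQuestion:
    """A scenario-based test: clear setup, measurable pass/fail, repeatable."""
    scenario_id: str
    setup: str
    min_separation_m: float  # pass criterion (hypothetical threshold)


# One simulated step: time (s), robot position (x, y), human position (x, y).
Sample = tuple[float, tuple[float, float], tuple[float, float]]


def min_separation(trace: list[Sample]) -> float:
    """Closest robot-human distance over the whole trace, in meters."""
    return min(math.dist(robot, human) for _, robot, human in trace)


def grade(question: ExamQuestion, trace: list[Sample]) -> bool:
    """Pass only if the robot never came closer than the required separation."""
    return min_separation(trace) >= question.min_separation_m


reverse_out_of_bay = ExamQuestion(
    scenario_id="mobile-robot/rear-approach-while-reversing",
    setup="Human approaches from the rear while the robot reverses out of a charging bay.",
    min_separation_m=0.5,
)

# A hypothetical simulator trace in which the robot stops as the human approaches.
trace: list[Sample] = [
    (0.0, (0.0, 0.0), (-3.0, 0.0)),
    (0.5, (-0.3, 0.0), (-2.2, 0.0)),
    (1.0, (-0.5, 0.0), (-1.4, 0.0)),
    (1.5, (-0.5, 0.0), (-1.1, 0.0)),
]
print(grade(reverse_out_of_bay, trace))  # True: closest approach is about 0.6 m
```

Because the question only sees positions over time, any company can grade its own stack against it without revealing how that stack decided to stop.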

3) Create a “virtual proving ground” with shared scenarios

The Cornell team points to the idea of a government-backed testing environment—a virtual city with standardized intersections, pedestrian-heavy zones, and tricky merges.

I'm strongly in favor of this, but only if the evaluation is transparent, adversarial, and comparable:

- Transparent: scenario definitions and pass criteria are public.

- Adversarial: tests evolve as failure modes are found.

- Comparable: results are reported in a way that consumers and fleet buyers can understand.

Done right, this becomes the autonomy equivalent of crash-test ratings—except focused on AI behavior and edge cases.
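The three requirements can be sketched as a versioned scenario suite plus a scorecard. This is a minimal illustration under assumed names (`ScenarioSuite`, `add_failure_mode`, `scorecard`) and made-up scenario IDs using the categories mentioned above: publishing the suite covers transparency, bumping the version whenever a failure mode is added covers the adversarial part, and stamping every result with the suite version keeps scores comparable.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class ScenarioSuite:
    """Hypothetical public, versioned scenario library for a virtual proving ground."""
    version: int = 0
    # scenario_id -> category; definitions and pass criteria would be published too.
    scenarios: dict[str, str] = field(default_factory=dict)

    def add_failure_mode(self, scenario_id: str, category: str) -> None:
        """Each newly found failure mode becomes a test and bumps the version."""
        if scenario_id not in self.scenarios:
            self.scenarios[scenario_id] = category
            self.version += 1


def scorecard(suite: ScenarioSuite, results: dict[str, bool]) -> dict[str, str]:
    """Pass counts per category, stamped with the suite version."""
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for scenario_id, category in suite.scenarios.items():
        total[category] += 1
        # A scenario with no submitted result counts as a failure.
        if results.get(scenario_id, False):
            passed[category] += 1
    return {cat: f"{passed[cat]}/{total[cat]} (suite v{suite.version})"
            for cat in sorted(total)}


suite = ScenarioSuite()
suite.add_failure_mode("ped-enters-crosswalk-behind-stopped-truck", "pedestrian-heavy zones")
suite.add_failure_mode("short-merge-with-dense-traffic", "merges")
suite.add_failure_mode("unprotected-left-with-occluded-oncoming", "intersections")
print(scorecard(suite, {"ped-enters-crosswalk-behind-stopped-truck": True}))
```

Counting missing results as failures is a deliberate choice: a company cannot improve its score by skipping the hard scenarios.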

4) Use trusted intermediaries to reduce legal and competitive risk

Academic institutions and independent research organizations can act as data intermediaries. That can look like:

- Secure data enclaves where companies contribute sensitive data under strict access controls

- Aggregated reporting where only scenario patterns (not raw artifacts) are released

- Joint research collaborations with pre-negotiated publication boundaries

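This approach already exists in other safety-critical domains. Aviation is the best-known example: NASA's Aviation Safety Reporting System (ASRS) collects confidential incident and near-miss reports from pilots and other aviation workers and publishes de-identified findings. The AV sector can borrow those governance patterns instead of inventing everything from scratch.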
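Aggregated reporting usually relies on small-cell suppression: the intermediary publishes a count only when it is large enough, and drawn from enough contributors, that no single company can be singled out. The sketch below applies that technique to scenario patterns. The thresholds (`min_count=5`, `min_contributors=3`) and the company labels are hypothetical; an intermediary would set real values by policy.

```python
from collections import defaultdict


def aggregate_patterns(
    reports: list[tuple[str, str]],
    min_count: int = 5,
    min_contributors: int = 3,
) -> dict[str, int]:
    """Release per-pattern counts, suppressing small or single-source cells.

    Each report is (contributor, scenario_pattern). The thresholds are
    hypothetical defaults; an intermediary would set them by policy.
    """
    counts: dict[str, int] = defaultdict(int)
    contributors: dict[str, set[str]] = defaultdict(set)
    for company, pattern in reports:
        counts[pattern] += 1
        contributors[pattern].add(company)
    return {
        pattern: n
        for pattern, n in sorted(counts.items())
        if n >= min_count and len(contributors[pattern]) >= min_contributors
    }


reports = (
    [("A", "pedestrian from occlusion")] * 2
    + [("B", "pedestrian from occlusion")] * 2
    + [("C", "pedestrian from occlusion")] * 2
    + [("A", "lost cargo on freeway")] * 5
)
print(aggregate_patterns(reports))  # {'pedestrian from occlusion': 6}
```

The "lost cargo" pattern is withheld even though it has five reports, because all of them come from one company and publishing it would effectively disclose that company's incidents.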

Incentives: the missing piece nobody can ignore

If you want more data sharing, you need more than good intentions. You need incentives that make sharing the rational choice.

Here are incentive mechanisms that actually fit how robotics and automation businesses operate:

Carrots (benefits for sharing)

- Faster approvals: regulators prioritize permits or expansions for companies contributing to shared safety evaluations.

- Procurement advantage: fleet buyers (logistics, transit agencies) require participation in scenario reporting as part of RFPs.

- Insurance pricing: insurers offer better terms when companies demonstrate continuous improvement against shared benchmarks.

Sticks (costs for not sharing)

- Mandatory scenario disclosure above a severity threshold (near-misses included, not just crashes)

- Standardized incident taxonomy so companies can’t hide behind vague labels

- Third-party audits of safety processes and reporting completeness

One opinionated point: if near-miss reporting isn’t part of the system, we’re optimizing for headlines, not safety. Near misses are where learning happens at scale.
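A severity threshold and a standardized taxonomy are both simple rules once written down. Here is a minimal sketch, assuming hypothetical severity levels and incident types drawn from the context list earlier in this post: ordering severities lets a regulator set the disclosure bar at "near miss", and a closed vocabulary with no catch-all value rejects vague labels at submission time.

```python
from enum import Enum, IntEnum


class Severity(IntEnum):
    # Ordered levels so a threshold comparison works; the levels are hypothetical.
    NO_EVENT = 0
    NEAR_MISS = 1
    PROPERTY_DAMAGE = 2
    INJURY = 3


class IncidentType(str, Enum):
    # Controlled vocabulary: there is deliberately no catch-all "other" value.
    OCCLUDED_PEDESTRIAN = "occluded_pedestrian"
    RED_LIGHT_VIOLATION_BY_OTHER = "red_light_violation_by_other"
    CONSTRUCTION_ZONE = "construction_zone"
    ROAD_DEBRIS = "road_debris"


# Hypothetical policy: anything at or above a near miss must be disclosed.
DISCLOSURE_THRESHOLD = Severity.NEAR_MISS


def must_disclose(severity: Severity) -> bool:
    return severity >= DISCLOSURE_THRESHOLD


def classify(label: str) -> IncidentType:
    """Map a submitted label onto the taxonomy; unknown or vague labels raise ValueError."""
    return IncidentType(label)


print(must_disclose(Severity.NEAR_MISS))  # True
print(must_disclose(Severity.NO_EVENT))   # False
try:
    classify("other")
except ValueError as err:
    print(f"rejected: {err}")
```

Setting the threshold at `NEAR_MISS` rather than `PROPERTY_DAMAGE` is the code-level version of the point above: near misses enter the shared record instead of staying invisible.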

“People also ask” (and the real answers)

Is AV crash data sharing a privacy risk?

Yes—if you share raw video and location traces. That’s why structured scenario reporting and intermediated access matter. Privacy-by-design has to be part of the framework.

Won’t sharing data reduce innovation?

Sharing raw artifacts can. Sharing safety knowledge tends to do the opposite: it raises baseline competence and shifts competition to product quality, cost, and deployment excellence.

Can these ideas apply to warehouse robots and industrial automation?

Directly. Any autonomy system that learns from real-world incidents can benefit from shared scenario libraries, standardized reporting, and independent evaluation.

What to do next if you build or buy autonomous systems

If you’re an AV company, robotics vendor, or an enterprise deploying automation, you can push this forward without waiting for perfect regulation.

- Adopt a scenario taxonomy now: define how you describe incidents and near misses in a way that’s shareable.

- Separate safety learning from proprietary artifacts: design internal tooling so scenario summaries are easy to export without exposing your ML pipeline.

- Contribute to a shared “exam bank”: start with a small set of scenarios and measurable pass/fail criteria.

- Put sharing into contracts: if you’re the buyer, require scenario reporting participation from vendors.

- Measure progress: track how many incidents map to known scenarios versus truly novel failures. Novelty rate is a meaningful safety KPI.
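The novelty-rate KPI is a simple ratio: incidents that match no known scenario, divided by all incidents in the period. The sketch below assumes a hypothetical coarse `ScenarioKey` and exact-match lookup; real matching would likely be fuzzier (clustering or human review). Folding novel incidents back into the library closes the feedback loop, so a falling novelty rate over time suggests the shared scenario bank is keeping up with real-world failures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScenarioKey:
    """Hypothetical coarse key used to match an incident to a known scenario."""
    road_type: str
    trigger: str
    outcome: str


def novelty_rate(incidents: list[ScenarioKey], known: set[ScenarioKey]) -> float:
    """Fraction of incidents that match no scenario already in the library."""
    if not incidents:
        return 0.0
    novel = sum(1 for key in incidents if key not in known)
    return novel / len(incidents)


def update_library(incidents: list[ScenarioKey], known: set[ScenarioKey]) -> set[ScenarioKey]:
    """Fold novel incidents into the library so they become known scenarios."""
    return known | set(incidents)


occlusion = ScenarioKey("residential", "pedestrian emerges from occlusion", "near_miss")
cargo = ScenarioKey("freeway on-ramp", "lost cargo", "hard_braking")
signage = ScenarioKey("multi-lane arterial", "construction signage", "near_miss")

known = {occlusion}
this_quarter = [occlusion, cargo, occlusion, signage]
print(novelty_rate(this_quarter, known))  # 0.5
known = update_library(this_quarter, known)
print(novelty_rate(this_quarter, known))  # 0.0
```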

Safety in AI-powered robotics isn’t just about better models. It’s about better feedback loops across the industry.

The question for 2026 planning cycles is straightforward: will autonomy teams keep treating crash data as a private moat—or will we build the institutions and incentives that let safety knowledge spread without giving away the business?