Pramata’s AI-focused CLM approach highlights what matters in 2025: contract discovery, renewal control, and audit-ready obligation tracking for procurement and compliance.

Pramata CLM Review: AI Contract Ops for Procurement

Most procurement teams don’t have a “contract problem.” They have a contract visibility problem.

It shows up in the worst moments: year-end renewals hit all at once, a supplier dispute turns into a scramble for the “latest version,” or Legal asks for proof of flow-down clauses and you’re stuck stitching together PDFs from inboxes and shared drives. The cost isn’t abstract—missed notice windows, unmanaged obligations, inconsistent terms, and unnecessary vendor sprawl show up directly in margin and risk.

Spend Matters’ recent vendor analysis positions Pramata as an end-to-end contract lifecycle management (CLM) platform with a heavy emphasis on AI, especially for large organizations managing renewals, obligations, and contract discovery at scale. That framing matters for anyone following our AI in Legal & Compliance series, because CLM has become the operating system for how enterprises translate legal intent into procurement execution.

Why CLM is becoming procurement’s “AI control plane”

Answer first: In 2025, the highest-impact AI in procurement is the AI that can reliably answer, “What did we agree to, with whom, and what happens next?” That requires CLM.

AI procurement strategies often start with sourcing copilots or invoice automation. Those are useful, but they don’t resolve the biggest governance gap: contracts are still treated as documents, not data. When contracts stay unstructured, AI can’t consistently:

- Flag renewal cliffs across regions and business units

- Detect policy conflicts (e.g., insurance, data protection, audit rights)

- Track obligations (SLAs, rebates, reporting, security attestations)

- Enforce clause standards across supplier tiers

- Provide defensible answers during audits or disputes

Here’s the stance I’ll take: If you’re serious about AI in legal and compliance, CLM can’t be a “Legal tool.” It has to be a shared system of record across Legal, Procurement, Finance, and Risk.

Pramata’s market narrative—helping large enterprises find contracts, manage renewals, meet/enforce obligations, and consolidate vendors—lines up exactly with where AI delivers measurable outcomes.

The problem CLM is actually solving (and why spreadsheets fail)

Spreadsheets and shared drives aren’t “bad.” They’re just the wrong layer for operational control.

A contract portfolio is a living system:

- Contract metadata changes (renewal dates, termination rights, pricing schedules)

- Supplier relationships shift (M&A, performance issues, new regulatory exposure)

- Internal policies evolve (privacy terms, security baselines, ESG requirements)

If your “system” can’t update and notify, you don’t have a system—you have a graveyard.

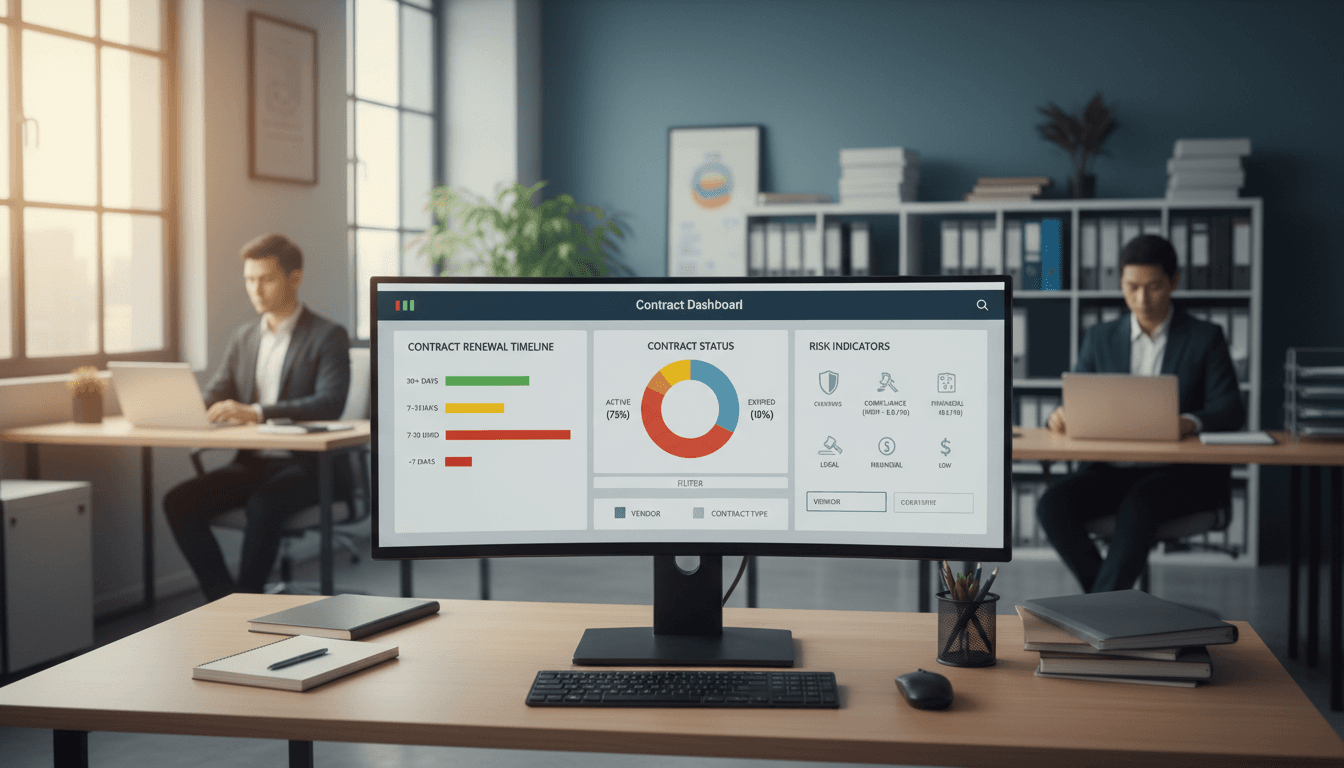

What to look for in an AI-forward CLM (and where Pramata fits)

Answer first: An AI-forward CLM isn’t about flashy summarization. It’s about accurate extraction, repeatable workflows, and defensible audit trails.

Spend Matters highlights Pramata’s emphasis on AI and its fit for large organizations. Based on that positioning, buyers should evaluate Pramata (and any CLM vendor) against three practical dimensions.

1) Contract discovery and normalization at enterprise scale

For large organizations, the first CLM milestone is rarely “authoring.” It’s finding what already exists.

A realistic enterprise contract landscape includes:

- Multiple ERPs and procurement suites

- M&A-created repositories (SharePoint sites, legacy CLM tools, local drives)

- Contract artifacts outside Legal (SOWs, change orders, amendments)

An AI-centric CLM must do more than ingest files. It should normalize a messy portfolio into a searchable, structured repository.

What I’d press on during demos:

- How does the platform handle amendments and precedence (what’s the “governing” clause)?

- Can it connect related documents (MSA → SOW → change orders → renewals)?

- What’s the error-handling process when extraction confidence is low?

If a vendor can’t answer those clearly, the AI layer becomes a liability.
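To make the amendment and precedence questions concrete, here is a minimal sketch of how a contract family might be linked and queried for its governing clause. Everything in it is hypothetical: the `ContractDoc` fields, the `parent_id` link, and especially the "latest effective document wins" rule. That rule is a simplifying assumption. Real agreements often contain their own order-of-precedence language, and nothing here describes how Pramata or any other vendor does it.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional, Tuple


@dataclass
class ContractDoc:
    """Hypothetical record for one document in a contract family."""
    doc_id: str
    doc_type: str                      # e.g. "MSA", "SOW", "AMENDMENT"
    effective: date
    parent_id: Optional[str] = None    # link to the document this one hangs off
    clauses: Dict[str, str] = field(default_factory=dict)


def family_of(root_id: str, docs: List[ContractDoc]) -> List[ContractDoc]:
    """Return the root document plus every descendant linked via parent_id."""
    by_id = {d.doc_id: d for d in docs}
    children: Dict[Optional[str], List[ContractDoc]] = {}
    for d in docs:
        children.setdefault(d.parent_id, []).append(d)

    family, stack = [], [by_id[root_id]]
    while stack:
        doc = stack.pop()
        family.append(doc)
        stack.extend(children.get(doc.doc_id, []))
    return family


def governing_clause(
    root_id: str, clause: str, docs: List[ContractDoc]
) -> Optional[Tuple[str, str]]:
    """Assumed rule: the latest-effective document defining the clause governs."""
    candidates = [d for d in family_of(root_id, docs) if clause in d.clauses]
    if not candidates:
        return None
    winner = max(candidates, key=lambda d: d.effective)
    return winner.doc_id, winner.clauses[clause]
```

In a demo, the useful part is less the lookup itself and more whether the platform can show you which document it picked and why.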

2) Renewal management that’s tied to business action

Renewals are where CLM value becomes visible to procurement leadership.

Good renewal workflows do three things:

- Forecast: provide a 90/120/180-day pipeline of renewals with spend/context

- Route: assign actions to category owners and Legal with clear escalation paths

- Enforce: prevent auto-renew traps by tracking notice windows and approvals

Spend Matters calls out Pramata’s focus on managing renewals as a core pain point. That’s smart positioning, because renewals are one of the few CLM use cases that can produce near-term savings without “boiling the ocean.”

A concrete example:

- If an enterprise has hundreds (or thousands) of supplier agreements, even a small improvement in timely renegotiation (say, converting 10% of auto-renewing contracts into competitive bids) can quickly justify the platform.

The key is operational integration: renewal alerts must feed into intake, sourcing events, or supplier performance workflows—not just email notifications.
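The forecast and enforce steps come down to simple date arithmetic. The sketch below is illustrative only: the `Renewal` fields and the bucket labels are made up for this example, and it assumes the pipeline is bucketed by the notice deadline rather than the renewal date itself, since the notice deadline is the date that actually traps you.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Tuple


@dataclass
class Renewal:
    """Hypothetical renewal record extracted from a contract."""
    contract_id: str
    renewal_date: date
    notice_days: int     # notice must be given this many days before renewal
    auto_renews: bool


def notice_deadline(r: Renewal) -> date:
    """Last day on which a non-renewal notice can still be sent."""
    return r.renewal_date - timedelta(days=r.notice_days)


def pipeline_bucket(
    r: Renewal, today: date, horizons: Tuple[int, ...] = (90, 120, 180)
) -> str:
    """Place a renewal in the smallest horizon that contains its notice deadline.

    Returns "missed" if the deadline has passed and "later" if it falls
    beyond the largest horizon.
    """
    days_left = (notice_deadline(r) - today).days
    if days_left < 0:
        return "missed"
    for h in sorted(horizons):
        if days_left <= h:
            return f"{h}d"
    return "later"
```

A contract renewing on 30 June with a 60-day notice period has to be acted on by 1 May, which is why an alert keyed to the renewal date alone arrives too late.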

3) Obligation and compliance tracking that’s audit-ready

Answer first: Compliance isn’t a PDF clause. Compliance is proof that required actions happened on time.

A CLM system that supports AI in legal and compliance should treat obligations as objects with owners, due dates, evidence, and escalation.

Examples that matter in procurement:

- Insurance certificates and renewals

- Security requirements (SOC 2, ISO, penetration testing attestations)

- Subcontractor flow-down obligations

- Rebate reporting and true-up clauses

- SLAs with service credits and reporting cadence

Spend Matters notes Pramata’s focus on meeting/enforcing obligations. For regulated industries (financial services, healthcare, critical infrastructure), this is where CLM becomes part of the compliance stack.

What I’d look for:

- Can obligations be tracked with evidence attachments and approval logs?

- Can AI flag missing evidence or inconsistent terms across similar suppliers?

- Are there defensible audit trails for who changed what, and when?
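Treating obligations as objects is easier to picture with a small data model. This is a rough sketch under my own assumptions: the `Obligation` fields, the escalation rule (open and overdue, or no owner), and the helper names are all hypothetical and not taken from any vendor's schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class Obligation:
    """Hypothetical obligation record: an action, an owner, a date, and proof."""
    contract_id: str
    description: str
    owner: Optional[str]
    due: date
    evidence: List[str] = field(default_factory=list)  # e.g. file references
    completed: bool = False


def needs_escalation(o: Obligation, today: date) -> bool:
    """Assumed rule: escalate open obligations that are overdue or unowned."""
    if o.completed:
        return False
    return o.owner is None or o.due < today


def due_within(obligations: List[Obligation], today: date, days: int) -> List[Obligation]:
    """Open obligations falling due between today and today + days, inclusive."""
    return [
        o for o in obligations
        if not o.completed and 0 <= (o.due - today).days <= days
    ]


def missing_evidence(obligations: List[Obligation]) -> List[Obligation]:
    """Obligations marked complete with nothing attached to prove it."""
    return [o for o in obligations if o.completed and not o.evidence]
```

The `missing_evidence` check is the one auditors care about: a task marked done without proof is, for compliance purposes, not done.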

Competitive context: don’t compare CLM vendors like feature checklists

Answer first: The right CLM isn’t the one with the longest feature list; it’s the one that matches your contract maturity and your AI governance constraints.

Spend Matters’ vendor analysis format typically includes competitor lists and selection considerations. Even without naming every rival here, the categories are consistent:

- Enterprise CLM platforms optimized for complex repositories and global governance

- Mid-market CLM tools focused on speed of implementation and workflow simplicity

- Legal-centric CLM strong in authoring, playbooks, and redlining workflows

- Procurement suite CLM modules integrated with sourcing and P2P ecosystems

Pramata is positioned as a strong fit for large organizations needing end-to-end CLM with AI emphasis. That implies a buyer profile with:

- High contract volumes

- Multiple repositories and legacy tools

- Real risk exposure tied to obligations and renewals

- Executive pressure to consolidate vendors and standardize terms

Here’s the myth to drop: “We just need AI to summarize contracts.”

Summaries are nice. But procurement value comes from:

- Standard terms adoption

- Renewal control

- Obligation execution

- Risk visibility across suppliers

If a CLM can’t operationalize those, the AI output is just a prettier document.

Implementation reality in 2025: AI adoption fails on data hygiene, not models

Answer first: The biggest CLM implementation risk is underestimating how messy your contract data is and how political questions of ownership become.

When CLM projects stall, it’s usually because of one of these issues:

Data ingestion is treated like a one-time migration

Contract repositories aren’t static. New agreements appear in email threads, project folders, and supplier portals.

What works: define an ongoing ingestion and governance model:

- Where do contracts originate (Legal, Procurement, business units)?

- What’s the “system of record” for executed agreements?

- Who owns metadata quality and exception handling?

AI extraction confidence isn’t operationalized

AI can extract clauses and metadata, but there will always be edge cases: scanned documents, inconsistent templates, handwritten changes.

Practical requirement:

- A confidence scoring model that’s visible to users

- A workflow for human review (Legal Ops, Contract Managers)

- A way to learn from corrections (so the library gets cleaner over time)
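Here is one way the three requirements above could fit together. It is a sketch, not a description of any product: the `ExtractedField` shape, the two thresholds (0.95 and 0.50), and the correction log format are assumptions chosen for illustration, and real thresholds would need tuning per field type.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timezone
from typing import Dict, List


@dataclass(frozen=True)
class ExtractedField:
    """Hypothetical output of an extraction step for one contract field."""
    contract_id: str
    name: str
    value: str
    confidence: float    # 0.0 to 1.0, as reported by the extractor
    source_page: int     # kept so reviewers can see where the value came from


def route(item: ExtractedField, auto_accept: float = 0.95, reject_below: float = 0.50) -> str:
    """Decide what happens to an extracted value based on its confidence."""
    if item.confidence >= auto_accept:
        return "accept"
    if item.confidence < reject_below:
        return "re-extract"
    return "human_review"


def apply_correction(
    item: ExtractedField, corrected_value: str, reviewer: str, log: List[Dict]
) -> ExtractedField:
    """Record a reviewer's correction and return the corrected field."""
    log.append({
        "contract_id": item.contract_id,
        "field": item.name,
        "old": item.value,
        "new": corrected_value,
        "reviewer": reviewer,
        "at": datetime.now(timezone.utc).isoformat(),
    })
    # A human-verified value is treated as fully trusted in this sketch.
    return replace(item, value=corrected_value, confidence=1.0)
```

The correction log doubles as an audit trail and as the raw material for whatever feedback loop the platform offers.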

Procurement and Legal don’t align on “success metrics”

Procurement often wants savings and cycle time. Legal wants defensibility and risk control.

The best CLM programs set shared KPIs such as:

- % of spend covered by searchable, normalized contracts

- % of contracts with tracked renewal dates and notice windows

- % of obligations with owners and evidence attached

- Clause deviation rate from approved standards

If you only measure “contracts uploaded,” you’ll get a very expensive document dump.
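The shared KPIs listed above are all simple ratios, which is part of their appeal. The sketch below shows one way to compute them; the dictionary keys (`spend`, `normalized`, `renewal_date`, `notice_days`, `clauses_total`, `clauses_deviating`, `owner`, `evidence`) are hypothetical field names, not a real export format.

```python
from typing import Dict, List


def pct(part: float, whole: float) -> float:
    """Percentage rounded to one decimal; 0.0 when the denominator is zero."""
    return round(100.0 * part / whole, 1) if whole else 0.0


def shared_kpis(contracts: List[Dict], obligations: List[Dict], total_spend: float) -> Dict[str, float]:
    """Compute the four shared KPIs from plain dictionaries (assumed shape)."""
    covered_spend = sum(c["spend"] for c in contracts if c["normalized"])
    tracked = sum(
        1 for c in contracts
        if c["renewal_date"] is not None and c["notice_days"] is not None
    )
    evidenced = sum(1 for o in obligations if o["owner"] and o["evidence"])
    clauses = sum(c["clauses_total"] for c in contracts)
    deviating = sum(c["clauses_deviating"] for c in contracts)

    return {
        "spend_covered_pct": pct(covered_spend, total_spend),
        "renewals_tracked_pct": pct(tracked, len(contracts)),
        "obligations_evidenced_pct": pct(evidenced, len(obligations)),
        "clause_deviation_pct": pct(deviating, clauses),
    }
```

Note that the spend denominator is total spend, not spend under contracts already in the system; otherwise the coverage number flatters itself.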

A practical evaluation checklist for Pramata (or any CLM)

Answer first: Evaluate CLM like a control system: inputs (contracts), processing (AI + workflow), outputs (actions, alerts, evidence).

Use this checklist to structure demos and reference calls.

Repository and search

- Can users find contracts by supplier, clause, obligation, product/service, and region?

- Does the system support a single supplier view across business units?

- Can it map relationships across MSA/SOW/amendments?

Renewals and commercial control

- Are notice windows captured and monitored automatically?

- Can renewal workflows route to category managers with clear SLAs?

- Can the platform surface “renewal risk” (auto-renew, termination penalties, exclusivity)?

Obligation management

- Are obligations tracked as tasks with owners, evidence, and escalation?

- Can the system report obligations due in the next 30/60/90 days?

- Does it support audit exports that a compliance team would accept?

AI governance and defensibility

- Is AI output explainable (where did the clause/field come from)?

- Are there controls for role-based access and sensitive clause visibility?

- Can you define approved clause libraries and deviation rules?
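To show what "approved clause libraries and deviation rules" can mean in practice, here is a deliberately crude sketch. It compares extracted clause text to an approved standard using character-level similarity from Python's standard `difflib` module. The 0.9 threshold, the `approved_library` dictionary, and the status labels are assumptions; a production system would likely use something more semantic than string similarity.

```python
import difflib
import re
from typing import Dict, Optional


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is ignored."""
    return re.sub(r"\s+", " ", text.strip().lower())


def deviation_check(
    clause_type: str,
    extracted_text: str,
    approved_library: Dict[str, str],
    threshold: float = 0.9,
) -> Dict[str, Optional[object]]:
    """Flag a clause as a deviation when it differs too much from the standard."""
    approved = approved_library.get(clause_type)
    if approved is None:
        return {"clause_type": clause_type, "status": "no_standard", "similarity": None}

    ratio = difflib.SequenceMatcher(
        None, normalize(approved), normalize(extracted_text)
    ).ratio()
    status = "standard" if ratio >= threshold else "deviation"
    return {"clause_type": clause_type, "status": status, "similarity": round(ratio, 2)}
```

Returning the similarity score alongside the status keeps the output explainable: a reviewer can see how far a clause drifted, not just that it was flagged.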

Integration and operating model

- Does it integrate with sourcing, supplier management, and ERP master data?

- Who administers templates, clause libraries, and playbooks?

- What’s the vendor’s approach to services: implementation, cleanup, enablement?

Where this fits in the “AI in Legal & Compliance” roadmap

CLM is one of the few enterprise systems where AI is both high-impact and high-risk. High-impact because contracts govern money and obligations. High-risk because bad extraction or uncontrolled access can create compliance exposure.

Pramata’s positioning—end-to-end CLM with strong AI emphasis for large organizations—makes it a useful reference point for how the CLM market is evolving: away from “document management” and toward contract operations.

If you’re building an AI-driven procurement strategy for 2026 planning cycles, my advice is simple: treat CLM as the place where AI must be most disciplined. That’s where you’ll earn trust with Legal, Audit, and the business.

A mature CLM program doesn’t just store contracts—it turns contract terms into deadlines, owners, and proof.

Next step: map your top 20 supplier categories (by spend and risk) and identify which contract outcomes matter most—renewal control, obligation evidence, clause standardization, or vendor consolidation. Then evaluate vendors like Pramata against those outcomes, not against generic feature matrices.

If CLM becomes your AI control plane, what would you rather have in Q1 audits: a folder of PDFs—or a report that shows every high-risk obligation, owner, due date, and evidence in two clicks?