Continuous-time consistency models can match diffusion quality with two sampling steps. Learn what it means for faster, cheaper AI in U.S. SaaS.

Two-Step Consistency Models: Faster AI Sampling for SaaS

Most AI teams don’t lose sleep over sampling steps—until inference bills spike, latency targets get missed, and a “cool demo” can’t survive production traffic. That’s why the latest work on continuous-time consistency models is such a big deal: it reports comparable sample quality to leading diffusion models while using only two sampling steps.

If you run a U.S.-based SaaS product, a digital service, or an internal platform with real-time experiences (image generation, personalization, synthetic data, automated creative, dynamic UI assets), fewer sampling steps isn’t an academic flex. It’s a direct path to lower cost, higher throughput, and simpler scaling—especially during peak demand periods like late December promos, year-end reporting dashboards, and post-holiday customer support surges.

This post explains what “continuous-time consistency models” are in practical terms, why “two-step sampling” matters, and how to translate a research result into decisions about infrastructure, product design, and go-to-market in the U.S. tech ecosystem.

Continuous-time consistency models, explained without the fog

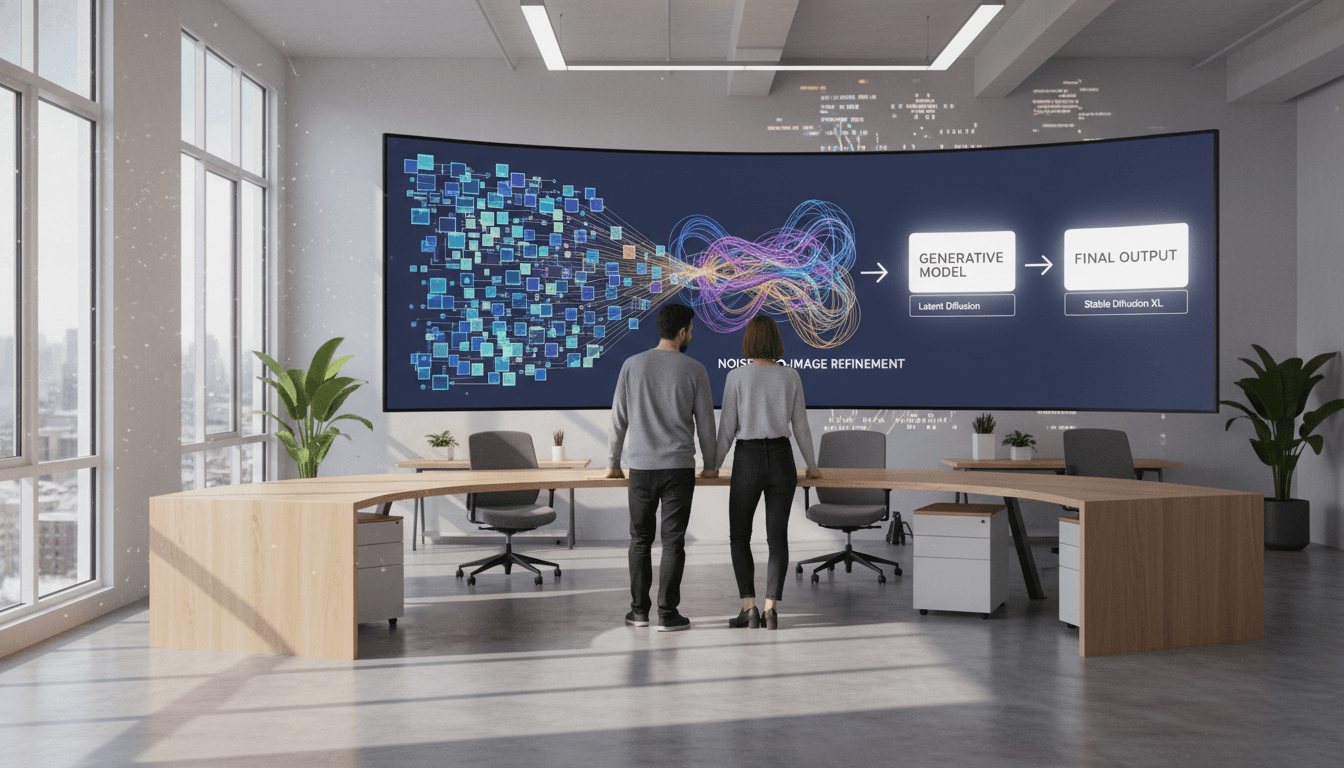

A continuous-time consistency model is a generative model trained so that every point along the same denoising trajectory, at any noise level or time, maps to the same clean output. That property is what lets it jump to a clean sample in very few steps.

Diffusion models typically generate outputs by gradually denoising over many steps. Even with modern samplers, you often budget tens of steps to get strong quality and stable outputs. Consistency models take a different angle: they’re trained to produce outputs that are “self-consistent” along the denoising path, so the model can make much bigger jumps.
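
To make the "bigger jumps" idea concrete, here is a minimal sketch of two-step sampling in the style of generic multistep consistency sampling. It is not the paper's exact recipe: the noise levels (`sigma_max`, `t_mid`, `eps`) are illustrative assumptions, and `make_toy_consistency_fn` is a hypothetical stand-in for a trained network. It is the closed-form consistency function for 1-D Gaussian data, chosen only so the example runs without a model.

```python
import math
import random

def two_step_sample(f, sigma_max, t_mid, eps, rng):
    """Draw one sample using exactly two calls to a consistency function f(x, t)."""
    # Step 1: start from pure noise at the largest noise level and jump to a clean estimate.
    x = rng.gauss(0.0, sigma_max)
    x0 = f(x, sigma_max)
    # Step 2: re-noise the estimate to an intermediate level, then jump again.
    x_mid = x0 + math.sqrt(t_mid**2 - eps**2) * rng.gauss(0.0, 1.0)
    return f(x_mid, t_mid)

def make_toy_consistency_fn(sigma_data, eps):
    """Toy stand-in for a trained network (assumption): exact for data ~ N(0, sigma_data^2)."""
    def f(x, t):
        # Points on the same trajectory scale with sqrt(sigma_data^2 + t^2),
        # so rescaling maps any (x, t) back to the trajectory's value at t = eps.
        return x * math.sqrt((sigma_data**2 + eps**2) / (sigma_data**2 + t**2))
    return f

rng = random.Random(0)
f = make_toy_consistency_fn(sigma_data=0.5, eps=0.002)
samples = [
    two_step_sample(f, sigma_max=80.0, t_mid=0.8, eps=0.002, rng=rng)
    for _ in range(20000)
]
sample_std = math.sqrt(sum(s * s for s in samples) / len(samples))
print(f"sample std: {sample_std:.3f}")
```

In this toy setup the printed spread should come out close to `sigma_data`, which is the point: two model calls are enough to land back on the data distribution. With a real network, `f` would be the trained model and the samples would be tensors rather than scalars.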

Why “continuous-time” matters

Continuous-time training aims to make the model behave smoothly across an entire time interval, not just at a few discrete steps.

In production terms, this matters because discrete-time training depends on a hand-chosen grid of timesteps, which adds hyperparameters and discretization error and can leave “weird corners” where quality is great at certain step counts and degrades at others. Continuous-time framing is a bet on robustness: if the model learns the whole trajectory, you can sample with fewer steps and still stay on-distribution.

What “consistency” buys you

Consistency is a training constraint that reduces the number of refinement passes you need at inference.

Think of it like this: diffusion sampling is often iterative polishing. Consistency training tries to make the model good enough that it doesn’t need to polish much. When the paper’s summary says the authors “simplified, stabilized, and scaled” these models, that’s the research team signaling they addressed the exact pain points that usually block adoption: training complexity, instability, and difficulties scaling to larger settings.

Snippet-worthy takeaway: If diffusion is “generate, check, refine, repeat,” consistency models aim for “generate correctly the first time—or close enough that two steps do the job.”

Why two-step sampling changes the economics of AI services

Two-step sampling changes the unit economics of generative AI because inference cost and latency are heavily influenced by how many forward passes you run per output.

A sampling “step” typically means a full model evaluation (a forward pass) at a given noise level/time. Reducing steps can translate into:

- Lower latency: fewer sequential passes mean faster time-to-first-result.

- Higher throughput: the same GPU fleet can serve more requests per minute.

- Lower cost per generation: fewer passes reduce compute consumption.

- Simpler capacity planning: step count becomes less of a tuning knob during traffic spikes.
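
A back-of-the-envelope model helps size these gains. The sketch below assumes latency is roughly `steps × time per forward pass + fixed overhead` (tokenizing, decoding, network hops) and that one GPU serves one request at a time. Every number in it is hypothetical, not a measurement.

```python
def latency_ms(steps, ms_per_pass, overhead_ms):
    """Approximate end-to-end latency: sequential forward passes plus fixed overhead."""
    return steps * ms_per_pass + overhead_ms

def requests_per_gpu_minute(steps, ms_per_pass, overhead_ms):
    """Unbatched throughput for a single GPU under the same simple model."""
    return 60_000 / latency_ms(steps, ms_per_pass, overhead_ms)

# Hypothetical figures: 40 ms per forward pass, 120 ms of fixed overhead.
baseline = latency_ms(steps=30, ms_per_pass=40, overhead_ms=120)
two_step = latency_ms(steps=2, ms_per_pass=40, overhead_ms=120)
speedup = baseline / two_step

print(f"baseline: {baseline} ms, two-step: {two_step} ms, speedup: {speedup:.1f}x")
print(f"two-step throughput: {requests_per_gpu_minute(2, 40, 120):.0f} requests/GPU/min")
```

With these made-up inputs the step count drops 15×, but end-to-end latency improves by less because the fixed overhead does not shrink. That gap is worth measuring in your own stack before promising a speedup number.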

For U.S. digital services, this is particularly relevant because many teams are moving from “batch AI” to interactive AI:

- product imagery generated on demand for e-commerce listings

- creative variants generated during campaign setup in marketing platforms

- synthetic screenshots or UI mocks produced in design tools

- data augmentation pipelines for machine learning teams under tight timelines

In each case, users feel the difference between “a few seconds” and “instant.” And CFOs feel the difference between “we can afford this at scale” and “this feature needs a waitlist.”

The hidden win: fewer steps often means fewer failure modes

When you shrink sampling from many steps to two, you reduce the number of chances something goes wrong.

Long sampling chains can amplify small numerical issues, produce occasional artifacts, or require careful scheduler choices. In practical production environments—multiple model versions, quantization, mixed precision, autoscaling—simplicity isn’t aesthetic. It’s reliability.

What “simplified, stabilized, and scaled” likely signals (and why you should care)

Those three verbs map neatly to the three blockers that keep research models from becoming product features.

We don’t have the full paper text here, but the summary is still informative. When researchers emphasize simplification, stabilization, and scaling, they’re usually responding to known challenges:

Simplifying: fewer knobs, fewer bespoke tricks

Simplification usually means the training recipe is less fragile and easier to reproduce.

For a SaaS team, this is the difference between:

- “Only one PhD on the team can run this training job”

- and “We can standardize this in our ML platform with clear defaults”

If you’ve ever tried to operationalize a model that depends on a finicky scheduler or narrowly tuned hyperparameters, you know how expensive that fragility becomes.

Stabilizing: training that doesn’t collapse at scale

Stability means you can train longer, on larger datasets, and with larger model sizes without divergence or quality regressions.

This is especially important for U.S. companies under real-world constraints:

- model training has to fit within quarterly budgets

- experiments need to be repeatable for compliance and auditing

- reliability matters because outputs ship to customers

Scaling: quality that keeps up with diffusion baselines

Scaling means the approach isn’t only good on small benchmarks—it stays competitive as models and data grow.

The summary claims comparable sample quality to leading diffusion models. That’s a strong positioning statement because diffusion models are the reference point for high-quality generation in many modalities.

Where two-step generative models fit in U.S. SaaS and digital services

The best near-term fit is anywhere you need high-volume generation with tight latency budgets.

Here are practical patterns I’d bet on.

1) Real-time creative generation for marketing platforms

Marketing SaaS lives and dies by iteration speed—especially during seasonal pushes like end-of-year campaigns.

Two-step sampling can support:

- rapid ad creative variants (backgrounds, product scenes, lifestyle comps)

- on-brand template generation for email and landing pages

- personalized visual assets per segment without a massive render queue

Even if quality is merely “comparable,” the operational win is enormous when teams need hundreds of variants per hour.

2) Customer support and sales enablement content at scale

Generative models aren’t only for images; the same efficiency mindset applies to multimodal workflows.

If your support org generates:

- annotated screenshots

- step-by-step visuals

- tailored diagrams for onboarding

…fast sampling means these assets can be created inside live workflows rather than offline.

3) Synthetic data pipelines for ML teams

Synthetic data is only useful if it’s cheap enough to generate at the volumes your training needs.

Two-step sampling can reduce the compute barrier for:

- balancing rare classes

- generating edge cases for QA

- creating privacy-preserving training sets (with appropriate safeguards)

For U.S. firms working in regulated environments, synthetic data is often attractive, but costs and timelines can sink the project. Faster generation makes it feasible.

4) On-device and edge inference (where every millisecond matters)

Cutting the step count is one of the most straightforward ways to make generative models more edge-friendly.

Even when you can’t fully run a model on-device, shrinking inference time reduces the networking and orchestration complexity of hybrid deployments.

What to ask before betting on a two-step sampler

The right question isn’t “Is it fast?” It’s “Is it predictably fast while staying on-brand and on-spec?”

Here’s a practical checklist for product and ML leads evaluating consistency-style approaches.

Quality and control

- Prompt adherence: Does two-step sampling follow instructions as well as your current diffusion baseline?

- Mode coverage: Does it produce enough variety, or does it collapse into similar outputs?

- Artifact rate: What percent of generations fail your automated checks (blurriness, odd hands, unreadable UI elements, etc.)?
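
Two of these checks are easy to turn into numbers. The sketch below is one crude way to do it, under assumptions: `check_results` is a list of pass/fail booleans from whatever automated checks you already run, and `features` are embedding vectors from a feature extractor of your choice. Mean pairwise distance is only a rough diversity proxy, not a standard benchmark metric.

```python
import math
from itertools import combinations

def artifact_rate(check_results):
    """Fraction of generations that failed automated checks (True means passed)."""
    failures = sum(1 for passed in check_results if not passed)
    return failures / len(check_results)

def mean_pairwise_distance(features):
    """Average Euclidean distance between all pairs of feature vectors.

    Values near zero suggest the sampler is collapsing onto similar outputs.
    """
    pairs = list(combinations(features, 2))
    return sum(math.dist(a, b) for a, b in pairs) / len(pairs)

# Hypothetical data: four generations, one failed a check.
print(artifact_rate([True, True, False, True]))
# Hypothetical 2-D embeddings for two outputs.
print(mean_pairwise_distance([(0.0, 0.0), (3.0, 4.0)]))
```

Compute both numbers for the two-step sampler and for your diffusion baseline on the same prompts, so the comparison is like for like.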

Reliability and operations

- Variance under load: Does output quality change when you enable quantization, batching, or mixed precision?

- Latency distribution: Measure p50, p95, and p99. Two-step sampling should improve tail latency, not only the average.

- Fallback strategy: If a sample fails validation, do you re-sample, route to a slower model, or request user edits?
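
For the latency check, a small helper is enough to get started. This sketch uses the nearest-rank percentile definition (one of several conventions). The sample latencies are invented to show how a couple of slow requests show up in p99 while leaving p50 and p95 untouched.

```python
def percentile(values, p):
    """Nearest-rank percentile for an integer p in 1..100."""
    ordered = sorted(values)
    # Integer ceiling of p * n / 100, avoiding floating-point rounding surprises.
    rank = max(1, (p * len(ordered) + 99) // 100)
    return ordered[rank - 1]

def latency_report(latencies_ms):
    """Summarize a list of request latencies as p50, p95, and p99."""
    return {f"p{p}": percentile(latencies_ms, p) for p in (50, 95, 99)}

# Hypothetical sample: 98 fast requests and 2 slow ones.
observed = [200] * 98 + [1500] * 2
report = latency_report(observed)
print(report)
```

Run the same report for the baseline and the two-step path under comparable load. If only p50 improves, queueing or retries are probably dominating the tail.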

Cost and scalability

- Cost per accepted output: Not cost per generation—cost per usable generation.

- GPU utilization: Fewer steps can increase throughput, but only if you keep the GPU fed (batching, queue design).

- SLA impact: Does the new sampler let you offer stronger response-time guarantees for enterprise customers?

Operational stance: A faster model that produces 10% more rejects can be a net loss once re-rolls, review time, and user frustration are counted. Tie evaluation to “accepted output” metrics.
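
Here is one way to put a number on that stance. The formula below is a simplifying assumption: each attempt costs `generation_cost`, each rejected attempt adds a `reject_cost` (review time, retry handling), and attempts are independent with a fixed acceptance rate. All dollar figures are hypothetical.

```python
def cost_per_accepted(generation_cost, acceptance_rate, reject_cost=0.0):
    """Expected total cost to obtain one accepted output.

    Expected attempts per accepted output is 1 / acceptance_rate, and
    expected rejects per accepted output is (1 - acceptance_rate) / acceptance_rate.
    """
    if not 0 < acceptance_rate <= 1:
        raise ValueError("acceptance_rate must be in (0, 1]")
    return (generation_cost + (1 - acceptance_rate) * reject_cost) / acceptance_rate

# Compute cost only: the faster sampler wins despite a lower acceptance rate.
baseline_compute = cost_per_accepted(0.020, 0.90)
two_step_compute = cost_per_accepted(0.004, 0.80)

# Add a hypothetical $0.25 of human review per rejected output: the ranking flips.
baseline_total = cost_per_accepted(0.020, 0.90, reject_cost=0.25)
two_step_total = cost_per_accepted(0.004, 0.80, reject_cost=0.25)

print(f"compute only: baseline {baseline_compute:.4f}, two-step {two_step_compute:.4f}")
print(f"with review:  baseline {baseline_total:.4f}, two-step {two_step_total:.4f}")
```

Under these made-up inputs, cheaper generation easily absorbs extra rejects when rejects are free, and loses once each reject carries a meaningful handling cost. Which regime you are in depends on your own review process.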

A practical rollout plan for digital service teams

The safest way to adopt new sampling approaches is to start with narrow workflows where speed matters and stakes are manageable.

Here’s a rollout sequence that works in many U.S. SaaS orgs:

- Shadow mode evaluation (1–2 weeks): Run two-step generations alongside your current diffusion pipeline, store results, and score them with automated checks plus human review.

- Limited beta for internal teams (2–4 weeks): Let marketing ops, design, or customer education teams use the faster path with a manual “re-roll” button.

- Tiered production routing: Use two-step sampling as default for low-risk tasks (drafts, internal previews), and route high-stakes outputs (final assets, brand-critical renders) to the baseline until confidence grows.

- Add automated guardrails: Content policy checks, watermarking (if relevant), brand style filters, and “stop conditions” when outputs drift.
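
The tiered-routing and re-roll steps can be sketched as a small wrapper. Everything here is hypothetical: `fast`, `baseline`, and `is_valid` are placeholders for your two samplers and your automated checks, and the one re-roll default is an arbitrary choice.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Result:
    output: str
    path: str       # which sampler produced the returned output
    attempts: int   # total sampler calls made for this request

def generate_with_fallback(
    prompt: str,
    high_stakes: bool,
    fast: Callable[[str], str],
    baseline: Callable[[str], str],
    is_valid: Callable[[str], bool],
    max_rerolls: int = 1,
) -> Result:
    """Route low-risk work to the fast sampler, with re-rolls and a baseline fallback."""
    # High-stakes outputs skip the fast path entirely.
    if high_stakes:
        return Result(baseline(prompt), "baseline", 1)
    attempts = 0
    for _ in range(1 + max_rerolls):
        attempts += 1
        output = fast(prompt)
        if is_valid(output):
            return Result(output, "two_step", attempts)
    # Every fast attempt failed validation: fall back to the slower baseline.
    return Result(baseline(prompt), "baseline_fallback", attempts + 1)

# Usage with stub samplers: the first fast attempt fails, the re-roll passes.
canned = iter(["bad", "ok"])
result = generate_with_fallback(
    "draft banner", False,
    fast=lambda p: next(canned),
    baseline=lambda p: "baseline-render",
    is_valid=lambda out: out != "bad",
)
print(result)
```

Logging `path` and `attempts` per request gives you the raw data for cost-per-accepted-output and fallback-rate dashboards.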

This approach turns research progress into product value without gambling your brand experience.

People also ask: quick answers

Is two-step sampling always better than diffusion? No. If your use case requires maximum fidelity on hard prompts, a longer sampler may still win. Two-step shines when speed, cost, and reliability dominate.

Will fewer steps reduce creativity or diversity? It can. You need to test diversity metrics and user satisfaction, not just “looks good” demos.

What’s the main business impact for SaaS? Lower inference cost and faster UX. That often translates to higher activation (users try more variants) and better retention (features feel responsive).

Where this fits in the bigger U.S. AI services story

Continuous-time consistency models are a clear example of the theme running through this series, “How AI Is Powering Technology and Digital Services in the United States.” The next wave of AI advantage won’t come only from bigger models. It’ll come from more efficient generation that makes AI features affordable, fast, and dependable enough to embed everywhere—customer communication, creative tooling, analytics workflows, and internal automation.

If you’re planning your 2026 roadmap, treat “two-step sampling with diffusion-level quality” as more than a research headline. It’s a signal that generative AI is getting easier to productize at scale.

Want a concrete next step? Pick one workflow where users are waiting on generation results—creative variants, support visuals, synthetic data—and run a two-week shadow test with a strict metric: cost per accepted output. If that number drops while user satisfaction holds, you’ve got a real scaling win on your hands.

Where in your product would a 2–5× faster generation loop change user behavior the most?