Stargate Project signals a shift to AI as infrastructure for U.S. digital services. Here’s how to scale automation, content, and support without losing trust.

Stargate Project: What It Means for US Digital Services

Most “AI announcements” aren’t really announcements. They’re branding exercises wrapped around features you could’ve guessed from the roadmap.

OpenAI’s Stargate Project (its announcement page was temporarily inaccessible at the time of writing) matters for a different reason: it signals the next phase of how AI will be built, hosted, governed, and operationalized inside U.S. technology and digital service companies.

And that’s the real story for this series—How AI Is Powering Technology and Digital Services in the United States. The winners in 2026 won’t be the teams that “try AI.” They’ll be the teams that treat AI like infrastructure: budgeted, monitored, secured, and measured like any other production system.

Snippet-worthy take: If your AI strategy is “pick a model and add a chat box,” you’re already behind.

What the Stargate Project signals (even with limited details)

The most direct read: OpenAI is unlikely to frame something as a named “project” like Stargate unless it’s bigger than a single model release.

When a major U.S.-based AI leader announces a named initiative, it typically implies at least three things:

- A platform direction (how developers and companies will build on it)

- A scale direction (how it will run reliably for more people and more workloads)

- A policy direction (how access, safety, and governance will work)

Even without the full text of the announcement page, the direction matches what is already happening: U.S. digital services are shifting from AI as an add-on to AI as a core capability that touches customer support, content operations, software delivery, analytics, compliance, and personalization.

Why U.S. companies should care

U.S. SaaS and digital service providers compete on speed and experience. AI now impacts both.

- Speed: drafting, summarizing, classifying, coding, and routing work in seconds

- Experience: more responsive support, better onboarding, and smarter self-serve help

What changes with large initiatives like Stargate is the assumption that AI capability will be continuously expanding, not delivered in occasional “big bang” updates. If you’re running a U.S.-based digital service business, this is your cue to build an operating model for AI—not a one-off experiment.

AI infrastructure is the new competitive advantage for digital services

If Stargate is a platform-scale move, the likely downstream effect is that AI becomes cheaper per task, faster to integrate, and more standardized.

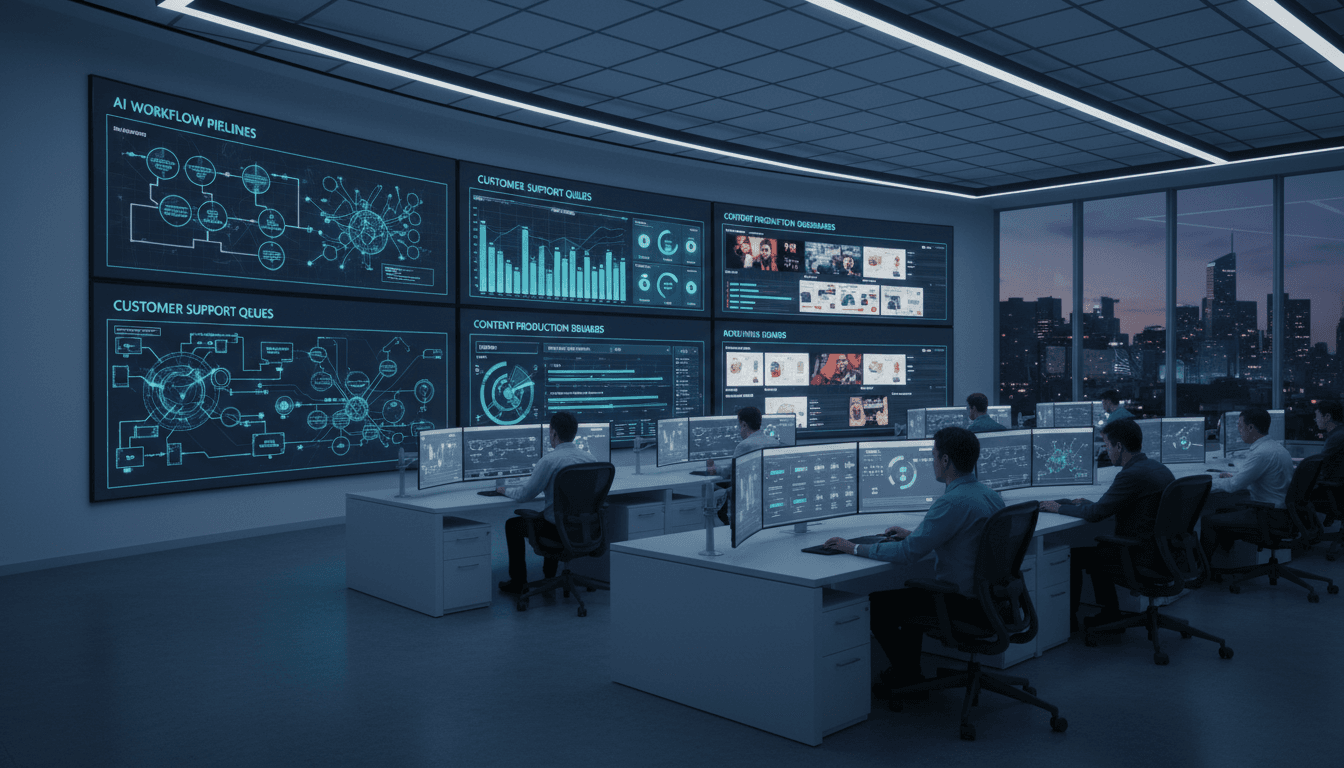

That sounds abstract. Here’s what it looks like in practice inside a mid-market U.S. SaaS company.

From manual workflows to AI production lines

Most teams start with AI in a scattered way:

- Support tries a chatbot

- Marketing tries AI writing

- Product tries AI search

- Sales tries AI outreach

The result is inconsistent quality and a lot of “it kind of works” demos.

A platform-style initiative pushes the opposite approach: you design an AI production line with shared components:

- A single approved model/provider strategy

- A shared prompt and evaluation library

- Unified logging and monitoring

- Governance around data access and retention

My stance: centralizing the boring parts is what lets you decentralize innovation safely.

The 4 layers you should plan for

Teams that scale AI inside digital services tend to converge on four layers:

- Experience layer: chat, search, copilots, agentic workflows

- Orchestration layer: routing, tool use, memory, guardrails

- Knowledge layer: retrieval, permissions, freshness, citations

- Operations layer: monitoring, cost controls, evals, incident response

If Stargate represents a push toward more standardized orchestration and operations, that’s a win for U.S. companies that have struggled with reliability and governance.

How Stargate connects to automation and service scaling

This series is about AI powering U.S. technology and digital services, and the sharpest connection to Stargate is automation that scales communication.

Digital services run on communication:

- customer questions

- onboarding instructions

- billing explanations

- status updates

- policy reminders

- internal handoffs

AI doesn’t just write words. It changes throughput.

Practical examples you can implement now

Below are AI use cases that tend to produce measurable operational impact when done responsibly:

1) Customer support: reduce handle time without trashing CSAT

A mature setup uses AI for:

- intent classification and routing

- suggested replies with knowledge base grounding

- conversation summarization for clean handoffs

- auto-tagging for analytics
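Intent routing with a safe fallback can be sketched in a few lines. This is a minimal sketch under stated assumptions. `classify_intent` is a hypothetical keyword-based stand-in for whatever model call you actually make. The queue names and the 0.7 confidence threshold are illustrative. The important part is the shape: confident classifications go to a queue, and everything else goes to a human.

```python
from dataclasses import dataclass

# Hypothetical queue names; map them to your real support teams.
QUEUES = {"billing": "billing-team", "bug": "engineering-triage", "account": "account-support"}

# Illustrative threshold: below this, a human decides where the ticket goes.
CONFIDENCE_THRESHOLD = 0.7


@dataclass
class Classification:
    intent: str
    confidence: float


def classify_intent(text: str) -> Classification:
    """Hypothetical stand-in for a model call: naive keyword matching."""
    keywords = {
        "billing": ["invoice", "charge", "refund"],
        "bug": ["error", "crash", "broken"],
        "account": ["password", "login", "email"],
    }
    lowered = text.lower()
    scores = {intent: sum(word in lowered for word in words) for intent, words in keywords.items()}
    best = max(scores, key=scores.get)
    total = sum(scores.values())
    # Share of keyword hits pointing at the winning intent (0.0 if nothing matched).
    confidence = scores[best] / total if total else 0.0
    return Classification(best, confidence)


def route_ticket(text: str) -> str:
    """Send confident classifications to a queue; escalate everything else."""
    result = classify_intent(text)
    if result.confidence < CONFIDENCE_THRESHOLD:
        return "human-triage"
    return QUEUES[result.intent]


print(route_ticket("I was charged twice, please refund the invoice"))  # billing-team
print(route_ticket("Hello there"))  # human-triage
```

Swapping the keyword matcher for a real classifier changes only `classify_intent`. The escalation rule in `route_ticket` stays the same.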

What to measure:

- first response time (FRT)

- average handle time (AHT)

- resolution rate

- escalation rate

- customer satisfaction (CSAT)
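To make these numbers concrete, here is a minimal sketch that computes them from exported ticket records. The `Ticket` fields are an assumed schema, not any specific helpdesk's export format. AHT is simplified to handling minutes per ticket. CSAT is computed as the share of survey responses scoring 4 or 5 on a 1-to-5 scale, which is one common convention among several.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Ticket:
    # Assumed export schema; adapt to your helpdesk's fields.
    first_response_minutes: float
    handle_minutes: float
    resolved: bool
    escalated: bool
    csat_score: Optional[int] = None  # 1-5 survey score, None if no response


def support_metrics(tickets: list[Ticket]) -> dict[str, float]:
    """Compute FRT, AHT, resolution rate, escalation rate, and CSAT."""
    n = len(tickets)
    surveyed = [t.csat_score for t in tickets if t.csat_score is not None]
    return {
        "frt_minutes": mean(t.first_response_minutes for t in tickets),
        "aht_minutes": mean(t.handle_minutes for t in tickets),
        "resolution_rate": sum(t.resolved for t in tickets) / n,
        "escalation_rate": sum(t.escalated for t in tickets) / n,
        # CSAT as the share of responses scoring 4 or 5 (one common convention).
        "csat": sum(score >= 4 for score in surveyed) / len(surveyed) if surveyed else 0.0,
    }


sample_tickets = [
    Ticket(5.0, 12.0, True, False, 5),
    Ticket(15.0, 20.0, True, True, 3),
    Ticket(10.0, 16.0, False, True),
    Ticket(30.0, 32.0, True, False, 4),
]
print(support_metrics(sample_tickets))
```

Run it on tickets from before and after the AI rollout. The comparison is the point, not the absolute values.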

If you can’t measure those, you don’t have an AI support strategy—you have a pilot.

2) Content ops: publish more without losing your voice

Many content teams hit a wall at 2–4 posts per month because:

- research takes time

- approvals take time

- repurposing is tedious

AI helps when you use it like an editor’s assistant:

- outline generation from internal briefs

- consistent style enforcement (“house voice”)

- extracting FAQs for help centers

- repurposing long-form into email and social drafts

Rule I like: if it’s public-facing, require a human pass. If it’s internal, automate aggressively.
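That rule is simple enough to encode, so it gets applied consistently instead of remembered. Here is a minimal sketch. The audience labels are assumptions. The sensitive-topic override also requires a human for internal drafts, and it borrows from the legal/medical/financial guardrail later in this post rather than from the rule as stated.

```python
from enum import Enum


class Audience(Enum):
    INTERNAL = "internal"
    PUBLIC = "public"


# Assumed high-risk topics that always need a human, regardless of audience.
SENSITIVE_TOPICS = {"legal", "medical", "financial"}


def needs_human_review(audience: Audience, topics: set[str]) -> bool:
    """Public-facing drafts always get a human pass; internal ones only when sensitive."""
    if audience is Audience.PUBLIC:
        return True
    return bool(topics & SENSITIVE_TOPICS)


print(needs_human_review(Audience.PUBLIC, {"onboarding"}))   # True
print(needs_human_review(Audience.INTERNAL, {"retro"}))      # False
print(needs_human_review(Audience.INTERNAL, {"financial"}))  # True
```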

3) Sales and success: better personalization, fewer spammy messages

AI should not mean “spray and pray faster.” It should mean:

- summarizing account context from CRM notes

- drafting renewal decks and QBR outlines

- generating call prep with risk flags

Bad AI outreach increases unsubscribes and burns your domain reputation. Good AI personalization increases reply quality.

What startups can learn from Stargate (without copying Big Tech)

Here’s the myth: only huge companies benefit from big AI initiatives.

The reality: startups benefit more because they can restructure faster.

The “small team, big output” playbook

If you’re a U.S. startup building a digital service, you can set norms now that larger firms take years to implement:

- Default to AI-assisted documentation (decisions, specs, retros)

- Ship with evaluation gates (don’t deploy prompts that aren’t tested)

- Treat tokens like spend (cost budgets per feature)

- Design for human override (no black-box automation in high-risk flows)
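"Treat tokens like spend" can start as a per-feature budget check in front of every model call. This is a minimal sketch, assuming you can estimate the token count before the call. The feature names and the per-1,000-token price are placeholders, not any provider's actual pricing.

```python
from collections import defaultdict


class TokenBudget:
    """Track spend per feature and refuse calls that would exceed the monthly budget."""

    def __init__(self, monthly_budgets_usd: dict[str, float], usd_per_1k_tokens: float):
        self.budgets = monthly_budgets_usd
        self.price = usd_per_1k_tokens  # placeholder rate; use your provider's pricing
        self.spent: defaultdict[str, float] = defaultdict(float)

    def cost(self, tokens: int) -> float:
        return tokens / 1000 * self.price

    def try_charge(self, feature: str, tokens: int) -> bool:
        """Record the spend and return True if it fits; otherwise return False."""
        projected = self.spent[feature] + self.cost(tokens)
        if projected > self.budgets.get(feature, 0.0):
            return False  # over budget: fall back, queue, or alert instead of calling
        self.spent[feature] = projected
        return True


budget = TokenBudget({"ticket-summary": 1.00}, usd_per_1k_tokens=0.50)
print(budget.try_charge("ticket-summary", 1500))  # True  (spend is now 0.75)
print(budget.try_charge("ticket-summary", 1000))  # False (would reach 1.25)
print(budget.try_charge("unknown-feature", 10))   # False (no budget assigned)
```

Features without a budget are refused by default. This design choice forces someone to decide what a new AI feature is allowed to cost before it ships.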

A simple maturity model for AI-powered digital services

Use this to sanity-check where you are:

- Experimenting: ad hoc prompts, no tracking

- Instrumented: logs, basic metrics, cost visibility

- Governed: access controls, data rules, approval flows

- Optimized: eval suites, prompt versioning, regression tests

- Differentiated: AI features customers will pay extra for
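Moving from Governed to Optimized mostly means prompts stop shipping without tests. The sketch below is a minimal evaluation gate. Each prompt version runs against a fixed set of cases, and it deploys only if its pass rate clears a bar and doesn't regress against the current version. The prompt versions are hypothetical functions faked for illustration. The 0.9 threshold is illustrative. Substring matching is the simplest possible grader, and real eval suites often use stricter checks.

```python
from dataclasses import dataclass
from typing import Callable

PromptFn = Callable[[str], str]  # stand-in for "run this input through prompt version X"


@dataclass
class EvalCase:
    question: str
    expected: str  # phrase the answer must contain


def pass_rate(run_prompt: PromptFn, cases: list[EvalCase]) -> float:
    """Fraction of cases whose output contains the expected phrase (case-insensitive)."""
    passed = sum(c.expected.lower() in run_prompt(c.question).lower() for c in cases)
    return passed / len(cases)


def can_deploy(candidate: PromptFn, current: PromptFn,
               cases: list[EvalCase], min_rate: float = 0.9) -> bool:
    """Deploy only if the candidate clears the bar and does not regress."""
    candidate_rate = pass_rate(candidate, cases)
    return candidate_rate >= min_rate and candidate_rate >= pass_rate(current, cases)


# Hypothetical prompt versions, faked as plain functions for illustration.
def prompt_v1(question: str) -> str:
    if "refund" in question:
        return "Refunds are accepted within 30 days."
    return "Support is available 9am-5pm ET."


def prompt_v2(question: str) -> str:
    return "Refunds are accepted within 30 days."  # regressed on support hours


CASES = [
    EvalCase("What is the refund window?", "30 days"),
    EvalCase("What are your support hours?", "9am-5pm ET"),
]

print(can_deploy(prompt_v2, current=prompt_v1, cases=CASES))  # False
```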

If Stargate accelerates tooling and best practices, the gap between level 2 (Instrumented) and level 4 (Optimized) should get easier to cross, but only if you build the discipline.

The risk side: privacy, reliability, and brand trust

More AI in digital services creates real exposure. If you’re generating content or answering customers at scale, you’re making promises at scale.

The three failures customers don’t forgive

- Confidently wrong answers (hallucinations presented as facts)

- Data mishandling (sensitive info in prompts or logs)

- Tone-deaf automation (robotic responses in emotionally charged cases)

These aren’t theoretical. They show up as churn, chargebacks, and reputational damage.

The guardrails that actually work

You don’t fix this with a “be careful” policy. You fix it with systems:

- Retrieval-grounded responses for support and policy topics

- Fallback and escalation when confidence is low

- Red-team testing for prompt injection and jailbreaks

- PII minimization and explicit retention rules

- Human approval for anything legal/medical/financial
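PII minimization can start with redacting obvious identifiers before prompts and outputs are logged. The sketch below uses regular expressions for emails and U.S.-style phone numbers. These patterns are illustrative only. They will miss names, addresses, account numbers, and many other formats, so treat this as a first layer rather than a compliance control.

```python
import re

# Illustrative patterns only: they catch common formats, not every possible one.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace matched identifiers with a typed placeholder before storage."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Reach me at jane.doe@example.com or 555-123-4567."))
# Reach me at [EMAIL] or [PHONE].
```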

Snippet-worthy take: Trust isn’t an AI feature; it’s an operating requirement.

A 30-day action plan for U.S. digital service teams

If Stargate represents a broader shift toward AI infrastructure, the best response is to make your own org “AI-ready” quickly.

Week 1: pick one workflow that burns time

Choose something that:

- happens daily

- is measurable

- has clear failure modes

Good candidates:

- ticket summarization

- knowledge base gap detection

- onboarding email drafts

- churn-risk call notes

Week 2: define success metrics and failure rules

Write down:

- what “good” looks like (e.g., reduce AHT by 15%)

- what errors are unacceptable (e.g., policy misstatements)

- when to escalate to a human
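Writing these rules down as data rather than prose makes the Week 4 "if it works" decision mechanical. This is a minimal sketch, assuming you have baseline and pilot AHT figures plus a count of unacceptable errors found in review. The 15% target mirrors the example above. The zero-tolerance default for unacceptable errors is an assumption you should tune.

```python
from dataclasses import dataclass


@dataclass
class SuccessCriteria:
    target_aht_reduction: float = 0.15  # e.g. "reduce AHT by 15%"
    max_unacceptable_errors: int = 0    # e.g. policy misstatements found in review


def pilot_passes(baseline_aht: float, pilot_aht: float,
                 unacceptable_errors: int, criteria: SuccessCriteria) -> bool:
    """True only if the pilot hits the AHT target without unacceptable errors."""
    reduction = (baseline_aht - pilot_aht) / baseline_aht
    return (reduction >= criteria.target_aht_reduction
            and unacceptable_errors <= criteria.max_unacceptable_errors)


criteria = SuccessCriteria()
print(pilot_passes(20.0, 16.0, 0, criteria))  # True: 20% reduction, no bad errors
print(pilot_passes(20.0, 16.0, 1, criteria))  # False: one policy misstatement
print(pilot_passes(20.0, 18.0, 0, criteria))  # False: only a 10% reduction
```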

Week 3: build with logging and review baked in

Minimum viable operations:

- store prompts and outputs for review

- tag outputs by use case

- track per-task cost

- sample 20–50 outputs/week for quality
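Here is a minimal sketch of that Week 3 setup. An in-memory list stands in for whatever log store you actually use. Each record keeps the prompt, output, use-case tag, and cost, and a weekly review pulls a random sample. The record fields and helper names are hypothetical, chosen for illustration.

```python
import random
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AIRecord:
    use_case: str    # tag, e.g. "ticket-summary"
    prompt: str
    output: str
    cost_usd: float
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


LOG: list[AIRecord] = []  # stand-in for a real log table or observability tool


def log_call(use_case: str, prompt: str, output: str, cost_usd: float) -> None:
    """Store every prompt/output pair with its use-case tag and cost."""
    LOG.append(AIRecord(use_case, prompt, output, cost_usd))


def avg_cost_per_task(records: list[AIRecord]) -> dict[str, float]:
    """Average cost per call, grouped by use case."""
    totals: dict[str, float] = {}
    counts: dict[str, int] = {}
    for r in records:
        totals[r.use_case] = totals.get(r.use_case, 0.0) + r.cost_usd
        counts[r.use_case] = counts.get(r.use_case, 0) + 1
    return {uc: totals[uc] / counts[uc] for uc in totals}


def sample_for_review(records: list[AIRecord], k: int = 30,
                      seed: Optional[int] = None) -> list[AIRecord]:
    """Random sample without replacement; k=30 sits inside the 20-50/week range."""
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))
```

Call `log_call` wherever the model is invoked. Once a week, hand `sample_for_review(LOG)` to a reviewer and check `avg_cost_per_task(LOG)` against your budget.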

Week 4: standardize and expand

If it works:

- document the pattern

- templatize prompts

- add a lightweight evaluation checklist

- roll it to the next workflow
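"Templatize prompts" can be as light as a versioned registry of templates with named slots. The sketch below uses Python's standard `string.Template`. The registry layout, template name, and wording are hypothetical.

```python
from string import Template

# Hypothetical registry: (name, version) -> template with named placeholders.
PROMPTS = {
    ("ticket-summary", 2): Template(
        "Summarize this support ticket for a $audience handoff in at most "
        "$max_sentences sentences. Do not include personal data.\n\n$ticket_text"
    ),
}


def render_prompt(name: str, version: int, **slots: object) -> str:
    """Fill a registered template; raises KeyError for an unknown prompt or missing slot."""
    return PROMPTS[(name, version)].substitute(**slots)


prompt = render_prompt(
    "ticket-summary", 2,
    audience="tier-2 engineer",
    max_sentences=3,
    ticket_text="Customer reports a login loop after password reset.",
)
print(prompt)
```

Keying templates by name and version means a logged output can always be traced back to the exact prompt that produced it.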

This is how AI adoption becomes compounding, not chaotic.

Where the Stargate idea fits in the bigger U.S. AI story

The U.S. has an advantage in AI-powered digital services because it has three things at scale: cloud infrastructure, software talent, and a massive market that rewards productivity.

A named initiative like Stargate reinforces the direction of travel: more AI capability packaged for real-world use, not just research demos.

If you run a SaaS platform, an agency, a marketplace, or a content-driven business, the right move is to assume AI tooling will keep improving—and to build your processes so you can adopt improvements without breaking quality, compliance, or trust.

The next year will favor teams that can answer three questions clearly: What do we want AI to do? How will we measure it? What happens when it’s wrong?