Reversible generative models like Glow make AI easier to measure and operate. See where they fit in U.S. SaaS for anomaly detection, control, and monitoring.

Reversible Generative Models: What Glow Enables

Most teams building AI features in U.S. digital services hit the same wall: the generative model is powerful, but it’s expensive to run, hard to debug, and painful to deploy at scale. That’s not just an engineering problem; it becomes a product constraint. Latency creeps up, cloud bills balloon, and you start trimming “nice-to-have” AI features that customers actually want.

That’s why reversible generative models—the research direction associated with models like Glow—still matter in 2025, even in a world dominated by large language models. Glow is a milestone in a family of approaches called normalizing flows, where the model is designed so you can run it forward (data → latent representation) and backward (latent → generated data) efficiently.

This post explains what “reversible” means in practice, why Glow-style architectures are still relevant to AI-powered technology and digital services in the United States, and how U.S.-based SaaS platforms and product teams can apply the underlying ideas to ship faster, safer, and more predictable generative features.

What a reversible generative model actually gives you

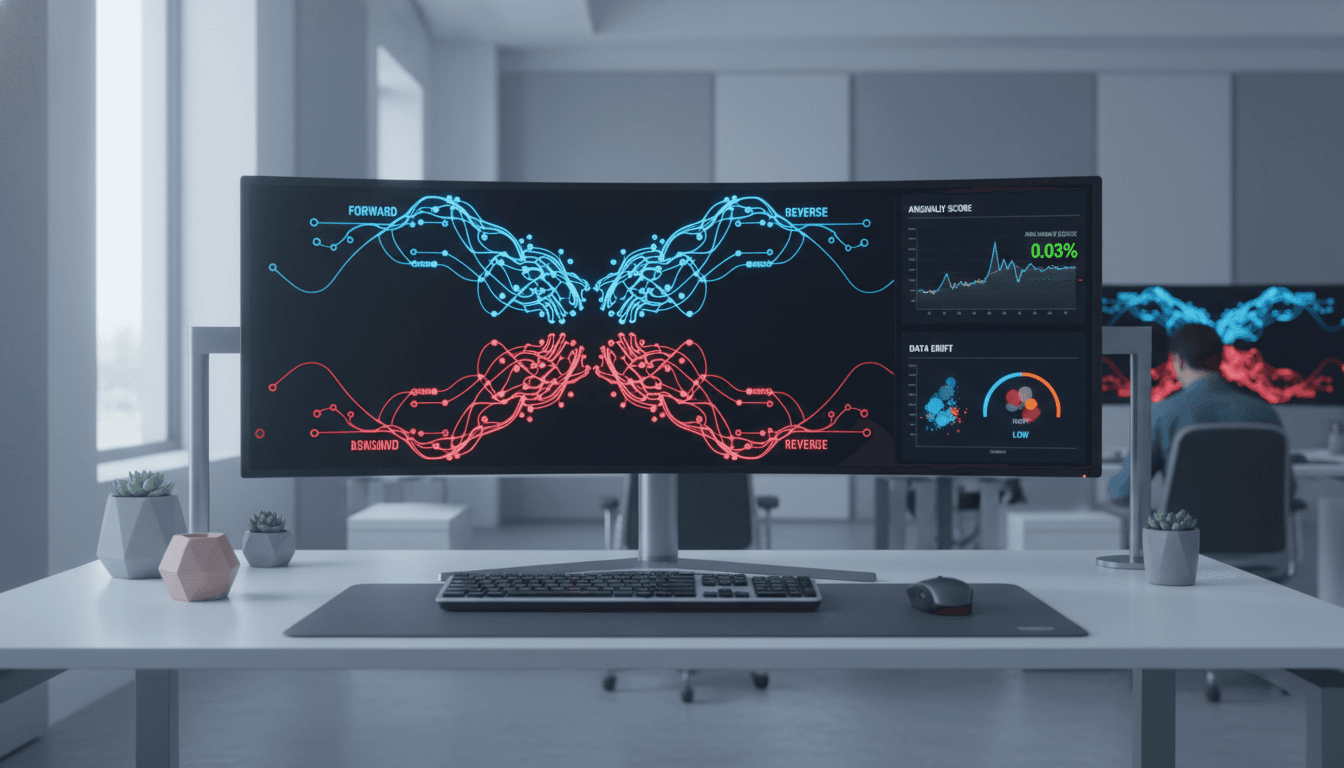

A reversible generative model gives you two reliable operations: (1) generate outputs from noise and (2) score or map real data back into a latent space with the same model.

In many popular generative approaches, you’re forced into trade-offs: sampling might be fast but likelihood scoring is tricky, or scoring is strong but sampling is slow. Normalizing flows (Glow is a well-known example) are built around an invertible transformation, which changes the day-to-day engineering experience.

Here’s the core practical value:

- Exact likelihoods (you can compute how probable an input is under the model).

- Bidirectional mapping between data and latent variables (useful for editing, compression, anomaly detection).

- More stable training than adversarial setups, because a flow optimizes a single maximum-likelihood objective instead of balancing a generator against a discriminator.
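To make the first two properties concrete, here is a minimal sketch of the smallest possible flow: a one-dimensional affine map over a standard normal base distribution. The class name `AffineFlow1D` and its parameters are illustrative assumptions, not anything taken from Glow, which stacks many learned, nonlinear invertible layers. The pattern is the same, though: one set of parameters gives you sampling, an exact inverse, and an exact log-likelihood.

```python
import math
import random


class AffineFlow1D:
    """Toy invertible model: x = shift + scale * z, with z ~ N(0, 1)."""

    def __init__(self, shift: float, scale: float):
        if scale <= 0:
            raise ValueError("scale must be positive to keep the map invertible")
        self.shift = shift
        self.scale = scale

    def to_latent(self, x: float) -> float:
        # Forward direction: data -> latent.
        return (x - self.shift) / self.scale

    def to_data(self, z: float) -> float:
        # Backward direction: latent -> data.
        return self.shift + self.scale * z

    def log_likelihood(self, x: float) -> float:
        # Change of variables: log p(x) = log N(z; 0, 1) + log|dz/dx|.
        z = self.to_latent(x)
        log_base = -0.5 * (z * z + math.log(2.0 * math.pi))
        log_det = -math.log(self.scale)
        return log_base + log_det

    def sample(self, rng: random.Random) -> float:
        # Generation: draw latent noise, then run the backward direction.
        return self.to_data(rng.gauss(0.0, 1.0))


flow = AffineFlow1D(shift=10.0, scale=2.0)
z = flow.to_latent(13.0)        # 1.5
x_back = flow.to_data(z)        # 13.0, the round trip loses nothing
typical = flow.log_likelihood(10.0)
unusual = flow.log_likelihood(20.0)   # lower than `typical`
```

The log-likelihood here is exact rather than a bound or an estimate, which is what makes it usable as a monitoring signal.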

If you build digital services where you need predictable behavior and measurable confidence—think fraud signals, document workflows, medical intake, enterprise content moderation—those three properties aren’t academic. They translate directly into easier QA, clearer monitoring, and fewer “mystery failures.”

“Reversible” doesn’t mean “undo any mistake”

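Reversible means the model’s transformations are mathematically invertible: every input maps to exactly one latent code, and that code maps back to exactly the same input. It doesn’t guarantee outputs are always correct or safe. What it does guarantee is that no information is discarded along the way, so any output can be traced back to the latent that produced it.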

A product-minded way to say it:

Reversible models are easier to reason about because they don’t hide the mapping between inputs and generated outputs behind one-way compression.

Glow in one paragraph (and why it got attention)

Glow is a flow-based architecture, introduced by OpenAI researchers in 2018, designed for high-quality image generation using a stack of invertible operations (including invertible 1×1 convolutions, affine coupling layers, and a multi-scale design). The “better” in “better reversible generative models,” the phrase from Glow’s original announcement, is about improving quality and efficiency so these models are practical, not just elegant.

Even if your company never trains an image generator, Glow popularized ideas that show up elsewhere: invertibility, controllable representations, and measurable likelihoods. Those ideas matter to U.S. companies building AI into customer-facing digital experiences where reliability and traceability are features.
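For a feel of what one "invertible operation" looks like, here is a minimal sketch of an affine coupling layer, one of the building blocks used in Glow-style flows. It is written in plain Python on a short list of numbers rather than on image tensors. The function `scale_and_shift` is a hypothetical hand-written stand-in for the small neural network a real model would learn.

```python
import math


def scale_and_shift(x_a):
    # Hypothetical stand-in for a learned network that only sees x_a.
    log_s = [math.tanh(0.5 * v) for v in x_a]
    t = [2.0 * v for v in x_a]
    return log_s, t


def _split(values):
    if len(values) % 2 != 0:
        raise ValueError("this sketch assumes an even number of features")
    half = len(values) // 2
    return values[:half], values[half:]


def coupling_forward(x):
    """Map x -> y and return (y, log|det Jacobian|)."""
    x_a, x_b = _split(x)
    log_s, t = scale_and_shift(x_a)
    # First half passes through unchanged; second half is scaled and shifted.
    y_b = [xb * math.exp(ls) + ti for xb, ls, ti in zip(x_b, log_s, t)]
    return x_a + y_b, sum(log_s)


def coupling_inverse(y):
    """Map y -> x exactly, reusing the same scale_and_shift function."""
    y_a, y_b = _split(y)
    log_s, t = scale_and_shift(y_a)  # y_a equals x_a, so these match forward
    x_b = [(yb - ti) * math.exp(-ls) for yb, ls, ti in zip(y_b, log_s, t)]
    return y_a + x_b


x = [0.3, -1.2, 0.8, 2.0]
y, log_det = coupling_forward(x)
x_recovered = coupling_inverse(y)  # matches x up to floating-point error
```

The design choice worth noticing: the network inside the layer never has to be inverted. Because half the input passes through untouched, the inverse can recompute the same scale and shift and undo them, and the log-determinant is just a sum.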

Why U.S. digital services should care in 2025

The U.S. market is crowded with AI features that look the same: “generate text,” “summarize,” “rewrite,” “make an image.” The differentiation has shifted from raw capability to operational excellence:

- predictable latency

- controllable outputs

- auditable behavior

- lower per-request cost

- clear evaluation metrics

Reversible architectures support that shift because they’re built for measurement and control, not just impressive demos.

Seasonal reality check: Q4 and Q1 are when AI reliability matters most

It’s late December. For many U.S. businesses, this is peak load (ecommerce support, shipping exceptions, billing questions) and the start of annual planning.

When traffic spikes, AI systems fail in familiar ways:

- timeouts and queue backups

- “helpful” hallucinations in support channels

- inability to explain why a request was flagged

Flow-based and reversible-model ideas won’t replace language models for support chat. But they can strengthen the surrounding system: anomaly detection, content risk scoring, identity/document checks, and “is this request normal?” classification—areas where likelihood scoring and traceability are valuable.

Where reversible models help real products (not research demos)

Reversible generative models shine when you need density estimation or structured generation with tight constraints.

1) Anomaly detection for digital services

If you can compute a likelihood, you can rank “how typical” a new event looks.

Examples in U.S. SaaS and fintech:

- Detect unusual login patterns or session behaviors.

- Flag suspicious invoice layouts or payment instructions in AP automation.

- Identify outlier customer support attachments (scanned IDs, forms) that don’t match normal distributions.

This matters because anomaly detection is often built from brittle rules. A likelihood-based approach learns what “normal” looks like from data, so retraining on recent traffic lets it adapt as your product changes.
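The ranking idea can be sketched with any model that returns a log-likelihood. The example below uses a fitted Gaussian as the simplest possible stand-in for a flow. The event IDs and the "logins per hour" feature are made-up illustration data.

```python
import math
import statistics


def fit_gaussian(values):
    # Simplest density model: a mean and a sample standard deviation.
    return statistics.mean(values), statistics.stdev(values)


def log_likelihood(x, mean, std):
    z = (x - mean) / std
    return -0.5 * (z * z + math.log(2.0 * math.pi)) - math.log(std)


# Hypothetical history: logins per hour for one account.
history = [4, 5, 6, 5, 4, 6, 5, 5, 4, 6]
mean, std = fit_gaussian(history)

# Hypothetical new events to score.
new_events = {"evt-1": 5, "evt-2": 7, "evt-3": 40}

# Sort so the least typical event (lowest log-likelihood) comes first.
ranked = sorted(
    new_events,
    key=lambda event_id: log_likelihood(new_events[event_id], mean, std),
)
```

Here `ranked` puts `evt-3` first, then `evt-2`, then `evt-1`. Swapping the Gaussian for a trained flow changes how `log_likelihood` is computed, not how the ranking is consumed downstream.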

2) Editing and controllable generation

Reversible mappings make “edit in latent space, then invert back” a natural workflow.

For product teams, that idea generalizes:

- Personalization: nudge representations toward a customer’s brand style.

- Content transformations: normalize scans, fix lighting artifacts, enhance readability.

- Compliance: remove sensitive patterns while preserving usability.

Even when the core generation is handled by other models, reversible components can act as a controlled transformation layer.
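The "edit in latent space, then invert back" workflow can be sketched in a few lines. The flow below is a per-feature affine map with made-up parameters, chosen only to keep the example short. With a map this simple the edit is equivalent to blending in data space; a trained nonlinear flow is where latent edits start to behave differently. The feature names (brightness, contrast) are illustrative assumptions.

```python
def encode(x, shift, scale):
    # Data -> latent, one feature at a time.
    return [(xi - si) / sc for xi, si, sc in zip(x, shift, scale)]


def decode(z, shift, scale):
    # Latent -> data, the exact inverse of encode.
    return [si + sc * zi for zi, si, sc in zip(z, shift, scale)]


def edit_toward(x, target, strength, shift, scale):
    """Move x toward target in latent space; strength is in [0, 1]."""
    z = encode(x, shift, scale)
    z_target = encode(target, shift, scale)
    z_edited = [zi + strength * (zt - zi) for zi, zt in zip(z, z_target)]
    return decode(z_edited, shift, scale)


# Hypothetical flow parameters for two features: [brightness, contrast].
shift = [0.5, 0.2]
scale = [0.1, 0.05]

scan = [0.4, 0.25]          # a customer upload
brand_style = [0.6, 0.2]    # a reference example of the desired look

nudged = edit_toward(scan, brand_style, strength=0.5, shift=shift, scale=scale)
```

A `strength` of 0 returns the original input and 1 returns the target, which gives product teams a single dial to expose or to cap.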

3) Better monitoring: turning generative uncertainty into metrics

Most AI teams monitor latency and error rates, then cross their fingers on quality.

With likelihood-based models you can build dashboards around:

- distribution shift (inputs are drifting)

- low-likelihood events by segment (one customer tenant behaving oddly)

- “unknown unknowns” detection (things your classifier was never trained on)

A line I use internally:

If you can’t measure confidence, you can’t operate AI at scale.

Reversible-model thinking pushes you toward measurable signals.
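As one example of a measurable signal, the sketch below computes the share of low-likelihood events per segment, which is the kind of number a dashboard panel could plot per tenant. The tenant names, scores, and threshold are made-up values. It assumes each event has already been scored by some likelihood model.

```python
from collections import defaultdict


def low_likelihood_rate_by_segment(events, threshold):
    """events: iterable of (segment, log_likelihood) pairs.

    Returns {segment: fraction of events scoring below threshold}.
    """
    totals = defaultdict(int)
    low = defaultdict(int)
    for segment, score in events:
        totals[segment] += 1
        if score < threshold:
            low[segment] += 1
    return {segment: low[segment] / totals[segment] for segment in totals}


# Hypothetical scored events: (tenant, log-likelihood under the model).
scored_events = [
    ("acme", -2.1),
    ("acme", -9.5),
    ("globex", -1.8),
    ("globex", -2.4),
]

rates = low_likelihood_rate_by_segment(scored_events, threshold=-6.0)
```

With this data, `rates` is `{"acme": 0.5, "globex": 0.0}`, so the first tenant stands out.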

How Glow-style ideas fit alongside LLMs

Most U.S. digital services today rely on large language models for communication tasks: support replies, knowledge base drafts, sales enablement, and internal copilots. Glow isn’t competing with that.

The better architecture is a system, not a single model:

- LLM for language generation and reasoning

- classifier/ranker for policy and routing

- likelihood/anomaly model for “is this normal?”

- retrieval layer for grounding (docs, tickets, policies)

Reversible generative models fit into the “likelihood/anomaly” and “structured transformation” slots.

A practical pattern for SaaS: the “AI safety sandwich”

If you’re selling AI features to U.S. businesses, here’s a pattern that reduces incidents:

- Pre-check: score incoming content/events for abnormality and risk.

- Generate: run the LLM or generative model.

- Post-check: validate output for policy, format, and plausibility.

The pre-check and post-check are where flow-based likelihood signals can be useful—especially when you’re trying to keep false positives low while still catching weird edge cases.
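The three steps above can be sketched as a single function that takes the scorer, the generator, and the validator as plug-in callables. Everything here is a hypothetical shape for illustration: the function names, the result dictionary, and the stub implementations are assumptions, and the stubs stand in for a real likelihood model and a real LLM call.

```python
from typing import Callable, Optional


def safety_sandwich(
    request: str,
    pre_score: Callable[[str], float],
    generate: Callable[[str], str],
    post_check: Callable[[str], bool],
    min_log_likelihood: float,
) -> dict:
    # Pre-check: refuse to automate inputs the model considers atypical.
    if pre_score(request) < min_log_likelihood:
        return {"status": "escalated", "reason": "atypical_input", "output": None}

    # Generate: only reached for inputs that look normal.
    draft = generate(request)

    # Post-check: validate policy, format, and plausibility of the draft.
    if not post_check(draft):
        return {"status": "escalated", "reason": "failed_post_check", "output": None}

    return {"status": "ok", "reason": None, "output": draft}


# Hypothetical stubs so the sketch runs end to end.
def stub_pre_score(text: str) -> float:
    return -float(len(text))  # pretend longer requests are less typical


def stub_generate(text: str) -> str:
    return "Draft reply to: " + text


def stub_post_check(draft: str) -> bool:
    return len(draft) <= 200


normal = safety_sandwich(
    "Where is my order?", stub_pre_score, stub_generate, stub_post_check, -100.0
)
odd = safety_sandwich(
    "x" * 500, stub_pre_score, stub_generate, stub_post_check, -100.0
)
```

Returning a reason code on every escalation is what makes it possible to explain later why a request was flagged.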

Implementation guidance: what to do if you’re not training Glow

You probably aren’t training a Glow model from scratch. That’s fine. The win is adopting the principles.

Start with the business decision the model will support

Pick a decision that benefits from “how typical is this?”

Good candidates:

- routing (send to human vs automation)

- fraud review queues

- document verification workflows

- spam/abuse scoring

Define the action threshold and what happens on each side of it.

Instrument data the way you’ll operate it

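Reversible/density models are sensitive to data drift: when inputs shift, likelihood scores shift with them, and a fixed threshold stops meaning what it meant at launch. Instrument drift from day one: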

- segment by customer tenant

- segment by geography/device

- track input feature distributions weekly

- track tail events (lowest 1% likelihood)

This is boring work. It’s also what keeps AI features alive after launch.
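Tail tracking in particular is easy to start small. The sketch below fixes a threshold at the lowest 1% of baseline log-likelihood scores, then checks what share of a later week falls at or below it. The scores are synthetic and the "3x the expected rate" alert rule is an arbitrary assumption to tune against your own traffic.

```python
import math


def tail_threshold(baseline_scores, tail_fraction=0.01):
    """Score at or below which the lowest `tail_fraction` of baseline falls."""
    ordered = sorted(baseline_scores)
    index = max(0, math.ceil(tail_fraction * len(ordered)) - 1)
    return ordered[index]


def tail_rate(scores, threshold):
    # Share of scores that land in the baseline's low-likelihood tail.
    return sum(1 for s in scores if s <= threshold) / len(scores)


# Synthetic baseline: 200 log-likelihood scores from 0.0 down to -19.9.
baseline = [-i / 10 for i in range(200)]
threshold = tail_threshold(baseline, tail_fraction=0.01)

# Synthetic later week: mostly typical scores plus three tail events.
this_week = [-19.9, -19.85, -19.8] + [-5.0] * 47

weekly_rate = tail_rate(this_week, threshold)
drift_alert = weekly_rate > 3 * 0.01  # assumed rule: alert at 3x expected
```

On the baseline itself the tail rate is 1% by construction, so a weekly rate several times higher is a direct signal that inputs have moved.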

Prefer hybrid systems over ideology

I’m opinionated here: don’t get religious about one model family.

- Use LLMs where language matters.

- Use flows/likelihood models where confidence and anomaly scoring matter.

- Use simpler baselines (logistic regression, gradient boosting) when they’re easier to maintain.

Customers don’t buy “flow-based modeling.” They buy fewer chargebacks, faster onboarding, and fewer escalations.

People also ask: quick answers teams need

Are reversible generative models faster than diffusion models?

For sampling, often yes: generation is a single pass through an invertible network rather than many iterative denoising steps. Actual speed still depends on architecture and hardware.

Do reversible models work for text like they do for images?

They’re most common in continuous domains like images and audio. Text is discrete, and the change-of-variables math behind flows assumes continuous data, so applying them to text takes workarounds. That said, the likelihood and monitoring mindset still applies.

What’s the biggest product benefit?

Operational clarity. When you can score inputs and detect drift, you can build alerts, guardrails, and SLAs around your AI features instead of hoping they behave.

What this means for AI-powered digital services in the U.S.

Glow-style reversible generative modeling is a reminder that progress in AI isn’t only about bigger models. A lot of real value comes from models that are measurable, debuggable, and production-friendly.

If you’re building AI into a U.S.-based SaaS product, start looking at your roadmap through that lens. Where are you currently blind—unable to quantify uncertainty, unable to detect drift, unable to explain why something looks suspicious? Those are the spots where reversible-model ideas can pay for themselves.

If you’re planning Q1 initiatives right now, a strong bet is pairing your generative features with systems that can score, monitor, and control them. The teams that win in 2026 won’t be the ones who can generate the most. They’ll be the ones who can operate AI reliably at scale.

What part of your AI stack today is hardest to measure: input risk, output quality, or drift over time? That answer usually points to your next build.