Model distillation in APIs helps teams keep large-model quality while cutting latency and cost. Learn where it fits, how to deploy it, and what to measure.

Model Distillation in APIs: Faster AI at Lower Cost

Most teams don’t have an “AI problem.” They have a unit economics problem.

You can build an impressive prototype with a large model, ship it to production, and then watch the bill spike the first time your support volume doubles or your product goes viral on a holiday weekend. In the U.S. digital services market—where customers expect instant responses and always-on personalization—latency and cost aren’t technical footnotes. They decide whether your AI features survive past Q1.

That’s why model distillation in an API is one of the most practical ideas in modern AI infrastructure. The promise is simple: keep the behavior you like from a large “teacher” model, but run it using a smaller “student” model that’s cheaper, faster, and easier to scale. If your business depends on AI-powered customer communication, agent workflows, or content generation, distillation is how you stop paying premium prices for every single token.

Why model distillation matters for U.S. digital services

Model distillation matters because it turns AI from a premium feature into a scalable service layer. In practice, that means you can offer AI-powered experiences—support automation, sales follow-ups, onboarding copilots—without pricing yourself into a corner.

In the United States, companies are under pressure to do more with less: higher customer expectations, tighter budgets, and intense competition across SaaS, fintech, healthcare, retail, and logistics. Distillation helps in three concrete ways:

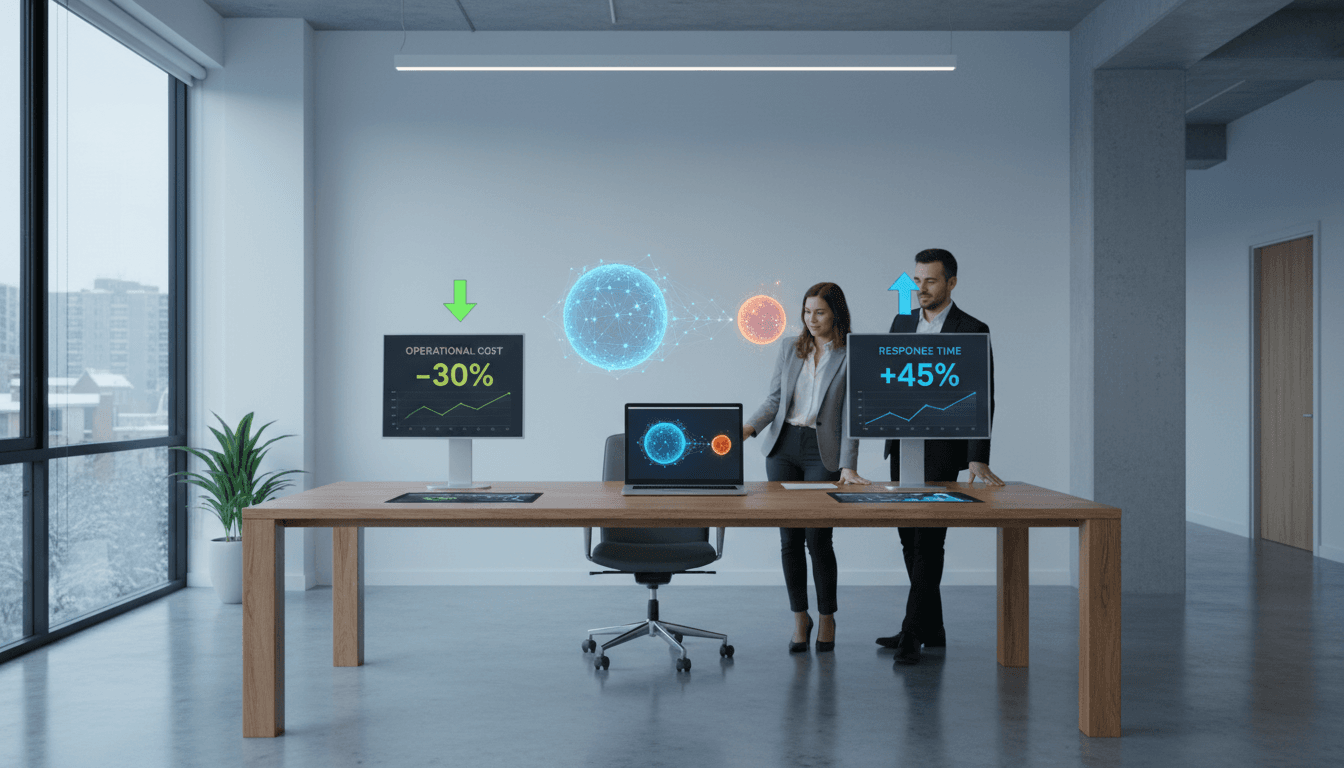

- Cost control: Smaller models generally reduce per-request costs, which is what you feel most in production.

- Speed: Lower latency matters for chat, voice, and real-time agent assist.

- Reliability at scale: You’re less likely to hit throughput bottlenecks when traffic surges.

Here’s the stance I’ll take: if you’re serving high-volume, repeatable tasks with a frontier model, you’re probably overspending. Not always—but often.

The hidden cost trap: “It works in staging”

A common pattern looks like this:

- Team prototypes with a large model because it’s easiest to get good outputs.

- The feature ships.

- Usage grows.

- Finance asks why inference costs are now a line item that needs weekly attention.

Distillation is one of the cleanest ways to keep the UX while improving the economics.

What “model distillation in the API” actually means

Model distillation is training or tuning a smaller model to imitate a larger model’s outputs for a specific set of tasks. The big model is the teacher. The smaller one is the student.

In an API context, the goal usually isn’t academic benchmark performance. It’s operational performance:

- Similar responses for your real prompts

- Fewer tokens wasted

- Lower latency and cost

- Stable tone, policy adherence, and formatting

Think of it like this: the teacher model is your senior specialist. The student model becomes the well-trained frontline team—fast, consistent, and able to handle most tickets without escalation.

Snippet-worthy definition: Model distillation is the process of copying task-specific behavior from a large model into a smaller model so you can run the same workflow with lower cost and latency.

Distillation vs. fine-tuning vs. prompt engineering

These get mixed up constantly. Here’s the practical difference:

- Prompt engineering: You change inputs to get better outputs. Cheap and fast to try. Fragile under edge cases.

- Fine-tuning: You adjust a model’s weights to improve performance on your data. Powerful, but can be slower to iterate and may require more governance.

- Distillation: You use a stronger model’s outputs (often at scale) as training targets for a smaller model. It’s ideal when you want the teacher’s style and decisions but need student-level costs.

In real production systems, you often use all three: prompt design for guardrails, fine-tuning for domain language, and distillation to make the whole thing economical.

Where distillation pays off (and where it doesn’t)

Distillation pays off most when your requests are high-volume and structurally repetitive. If your app does the same few jobs thousands of times a day, distillation is an obvious candidate.

High-ROI use cases in digital services

Here are common U.S. digital service workflows where distillation tends to shine:

- Customer support triage and replies: classify intent, detect sentiment, draft responses, suggest macros

- Sales development and follow-up: write email variants, handle objections, summarize calls, update CRM notes

- Document and policy Q&A: answer questions from internal handbooks, benefit plans, SOPs, onboarding docs

- Content operations: product descriptions, help center drafts, ad variations, social post versions

- Agent assist in real time: suggest next steps, recommended articles, compliance-safe phrasing

These are exactly the types of AI-powered technology and digital services that are expanding across the U.S. economy—because they map directly to operational costs and customer experience.

When you should keep the large model

Distillation isn’t magic. Some tasks still justify a bigger model:

- Complex reasoning with novel edge cases (rare prompts, high ambiguity)

- High-stakes decisions (medical, legal, financial approvals) where you also need layered controls

- Deep multi-tool orchestration where the model is acting as a planner across many systems

A practical approach is tiered inference:

- Student model handles the default path

- Teacher model is used for escalations, retries, or “uncertain” classifications

This keeps costs predictable while protecting quality.
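
A minimal sketch of that tiered pattern in Python, assuming your student and teacher are wrapped as plain functions that take a prompt and return text. `tiered_generate`, `is_acceptable`, and `student_retries` are hypothetical names, not any provider's API; `is_acceptable` stands in for whatever cheap check you trust, such as a format validator or a confidence threshold.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical wrappers around your provider's client: prompt in, text out.
ModelFn = Callable[[str], str]


@dataclass
class TieredResult:
    text: str
    tier: str      # "student" or "teacher"
    attempts: int  # how many student calls were made first


def tiered_generate(
    prompt: str,
    student: ModelFn,
    teacher: ModelFn,
    is_acceptable: Callable[[str], bool],
    student_retries: int = 1,
) -> TieredResult:
    """Serve the default path with the student; escalate to the teacher
    only when every student attempt errors out or fails validation."""
    attempts = 0
    for _ in range(1 + student_retries):
        attempts += 1
        try:
            text = student(prompt)
        except Exception:
            continue  # treat a transport/model error like a failed check
        if is_acceptable(text):
            return TieredResult(text, "student", attempts)
    # Student path exhausted: pay teacher prices for this request only.
    return TieredResult(teacher(prompt), "teacher", attempts)
```

Because the teacher only runs on the escalation path, the share of requests that reach it is the number that drives your blended cost.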

A practical distillation workflow for API teams

The best distillation workflow is boring and measurable. You’re not trying to impress anyone with theory—you’re trying to ship a cheaper, faster model that behaves the same way.

Step 1: Define the job in crisp, testable terms

Pick one workflow. Not “customer support.” Something like:

- “Draft a refund response for subscription cancellations, in our brand voice, with a 3-bullet summary and a single CTA.”

Write requirements that are easy to score:

- Format rules (JSON, bullets, headings)

- Tone constraints

- Must-include policy statements

- Disallowed claims
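
Requirements written this way can be checked by code rather than by eye. Below is a minimal Python sketch for the refund-response example, assuming bullets start with `- ` and the call to action is marked with a placeholder token `[CTA]`; the `Spec` fields, the marker, and any policy phrases you pass in are illustrative. Tone is left out because it usually needs a rubric-based grader rather than string matching.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Spec:
    """Testable requirements for one workflow (values are illustrative)."""
    bullet_count: int = 3
    cta_count: int = 1
    cta_marker: str = r"\[CTA\]"  # hypothetical placeholder for the call to action
    must_include: list[str] = field(default_factory=list)  # required policy statements
    disallowed: list[str] = field(default_factory=list)    # claims the reply must never make


def score(response: str, spec: Spec) -> dict[str, bool]:
    """Return pass/fail per requirement so failures can be counted per rule."""
    lower = response.lower()
    bullets = [ln for ln in response.splitlines() if ln.lstrip().startswith("- ")]
    return {
        "bullet_count": len(bullets) == spec.bullet_count,
        "cta_count": len(re.findall(spec.cta_marker, response)) == spec.cta_count,
        "must_include": all(p.lower() in lower for p in spec.must_include),
        "no_disallowed": not any(p.lower() in lower for p in spec.disallowed),
    }
```

Returning one boolean per rule, rather than a single score, makes it easy to see which requirement a model is breaking most often.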

Step 2: Build a “golden set” of real prompts

Collect a dataset that reflects production:

- 200–2,000 prompts is often enough to start

- Include messy user inputs (typos, short messages, angry complaints)

- Include edge cases (multiple intents, policy exceptions)

If you only distill from clean prompts, your student model will fail on the ugly ones—exactly the ones customers send at 11:47 PM on December 26.
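
One way to keep those ugly inputs from being sampled away is to reserve a floor per bucket before filling the rest at random. This is a minimal Python sketch, assuming you already have logged prompts tagged by some earlier labeling step; `build_golden_set` and tag names such as `messy` are hypothetical.

```python
import random
from collections import defaultdict
from typing import Optional


def build_golden_set(
    logged: list[tuple[str, str]],
    target_size: int = 500,
    min_per_tag: Optional[dict[str, int]] = None,
    seed: int = 0,
) -> list[tuple[str, str]]:
    """Sample (prompt, tag) pairs from production logs, guaranteeing a
    minimum count for hard buckets such as messy or multi-intent inputs.

    If the floors add up to more than target_size, the floors win.
    """
    rng = random.Random(seed)  # fixed seed keeps the golden set reproducible
    by_tag: dict[str, list[int]] = defaultdict(list)
    for i, (_, tag) in enumerate(logged):
        by_tag[tag].append(i)

    picked: set[int] = set()
    # 1) Honor per-tag floors first, capped by what the logs actually contain.
    for tag, floor in (min_per_tag or {}).items():
        pool = by_tag.get(tag, [])
        picked.update(rng.sample(pool, min(floor, len(pool))))

    # 2) Fill the remaining slots uniformly from everything not yet picked.
    remaining = [i for i in range(len(logged)) if i not in picked]
    slots = max(0, target_size - len(picked))
    picked.update(rng.sample(remaining, min(slots, len(remaining))))
    return [logged[i] for i in sorted(picked)]
```

The fixed seed matters: re-running the sampler gives you the same golden set, so scores stay comparable across distillation cycles.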

Step 3: Generate teacher outputs (and keep the metadata)

Run the golden set through the teacher model and store:

- Prompt

- Teacher response

- Any tool outputs used

- Ground-truth labels where available (e.g., “billing dispute”)

- Rubric scores (helpfulness, accuracy, policy compliance)

This becomes your training and evaluation backbone.
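
A minimal Python sketch of that storage step, writing one JSON object per line (JSONL) so later training and evaluation jobs can stream it. It assumes a hypothetical `teacher` function that returns the response text plus any tool outputs it used; rubric scores start empty because they are typically added by a separate grading pass.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Callable, Optional, TextIO

# Hypothetical teacher wrapper: prompt in, (response text, tool outputs) out.
TeacherFn = Callable[[str], tuple[str, list]]


@dataclass
class TeacherRecord:
    prompt: str
    teacher_response: str
    tool_outputs: list = field(default_factory=list)
    label: Optional[str] = None                 # ground truth, e.g. "billing dispute"
    rubric: dict = field(default_factory=dict)  # filled later: helpfulness, accuracy, ...


def record_teacher_outputs(golden: list[dict], teacher: TeacherFn, out: TextIO) -> int:
    """Run each golden-set item through the teacher and write one JSON line each."""
    written = 0
    for item in golden:
        text, tools = teacher(item["prompt"])
        rec = TeacherRecord(
            prompt=item["prompt"],
            teacher_response=text,
            tool_outputs=tools,
            label=item.get("label"),
        )
        out.write(json.dumps(asdict(rec)) + "\n")
        written += 1
    return written
```

In practice `out` would be an open file, for example `open("teacher.jsonl", "w", encoding="utf-8")`.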

Step 4: Train/tune the student and evaluate like a product

Treat evaluation as a release gate:

- Exactness metrics: Does it follow format and required fields?

- Policy metrics: Does it avoid prohibited claims?

- Customer metrics: CSAT proxies, containment rate, escalation rate

- Ops metrics: p95 latency, cost per resolution, tokens per conversation

A strong rule: don’t ship a distilled model without a rollback path.
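
A release gate can be as small as a function that turns per-example eval results into the metrics above and refuses to pass if any threshold is missed. This Python sketch makes several assumptions worth flagging: the thresholds are placeholders, escalation rate stands in for the customer metrics, and “cost per resolution” is taken to mean total spend divided by cases resolved without escalation.

```python
from dataclasses import dataclass


@dataclass
class EvalRow:
    format_ok: bool    # exactness: format and required fields present
    policy_ok: bool    # policy: no prohibited claims
    escalated: bool    # customer proxy: needed a human or the teacher
    latency_ms: float
    cost_usd: float


def p95(values: list[float]) -> float:
    """Nearest-rank 95th percentile; rank is computed with integer ceiling division."""
    ordered = sorted(values)
    rank = -(-95 * len(ordered) // 100)
    return ordered[rank - 1]


def release_gate(
    rows: list[EvalRow],
    *,
    min_format_rate: float = 0.98,
    min_policy_rate: float = 1.0,
    max_escalation_rate: float = 0.15,
    max_p95_ms: float = 1500.0,
) -> tuple[bool, dict[str, float]]:
    """Return (ship?, metrics). A False result means keep serving the current model."""
    n = len(rows)
    resolved = sum(not r.escalated for r in rows)
    metrics = {
        "format_rate": sum(r.format_ok for r in rows) / n,
        "policy_rate": sum(r.policy_ok for r in rows) / n,
        "escalation_rate": (n - resolved) / n,
        "p95_latency_ms": p95([r.latency_ms for r in rows]),
        "cost_per_resolution": sum(r.cost_usd for r in rows) / max(resolved, 1),
    }
    ship = (
        metrics["format_rate"] >= min_format_rate
        and metrics["policy_rate"] >= min_policy_rate
        and metrics["escalation_rate"] <= max_escalation_rate
        and metrics["p95_latency_ms"] <= max_p95_ms
    )
    return ship, metrics
```

Wiring the False branch to “keep the current model version pinned” is one simple way to honor the rollback rule.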

Step 5: Deploy with routing and monitoring

I’ve found routing is where teams win long-term. A simple pattern:

- Start with student model

- If confidence is low, the user is angry, or a policy-sensitive category is detected → teacher model

- Log failures and add them back into the next distillation cycle

That feedback loop is how your AI gets cheaper and better.
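
The routing rules above fit in a few lines. This Python sketch assumes you already compute a confidence score, a sentiment score between -1 and 1, and a category label upstream (for example with a small classifier); the thresholds, category names, and the list used as a queue are hypothetical. Anything routed to the teacher, or flagged as a failure later, is kept as a candidate example for the next distillation cycle.

```python
from dataclasses import dataclass

# Illustrative set; use whatever categories your policy team marks as sensitive.
POLICY_SENSITIVE = {"refund_dispute", "legal_threat", "account_security"}


@dataclass
class Signals:
    confidence: float  # upstream confidence for the student's answer, 0..1
    sentiment: float   # -1.0 (angry) .. 1.0 (happy)
    category: str


def choose_route(s: Signals, min_confidence: float = 0.7,
                 anger_threshold: float = -0.5) -> tuple[str, str]:
    """Return (model, reason); the reason is logged so routing can be audited."""
    if s.confidence < min_confidence:
        return "teacher", "low_confidence"
    if s.sentiment <= anger_threshold:
        return "teacher", "angry_user"
    if s.category in POLICY_SENSITIVE:
        return "teacher", "policy_sensitive"
    return "student", "default"


def queue_for_next_cycle(log: list[dict], prompt: str, route: str,
                         reason: str, failed: bool) -> None:
    """Keep escalations and observed failures as candidate distillation data."""
    if route == "teacher" or failed:
        log.append({"prompt": prompt, "route": route, "reason": reason, "failed": failed})
```

Logging the reason alongside the route lets you see which rule sends the most traffic to the teacher, which is usually the first place to look for the next round of training data.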

What to ask your vendor or platform team

If a provider says they support model distillation in the API, you should ask how it behaves under real production constraints. These questions cut through marketing quickly:

- Data handling: Can we control retention and isolate data per tenant/environment?

- Evaluation tooling: Do we get built-in evals or do we own the full harness?

- Versioning: Can we pin a student model version and roll forward safely?

- Routing support: Is there a clean way to fall back to a larger model?

- Observability: Do we get per-route cost, latency, and quality signals?

For vendor selection, these questions also make buying easier: you’re not “shopping for AI.” You’re shopping for predictable service delivery.

People also ask: model distillation in APIs

Is model distillation only for big tech?

No. If you have repetitive workflows and meaningful volume, distillation is often more valuable for mid-market SaaS and service providers because it stabilizes margins. The trick is scoping: distill one workflow first, prove savings, then expand.

Does distillation reduce quality?

It can, but it doesn’t have to. The quality drop usually comes from weak training data (too clean, too small, or not representative) or missing evaluation. With a good golden set and routing for edge cases, teams keep UX steady.

How do you know distillation is worth it?

If you can estimate:

- requests/day

- average tokens/request

- current cost/request

- target latency

…you can build a simple ROI model. Distillation is worth it when the savings over 3–6 months exceed the engineering and platform costs.
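
Those inputs are enough for a back-of-the-envelope calculation. The Python sketch below assumes per-token pricing for both models, that escalated requests still pay the teacher price, and flat engineering and platform costs; every number in the usage note is made up for illustration, not a benchmark or a real price list. Target latency is not part of the dollar math here, so check it separately against your p95 measurements.

```python
import math


def distillation_roi(
    requests_per_day: float,
    tokens_per_request: float,
    teacher_price_per_1k: float,   # USD per 1,000 tokens on the current model
    student_price_per_1k: float,   # USD per 1,000 tokens on the distilled model
    escalation_rate: float,        # share of traffic still routed to the teacher
    one_time_cost: float,          # engineering time to build, evaluate, ship
    platform_cost_per_month: float,
    months: int = 6,
    days_per_month: int = 30,
) -> dict[str, float]:
    """Estimate net savings over `months` and the break-even point in months."""
    teacher_cost = tokens_per_request / 1000 * teacher_price_per_1k
    student_cost = tokens_per_request / 1000 * student_price_per_1k
    # Blended cost: most traffic on the student, escalations on the teacher.
    blended_cost = (1 - escalation_rate) * student_cost + escalation_rate * teacher_cost
    monthly_savings = requests_per_day * days_per_month * (teacher_cost - blended_cost)
    monthly_net = monthly_savings - platform_cost_per_month
    return {
        "current_cost_per_request": teacher_cost,
        "blended_cost_per_request": blended_cost,
        "net_savings": monthly_net * months - one_time_cost,
        "break_even_months": one_time_cost / monthly_net if monthly_net > 0 else math.inf,
    }
```

With purely hypothetical inputs of 50,000 requests/day, 1,000 tokens/request, $0.01 vs. $0.002 per 1K tokens, a 10% escalation rate, $20,000 of engineering, and $500/month of platform cost, this works out to about $41,800 net over six months and break-even in just under two months. At 100 requests/day with the same costs, monthly savings never cover the platform fee, so break-even is infinite.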

Where this fits in the “AI powering U.S. digital services” story

Model distillation is a quiet shift in how AI gets deployed across the U.S. economy. The early phase was “Who can demo the smartest model?” The current phase is “Who can run AI profitably at scale?” Digital services live or die on unit economics, and distillation is one of the strongest tools for making AI sustainable.

If you’re building AI features through an API—support automation, sales copilots, content pipelines—distillation is the difference between a flashy feature and a durable system. Start with one workflow, measure it like a product, and only then expand.

If you could make one part of your customer communication 30–50% cheaper to run next quarter, which workflow would you pick first?