Human-feedback summarization makes AI outputs more accurate and user-aligned—critical for support, marketing, and sales in U.S. digital services.

Human Feedback Summaries: Better AI for Digital Services

Most companies don’t have a “content problem.” They have a trust problem.

Your team can publish a thousand support articles, product updates, and campaign emails—but if the language is off, the details are wrong, or the tone feels robotic, customers notice. And once they stop believing what your digital channels tell them, conversion drops, support volume rises, and brand consistency turns into a whack-a-mole game.

That’s why learning to summarize with human feedback matters far beyond research headlines. It’s one of the clearest paths to AI systems that produce accurate, user-aligned content at scale—exactly the kind of capability powering U.S. technology and digital services right now.

Why “summarize with human feedback” is a big deal

AI summarization isn’t hard to demo. It’s hard to trust in production.

A model can shorten a long article, a meeting transcript, or a support ticket thread. The business risk shows up when the summary:

- omits a crucial condition (“only available for enterprise plans”)

- invents a detail (a classic hallucination)

- changes the meaning (“we may” becomes “we will”)

- uses the wrong tone for the customer and brand

Human feedback training is the practical fix: rather than learning only from “what text usually looks like,” the model also learns from what people judge to be correct and helpful.

A useful summary isn’t the shortest one. It’s the one that preserves the decisions, constraints, and intent.

In digital services—especially in the U.S. SaaS market—this shows up everywhere: knowledge bases, customer support, sales enablement, compliance reviews, healthcare intake notes, and internal documentation.

How human feedback training actually improves summaries

Human feedback training (often discussed as RLHF—reinforcement learning from human feedback) is less mystical than it sounds. The core idea is simple:

- Humans rate or compare outputs. Given a document, the model produces two or more candidate summaries; reviewers pick the one they prefer.

- A “preference model” (often called a reward model) learns those judgments. It predicts which outputs humans would prefer.

- The summarizer is tuned, typically with reinforcement learning, to maximize the preference model’s score. Over time, it produces outputs closer to what reviewers reward.
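To make these steps concrete, here is a minimal Python sketch of the preference-model step. It assumes each summary has already been reduced to a small, hypothetical feature vector (coverage, count of unsupported claims, length), and it fits a linear Bradley-Terry model, in which the probability that one summary beats another is the sigmoid of the difference in their scores. The final step is approximated with best-of-n reranking, a simpler stand-in for reinforcement-learning tuning. Production systems typically learn from raw text with a neural reward model rather than hand-picked features.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def score(w: Vector, features: Vector) -> float:
    """Linear reward: a higher score means reviewers are predicted to prefer it."""
    return sum(wi * fi for wi, fi in zip(w, features))


def train_preference_model(
    pairs: List[Tuple[Vector, Vector]],  # (preferred, rejected) feature vectors
    dim: int,
    lr: float = 0.5,
    epochs: int = 200,
) -> List[float]:
    """Fit w so that P(preferred beats rejected) = sigmoid(score_pref - score_rej)."""
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = score(w, preferred) - score(w, rejected)
            # Derivative of log sigmoid(margin) with respect to margin is 1 - sigmoid(margin).
            grad = 1.0 - sigmoid(margin)
            for i in range(dim):
                w[i] += lr * grad * (preferred[i] - rejected[i])
    return w


def pick_best(w: Vector, candidates: List[Vector]) -> int:
    """Best-of-n reranking: index of the candidate the preference model scores highest."""
    return max(range(len(candidates)), key=lambda i: score(w, candidates[i]))


# Hypothetical features: [share of key facts covered, unsupported claims, length / 100 words]
judgments = [
    ([0.9, 0, 1.2], [0.6, 1, 1.0]),
    ([0.8, 0, 0.9], [0.8, 2, 0.9]),
    ([0.7, 0, 1.5], [0.4, 0, 1.5]),
]
weights = train_preference_model(judgments, dim=3)
best = pick_best(weights, [[0.5, 1, 1.0], [0.85, 0, 1.0]])
```

With only three judgments, the learned weights reward coverage and penalize unsupported claims. They also give length a small positive weight, because the longer summary happened to win once. Real preference data needs enough volume and variety to avoid spurious signals like that.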

What humans are really rewarding

When reviewers prefer one summary over another, they’re usually rewarding a bundle of qualities:

- Factual consistency: statements must match the source

- Coverage: key points included, not just the “headline”

- Clarity: readable, properly structured, minimal jargon

- Helpfulness: oriented toward the user’s next step

- Tone alignment: “friendly and direct” vs. “legalistic” vs. “clinical”

Here’s what I’ve found in real deployments: teams often focus on tone and formatting first because those problems are easy to spot. The bigger win comes from training and evaluation that target factuality and decision-critical details.

Why this beats generic prompt engineering

Prompts can help, but they don’t change what the model wants to do.

Human feedback changes the model’s internal incentives. If your reviewers consistently penalize vague claims and reward specific, sourced statements, the system becomes less “creative” and more grounded. That shift matters when AI is writing:

- customer-facing explanations of pricing and policies

- incident summaries after outages

- regulated disclosures (financial services, health, education)

Where this shows up in U.S. digital services (and why leads care)

Within the “How AI Is Powering Technology and Digital Services in the United States” series, summarization with human feedback is a foundational capability: it’s what makes AI usable for operational workflows, not just for drafts.

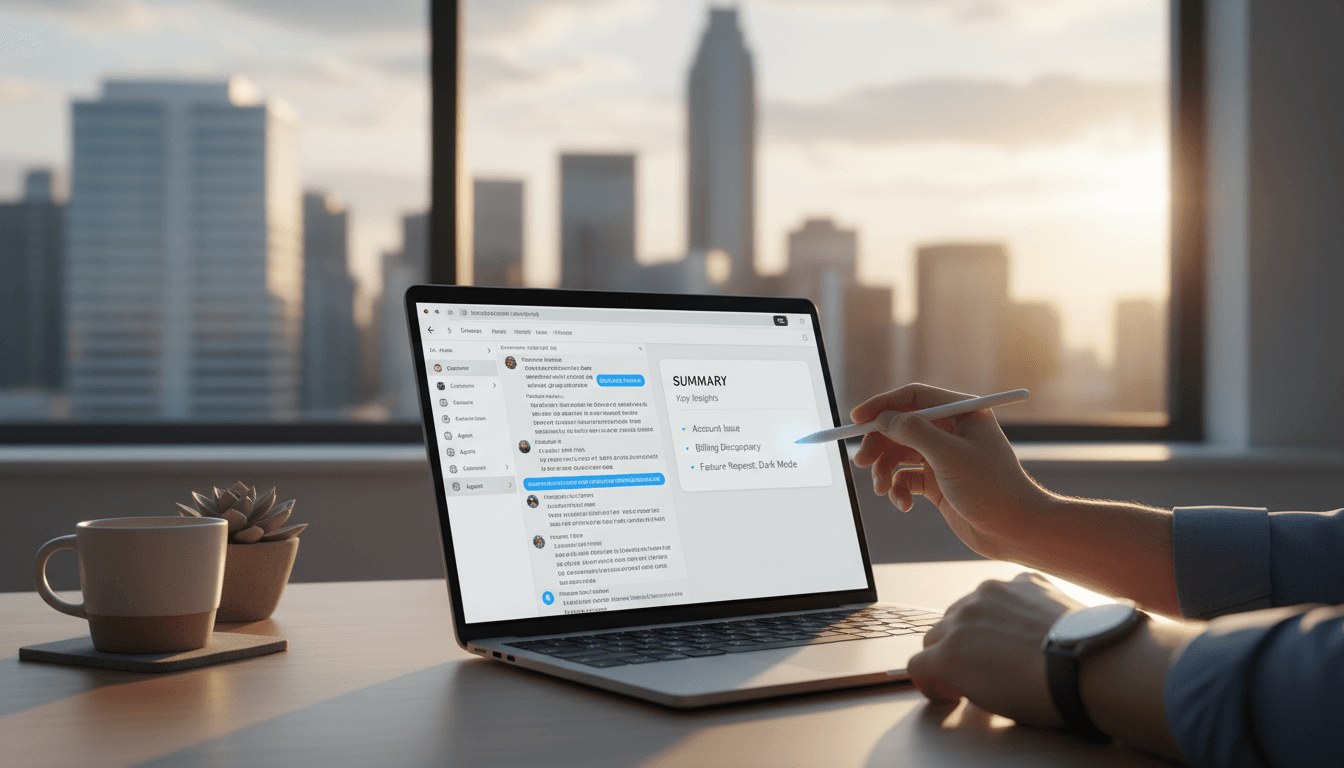

1) Customer support: faster resolution without making things up

Support teams live inside long, messy threads: logs, screenshots, chat transcripts, ticket histories.

A human-feedback-trained summarizer can:

- produce a case brief for the next agent (“what’s been tried, what failed, what to do next”)

- generate a customer recap that’s accurate and polite

- create a root-cause summary for engineering (symptoms, environment, reproduction steps)

The lead-gen angle is straightforward: if you sell software or services, better summaries mean lower support costs and higher customer satisfaction (CSAT). Those are outcomes buyers already budget for.

2) Marketing and lifecycle: content scaling with guardrails

Marketing teams want scale. They also need consistency.

Summaries are the hidden workhorse behind:

- turning webinars into campaign-ready abstracts

- condensing whitepapers into nurture sequences

- generating sales one-pagers from product docs

- creating “what changed” release note highlights

Human feedback is what keeps this from becoming a brand-risk machine.

A stance: if your AI content pipeline doesn’t include human preference signals (from editors, brand reviewers, legal, or product), you’re building a content engine that will drift. It might drift slowly. But it will.

3) Sales enablement: fewer “wall of text” follow-ups

Sales calls produce long transcripts and even longer follow-up emails.

Summarization tuned by human feedback can produce:

- action-item lists with owners and deadlines

- objection summaries with recommended responses

- account briefs that don’t bury the lede

When you’re selling into mid-market or enterprise in the U.S., buyers expect tight communication. A clean summary is often the difference between “this vendor is organized” and “this vendor is chaos.”

A practical framework for implementing human-feedback summarization

You don’t need a research lab to do this well. You need a review loop and a few disciplined choices.

Step 1: Decide what “good” means for your business

Start with a rubric. Make it concrete.

For example, a support-ticket summary rubric might score:

- Accuracy (0–2): no invented facts, correct identifiers

- Coverage (0–2): includes issue, impact, steps tried, current status

- Actionability (0–2): clear next step and requested info

- Tone (0–2): matches brand and context

- Brevity (0–2): short but not missing essentials

Write this down. Train reviewers on it. Otherwise, “human feedback” becomes “human vibes.”
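A rubric like this is easy to encode, so every review produces the same structured record. The following minimal Python sketch assumes the five criteria and the 0–2 scale listed above; the class and function names are hypothetical. It rejects out-of-range scores and includes a simple agreement check, because two trained reviewers scoring the same summary very differently is an early sign of “human vibes.”

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class TicketSummaryScore:
    """One reviewer's rubric scores for one support-ticket summary (each 0-2)."""

    accuracy: int
    coverage: int
    actionability: int
    tone: int
    brevity: int

    def __post_init__(self) -> None:
        # Reject anything outside the 0-2 scale so bad data never reaches training.
        for f in fields(self):
            value = getattr(self, f.name)
            if value not in (0, 1, 2):
                raise ValueError(f"{f.name} must be 0, 1, or 2, got {value!r}")

    def total(self) -> int:
        """Overall score out of 10."""
        return sum(getattr(self, f.name) for f in fields(self))


def agreement_rate(a: TicketSummaryScore, b: TicketSummaryScore) -> float:
    """Share of criteria on which two reviewers gave the same score."""
    names = [f.name for f in fields(a)]
    matches = sum(getattr(a, n) == getattr(b, n) for n in names)
    return matches / len(names)


reviewer_a = TicketSummaryScore(accuracy=2, coverage=1, actionability=2, tone=2, brevity=1)
reviewer_b = TicketSummaryScore(accuracy=2, coverage=2, actionability=2, tone=1, brevity=1)
```

In this example, both reviewers give the summary 8 out of 10 but agree on only three of the five criteria. Comparing totals alone would hide that disagreement.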

Step 2: Collect preference data without slowing teams down

The best feedback systems feel like normal work.

Places to embed simple comparisons:

- Editors choosing between two summary candidates

- Agents marking which candidate is more accurate and which is more helpful

- Product managers marking missing critical details

Keep it lightweight:

- two candidates per item is usually enough

- 30–90 seconds per judgment is realistic

- sample a subset of traffic (start with 1–5%)
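One way to keep collection lightweight is to sample items by a stable hash of the ticket or document ID, so the same item is always either in or out of the review pool. Another is to shuffle which candidate appears on which side, so reviewers don't favor a position. This minimal Python sketch works under those assumptions; the 2% rate and the class and function names are illustrative, not part of any particular tool.

```python
import hashlib
import random
from dataclasses import dataclass
from typing import Optional, Tuple

SAMPLE_RATE = 0.02  # assumption: 2%, inside the suggested 1-5% starting range


def in_review_sample(item_id: str, rate: float = SAMPLE_RATE) -> bool:
    """Deterministic sampling: the same ID always gets the same decision."""
    digest = hashlib.sha256(item_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform in [0, 1)
    return bucket < rate


@dataclass
class ComparisonTask:
    item_id: str
    left: str
    right: str
    choice: Optional[str] = None  # "left" or "right" once a reviewer decides

    def record(self, choice: str) -> Tuple[str, str]:
        """Store the pick and return (preferred, rejected) for the training set."""
        if choice not in ("left", "right"):
            raise ValueError("choice must be 'left' or 'right'")
        self.choice = choice
        if choice == "left":
            return self.left, self.right
        return self.right, self.left


def make_task(item_id: str, a: str, b: str, rng: random.Random) -> ComparisonTask:
    """Randomize display order so position does not bias the judgment."""
    left, right = (a, b) if rng.random() < 0.5 else (b, a)
    return ComparisonTask(item_id, left, right)


task = make_task("ticket-48213", "Summary from model A", "Summary from model B",
                 random.Random(7))
preferred, rejected = task.record("left")
sampled_share = sum(in_review_sample(f"ticket-{n}") for n in range(10_000)) / 10_000
```

Hash-based sampling also makes the review pool reproducible: rerunning the job on the same traffic selects the same items.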

Step 3: Use layered guardrails, not just training

Human feedback improves behavior, but production systems still need checks.

A solid setup includes:

- Grounding requirement: every claim in the summary must be traceable to a cited or quoted source snippet (tracked internally, even if citations aren’t shown to the reader)

- Policy rules: forbid unsupported claims (pricing, legal commitments)

- Confidence flags: if the model isn’t sure, it asks for clarification

- Fallback behavior: route edge cases to a human

This is how you get to summaries that are safe enough for customer-facing workflows.
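These four layers can be chained into a single check that runs before a summary reaches a customer. The Python sketch below is deliberately simple and rests on several stated assumptions. Grounding is approximated by word overlap between each summary sentence and the source; real systems more often use citations or an entailment model. The risky-claim patterns are hypothetical examples of a pricing and commitments policy, and `model_confidence` is assumed to come from somewhere upstream.

```python
import re
from dataclasses import dataclass, field
from typing import List, Set

# Hypothetical policy: prices and commitments may appear only if the source states them.
RISKY_PATTERNS = [
    r"\$\s?\d[\d,]*(?:\.\d+)?",  # dollar amounts
    r"\bwe will\b",              # promises
    r"\bguarantee[sd]?\b",       # guarantees
]


@dataclass
class Verdict:
    action: str  # "publish", "clarify", or "human_review"
    reasons: List[str] = field(default_factory=list)


def _words(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9$]+", text.lower()))


def ungrounded_sentences(summary: str, source: str, min_overlap: float = 0.6) -> List[str]:
    """Sentences whose words mostly do not appear in the source (crude grounding check)."""
    source_words = _words(source)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", summary.strip()):
        words = _words(sentence)
        if words and len(words & source_words) / len(words) < min_overlap:
            flagged.append(sentence)
    return flagged


def policy_violations(summary: str, source: str) -> List[str]:
    """Risky phrases in the summary that the source never says."""
    hits = []
    for pattern in RISKY_PATTERNS:
        for match in re.finditer(pattern, summary, flags=re.IGNORECASE):
            if match.group(0).lower() not in source.lower():
                hits.append(match.group(0))
    return hits


def check_summary(summary: str, source: str, model_confidence: float,
                  min_confidence: float = 0.7) -> Verdict:
    reasons = []
    ungrounded = ungrounded_sentences(summary, source)
    if ungrounded:
        reasons.append(f"ungrounded: {ungrounded}")
    violations = policy_violations(summary, source)
    if violations:
        reasons.append(f"policy: {violations}")
    if reasons:
        return Verdict("human_review", reasons)  # fallback: a person decides
    if model_confidence < min_confidence:
        return Verdict("clarify", ["low model confidence"])  # ask before answering
    return Verdict("publish")


source = ("Customer reports login failures on the mobile app since version 4.2. "
          "Clearing the cache did not help.")
good = "Login failures on the mobile app since version 4.2. Clearing the cache did not help."
risky = "Login failures on the mobile app. We will refund $50 this week."
```

Note the ordering: grounding and policy failures go straight to a person, and only a clean but low-confidence summary triggers a clarifying question.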

Step 4: Measure what matters (beyond “looks good”)

If you want reliable AI summarization, measure outcomes.

Metrics that actually correlate with business value:

- Deflection rate (support): fewer follow-up questions after summaries

- Handle time (support/sales): minutes saved per case/call

- Escalation rate: fewer “summary caused confusion” escalations

- Compliance errors: policy violations caught per 1,000 summaries

- Edit distance (marketing): how much human editors change AI output

One practical benchmark: if humans rewrite more than ~30–40% of summaries, your rubric, prompts, or training loop needs work.
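The edit-distance metric and the rewrite benchmark can both be computed from (AI draft, published version) pairs. This minimal Python sketch uses the standard library's `difflib.SequenceMatcher` on word sequences as a stand-in for edit distance. The 50% cutoff for calling a summary “rewritten” and the 35% alert line (the midpoint of the ~30–40% rule of thumb) are assumptions to tune against your own data.

```python
from difflib import SequenceMatcher
from typing import List, Tuple

REWRITE_CUTOFF = 0.5  # assumption: more than half the words changed = "rewritten"
ALERT_SHARE = 0.35    # assumption: midpoint of the ~30-40% rule of thumb


def change_fraction(ai_text: str, final_text: str) -> float:
    """0.0 = published as-is, 1.0 = nothing from the AI draft survived."""
    matcher = SequenceMatcher(None, ai_text.split(), final_text.split(), autojunk=False)
    return 1.0 - matcher.ratio()


def rewrite_share(pairs: List[Tuple[str, str]]) -> float:
    """Share of summaries that editors substantially rewrote."""
    rewritten = sum(change_fraction(ai, final) > REWRITE_CUTOFF for ai, final in pairs)
    return rewritten / len(pairs)


def per_thousand(count: int, total: int) -> float:
    """Normalize a count, e.g. compliance errors per 1,000 summaries."""
    return 1000 * count / total


reviewed = [
    ("Customer cannot log in on mobile. Next step: reset token.",
     "Customer cannot log in on mobile. Next step: reset token."),
    ("Issue resolved after cache clear.",
     "Issue is still open; cache clear did not help."),
    ("Refund approved for March invoice.",
     "Refund approved for the March invoice."),
    ("Upgrade to Pro for SSO.",
     "SSO requires an Enterprise plan."),
]
share = rewrite_share(reviewed)
needs_work = share > ALERT_SHARE
compliance_rate = per_thousand(3, 1200)
```

In this toy set, editors substantially rewrote two of the four drafts. That 50% share would trip the alert. Separately, 3 compliance errors in 1,200 summaries works out to 2.5 per 1,000.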

People also ask: common questions about human feedback summaries

Is human feedback just manual labeling at scale?

No. Labeling is often “what is the right answer?” Human feedback is frequently “which answer is better?” Pairwise preference data is faster to collect and closer to real editorial decisions.

Does this eliminate hallucinations?

It reduces them, but it doesn’t magically delete the risk. The strongest results come from combining preference training with grounding, retrieval from trusted sources, and strict policies.

Can small teams do this, or is it only for big tech?

Small teams can absolutely do it. Start with a narrow workflow (support recaps or webinar summaries), collect feedback from 3–10 reviewers, and tune your system based on real errors.

What this means for 2026 planning in U.S. digital services

Late December is when teams reset budgets, tools, and operating rhythms. If AI is on your 2026 roadmap, summarization with human feedback is one of the safest high-ROI entries because it targets a universal pain: too much text, not enough clarity.

It also fits how modern U.S. digital services are built: lots of SaaS tools, lots of customer touchpoints, and a constant need to communicate changes quickly without losing accuracy.

If you’re evaluating AI for marketing automation, customer communication, or scalable content creation, ask one question early:

Where will the human feedback come from, and how will it shape the model’s behavior over time?

Teams that can answer that have a real advantage—because they’re not just generating more content. They’re building systems that learn what “good” means for their customers.