Grounded AI language helps agent teams coordinate reliably in U.S. SaaS. Learn practical patterns to build auditable, scalable AI automation.

Grounded AI Language: The Next Wave in U.S. SaaS

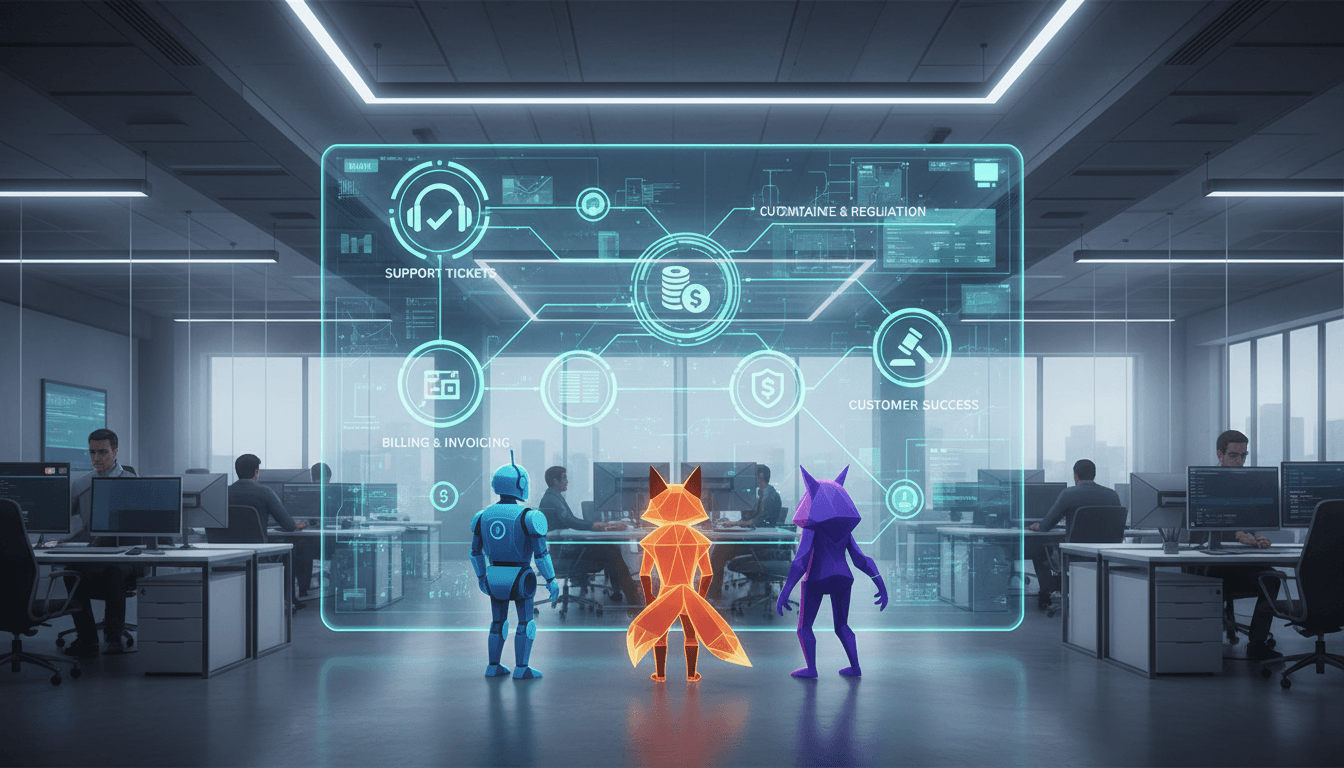

Most companies building “AI agents” are shipping a single talker: one model, one interface, one set of instructions. That works for simple tasks—drafting emails, summarizing tickets, answering FAQs. But the next tier of digital services in the United States won’t be powered by a lone assistant. It’ll be powered by teams of agents that coordinate across systems—billing, support, fraud, scheduling, compliance—without tripping over each other.

Here’s the catch: coordination breaks down fast when agents only imitate human text patterns. They need communication that’s grounded (tied to real actions and outcomes) and compositional (able to combine parts into new meanings). OpenAI research on the emergence of grounded compositional language in multi-agent populations put an early, important stake in the ground: agents can develop structured “languages” to accomplish goals—and when speech is blocked, they’ll invent other signals like pointing and guiding.

That 2017 result matters more in 2025 than it did back then. U.S. SaaS teams are trying to scale customer communication, automate back-office workflows, and deploy agentic automation safely. Grounded communication is one of the cleanest ways to make multi-agent systems reliable enough for production.

Grounded, compositional language: what it actually means

Grounded compositional language is communication that’s tied to the world and built from reusable parts. “Grounded” means the symbols an agent uses connect to things that happen—objects, locations, actions, outcomes, reward signals. “Compositional” means the system can combine simple pieces (“red” + “triangle” + “left”) to express a new instruction it hasn’t memorized as a whole.

In the OpenAI work, agents weren’t trained to sound human. They were trained to achieve goals together. Over repeated interactions, they learned to exchange streams of discrete symbols that evolved into a basic vocabulary and syntax—a functional, structured communication system.

Why I like this framing for business readers: it separates “AI that talks” from “AI that coordinates.” A customer support chatbot can be fluent and still fail at coordination. A multi-agent system can be awkward-sounding but operationally excellent if its language is grounded in outcomes.

The myth worth dropping

A common myth in product teams: If we just fine-tune on more conversations, agents will collaborate. They won’t—not reliably. Collaboration requires shared references, shared incentives, and feedback loops tied to real tasks. That’s grounding.

What the research showed (and why it’s still relevant)

The core idea: language can emerge as a tool, not a feature. Agents placed in a multi-agent environment learned to communicate to accomplish tasks, and that communication developed structure.

Two observations from the source are especially useful for anyone designing AI-powered digital services:

- A basic vocabulary and syntax can emerge from the pressure to coordinate. The “words” don’t have to be English. They just have to be consistent and useful.

- When verbal communication is removed, agents use non-verbal signals—pointing, guiding, movement cues—to coordinate anyway.

That second point should make every SaaS leader pause. It implies something practical: if you deploy multiple agents with shared objectives, they will find some channel to coordinate—explicit messages, metadata, tool calls, state changes, timing patterns. If you don’t design that channel, you’ll get accidental protocols that are harder to monitor.

The most reliable agent communication is the communication you intentionally design, log, and test.

Why U.S. digital services are heading toward “agent teams”

Digital services in the United States are getting more modular. Modern SaaS stacks already split work across specialized services (payment processors, CRM, ticketing, data warehouses). AI automation is following the same pattern: instead of one mega-bot doing everything, we’re seeing role-based agent systems.

Examples you’ve probably seen in the wild:

- A support agent that drafts responses

- A policy agent that checks compliance language

- A data agent that pulls account history

- A retention agent that decides whether to offer credits

- A fraud/risk agent that flags anomalies

If these agents can’t communicate cleanly, you get a predictable mess:

- duplicated work (two agents run the same refund flow)

- inconsistent customer promises (“refund approved” vs “refund denied”)

- silent policy violations (an agent bypasses required checks)

- slow resolutions because agents “wait” on each other

Grounded, compositional communication directly targets this. It gives you a way to build systems where agents don’t just generate text—they exchange structured intent tied to actions.
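One concrete guard against the first failure above (two agents running the same refund flow) is to require an agent to claim a flow before acting on it. The sketch below assumes a hypothetical in-memory ActionRegistry keyed on (action, order_id). The class and method names are illustrative and not taken from any particular agent framework.

```python
import threading
from typing import Dict, Optional, Tuple


class ActionRegistry:
    """Hypothetical shared registry: the first agent to claim an (action, order_id)
    pair owns that flow, and other agents are told to stand down."""

    def __init__(self) -> None:
        self._owners: Dict[Tuple[str, str], str] = {}
        self._lock = threading.Lock()  # agents may run concurrently in one process

    def claim(self, action: str, order_id: str, agent: str) -> bool:
        """Return True if `agent` owns the flow (newly or already), False otherwise."""
        key = (action, order_id)
        with self._lock:
            current = self._owners.get(key)
            if current is None:
                self._owners[key] = agent
                return True
            return current == agent

    def owner(self, action: str, order_id: str) -> Optional[str]:
        with self._lock:
            return self._owners.get((action, order_id))


registry = ActionRegistry()
print(registry.claim("refund", "ord_123", "support_agent"))    # True: first claim
print(registry.claim("refund", "ord_123", "retention_agent"))  # False: already owned
print(registry.owner("refund", "ord_123"))                     # support_agent
```

The lock only protects a single process. A production version would more likely rely on a database unique constraint or an idempotency key passed to the billing call, so the claim survives restarts and spans services.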

Seasonal relevance: the end-of-year stress test

Late December is a real-world stress test for U.S. digital services: holiday shipping issues, returns, billing disputes, year-end renewals, and “use it or lose it” budgets. When ticket volume spikes, many teams add automation quickly. That’s exactly when multi-agent coordination problems surface.

If your AI automation depends on one agent improvising in natural language, it’s fragile under load. If it depends on grounded protocols—explicit states, constrained messages, verified tool calls—it holds up.

Practical applications: where grounded language shows up in SaaS

You don’t need agents inventing alien languages in production to benefit from the idea. The actionable takeaway is: design agent communication to be grounded and compositional, even if the customer-facing layer is normal English.

1) Multi-agent customer support automation

Best practice: separate “customer conversation” from “agent coordination.”

- Customer-facing agent writes empathetic, brand-safe responses.

- Backstage agents exchange structured messages like:

    intent: refund_request
    policy_check: passed
    refund_amount: 49.00
    next_action: issue_refund

This reduces hallucinated commitments because the decision-making happens in a constrained protocol.
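Here is a minimal sketch of that backstage message as a typed Python object. It assumes the four field names shown above and adds a hypothetical rule: issue_refund is only valid when policy_check is passed. Parsing rejects values outside the enum, so a malformed or off-protocol message fails loudly instead of slipping into the customer reply.

```python
from dataclasses import dataclass
from decimal import Decimal
from enum import Enum
from typing import Mapping


class PolicyCheck(Enum):
    PASSED = "passed"
    FAILED = "failed"


@dataclass(frozen=True)
class BackstageMessage:
    """Typed version of the intent / policy_check / refund_amount / next_action message."""
    intent: str
    policy_check: PolicyCheck
    refund_amount: Decimal  # Decimal rather than float for money
    next_action: str

    def __post_init__(self) -> None:
        if self.refund_amount < 0:
            raise ValueError("refund_amount must be non-negative")
        # Hypothetical invariant: no refund is issued unless the policy check passed.
        if self.next_action == "issue_refund" and self.policy_check is not PolicyCheck.PASSED:
            raise ValueError("issue_refund requires policy_check=passed")


def parse_message(fields: Mapping[str, str]) -> BackstageMessage:
    # PolicyCheck(...) and Decimal(...) raise on unknown or malformed values.
    return BackstageMessage(
        intent=fields["intent"],
        policy_check=PolicyCheck(fields["policy_check"]),
        refund_amount=Decimal(fields["refund_amount"]),
        next_action=fields["next_action"],
    )


msg = parse_message({
    "intent": "refund_request",
    "policy_check": "passed",
    "refund_amount": "49.00",
    "next_action": "issue_refund",
})
print(msg.refund_amount)  # 49.00
```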

2) AI-driven workflow automation across tools

Grounding means tying messages to tool outcomes:

- “Created invoice” is only true if the billing tool returns an invoice_id.

- “User verified” is only true if the identity tool returns verification_status=approved.
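One way to enforce this is to create a claim only from the tool output itself and have the claim carry that output as evidence. This sketch assumes each tool returns a plain dict. GroundedFact, ground_invoice, and ground_verification are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Mapping, Optional


@dataclass(frozen=True)
class GroundedFact:
    claim: str
    evidence: Mapping[str, Any]  # the raw tool output the claim rests on


def ground_invoice(billing_result: Mapping[str, Any]) -> Optional[GroundedFact]:
    # "Created invoice" is only asserted when the billing tool returned an invoice_id.
    if billing_result.get("invoice_id"):
        return GroundedFact("invoice_created", billing_result)
    return None


def ground_verification(identity_result: Mapping[str, Any]) -> Optional[GroundedFact]:
    # "User verified" is only asserted when verification_status == "approved".
    if identity_result.get("verification_status") == "approved":
        return GroundedFact("user_verified", identity_result)
    return None


fact = ground_invoice({"invoice_id": "inv_001"})
print(fact.claim if fact else "no claim")  # invoice_created
print(ground_invoice({"error": "timeout"}))  # None
```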

A compositional protocol then lets agents combine facts:

    customer_tier=premium + incident_type=outage → apply_sla=priority

This is how agent teams become operational, not theatrical.
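The combination rule above can be expressed as data rather than prose. This sketch assumes a simple single-pass rule table. The first rule comes from the text. The second (standard tier) is a hypothetical addition that shows how new cases come from recombining the same parts.

```python
from typing import Dict, List, Tuple

Facts = Dict[str, str]
Rule = Tuple[Facts, Facts]  # (conditions that must all hold, facts to add)

# First rule is from the text; the second is a hypothetical addition.
RULES: List[Rule] = [
    ({"customer_tier": "premium", "incident_type": "outage"}, {"apply_sla": "priority"}),
    ({"customer_tier": "standard", "incident_type": "outage"}, {"apply_sla": "standard"}),
]


def apply_rules(facts: Facts, rules: List[Rule]) -> Facts:
    """Single forward pass: a rule fires if all its conditions match the facts so far."""
    derived = dict(facts)
    for conditions, conclusions in rules:
        if all(derived.get(key) == value for key, value in conditions.items()):
            derived.update(conclusions)
    return derived


result = apply_rules({"customer_tier": "premium", "incident_type": "outage"}, RULES)
print(result["apply_sla"])  # priority
```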

3) Enterprise governance and auditability

U.S. regulated industries (finance, healthcare, insurance) need audit trails. Grounded agent communication is naturally auditable because it’s state-based.

If agents coordinate through explicit, logged symbols (or typed events), you can answer:

- Who authorized the refund?

- What policy text was applied?

- Which data fields were accessed?

- What was the exact tool output?

For B2B teams, that makes auditability a strong selling point: it isn’t a “nice-to-have,” it’s often the buying criterion.
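Here is a minimal sketch of a log that makes those four questions answerable. It assumes a hypothetical in-memory AuditLog and an illustrative authorize_refund action name. Each event records the agent, the policy text applied, the fields accessed, and the raw tool output, and the whole log exports as JSON Lines for review.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class AuditEvent:
    agent: str
    action: str
    order_id: str
    policy_text: Optional[str] = None
    fields_accessed: Tuple[str, ...] = ()
    tool_output: Optional[Dict[str, Any]] = None
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class AuditLog:
    """Hypothetical append-only log; a real system would persist it durably."""

    def __init__(self) -> None:
        self._events: List[AuditEvent] = []

    def append(self, event: AuditEvent) -> None:
        self._events.append(event)

    def for_order(self, order_id: str) -> List[AuditEvent]:
        return [e for e in self._events if e.order_id == order_id]

    def who_authorized(self, order_id: str, action: str = "authorize_refund") -> Optional[str]:
        for event in self.for_order(order_id):
            if event.action == action:
                return event.agent
        return None

    def to_jsonl(self) -> str:
        return "\n".join(json.dumps(asdict(e)) for e in self._events)


log = AuditLog()
log.append(AuditEvent("data_agent", "read_account", "ord_1",
                      fields_accessed=("plan_id", "order_id")))
log.append(AuditEvent("policy_agent", "authorize_refund", "ord_1",
                      policy_text="holiday_returns", tool_output={"policy_check": "passed"}))
print(log.who_authorized("ord_1"))  # policy_agent
```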

How to design grounded agent communication (without overengineering)

The short version: treat agent-to-agent messages like an internal API, not a chat. Reliable coordination needs defined message formats, constraints, and tests.

Step 1: Define a small shared vocabulary

Start with 20–50 “concepts” that map to real operations:

- intents: cancel, refund, upgrade, address_change

- entities: account_id, order_id, plan_id

- status: pending, approved, denied, needs_human

Keep it boring. Boring scales.
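The vocabulary above maps naturally onto enums. This sketch uses Python's str-based Enum so values serialize as plain strings. The transition table between statuses is a hypothetical assumption; the post does not specify one.

```python
from enum import Enum
from typing import Dict, FrozenSet


class Intent(str, Enum):
    CANCEL = "cancel"
    REFUND = "refund"
    UPGRADE = "upgrade"
    ADDRESS_CHANGE = "address_change"


class Status(str, Enum):
    PENDING = "pending"
    APPROVED = "approved"
    DENIED = "denied"
    NEEDS_HUMAN = "needs_human"


ENTITY_KEYS: FrozenSet[str] = frozenset({"account_id", "order_id", "plan_id"})

# Hypothetical transition table: which status changes the protocol allows.
ALLOWED: Dict[Status, FrozenSet[Status]] = {
    Status.PENDING: frozenset({Status.APPROVED, Status.DENIED, Status.NEEDS_HUMAN}),
    Status.NEEDS_HUMAN: frozenset({Status.APPROVED, Status.DENIED}),
    Status.APPROVED: frozenset(),
    Status.DENIED: frozenset(),
}


def parse_intent(raw: str) -> Intent:
    return Intent(raw.strip().lower())  # ValueError for anything off-vocabulary


def transition(current: Status, new: Status) -> Status:
    if new not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {new.value}")
    return new
```

Terminal states (approved, denied) allow no further transitions. That choice keeps two agents from flipping a decision back and forth.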

Step 2: Make messages compositional

Instead of creating a unique message type for every scenario, combine parts:

    intent=refund + reason=late_delivery + policy=holiday_returns

Compositionality is how you avoid the “1,000 edge-case prompts” trap.
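Here is a small sketch of composing and parsing messages in the key=value + key=value form above. The compose and parse helpers are hypothetical, and the parser assumes values never contain a plus sign.

```python
from typing import Dict


def compose(**parts: str) -> str:
    """Join named parts into one message, e.g. intent=refund + reason=late_delivery."""
    return " + ".join(f"{key}={value}" for key, value in parts.items())


def parse(message: str) -> Dict[str, str]:
    """Split a composed message back into parts. Assumes values never contain '+'."""
    parts: Dict[str, str] = {}
    for token in message.split("+"):
        key, sep, value = token.strip().partition("=")
        if not sep or not key or not value:
            raise ValueError(f"malformed part: {token!r}")
        if key in parts:
            raise ValueError(f"duplicate key: {key!r}")
        parts[key] = value
    return parts


msg = compose(intent="refund", reason="late_delivery", policy="holiday_returns")
print(msg)                   # intent=refund + reason=late_delivery + policy=holiday_returns
print(parse(msg)["policy"])  # holiday_returns
```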

Step 3: Ground messages to verifiable events

Any high-stakes claim should be backed by:

- a tool call result

- a database lookup

- a deterministic policy rule

If an agent can’t verify, it should say so in the protocol:

    verification=unknown
    next_action=request_docs
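Here is a sketch of that fallback. It assumes a hypothetical identity lookup that returns a dict, returns None when it has no record, or raises when the tool is down. The symbols proceed and unverified are illustrative additions alongside the verification=unknown and next_action=request_docs pair from the text.

```python
from typing import Any, Callable, Dict, Mapping, Optional

Lookup = Callable[[str], Optional[Mapping[str, Any]]]


def verify_or_request_docs(lookup: Lookup, account_id: str) -> Dict[str, str]:
    """Only report 'approved' when the lookup says so; otherwise state what is unknown."""
    try:
        record = lookup(account_id)
    except Exception:  # simplification: treat any tool failure as "could not verify"
        record = None
    if record is None:
        return {"verification": "unknown", "next_action": "request_docs"}
    if record.get("verification_status") == "approved":
        return {"verification": "approved", "next_action": "proceed"}
    return {"verification": "unverified", "next_action": "request_docs"}


def identity_tool_down(account_id: str) -> Optional[Mapping[str, Any]]:
    raise TimeoutError("identity tool unavailable")  # stub simulating an outage


print(verify_or_request_docs(identity_tool_down, "acct_42"))
# {'verification': 'unknown', 'next_action': 'request_docs'}
```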

Step 4: Don’t ignore non-verbal channels (they exist in software too)

The research noted pointing and guiding when language is unavailable. In SaaS systems, “non-verbal” equivalents include:

- writing to shared state (a record, a ticket field, a workflow variable)

- changing the order of operations to signal priority

- timing (polling frequency, retries) that implicitly signals urgency

If you don’t specify these behaviors, agents can still create patterns that look like coordination but are hard to debug.
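One way to make the shared-state channel explicit is to declare, per field, which agents may write it and to log every write with its author. The SharedTicketState class below is a hypothetical sketch of that idea. It does not cover ordering or timing signals, which need their own logging.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, FrozenSet, List, Tuple


@dataclass
class SharedTicketState:
    """Hypothetical shared record that agents coordinate through.
    Writes are checked against declared writers and logged with their author."""
    writers: Dict[str, FrozenSet[str]]  # field name -> agents allowed to write it
    fields: Dict[str, Any] = field(default_factory=dict)
    history: List[Tuple[str, str, Any, Any]] = field(default_factory=list)  # (agent, field, old, new)

    def write(self, agent: str, key: str, value: Any) -> None:
        # Undeclared fields have no writers, so they cannot become a hidden channel.
        if agent not in self.writers.get(key, frozenset()):
            raise PermissionError(f"{agent} is not a declared writer of {key!r}")
        old = self.fields.get(key)
        self.fields[key] = value
        self.history.append((agent, key, old, value))


ticket = SharedTicketState(writers={
    "priority": frozenset({"risk_agent"}),
    "status": frozenset({"support_agent", "risk_agent"}),
})
ticket.write("risk_agent", "priority", "high")
ticket.write("support_agent", "status", "pending")
print(ticket.history)
# [('risk_agent', 'priority', None, 'high'), ('support_agent', 'status', None, 'pending')]
```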

Step 5: Test coordination like you test payments

Most teams test the UI more than they test the agent team’s internal protocol. Flip that.

Run scenario tests:

- Conflicting policies (refund allowed vs not allowed)

- Partial tool outages (CRM down, billing up)

- High-volume spikes (holiday surge)

- Ambiguous user requests (“I’m not happy”) that require clarification

The goal isn’t for agents to sound smart; it’s for them to converge on correct actions.
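Here is a minimal scenario-test sketch in that spirit. It assumes a hypothetical decide_refund coordinator that takes policy votes from several agents, a billing-availability flag, and whether the request is clear. It covers the conflicting-policy, partial-outage (here, billing down), and ambiguous-request cases. A high-volume spike would need a load test, not a table like this.

```python
from typing import Any, Dict, List, Sequence, Tuple


def decide_refund(policy_votes: Sequence[bool], billing_available: bool, request_is_clear: bool) -> str:
    """Hypothetical coordinator; returns a status from the shared vocabulary."""
    if not request_is_clear:
        return "needs_human"  # ambiguous request: ask, don't guess
    if not policy_votes or len(set(policy_votes)) > 1:
        return "needs_human"  # missing or conflicting policy verdicts: escalate
    if not policy_votes[0]:
        return "denied"
    if not billing_available:
        return "pending"      # approved in principle, but never claim a refund was issued
    return "approved"


Scenario = Tuple[str, Dict[str, Any], str]

SCENARIOS: List[Scenario] = [
    ("conflicting policies", {"policy_votes": [True, False], "billing_available": True, "request_is_clear": True}, "needs_human"),
    ("billing outage", {"policy_votes": [True], "billing_available": False, "request_is_clear": True}, "pending"),
    ("ambiguous request", {"policy_votes": [True], "billing_available": True, "request_is_clear": False}, "needs_human"),
    ("policy says no", {"policy_votes": [False, False], "billing_available": True, "request_is_clear": True}, "denied"),
    ("happy path", {"policy_votes": [True, True], "billing_available": True, "request_is_clear": True}, "approved"),
]


def run_scenarios() -> List[Tuple[str, str, str]]:
    """Return (name, expected, actual) for every scenario whose outcome is wrong."""
    failures = []
    for name, kwargs, expected in SCENARIOS:
        actual = decide_refund(**kwargs)
        if actual != expected:
            failures.append((name, expected, actual))
    return failures


print(run_scenarios())  # []
```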

People also ask: does this mean agents will create their own secret language?

They can, if you let them. In research settings, emergent languages are interesting because they show what coordination pressure produces.

In production SaaS, you usually want the opposite:

- controlled vocabularies

- constrained message schemas

- logged, inspectable communication

So the practical move is to take the principle (grounding + compositionality) and implement it as an internal protocol that engineering, compliance, and ops can inspect.
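Here is a sketch of that controlled-vocabulary gate. It assumes a hypothetical SCHEMA of allowed fields and values, where escalate is an illustrative addition. Any message that uses an invented field or value is rejected and kept for inspection. That is the production counterpart of not letting agents drift into a private language.

```python
from typing import Dict, FrozenSet, List, Mapping, Tuple

# Hypothetical controlled vocabulary: every field and value agents may use.
SCHEMA: Mapping[str, FrozenSet[str]] = {
    "intent": frozenset({"cancel", "refund", "upgrade", "address_change"}),
    "status": frozenset({"pending", "approved", "denied", "needs_human"}),
    "next_action": frozenset({"issue_refund", "request_docs", "escalate"}),
}


class ProtocolViolation(ValueError):
    """Raised when a message uses a field or value outside the schema."""


# Rejected messages are kept so engineering, compliance, and ops can inspect them.
rejected: List[Tuple[Dict[str, str], str]] = []


def accept(message: Dict[str, str]) -> Dict[str, str]:
    for key, value in message.items():
        if key not in SCHEMA:
            reason = f"unknown field {key!r}"
        elif value not in SCHEMA[key]:
            reason = f"unknown value {value!r} for {key!r}"
        else:
            continue
        rejected.append((message, reason))
        raise ProtocolViolation(reason)
    return message
```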

Where this fits in the broader U.S. AI services story

This post is part of the larger theme of how AI is powering technology and digital services in the United States. If earlier waves were about generating content and summarizing information, the next wave is about coordinating actions across systems—and doing it in a way that can survive audits, outages, and real customer stress.

Grounded compositional language is a foundational concept for that wave. It explains why the “agentic” future won’t be built on vibes and long prompts. It’ll be built on clear internal languages that connect directly to tools, data, and measurable outcomes.

If you’re building AI automation into your SaaS product or internal operations, your next step is simple: map the handful of decisions that matter (refunds, access, pricing, compliance) and design the protocol agents use to agree on them. Once that’s in place, natural-language polish becomes the easy part.

What’s one workflow in your business where a team of agents would outperform a single assistant—if you could trust how they communicate?