Quantitative analysis makes decoder-based generative AI reliable in SaaS. Learn the metrics and test loops U.S. teams use to ship safely.

Quantifying Decoder Models: What Matters in 2025

Most teams using generative AI in production still evaluate it like it’s a demo: a few prompts, a gut-check, and maybe a thumbs-up/down from someone on the marketing team. That approach breaks the moment you scale—more channels, more customer segments, more compliance rules, more ways for a model to be “mostly fine” while quietly hurting conversion or support quality.

Decoder-based generative models, the family of systems behind modern text generation, became practical because researchers learned to measure them. Not just “does it sound good,” but: how predictable is it, what errors does it make, and what happens when you change settings such as the prompt or the sampling temperature? That quantitative mindset, rooted in foundational research from the mid-2010s, is a big reason U.S. SaaS products can now trust generative AI for real work: email drafting, chat support automation, knowledge-base answers, sales enablement, and internal ops.

This post translates the idea of quantitative analysis of decoder-based generative models into what you actually need in 2025: evaluation metrics that predict business outcomes, practical test designs, and a measurement stack you can run inside a U.S.-based digital service without turning your engineers into full-time researchers.

Decoder-based generative models: the workhorse behind U.S. SaaS

Decoder models are the engines that predict the next token (word piece) given a context, then repeat that step to produce a full response. That simple mechanism is why they’re so widely useful: the same model can write an onboarding email, summarize a call transcript, or draft a support response.

The reason this matters for the “How AI Is Powering Technology and Digital Services in the United States” series is practical: most AI-powered content creation and customer communication automation today is decoder-first. Even when products advertise “AI agents” or “copilots,” the core generation step is still usually decoder-based.

Why decoder models fit digital services so well

Decoder models map neatly to the highest-volume workflows in U.S. tech companies:

- Marketing operations: subject lines, landing-page variants, ad copy, personalization tokens

- Customer support: first-draft replies, macro generation, ticket summarization

- Sales: outbound sequences, account research summaries, objection handling

- Product & engineering: release notes, incident summaries, internal Q&A

What’s changed since the early research era isn’t the basic idea of decoding—it’s the systems discipline around evaluation. You can’t run AI at scale without measurement that’s consistent, repeatable, and tied to risk.

Quantitative analysis: the difference between “cool” and “reliable”

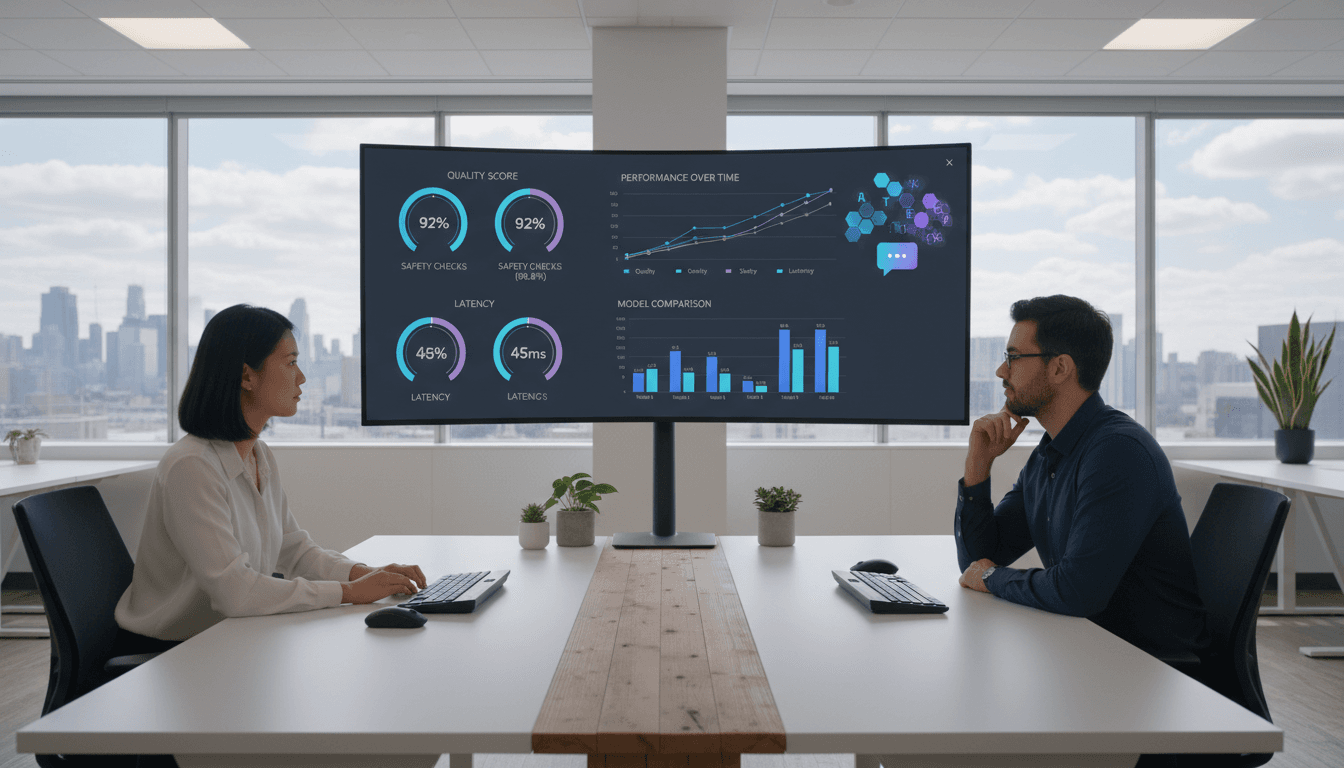

Quantitative analysis means you define success in numbers, then test models under conditions that resemble production. If you’re building AI into a SaaS platform, your evaluation has to answer three questions with evidence:

- Quality: Are outputs accurate, helpful, and on-brand?

- Stability: Do results remain consistent across time, prompts, and user segments?

- Safety & compliance: Does the model avoid disallowed content and follow policies?

In early decoder-model research, one of the key ideas was to move from anecdotal samples to aggregate measures that track model behavior across many examples. That principle is still the backbone of modern evaluation.

A useful one-liner for teams: If you can’t measure it weekly, you can’t ship it confidently.

The core metric family: likelihood-based evaluation (and its limits)

The classic quantitative approach for decoder models is to measure how well the model predicts real text. This is often expressed via log-likelihood and summarized as perplexity.

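- Log-likelihood: The log of the probability the model assigns to the actual next token, summed or averaged over a text; higher is better.

- Perplexity: The exponential of the average negative log-likelihood per token, which makes scores easier to compare; lower is better.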
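To make the relationship between the two metrics concrete, here is a minimal sketch that turns per-token log-probabilities into perplexity. The input values are hypothetical; in practice they would come from whatever scoring output your model provider exposes.

```python
import math

def perplexity(token_logprobs):
    """Perplexity = exp(-mean natural-log probability) over the observed tokens."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)

# Hypothetical log-probabilities the model assigned to each actual next token.
confident = [math.log(0.5)] * 4   # model gave every true token 50% probability
uncertain = [math.log(0.1)] * 4   # model gave every true token 10% probability

print(perplexity(confident))  # about 2
print(perplexity(uncertain))  # about 10
```

A perplexity of about 10 can be read as the model being roughly as unsure as if it were choosing uniformly among 10 tokens at each step.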

Perplexity is great for answering: Is the model better at modeling language? But in SaaS, you usually care about: Is the output correct and useful for this workflow? A model can have improved perplexity and still:

- hallucinate citations

- miss required fields (like refund policy constraints)

- ignore tone guidelines

- fail on edge cases (angry customers, regulated categories)

So treat likelihood metrics as a health signal, not a launch gate.

What to measure in 2025: metrics tied to business outcomes

For U.S. digital services, evaluation works best when it mixes automated scoring and targeted human review. Here’s a practical metric set I’ve seen work across content creation and customer support.

1) Task success rate (TSR)

Task success rate is the percentage of outputs that meet a workflow’s definition of “done.” You define a checklist and score pass/fail.

Examples:

- Support reply includes: apology + policy-accurate answer + next step + correct tone

- Marketing email includes: offer terms + CTA + compliant disclaimer + personalization

Why it’s powerful: it’s binary and operational, so it maps to throughput and QA cost.
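A checklist like this can be scored with very little code. The sketch below is illustrative only: the three checks and the 30-day refund window are assumptions standing in for your own rubric, and real checks would usually be stricter than substring matching.

```python
def task_success_rate(outputs, checks):
    """Share of outputs that pass every check (all-or-nothing per output)."""
    if not outputs:
        return 0.0
    passed = sum(1 for text in outputs if all(check(text) for check in checks))
    return passed / len(outputs)

# Hypothetical checks for a support reply.
support_checks = [
    lambda t: "sorry" in t.lower(),       # apology present
    lambda t: "30 days" in t,             # states the (assumed) refund window
    lambda t: "next step" in t.lower(),   # tells the customer what to do next
]

replies = [
    "Sorry about that. Refunds are available within 30 days. Next step: reply with your order ID.",
    "Refunds are available within 30 days.",
]

tsr = task_success_rate(replies, support_checks)
print(tsr)  # 0.5
```

Scoring all-or-nothing per output is a deliberate choice here: a reply that misses one required element still needs human rework, so partial credit would overstate throughput.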

2) Factuality and groundedness

If your model answers from a knowledge base, measure whether it stays grounded.

Practical scoring approaches:

- Citation/quote required: output must reference allowed snippets (even if you don’t show citations to users)

- Answerability gate: if the knowledge isn’t present, the model must say it can’t find it

This is where many “AI customer support automation” rollouts succeed or fail. If you don’t quantify groundedness, you’ll end up with polite nonsense at scale.
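Both scoring approaches can be sketched as simple checks. This example assumes the model returns its answer together with the quotes it relied on, and that the refusal wording is fixed; both are illustrative assumptions, not a standard interface.

```python
REFUSAL = "I can't find that in our documentation."  # assumed refusal wording

def is_grounded(quotes, allowed_snippets):
    """True when there is at least one quote and each appears in an allowed snippet."""
    return bool(quotes) and all(
        any(q.lower() in s.lower() for s in allowed_snippets) for q in quotes
    )

def passes_answerability_gate(answer, quotes, allowed_snippets):
    """With no retrieved snippets, the only passing answer is the refusal."""
    if not allowed_snippets:
        return answer.strip() == REFUSAL
    return is_grounded(quotes, allowed_snippets)

snippets = ["Refunds are available within 30 days of purchase."]

ok = passes_answerability_gate(
    "You can get a refund within 30 days.", ["within 30 days of purchase"], snippets
)
made_up = passes_answerability_gate("Refunds take 90 days.", ["within 90 days"], snippets)
guessed = passes_answerability_gate("Refunds take 90 days.", [], [])
refused = passes_answerability_gate(REFUSAL, [], [])
print(ok, made_up, guessed, refused)  # True False False True
```

Verbatim quote matching is strict and will miss paraphrased but correct answers, so treat it as a conservative first pass rather than a full factuality check.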

3) Brand voice consistency

Brand voice isn’t subjective if you define it.

Create a rubric with 5–10 rules such as:

- uses contractions (or doesn’t)

- avoids slang

- uses second person (“you”) more than first person (“we”)

- keeps sentences under X words for certain channels

Then sample outputs weekly and score them. If you can’t quantify voice, the model will drift as prompts evolve.
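Several of these rules can be checked mechanically. The following is a rough sketch: the slang list, the pronoun sets, and the 20-word limit are placeholder assumptions you would replace with your own style guide.

```python
import re

SLANG = {"gonna", "wanna", "kinda"}  # assumed banned words
MAX_WORDS = 20                       # assumed value for "X"

def voice_score(text):
    """Return (fraction of rules passed, per-rule results) for one output."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    second = sum(w in {"you", "your"} for w in words)
    first = sum(w in {"we", "our", "us"} for w in words)
    rules = {
        "avoids_slang": not SLANG.intersection(words),
        "second_person_dominant": second > first,
        "short_sentences": all(len(s.split()) <= MAX_WORDS for s in sentences),
    }
    return sum(rules.values()) / len(rules), rules

score, rules = voice_score("You can update your plan anytime. We are gonna help.")
print(rules)
```

In this example the slang rule fails while the other two pass, so the per-rule breakdown tells a reviewer exactly what to fix.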

4) Safety and policy adherence

This is where teams often overcomplicate things. Keep it simple:

- Disallowed content rate: percent of outputs violating policy

- Refusal correctness: of the cases the model should refuse, the percent it actually refuses (track refusals of legitimate requests separately)

- Escalation correctness: of the cases that require a human, the percent actually routed to one

For regulated industries (finance, healthcare), you also want disclaimer presence and prohibited claim rate.
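Assuming reviewers label each sampled output with a handful of boolean fields, the three core rates reduce to simple ratios. The record schema below is hypothetical.

```python
def safety_rates(records):
    """records: dicts with reviewer-assigned boolean fields (assumed schema)."""
    def rate(hits, pool):
        # None signals "no applicable cases" rather than a misleading 0%.
        return len(hits) / len(pool) if pool else None

    must_refuse = [r for r in records if r["should_refuse"]]
    must_escalate = [r for r in records if r["should_escalate"]]
    return {
        "disallowed_content_rate": rate([r for r in records if r["violates_policy"]], records),
        "refusal_correctness": rate([r for r in must_refuse if r["refused"]], must_refuse),
        "escalation_correctness": rate([r for r in must_escalate if r["escalated"]], must_escalate),
    }

def record(violates=False, should_refuse=False, refused=False,
           should_escalate=False, escalated=False):
    """Helper to build one labelled record."""
    return {"violates_policy": violates, "should_refuse": should_refuse,
            "refused": refused, "should_escalate": should_escalate,
            "escalated": escalated}

sample = [
    record(should_refuse=True, refused=True),
    record(violates=True, should_refuse=True),           # should have refused, did not
    record(should_escalate=True, escalated=True),
    record(),                                            # ordinary, compliant output
]
rates = safety_rates(sample)
print(rates)
```

Note that refusal and escalation correctness use only the cases where that action was required as the denominator, so a model cannot improve them by refusing everything without a separate over-refusal metric exposing it.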

5) Latency and cost per successful output

Your business doesn’t pay for tokens; it pays for completed work.

Track:

- p95 latency per channel (chat vs email vs internal)

- cost per success = total generation cost / number of outputs that pass TSR

This creates healthy pressure to optimize prompts, caching, and retrieval instead of just buying bigger models.
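Both numbers are easy to compute from logs you probably already have. This sketch uses the nearest-rank definition of the 95th percentile, which is one of several common conventions, and made-up figures for cost and volume.

```python
import math

def p95(latencies_ms):
    """Nearest-rank 95th percentile of a non-empty list of latencies."""
    if not latencies_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]

def cost_per_success(total_cost_usd, passed_count):
    """Total generation cost divided by the number of outputs that pass TSR."""
    if passed_count == 0:
        return math.inf
    return total_cost_usd / passed_count

# Hypothetical week: 100 chat responses, $12 total spend, 80 passed the TSR rubric.
chat_latencies = list(range(1, 101))  # 1 ms .. 100 ms, for illustration
print(p95(chat_latencies))            # 95
print(cost_per_success(12.0, 80))     # 0.15
```

Dividing by passing outputs rather than all outputs means a cheaper model that fails more often can come out more expensive per unit of completed work.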

How to run evaluation like a SaaS team (not a research lab)

The cleanest approach is an evaluation pipeline with three layers: offline tests, online monitoring, and incident response.

Offline: build a “golden set” that reflects production

Start with 200–1,000 real examples from your product workflows, scrubbed for privacy. Split them into buckets:

- common cases (the everyday stuff)

- edge cases (angry tone, incomplete info, contradictory info)

- policy cases (refunds, cancellations, restricted content)

Then freeze that dataset and re-run it every time you change:

- prompts

- retrieval settings

- model version

- policy rules

If you’re doing AI content creation for marketing, include seasonality. Late December matters: year-end promos, holiday support volume, and calendar-year transitions create predictable spikes in “policy questions” and “urgent tone.” Bake those into the set.
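Re-running a frozen set is most useful when results are compared per bucket, so a drop in policy cases is not hidden by strong performance on common cases. A minimal sketch follows; the bucket names mirror the ones above, and the 2-point tolerance is an arbitrary assumption.

```python
from collections import defaultdict

def pass_rate_by_bucket(results):
    """results: iterable of (bucket, passed) pairs from one run of the golden set."""
    totals, passes = defaultdict(int), defaultdict(int)
    for bucket, passed in results:
        totals[bucket] += 1
        passes[bucket] += int(passed)
    return {b: passes[b] / totals[b] for b in totals}

def regressions(baseline, candidate, tolerance=0.02):
    """Buckets where the candidate's pass rate dropped by more than `tolerance`."""
    return sorted(b for b in baseline if baseline[b] - candidate.get(b, 0.0) > tolerance)

# Hypothetical runs before and after a prompt change.
before = pass_rate_by_bucket([("common", True), ("common", True),
                              ("policy", True), ("policy", True)])
after = pass_rate_by_bucket([("common", True), ("common", True),
                             ("policy", True), ("policy", False)])

print(regressions(before, after))  # ['policy']
```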

Online: monitor drift with lightweight sampling

Once in production, don’t try to human-review everything. Do this instead:

- sample 0.5%–2% of outputs per workflow

- score them against TSR + groundedness + safety

- trend the results weekly

You’re looking for drift triggered by:

- new product features

- updated knowledge base articles

- prompt tweaks by well-meaning teams

- changes in user behavior (common during holiday seasons)
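One way to implement the sampling step is to hash each output's ID and keep those that fall below the target rate. This is a sketch under the assumption that every output has a stable string ID; the `in_sample` name and the 1% default are illustrative.

```python
import hashlib

def in_sample(output_id, rate=0.01):
    """Deterministically select roughly `rate` of outputs by hashing their ID."""
    digest = hashlib.sha256(output_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # value in [0, 1)
    return bucket < rate

# Hypothetical IDs; in production these would be your own output identifiers.
ids = [f"out-{i}" for i in range(10_000)]
selected = [i for i in ids if in_sample(i, rate=0.01)]
print(len(selected))  # roughly 100 of 10,000
```

Hash-based selection gives the same answer every time for a given ID, so reruns and different services agree on which outputs are in the review queue without sharing state.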

Incident response: treat model failures like product bugs

When the model fails, write it up like an incident:

- what happened (example outputs)

- user impact (tickets, churn risk, compliance exposure)

- root cause (prompt, retrieval, model change, data freshness)

- fix (guardrail, template, retrieval filter, escalation rule)

- regression test added to golden set

This is how quantitative analysis becomes a compounding asset: every failure improves your test suite.
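The write-up fields above map naturally onto a small record type, and the last step (adding a regression test) can be a one-line conversion. The field names and the golden-set entry format here are assumptions for illustration.

```python
from dataclasses import dataclass

@dataclass
class Incident:
    example_input: str
    bad_output: str
    user_impact: str
    root_cause: str  # e.g. "prompt", "retrieval", "model change", "data freshness"
    fix: str

def to_regression_case(incident, bucket="edge"):
    """Turn an incident into a golden-set entry so the failure is re-tested on every change."""
    return {
        "bucket": bucket,
        "input": incident.example_input,
        "must_not_equal": incident.bad_output,
        "note": f"{incident.root_cause}: {incident.fix}",
    }

incident = Incident(
    example_input="Can I get a refund after 45 days?",
    bad_output="Yes, refunds are always available.",
    user_impact="3 tickets reopened",
    root_cause="retrieval",
    fix="filter outdated policy articles",
)
case = to_regression_case(incident, bucket="policy")
print(case["note"])  # retrieval: filter outdated policy articles
```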

People also ask: practical questions teams hit fast

“Is perplexity useful for my product?”

Yes, but mostly for model comparison and regression detection, and only when the models share a tokenizer and are scored on the same text. It won’t tell you whether answers are correct for your knowledge base or compliant with your policies.

“Can I automate evaluation with another model?”

You can, and it’s often effective for first-pass scoring (tone checks, format adherence, rubric grading). But keep a recurring human audit so you don’t build a self-referential grading loop.
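A simple way to run that audit is to have humans re-score a subset of the cases the model grader scored, then measure agreement. The sketch below uses Cohen's kappa for pass/fail labels; treating it as the audit metric is a suggestion, not something the workflow requires.

```python
def cohens_kappa(human, judge):
    """Chance-corrected agreement between two equal-length lists of pass/fail booleans."""
    if len(human) != len(judge) or not human:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(human)
    observed = sum(h == j for h, j in zip(human, judge)) / n
    p_h = sum(human) / n  # human pass rate
    p_j = sum(judge) / n  # model-grader pass rate
    expected = p_h * p_j + (1 - p_h) * (1 - p_j)
    if expected == 1.0:
        # Both raters gave one identical label to everything; kappa is
        # undefined, so report full agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)

human_labels = [True, True, False, False]
print(cohens_kappa(human_labels, [True, True, False, False]))  # 1.0
print(cohens_kappa(human_labels, [True, False, True, False]))  # 0.0
```

A kappa near 0 means the grader agrees with humans no more often than chance would predict, which is a signal to revise the grading prompt before trusting its scores.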

“How many examples do I need?”

For a first reliable read, 200–300 well-chosen cases usually tell you more than 2,000 random ones. Coverage matters more than volume.
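Set size also determines how precisely you can read a pass rate. As a rough illustration, the sketch below computes a 95% Wilson score interval; the 240-of-300 figure is invented for the example.

```python
import math

def wilson_interval(passes, n, z=1.96):
    """Wilson score interval for a pass rate (z=1.96 gives about 95% coverage)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = passes / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half, center + half

low, high = wilson_interval(240, 300)  # hypothetical: 240 of 300 cases pass
print(round(low, 2), round(high, 2))   # about 0.75 and 0.84
```

With 300 cases, an observed 80% pass rate is consistent with a true rate anywhere from roughly 75% to 84%, so week-over-week changes of a point or two are within noise at this size.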

“What’s the biggest mistake teams make?”

They measure vibes, not workflows. If you can’t write down a pass/fail definition for a task, you’re not ready to automate it.

Where this shows up in U.S. tech right now

Here’s what this looks like in real SaaS patterns across the U.S. market:

- AI customer support: TSR includes “policy-accurate” and “escalates billing disputes.” Groundedness is enforced via retrieval gating.

- AI marketing automation: voice rubric + compliance checks are run before content is pushed into email and ads.

- AI knowledge assistants: answerability gates prevent confident guessing; success is measured by deflection rate (the share of questions resolved without a human agent) and audit accuracy.

These are not abstract research concerns. They’re operational necessities when a model speaks to customers at scale.

What to do next if you’re building with generative AI

If you’re shipping decoder-based generative AI inside a U.S. digital service, your next step isn’t “find a better model.” It’s to build a measurement loop that makes improvement predictable.

Start with this 30-day plan:

- Define 1 workflow (support reply, onboarding email, lead qualification chat).

- Write a TSR rubric with 5–10 pass/fail checks.

- Create a golden set of 300 cases (include edge and policy cases).

- Add groundedness rules if knowledge is involved.

- Launch with sampling and weekly scorecards.

The bigger point for this series—“How AI Is Powering Technology and Digital Services in the United States”—is that the most successful AI products aren’t the ones that generate the flashiest text. They’re the ones that can prove, with numbers, that the system behaves reliably under real customer pressure.

Where could your team benefit most from quantitative evaluation right now: marketing content, customer communication automation, or internal knowledge work?