AI source attribution helps U.S. digital platforms verify what users see and hear online. Learn practical provenance and content verification steps.

Know What You’re Seeing: AI Source Checks Online

Digital services in the U.S. have a trust problem, and it’s not subtle. A product review video can be synthetically voiced, a “CEO statement” can be generated in minutes, and a screenshot of a “breaking news” post can spread faster than any correction. The messy part isn’t that AI can create convincing media—it’s that most platforms and teams still can’t reliably answer a basic question: Where did this content come from, and has it been altered?

That question sits at the center of the next phase of AI in U.S. technology and digital services. It’s no longer enough for SaaS platforms to generate content, automate campaigns, and personalize customer messages. They also need source attribution—practical ways to label, verify, and track what people see and hear online so customers can trust the output.

The headline behind this topic, “Understanding the source of what we see and hear online,” points to a very real, very urgent industry shift: AI-powered provenance (proof of origin) is becoming a core feature for platforms that publish, distribute, or monetize digital content.

Digital provenance is the new baseline for trust

Answer first: If your platform can’t show where an image, audio clip, or paragraph originated—and what changed along the way—you’re asking users to rely on vibes. And vibes don’t scale.

“Provenance” sounds academic, but it’s practical. Provenance is the chain of evidence for a piece of media:

- Who created it (or which system generated it)

- When it was created

- Which tool edited it

- Whether it was exported, compressed, resized, re-encoded, or translated

- Whether the current version is identical to the original
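To make the list above concrete, here is a minimal Python sketch of a provenance record. The `ProvenanceRecord` class, its field names, and the tool name are hypothetical. A bare SHA-256 hash only answers the last question (is this version byte-identical to the original?). It says nothing trustworthy about who created the content until the record is signed.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


def sha256_hex(data: bytes) -> str:
    """Digest of the exact bytes; changing even one byte changes the result."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceRecord:
    # Hypothetical schema mirroring the provenance questions above.
    creator: str            # person or generating system
    created_at: datetime    # when it was created (UTC)
    tool: str               # which tool produced or edited it
    original_hash: str      # digest of the content as first recorded
    transformations: list[str] = field(default_factory=list)  # e.g. "resized"

    def is_unmodified(self, current: bytes) -> bool:
        """True only if the current bytes match the recorded original exactly."""
        return sha256_hex(current) == self.original_hash


original = b"Quarterly update from the CEO"
record = ProvenanceRecord(
    creator="comms-team",
    created_at=datetime.now(timezone.utc),
    tool="hypothetical-editor",
    original_hash=sha256_hex(original),
)
print(record.is_unmodified(original))                          # True
print(record.is_unmodified(b"Quarterly update from the CE0"))  # False
```

An exact hash flags every change, including a harmless resize. That is why real systems tend to record each transformation as its own step rather than expect the bytes to stay identical.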

In U.S. digital services, provenance is becoming as essential as uptime or security controls. If you run:

- a marketing automation platform,

- a video hosting tool,

- a creator marketplace,

- a customer support knowledge base,

- an HR recruiting platform,

…you’re already in the “trust business.” AI just makes that obvious.

Why this is spiking in late 2025

Three forces are colliding right now:

- Cheap generation: Text, images, and voice cloning are now accessible to practically anyone.

- High distribution: Social and messaging channels move content instantly, and screenshots erase context.

- Low verification: Many orgs still rely on manual review or simplistic “AI detection” scores.

The result is predictable: more impersonation, more fraud, more brand-risk incidents, and more customers demanding proof.

“AI detection” isn’t the same as “source attribution”

Answer first: Detection tries to guess what created something. Attribution tries to prove where it came from. Attribution wins when the stakes are high.

A lot of teams start with AI detection tools: classifiers that predict whether content was AI-generated. I’m not against detection, but I am against treating it like a courtroom-grade verdict.

Here’s the problem: detection is probabilistic. It can be useful in triage, but it’s easy to break with edits, paraphrasing, re-encoding, or mixing human and AI work.

Source attribution, on the other hand, is evidence-based. It aims to make claims like:

“This audio file was generated by Tool X on Date Y, exported from Account Z, and has not been modified since.”

That’s the shift U.S. platforms are racing toward.

A practical way to think about it

- Detection answers: “Does this look like AI?”

- Attribution answers: “What system produced this, and can we verify the chain?”

Detection is a smoke alarm. Attribution is a security camera plus access logs.

How U.S. tech companies are implementing AI-powered content verification

Answer first: The winning approach is layered: embed provenance at creation time, preserve it through distribution, and verify it at consumption time.

Platforms fighting misinformation and impersonation typically build a three-layer system.

1) Capture provenance at creation (where it’s easiest)

If you create content inside a SaaS product—copy generation, ad creative, product images, training videos—the platform has the best opportunity to attach trustworthy metadata.

Common methods include:

- Cryptographic signing: The system signs content or a content hash, creating tamper-evident proof.

- Content credentials: Structured metadata describing creation/edit history.

- Watermarking (visible or invisible): Signals embedded into the media itself.

The big point: You can’t reliably reconstruct origin later if you don’t record it up front.
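Here is a minimal sketch of "sign a content hash, verify later." It assumes a platform-held secret key and uses HMAC-SHA256 from Python's standard library as a stand-in. Production provenance systems typically use asymmetric signatures (for example Ed25519) so outside parties can verify without holding the secret. The key value and function names here are hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real signing service would keep
# keys in a KMS/HSM and would usually sign with a private key instead.
SIGNING_KEY = b"demo-key-do-not-use-in-production"


def sign_content(content: bytes, key: bytes = SIGNING_KEY) -> str:
    """Sign the SHA-256 digest of the content; returns a hex signature."""
    digest = hashlib.sha256(content).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_content(content: bytes, signature: str, key: bytes = SIGNING_KEY) -> bool:
    """Recompute the signature and compare in constant time."""
    return hmac.compare_digest(sign_content(content, key), signature)


asset = b"<ad creative bytes>"
sig = sign_content(asset)
print(verify_content(asset, sig))          # True
print(verify_content(asset + b" ", sig))   # False: any byte change breaks it
```

The signature is tamper-evident rather than tamper-proof. It cannot stop someone from editing the file, but it makes the edit detectable.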

2) Preserve provenance through edits and workflows

Most real-world content gets edited:

- A designer crops an image.

- A social team adds captions.

- A localization vendor translates text.

- A video is re-encoded for different platforms.

If provenance breaks whenever something changes, nobody will use it.

The better pattern is “provenance journaling,” where each tool adds a signed step to the record. This is where AI shows up in a less flashy way: AI helps classify transformations and detect suspicious edits (for example, a voice track swapped in without a corresponding editing step).
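One way to sketch provenance journaling is a hash chain. Each step commits to the previous step's digest and to the hash of the content it produced, and each step is signed. This sketch makes several assumptions. It uses one shared HMAC key for every tool, which is a simplification since real tools would each hold their own key. The step fields and function names are hypothetical.

```python
import hashlib
import hmac
import json

KEY = b"demo-journal-key"  # hypothetical shared key; real tools would sign separately


def _entry_digest(step: dict) -> str:
    # Canonical JSON so the same step always serializes to the same bytes.
    return hashlib.sha256(json.dumps(step, sort_keys=True).encode()).hexdigest()


def append_step(journal: list[dict], tool: str, action: str, content: bytes) -> None:
    """Add a signed step linked to the previous one and to the new content hash."""
    prev = journal[-1]["digest"] if journal else "genesis"
    step = {"tool": tool, "action": action,
            "content_hash": hashlib.sha256(content).hexdigest(), "prev": prev}
    digest = _entry_digest(step)
    signature = hmac.new(KEY, digest.encode(), hashlib.sha256).hexdigest()
    journal.append({**step, "digest": digest, "signature": signature})


def verify_journal(journal: list[dict], current: bytes) -> bool:
    """Check every link and signature, then match the last step to the current bytes."""
    prev = "genesis"
    for entry in journal:
        step = {k: entry[k] for k in ("tool", "action", "content_hash", "prev")}
        if entry["prev"] != prev or _entry_digest(step) != entry["digest"]:
            return False
        expected = hmac.new(KEY, entry["digest"].encode(), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, entry["signature"]):
            return False
        prev = entry["digest"]
    return bool(journal) and journal[-1]["content_hash"] == hashlib.sha256(current).hexdigest()


journal: list[dict] = []
append_step(journal, "generator", "created", b"v1")
append_step(journal, "design-tool", "cropped", b"v2")
print(verify_journal(journal, b"v2"))  # True
print(verify_journal(journal, b"v3"))  # False: an edit with no journal entry
```

A swapped voice track is exactly the "v3" case. The bytes changed, but no tool added a signed step explaining why.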

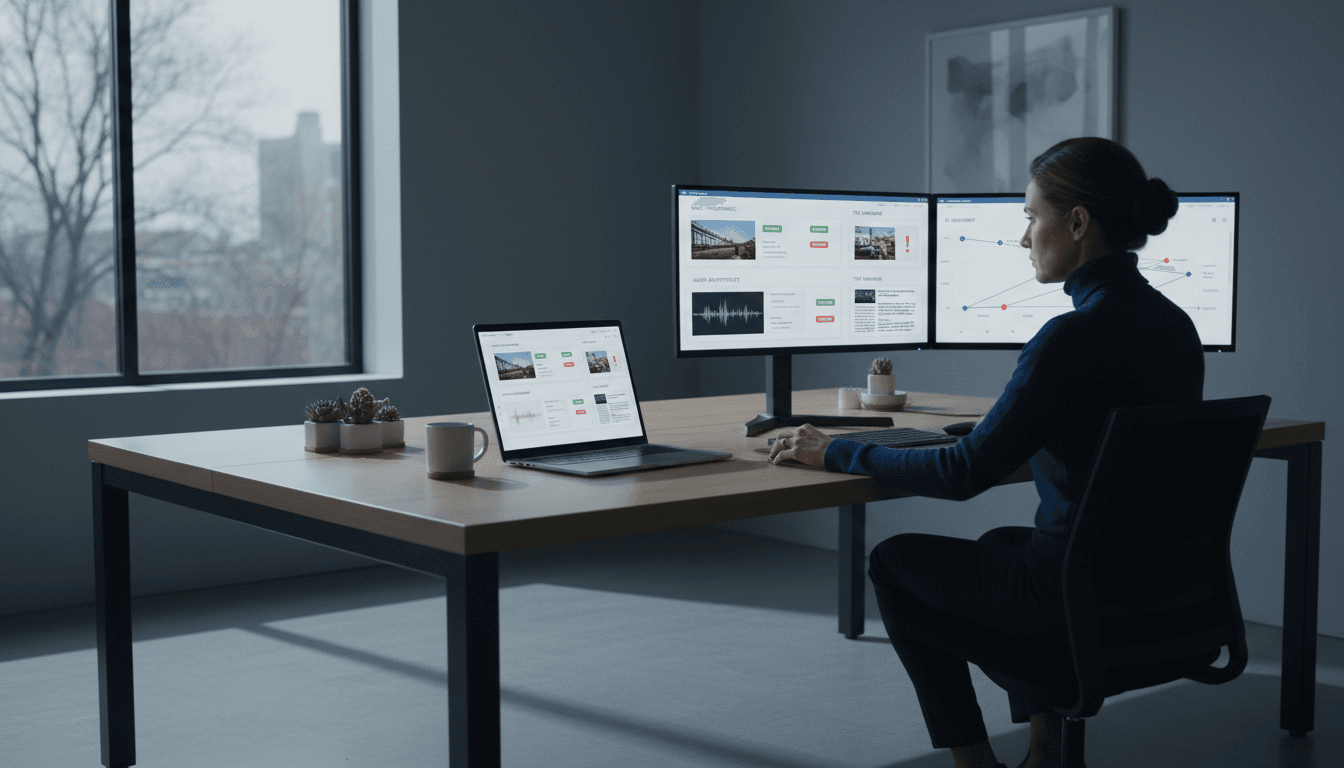

3) Verify at the point of use (where decisions happen)

Verification needs to show up where it matters:

- in a social feed moderation console,

- inside a newsroom CMS,

- in a brand safety dashboard,

- in a customer support admin view,

- in a fraud analyst workflow.

A solid verification UX doesn’t dump technical jargon on humans. It gives an operator a clear outcome:

- Verified origin (signed, intact chain)

- Partially verified (some steps missing)

- Unverified (no provenance)

- Tampered (signature mismatch)

That’s usable in operations, not just research.
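The four outcomes above can be sketched as a small mapping from low-level check results to one operator-facing label. The inputs (`has_provenance`, `signature_valid`, `missing_steps`) are hypothetical names for whatever your verification layer reports. The ordering reflects one reasonable policy: with provenance present, a signature mismatch outranks missing steps.

```python
from enum import Enum


class Outcome(Enum):
    VERIFIED = "Verified origin"
    PARTIAL = "Partially verified"
    UNVERIFIED = "Unverified"
    TAMPERED = "Tampered"


def classify(has_provenance: bool, signature_valid: bool, missing_steps: int) -> Outcome:
    """Collapse low-level check results into one operator-facing label."""
    if not has_provenance:
        return Outcome.UNVERIFIED      # nothing to check against
    if not signature_valid:
        return Outcome.TAMPERED        # a mismatch outranks missing steps
    if missing_steps > 0:
        return Outcome.PARTIAL         # valid signatures, gaps in the chain
    return Outcome.VERIFIED            # signed, intact chain


print(classify(True, True, 0).value)   # Verified origin
print(classify(True, True, 2).value)   # Partially verified
```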

Where misinformation actually hurts digital services (and leads to churn)

Answer first: Misinformation isn’t only a “social media problem.” It hits SaaS revenue through fraud, support load, compliance risk, and brand damage.

In this topic series—How AI Is Powering Technology and Digital Services in the United States—we often talk about AI boosting output: more campaigns, more personalization, faster support. But trust failures erase those gains.

Here are four scenarios I see repeatedly:

1) Executive impersonation and internal comms fraud

Voice cloning and “CEO-style” writing can trick employees into transferring funds, changing payroll details, or disclosing credentials.

What helps: provenance checks on internal video messages, signed comms templates, and verification prompts for high-risk requests.

2) Fake product claims in ads and landing pages

Affiliate networks and rogue resellers can generate believable ads that look like your brand, then route traffic to scams.

What helps: brand asset signing, monitoring for unsigned creatives, and platform-level requirements for verified ad accounts.

3) Support knowledge base poisoning

AI-written articles can be inserted (or suggested) with subtle but harmful instructions. Even without malicious intent, hallucinated procedures can increase returns, outages, or security incidents.

What helps: verified authorship for KB changes, model-assisted review queues, and “source required” policies for any instructional content.

4) Marketplace trust issues (reviews, listings, creator content)

If users suspect reviews or creator posts are synthetic, the marketplace loses its core value: credible signals.

What helps: account-level provenance, rate limits for mass-generated content, and clearly labeled AI-assisted content policies.
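Rate limits for mass-generated content are often built as a per-account token bucket. This sketch assumes that design. The `rate` and `capacity` values and the `may_post` helper are hypothetical and would need tuning for a real marketplace.

```python
import time


class TokenBucket:
    """Allow `rate` posts per second on average, with bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock          # injectable clock makes the bucket testable
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


buckets: dict[str, TokenBucket] = {}


def may_post(account_id: str) -> bool:
    """Hypothetical gate: one bucket per account, 5-post burst, 1 post per 10 s."""
    if account_id not in buckets:
        buckets[account_id] = TokenBucket(rate=0.1, capacity=5)
    return buckets[account_id].allow()
```

A bucket tolerates normal bursts, like a seller listing a few items at once, while throttling the steady high-volume output typical of automated generation.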

A practical checklist for SaaS teams building content trust in 2026

Answer first: Treat content trust like security: define controls, implement verification, and measure outcomes.

If you’re a product leader, security lead, or growth marketer at a U.S. tech company, here’s a plan that actually fits shipping cycles.

Step 1: Decide what “trust” means for your product

Pick two or three measurable promises. Examples:

- “We can verify the origin of all assets generated in-platform.”

- “We can detect tampering for signed media.”

- “We label AI-assisted content in user-facing surfaces.”

If you try to solve “misinformation” as a concept, you’ll ship nothing.

Step 2: Classify your highest-risk content types

Focus on the areas that cause real damage:

- Payments-related messages

- Legal/health claims

- Executive comms

- Security instructions

- Political content (if you host it)

Then decide where provenance is mandatory vs optional.
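Writing that decision down as an explicit policy table keeps it reviewable. The content-type keys below are hypothetical labels for the categories listed above. Unknown types default to optional here, matching the "everything else is nice to have" stance. A stricter team might flip the default to mandatory.

```python
from enum import Enum


class Requirement(Enum):
    MANDATORY = "mandatory"
    OPTIONAL = "optional"


# Hypothetical policy table; keys are illustrative content-type labels.
PROVENANCE_POLICY = {
    "payments_message": Requirement.MANDATORY,
    "legal_health_claim": Requirement.MANDATORY,
    "executive_comms": Requirement.MANDATORY,
    "security_instructions": Requirement.MANDATORY,
    "political_content": Requirement.MANDATORY,  # only if you host it
    "marketing_blog": Requirement.OPTIONAL,
}


def requires_provenance(content_type: str) -> bool:
    """Look up the policy; unlisted types fall back to OPTIONAL."""
    return PROVENANCE_POLICY.get(content_type, Requirement.OPTIONAL) is Requirement.MANDATORY
```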

Step 3: Build a layered system (not one magic detector)

A robust stack usually includes:

- Provenance capture (signing / credentials / watermarking)

- AI-assisted anomaly detection (edits, re-uploads, suspicious patterns)

- Human review for edge cases

- Clear UX labels and admin logs

The stance I take: If your policy relies solely on detection, it will fail under pressure.
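Here is a minimal sketch of how the layers might combine into one routing decision. It assumes a provenance status string, an anomaly score from some hypothetical AI model, and a high-risk flag from the content-type policy. The threshold and action names are illustrative. The anomaly score on its own never yields a final verdict. At most it sends content to a person.

```python
from dataclasses import dataclass


@dataclass
class Signals:
    provenance: str        # "verified", "partial", "unverified", or "tampered"
    anomaly_score: float   # 0.0-1.0 from a hypothetical anomaly model
    high_risk: bool        # from the content-type policy


def route(s: Signals, anomaly_threshold: float = 0.8) -> str:
    """Combine provenance, AI anomaly signals, and risk into one action."""
    if s.provenance == "tampered":
        return "block"             # signature evidence, not a guess
    if s.anomaly_score >= anomaly_threshold:
        return "human_review"      # suspicious edits go to people
    if s.provenance == "verified":
        return "publish"
    if s.high_risk:
        return "quarantine"        # unsigned or partial high-risk media waits
    return "publish_labeled"       # low-risk, unverified: show with a label
```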

Step 4: Make verification visible in workflows

Verification must be easy enough that teams use it without being nagged.

Good patterns:

- A “Verified” badge with drill-down details

- Admin audit trails with exportable evidence

- Automated quarantine for unsigned high-risk media

Bad pattern:

- A single “AI likelihood: 62%” number with no explanation and no action path
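The contrast with the bad pattern can be sketched as an audit event that always carries an outcome, a human-readable reason, and the action taken, plus an export of the evidence for one asset. The `AuditEvent` fields and the asset ID are hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AuditEvent:
    asset_id: str
    outcome: str   # e.g. "Verified origin", "Tampered"
    reason: str    # explanation shown on drill-down
    action: str    # what the system or operator did next
    at: str        # ISO 8601 timestamp (UTC)


def record_event(log: list[AuditEvent], asset_id: str, outcome: str,
                 reason: str, action: str) -> None:
    log.append(AuditEvent(asset_id, outcome, reason, action,
                          datetime.now(timezone.utc).isoformat()))


def export_evidence(log: list[AuditEvent], asset_id: str) -> str:
    """Export every event for one asset as JSON an analyst can attach to a case."""
    return json.dumps([asdict(e) for e in log if e.asset_id == asset_id], indent=2)


log: list[AuditEvent] = []
record_event(log, "img-42", "Tampered", "signature mismatch on step 3", "quarantined")
print(export_evidence(log, "img-42"))
```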

Step 5: Measure business outcomes (not just model metrics)

Track:

- Fraud attempts blocked

- Time-to-triage for suspicious content

- Reduction in escalations and chargebacks

- Decrease in impersonation incidents

- Change in user trust scores (surveys or NPS comments)

Trust work needs revenue language or it gets deprioritized.
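Some of these metrics are simple to compute once events are logged. This sketch computes median time-to-triage from pairs of "flagged" and "triaged" ISO timestamps. The input format is an assumption, and the timestamps are made-up sample values rather than real data.

```python
from datetime import datetime
from statistics import median


def time_to_triage_minutes(cases: list[tuple[str, str]]) -> float:
    """Median minutes between each case's 'flagged' and 'triaged' timestamps."""
    durations = [
        (datetime.fromisoformat(done) - datetime.fromisoformat(flagged)).total_seconds() / 60
        for flagged, done in cases
    ]
    return median(durations)


# Hypothetical sample cases: 30, 10, and 60 minutes to triage.
cases = [
    ("2025-11-03T09:00:00", "2025-11-03T09:30:00"),
    ("2025-11-03T10:00:00", "2025-11-03T10:10:00"),
    ("2025-11-03T11:00:00", "2025-11-03T12:00:00"),
]
print(time_to_triage_minutes(cases))  # 30.0
```

The median is used instead of the mean so a single stuck case does not hide an otherwise fast triage process.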

People also ask: can AI really tell who created content?

Answer first: AI can help, but “telling who created it” is strongest when the creator’s tool records proof at the time of creation.

- If provenance is embedded and signed: verification can be highly reliable.

- If provenance is missing: AI can only estimate (via detection, style signals, forensics). That’s useful for triage, not proof.

A good policy is: require provenance for the content types that can hurt people or the business. Treat everything else as “nice to have.”

Where this fits in the broader AI-in-digital-services story

AI in U.S. SaaS has moved from “create more” to “create responsibly.” Marketing teams want scale, support teams want speed, and product teams want personalization. Those are legitimate goals. But if customers can’t trust what they’re seeing and hearing online, the entire digital service experience becomes fragile.

Source attribution is the stabilizer. It’s how platforms keep AI-generated content useful without letting it become a liability.

If you’re building or buying AI features as part of your 2026 planning, ask one operational question: When something goes wrong, can we prove where it came from? If the answer is no, you’ve found your next roadmap item.