Learn what DALL·E 3-style safety means for U.S. SaaS teams: guardrails, audits, and practical controls for safe AI image generation at scale.

DALL·E 3 Safety: What U.S. SaaS Teams Should Copy

Most product teams obsess over image quality. The teams that win in the U.S. digital services market obsess over something less glamorous: whether the image should be generated at all.

That’s why the phrase “system card” matters. A system card is basically a public blueprint of how an AI system is intended to behave, where it can fail, and what guardrails are in place. The RSS source here points to the DALL·E 3 system card, but the content couldn’t be retrieved (a 403/CAPTCHA response). Rather than hand-wave, I’ll do the useful thing: translate what “system card thinking” looks like in practice for U.S.-based SaaS and digital service providers building safe AI content creation into their products.

This matters because generative AI has already moved from “marketing experiment” to “core workflow” in customer support, lifecycle marketing, sales enablement, and creative operations. If you’re shipping AI image generation—or even just AI-generated assets that include images—you need a safety posture that holds up under customer scrutiny, platform policies, and legal risk.

System cards aren’t PR—they’re product requirements

A system card is a product document before it’s a public-facing artifact. The best ones read like an internal launch checklist: capabilities, limitations, evaluation methods, and safety mitigations.

For U.S. SaaS teams, the lesson is simple: if you can’t explain your generative AI system’s safety behavior clearly, you probably can’t test it reliably. And if you can’t test it reliably, you can’t promise customers anything meaningful.

What a “system card mindset” includes

If you’re building AI-generated content features (images, banners, product shots, ad creative, thumbnails), you should be able to answer—without improvising—questions like:

- What content is blocked outright? (sexual content, child sexual abuse material, instructions for wrongdoing, etc.)

- What content is allowed but constrained? (public figures, political content, sensitive medical imagery)

- How do prompts get screened? (pre-generation text filters, policy classifiers)

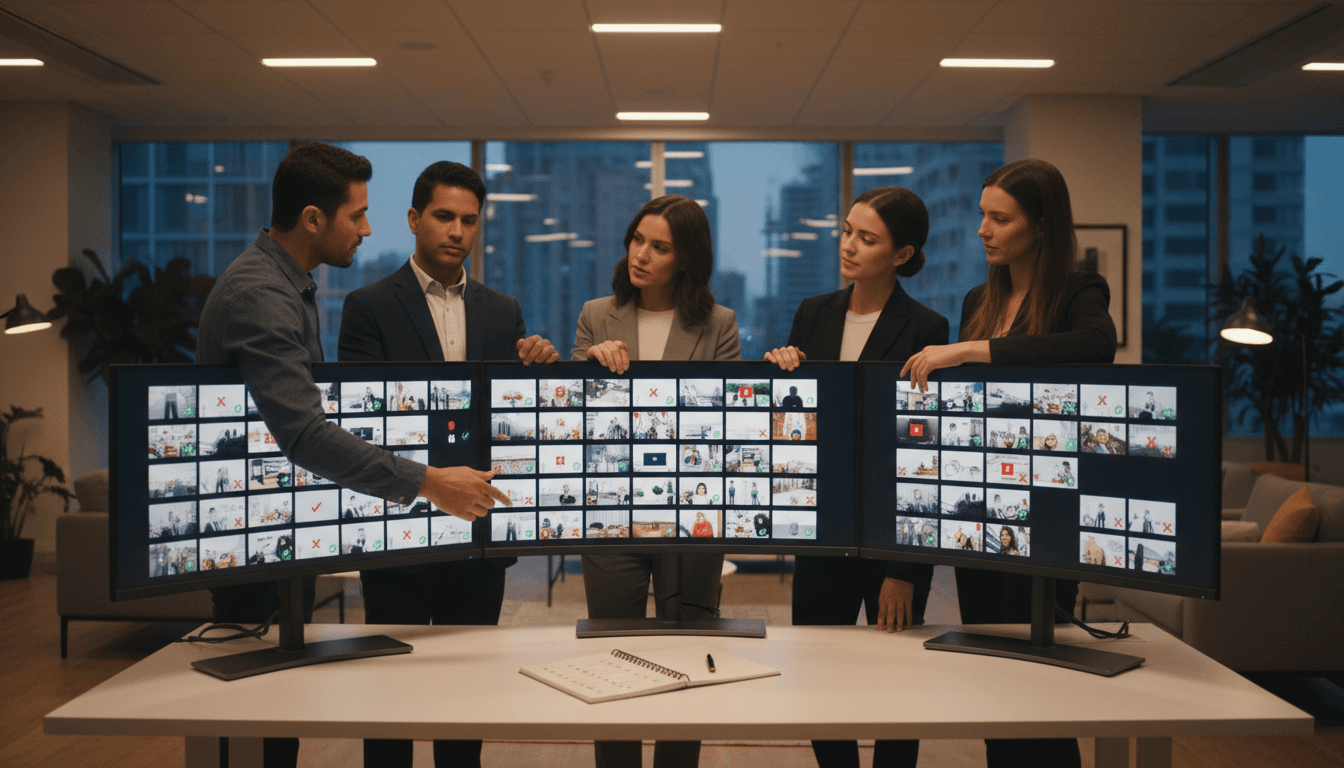

- How do outputs get screened? (image moderation models, human review for escalations)

- How do you handle “prompt laundering”? (users rephrasing requests to bypass rules)

- How do you document exceptions? (enterprise allowlists, regulated-industry workflows)

Snippet-worthy stance: If your AI feature can’t be audited, it can’t be enterprise-ready.

Why U.S. buyers care more in 2025

In the U.S., AI procurement has matured fast. Security questionnaires now routinely include AI sections: training data exposure, output safety, moderation, incident response, and governance. Even mid-market customers ask about risk because their customers do.

If your product is used to generate marketing and customer communication assets at scale, your AI safety failures don’t stay small. They propagate.

The real safety risks in AI image generation (and how teams miss them)

The obvious risk is harmful imagery. The less obvious risk is operational misuse: content that’s technically “clean” but still creates legal, brand, or trust problems.

Risk 1: Brand impersonation and deceptive creative

AI images can be used to mimic a competitor’s visual identity, fabricate endorsements, or imply partnerships.

What to implement:

- Brand name and trademark detection in prompts

- “Deceptive intent” heuristics (e.g., “make it look like Nike sponsored this”)

- Enterprise controls: customer-specific brand allowlists and blocklists

Practical example: A SaaS social scheduling platform that adds “Generate ad creative” should block prompts that request a competitor’s logo or a fake co-branded banner, and route borderline cases to review.
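To make the three controls above concrete, here is a minimal sketch of a prompt screen that combines a brand blocklist, a few deceptive-intent patterns, and a per-customer allowlist. Everything in it is an assumption for illustration: the brand names, the regex patterns, the function name `screen_brand_prompt`, and the mapping of signals to "block", "review", or "allow". A production system would more likely use a trained classifier than a handful of patterns.

```python
import re

# Hypothetical blocklist; a real deployment would load this from workspace settings.
BRAND_BLOCKLIST = {"nike", "adidas"}

# Illustrative phrasings that suggest a fabricated endorsement or partnership.
DECEPTIVE_PATTERNS = [
    re.compile(r"\bmake it look like\b.*\b(sponsored|endorsed|partnered)\b", re.IGNORECASE),
    re.compile(r"\b(fake|fabricated)\b.*\b(endorsement|co-?branded|partnership)\b", re.IGNORECASE),
]

def screen_brand_prompt(prompt, allowlist=frozenset()):
    """Return 'block', 'review', or 'allow' for a prompt (assumed policy mapping)."""
    words = set(re.findall(r"[a-z0-9]+", prompt.lower()))
    # Brands the customer has explicitly allowlisted are not counted as hits.
    brands = (words & BRAND_BLOCKLIST) - set(allowlist)
    deceptive = any(p.search(prompt) for p in DECEPTIVE_PATTERNS)
    if brands and deceptive:
        return "block"   # named brand plus deceptive phrasing
    if brands or deceptive:
        return "review"  # borderline: one signal only
    return "allow"
```

The allowlist is what lets a customer generate creative for its own brand without tripping the same rule that stops everyone else.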

Risk 2: Public figure and political misuse

Even when policies allow certain public figure content, the safety bar is higher for political persuasion, misinformation, and harassment.

What to implement:

- Public figure classifiers for prompt text

- Higher friction for sensitive categories (confirmation steps, reduced realism presets)

- Logging and rate limits for political or public figure prompts

My opinion: if you sell into U.S. enterprises, don’t wait for an election-year incident to build this. Build it before you need it.

Risk 3: “Not illegal, still harmful” workplace use

Teams generate internal training visuals, recruiting creatives, or “culture” graphics. Bias can sneak in quietly: stereotyped portrayals of roles, age, race, disability, or gender.

What to implement:

- Bias testing harnesses: same prompt, vary demographic descriptors, compare outputs

- Safer defaults: encourage diverse representation in templates

- Human-in-the-loop for high-stakes HR or health-related content
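A bias testing harness can start very small. The sketch below expands one prompt template across demographic descriptors and then summarizes how often outputs were flagged per group. The function names, the descriptor values, and the idea of a boolean "flagged" label per output (from a reviewer or a classifier) are assumptions made for illustration.

```python
from collections import defaultdict
from itertools import product

def build_variants(template, descriptors):
    """Expand a template into one prompt per combination of descriptor values."""
    keys = list(descriptors)
    variants = []
    for combo in product(*(descriptors[k] for k in keys)):
        fields = dict(zip(keys, combo))
        variants.append((fields, template.format(**fields)))
    return variants

def rate_by_group(records, key):
    """records: iterable of (fields, flagged). Returns the flagged share per value of `key`."""
    totals, flagged = defaultdict(int), defaultdict(int)
    for fields, is_flagged in records:
        totals[fields[key]] += 1
        flagged[fields[key]] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}

variants = build_variants(
    "a {age} {gender} engineer at a whiteboard",
    {"age": ["young", "older"], "gender": ["female", "male"]},
)
```

Large gaps between groups in `rate_by_group` do not prove bias on their own, but they tell you which outputs a human should look at first.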

Risk 4: Data leakage through prompts

People paste sensitive information into prompts: customer names, emails, screenshots, account numbers, or internal strategy.

What to implement:

- PII detection on prompt input (emails, phone numbers, SSNs)

- Admin controls: disable certain modes for regulated teams

- Clear UI warnings backed by enforcement: a “Don’t include confidential customer data” notice isn’t enough on its own, so have the product redact or block detected PII before the prompt is sent
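As a rough illustration of PII detection on prompt input, the sketch below redacts emails, U.S.-style phone numbers, and SSN-shaped strings with regular expressions. The patterns are deliberately simple assumptions and will miss many real-world formats; the function name `redact_pii` is hypothetical, and a production system would likely pair rules like these with a dedicated PII detection service.

```python
import re

# Simplified patterns for illustration; they are not exhaustive.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "phone": re.compile(r"(?<!\d)(?:\+?1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"),
}

def redact_pii(prompt):
    """Return (redacted_prompt, labels_found) so the UI can warn and the log can record."""
    found = []
    redacted = prompt
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(redacted):
            found.append(label)
            redacted = pattern.sub(f"[{label.upper()} REDACTED]", redacted)
    return redacted, found
```

Returning the list of labels alongside the redacted text lets an admin policy decide whether to redact silently, warn the user, or block the request outright.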

Guardrails that actually work in SaaS products

“Safety” can’t be a PDF and a hope. It has to be built into the user flow. Here are patterns that hold up when AI generation is embedded in real production work.

Layer 1: Pre-generation prompt controls

Answer first: Stop bad requests before compute is spent.

Implement:

- Policy classifier on prompt text (fast)

- Regex/keyword rules for known prohibited terms (simple, surprisingly effective)

- Contextual rewriting for borderline requests (e.g., remove explicit sexual detail)

- User feedback that explains the block without revealing bypass tips

A good block message is specific enough to educate, not specific enough to teach evasion.
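Here is one way the rule check, the classifier, and the block message could fit together. This is a sketch under assumptions: `classify` stands in for whatever policy classifier you use and is assumed to return a `(category, score)` pair, the prohibited terms are placeholders, and the 0.8 threshold is arbitrary.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Placeholder terms; a real list would be derived from your written content policy.
PROHIBITED = re.compile(r"\b(?:prohibited_term_a|prohibited_term_b)\b", re.IGNORECASE)

@dataclass
class Decision:
    allowed: bool
    category: Optional[str] = None
    user_message: str = ""

def block_message(category):
    # Names the policy area, never the matched term or the classifier score.
    return f"This request can't be generated because it falls under our {category} policy."

def check_prompt(prompt, classify, threshold=0.8):
    """Run cheap rules first, then the (hypothetical) classifier."""
    if PROHIBITED.search(prompt):
        return Decision(False, "prohibited content", block_message("prohibited content"))
    category, score = classify(prompt)
    if score >= threshold:
        return Decision(False, category, block_message(category))
    return Decision(True)
```

Keeping the matched term and the score out of `user_message` is the point: the user learns which policy applied, while the details that would help with evasion stay in the internal log.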

Layer 2: Post-generation output moderation

Answer first: Assume some unsafe content will slip through prompt filters.

Implement:

- Image moderation model to detect nudity, violence, hateful symbols, self-harm, and harassment cues

- Confidence thresholds with clear actions:

  - High confidence unsafe → block

  - Medium confidence → blur + require confirmation or review

  - Low confidence → allow + log
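The threshold-to-action mapping is simple enough to write down directly. In this sketch the cutoffs (0.9 and 0.5), the action names, and the shape of the moderation scores (a dict of category to confidence) are all assumptions; tune the numbers against your own evaluation data.

```python
def action_for(score, high=0.9, medium=0.5):
    """Map a moderation confidence score to an action (assumed cutoffs)."""
    if score >= high:
        return "block"
    if score >= medium:
        return "blur_and_review"
    return "allow_and_log"

def moderate(scores):
    """scores: dict of category -> confidence. Act on the highest-scoring category."""
    category = max(scores, key=scores.get)
    return category, action_for(scores[category])
```

For example, `moderate({"nudity": 0.95, "violence": 0.2})` acts on the nudity score and blocks, while a top score of 0.6 in any category would blur the image and send it to review.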

Layer 3: Product-level friction and permissions

Answer first: Risky capabilities should require higher trust.

Implement:

- Role-based access control (RBAC) for “Generate realistic faces,” “Use reference images,” or “Export at high resolution”

- Rate limits that tighten when prompts trend risky

- Workspace-level policy toggles for regulated industries
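A minimal sketch of these three controls might look like the following. The role names, capability names, and the linear rule for tightening the rate limit are illustrative assumptions, not a recommended policy.

```python
# Hypothetical role-to-capability mapping.
ROLE_CAPABILITIES = {
    "viewer": set(),
    "editor": {"generate_basic"},
    "admin": {"generate_basic", "realistic_faces", "reference_images", "high_res_export"},
}

def can_use(role, capability, workspace_disabled=frozenset()):
    """A workspace-level toggle overrides any role grant."""
    if capability in workspace_disabled:
        return False
    return capability in ROLE_CAPABILITIES.get(role, set())

def hourly_limit(base_limit, recent_flags, total_recent):
    """Shrink the limit in proportion to the share of recently flagged prompts."""
    if total_recent == 0:
        return base_limit
    risky_share = recent_flags / total_recent
    return max(1, int(base_limit * (1 - risky_share)))
```

Checking the workspace toggle before the role means a regulated team can switch off "realistic_faces" for everyone, admins included.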

This is how you turn “AI alignment” into something a CIO can approve.

Layer 4: Audit logs and incident response

Answer first: You can’t manage what you can’t reconstruct.

Implement:

- Prompt + metadata logging (user, time, workspace, model settings)

- Output hashes (so you can identify re-uploads)

- Review queues for flagged generations

- A documented incident response path: who gets paged, how you notify customers, what you disable first
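As a sketch of the logging piece, the function below builds one JSON-serializable audit record per generation attempt, including a SHA-256 hash of the output. The field names and the `audit_record` helper are assumptions. Note that a cryptographic hash only matches byte-identical files; catching resized or re-encoded re-uploads would need a perceptual hash, which is not shown here.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(user_id, workspace_id, prompt, model_settings, image_bytes, decision):
    """Build one JSON-serializable log entry for a generation attempt."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user_id": user_id,
        "workspace_id": workspace_id,
        "prompt": prompt,
        "model_settings": model_settings,
        "decision": decision,
        # SHA-256 of the raw bytes; None when nothing was generated (e.g., a blocked prompt).
        "output_sha256": hashlib.sha256(image_bytes).hexdigest() if image_bytes else None,
    }

record = audit_record(
    "u_123", "ws_9", "a red bicycle", {"size": "1024x1024"}, b"fake-image-bytes", "allow"
)
log_line = json.dumps(record)  # one line per event, ready for an append-only log
```

If prompts can contain sensitive data, consider logging the redacted prompt rather than the raw one so the audit trail does not become its own leak.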

How DALL·E 3-style safety thinking helps marketing teams scale

Generative AI is now a core part of U.S. marketing ops: seasonal campaigns, product launches, landing pages, and customer education. Late December is a perfect example—teams are simultaneously wrapping year-end reporting and building January pipeline campaigns.

Here’s where safe AI image generation pays off immediately.

Faster creative iteration without brand risk

When guardrails are solid, marketing can iterate quickly:

- Variant headers for landing pages

- Holiday-to-New-Year creative refreshes

- Paid social image sets localized by region

- Product mockups for feature announcements

Without guardrails, every iteration becomes a compliance review bottleneck.

Consistent “house style” with fewer weird outputs

Safety and quality overlap more than teams expect. Adding constraints like:

- disallowing certain sensitive themes

- requiring neutral backgrounds for product shots

- limiting photorealism in certain categories

…often produces outputs that look more consistent and on-brand.

Safer automation for lifecycle and customer comms

If your SaaS platform generates images for:

- onboarding emails

- in-app tooltips

- knowledge base banners

- webinar promos

…then the safety bar is higher because the content is customer-facing and high-volume. Automation magnifies mistakes.

“People also ask” questions your buyers will raise

Is AI image generation safe for enterprise use?

It can be, provided the product includes layered moderation, RBAC, audit logs, and a clear incident response process. Enterprise safety is less about one perfect filter and more about defense in depth.

What’s the difference between AI safety and AI alignment?

In product terms: AI safety is blocking harmful content; AI alignment is making the system reliably follow the intent of policies and user expectations, even under adversarial prompts.

What should I ask a vendor about AI-generated images?

Ask for specifics:

- What content categories are blocked?

- Do you moderate both prompts and outputs?

- Can admins control access by role?

- Do you provide audit logs?

- How do you handle policy updates and customer notification?

If they can’t answer quickly, assume the controls are thin.

A practical checklist for U.S. SaaS teams shipping AI images

Answer first: If AI image generation is in your 2026 plans, start here.

- Define content policy in product language (not just legal language)

- Implement prompt + output moderation with thresholds and actions

- Add RBAC and workspace toggles for riskier modes

- Create an evaluation suite (200–500 test prompts across categories)

- Measure block/allow rates weekly and review edge cases

- Ship audit logs and a review workflow for flagged content

- Train support on how to handle “why was this blocked?” tickets
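The evaluation suite and the weekly block/allow measurement from the checklist can share one small harness. In this sketch, each test case is an assumed `(category, prompt, expected)` tuple, and `decide` is a stand-in for your real moderation pipeline that returns "block" or "allow".

```python
from collections import Counter

def evaluate(cases, decide):
    """Return (block rate per category, list of cases where the decision was unexpected)."""
    totals, blocked, misses = Counter(), Counter(), []
    for category, prompt, expected in cases:
        actual = decide(prompt)
        totals[category] += 1
        blocked[category] += actual == "block"
        if actual != expected:
            misses.append((category, prompt, expected, actual))
    block_rates = {c: blocked[c] / totals[c] for c in totals}
    return block_rates, misses

# Toy cases and a toy decision function, for illustration only.
cases = [
    ("brand", "fake co-branded banner", "block"),
    ("brand", "our own product on a desk", "allow"),
    ("benign", "a mountain at sunrise", "allow"),
]
toy_decide = lambda prompt: "block" if "fake" in prompt else "allow"
rates, misses = evaluate(cases, toy_decide)
```

The `misses` list is the part worth reading every week: each entry is either a policy gap, an over-block that will generate support tickets, or a test case that needs updating.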

One-liner to keep on a sticky note: If you can’t test it, you can’t trust it.

Where this fits in the bigger U.S. AI services story

This post is part of the series How AI Is Powering Technology and Digital Services in the United States, and this is the pattern I keep seeing: U.S. companies don’t adopt generative AI because it’s flashy—they adopt it because it lowers content production costs and increases speed. But they keep it because it’s governed.

DALL·E 3 put a spotlight on the idea that image generation isn’t just a model problem; it’s a system problem. Policies, UX, monitoring, and enforcement are the product.

If you’re building or buying AI content creation capabilities for 2026, don’t ask only, “Can it generate great images?” Ask: “Can it generate images my company can stand behind at scale?”