CSU’s move to bring AI to 500,000 students and faculty shows what scalable, policy-aligned AI services look like. Learn the rollout lessons that apply to any large org.

AI in Higher Education: Scaling Support to 500,000

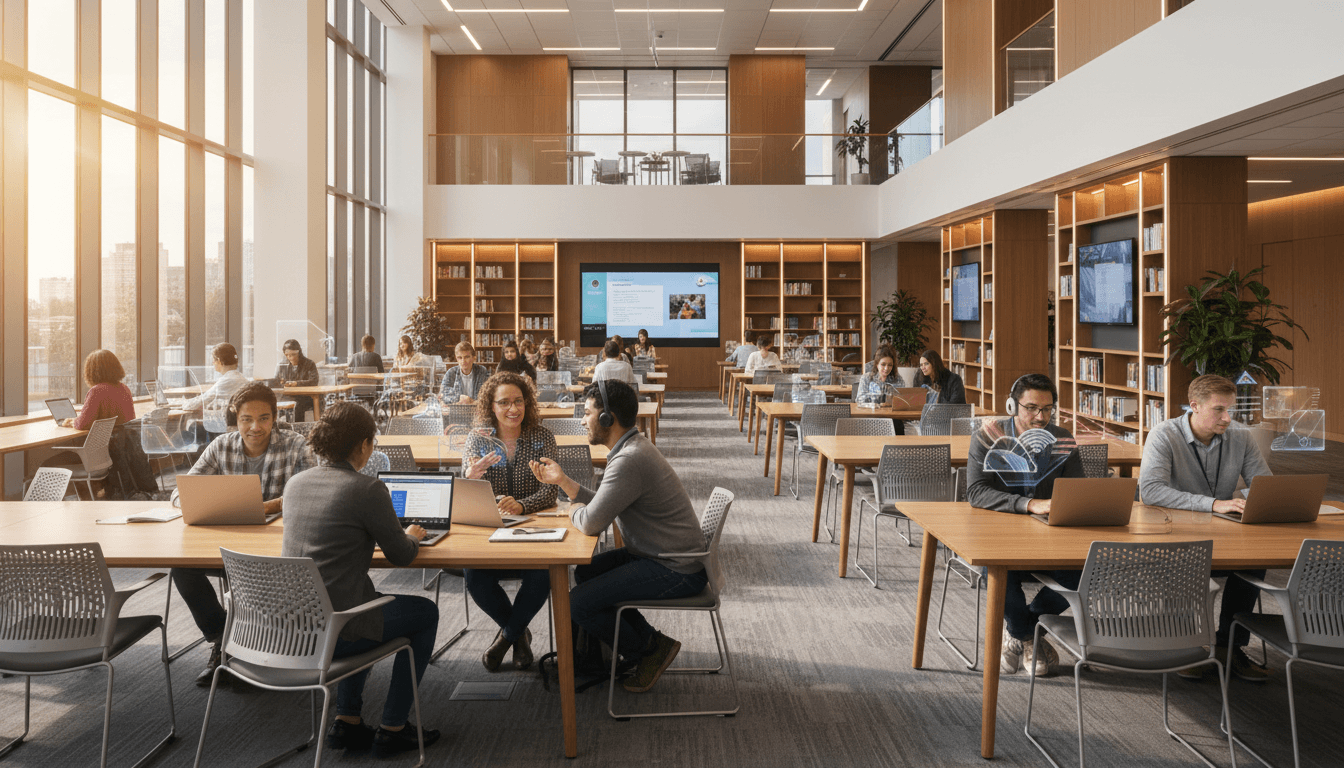

A single campus rolling out an AI assistant is interesting. A 23-campus public university system doing it for roughly 500,000 students and faculty is a different category of story. It’s less about novelty and more about operations: access, consistency, governance, and whether digital services can keep up with human demand.

That’s why the news that OpenAI and the California State University (CSU) system are working to bring AI tools to a community of about half a million people matters well beyond academia. CSU is effectively a live test of something most U.S. organizations are wrestling with right now: how do you scale expertise and support without scaling headcount at the same rate?

This post sits in our series “How AI Is Powering Technology and Digital Services in the United States.” Education is one of the clearest examples of AI’s real value in digital services—because the “customers” (students, faculty, staff) need help all the time, and the bottlenecks (advising, tutoring, help desks, writing support) are painfully predictable.

Why the CSU scale matters for AI-powered digital services

The CSU story is really a scaling story. When you serve hundreds of thousands of people, almost every question is a repeat question, every process has policy edge cases, and every service line has seasonal spikes.

Think about the academic calendar in late December. Students are wrapping up the fall term, transferring credits, planning spring schedules, and dealing with financial aid timelines. Meanwhile, faculty are grading, preparing syllabi, and updating course shells. That creates predictable “support storms” that traditional service models handle with queues, overtime, and frustration.

AI-powered digital services change the shape of that problem:

- More first-contact resolution: An AI assistant can answer routine questions immediately (hours, policies, deadlines, how-to steps).

- Better triage: When a human is needed, the AI can route the request with context (what the user tried, what policy applies).

- 24/7 service without “night shift staffing”: Higher education doesn’t stop at 5pm, and neither do student needs.

Here’s the stance I’ll take: If AI isn’t improving response time and consistency for large institutions, it’s not really being deployed—it’s being demoed. CSU’s scale forces the conversation to shift from “cool tool” to “repeatable system.”

What “bringing AI to 500,000 people” actually involves

An institution-wide AI rollout isn’t one decision—it’s a stack of decisions. For a large U.S. education system, success depends on how well the rollout covers identity, access, safety, and day-to-day workflows.

Identity, access, and “who is allowed to do what”

At CSU scale, you can’t treat AI like a consumer app. You need role-based access:

- Students using AI for tutoring, studying, and writing support

- Faculty using AI for lesson planning, rubrics, feedback drafts, and research support

- Staff using AI for policy Q&A, communications drafts, and service desk assistance

The practical point: AI has to respect institutional boundaries. A student shouldn’t be able to query private HR documentation, and a staff member shouldn’t accidentally expose sensitive student records in prompts.
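One common way to enforce those boundaries is to filter what the assistant may retrieve before any prompt is built, so restricted material never reaches the model at all. Here is a minimal sketch, assuming a retrieval-based assistant. The role names, collection names, and the `allowed_documents` helper are hypothetical and do not describe CSU's or OpenAI's actual configuration.

```python
from dataclasses import dataclass

# Hypothetical mapping of roles to the document collections each may search.
# In production this would come from the identity provider, not a literal.
ROLE_COLLECTIONS = {
    "student": {"tutoring", "registrar_public", "financial_aid_public", "it_help"},
    "faculty": {"tutoring", "registrar_public", "teaching_resources", "it_help"},
    "staff": {"registrar_public", "financial_aid_public", "it_help", "hr_internal"},
}


@dataclass(frozen=True)
class Document:
    doc_id: str
    collection: str
    text: str


def allowed_documents(role: str, docs: list[Document]) -> list[Document]:
    """Keep only documents in collections this role may see.

    Unknown roles map to an empty set, so access fails closed.
    """
    permitted = ROLE_COLLECTIONS.get(role, set())
    return [d for d in docs if d.collection in permitted]


docs = [
    Document("hr-001", "hr_internal", "Staff leave policy"),
    Document("reg-042", "registrar_public", "Add/drop deadlines"),
]

print([d.doc_id for d in allowed_documents("student", docs)])  # ['reg-042']
print([d.doc_id for d in allowed_documents("staff", docs)])    # ['hr-001', 'reg-042']
print([d.doc_id for d in allowed_documents("visitor", docs)])  # []
```

Failing closed matters more than the data structure: a role the system doesn't recognize should get nothing, not everything.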

Knowledge grounding: policy is the product

For most universities, the “truth” students need is scattered across:

- Department pages

- Registrar policy docs

- Financial aid guidance

- Advising materials

- IT help desk articles

AI works best when it can be grounded in an approved, current knowledge base. Otherwise, it becomes confident-but-wrong, and higher ed can’t afford that. A system-wide deployment needs:

- A clear source of approved answers

- Version control (policies change every term)

- A feedback loop for “this answer was wrong”

Put simply:

At scale, AI isn’t a chatbot. It’s a policy delivery system.
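The three requirements listed above (an approved source, version control, and a feedback loop) fit in a small data model. The sketch below is illustrative only: the class names, example policy text, and effective dates are hypothetical. Each policy keeps its approved versions, the assistant answers from whichever version is in effect on a given date, and “this answer was wrong” reports are queued for the policy owner.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


# Hypothetical data model for an approved, versioned policy store.
@dataclass
class PolicyVersion:
    text: str
    effective: date    # first day this wording applies
    approved_by: str   # office that signed off on it


@dataclass
class PolicyEntry:
    key: str
    versions: list[PolicyVersion] = field(default_factory=list)
    wrong_answer_reports: list[str] = field(default_factory=list)

    def current(self, on: date) -> Optional[PolicyVersion]:
        """Return the most recent version already in effect on `on`, if any."""
        live = [v for v in self.versions if v.effective <= on]
        return max(live, key=lambda v: v.effective) if live else None

    def report_wrong(self, note: str) -> None:
        """Feedback loop: queue a user report for the policy owner to review."""
        self.wrong_answer_reports.append(note)


# Hypothetical policy text and dates, for illustration only.
drop_deadline = PolicyEntry("add_drop_deadline")
drop_deadline.versions.append(PolicyVersion("Drop by the end of week 2.", date(2024, 8, 19), "Registrar"))
drop_deadline.versions.append(PolicyVersion("Drop by the end of week 3.", date(2025, 1, 21), "Registrar"))

print(drop_deadline.current(date(2024, 12, 20)).text)  # Drop by the end of week 2.
print(drop_deadline.current(date(2025, 2, 1)).text)    # Drop by the end of week 3.
drop_deadline.report_wrong("Spring answer still cited week 2")
```

Keeping old versions instead of overwriting them also lets an auditor see exactly which wording the assistant was working from when a disputed answer was given.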

Safety, privacy, and academic integrity

Education adds a layer many digital services don’t have: assessment. When AI is available to everyone, you have to design for appropriate use.

The best deployments don’t pretend cheating won’t happen. They set norms and support faculty with practical guardrails:

- Clear guidelines for allowed vs. prohibited AI assistance per assignment

- Assessments that reward reasoning, drafts, and process—not just final output

- Tools and training for faculty to design “AI-resilient” coursework

On privacy: institutions have legal and ethical obligations around student data. A credible rollout requires policies like:

- Don’t input protected personal data into prompts

- Use institutional accounts and approved tools

- Log, monitor, and audit usage patterns appropriately
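Written policy comes first, but a lightweight technical backstop helps with the first and third rules. Here is a minimal sketch, assuming the institution screens prompts before they leave its systems. The regular expressions are illustrative, and the nine-digit student ID format is an assumption (real formats vary by campus). Only the category of what was redacted is returned for logging, never the value itself.

```python
import re

# Illustrative patterns only; the 9-digit student ID format is an assumption.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "STUDENT_ID": re.compile(r"\b\d{9}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact(prompt: str) -> tuple[str, list[str]]:
    """Replace sensitive matches with placeholders.

    Returns the cleaned prompt plus the categories found, so the audit log
    can record that something was redacted without storing the data.
    """
    found = []
    for label, pattern in PATTERNS.items():
        prompt, count = pattern.subn(f"[{label}]", prompt)
        if count:
            found.append(label)
    return prompt, found


clean, kinds = redact("Student 123456789 (jdoe@example.edu) gave SSN 123-45-6789.")
print(clean)  # Student [STUDENT_ID] ([EMAIL]) gave SSN [SSN].
print(kinds)  # ['SSN', 'STUDENT_ID', 'EMAIL']
```

Pattern matching will miss things, such as a name or a medical detail typed in plain language, which is why the written rules and training still carry most of the weight.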

Where students and faculty will feel the impact first

The early wins will show up in the highest-volume, highest-friction workflows. That’s where AI can reduce wait times, improve consistency, and keep humans focused on complex cases.

Student services: advising, registration, and financial aid navigation

If you want to see whether AI is improving student experience, look at these moments:

- Class selection and degree progress: “What do I need next term to stay on track?”

- Transfer credit and prerequisites: “Does this course satisfy requirement X?”

- Financial aid timing: “What’s missing from my file, and what happens if I miss deadline Y?”

A strong AI experience doesn’t just answer questions—it reduces back-and-forth:

- It asks clarifying questions

- It provides step-by-step actions

- It surfaces the right form or process (without making the user hunt)

This is where AI-powered digital services deliver their most tangible benefit: fewer dead ends.

Teaching workflows: faster prep, better feedback, more time for people

Faculty time is one of the most expensive resources on campus, and too much of it goes to repetitive tasks. AI can help when it is used transparently and professionally:

- Drafting lesson outlines aligned to learning objectives

- Generating example problems at multiple difficulty levels

- Creating rubric language and consistent feedback starters

- Helping transform lecture notes into study guides

I’ve found that the best faculty outcomes come from a simple rule: use AI to draft structure, then apply human judgment to substance. Students don’t need “more words.” They need clarity, consistency, and meaningful feedback.

Campus IT and help desks: fewer tickets, better ticket quality

When a help desk serves tens of thousands of users, many tickets are password resets, access issues, VPN setup, and LMS troubleshooting.

AI can:

- Provide instant troubleshooting flows

- Identify missing details (“What device? What error message?”)

- Generate a cleaner ticket summary for human escalation

That’s not replacing IT staff. It’s reducing noise so specialists can focus on the hard issues.
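Both the “identify missing details” step and the cleaner escalation summary are simple to express. Here is a minimal sketch, assuming a hypothetical table of required fields per issue type (a real help desk would derive this from its own ticket taxonomy). The assistant asks for whatever is missing, then hands the specialist a compact summary instead of a raw chat transcript.

```python
# Hypothetical required fields per issue type.
REQUIRED_FIELDS = {
    "vpn": ["device", "operating_system", "error_message"],
    "password_reset": ["account_type"],
    "lms": ["course_id", "browser", "error_message"],
}


def missing_details(issue_type: str, details: dict[str, str]) -> list[str]:
    """Fields the assistant should ask about before escalating."""
    return [f for f in REQUIRED_FIELDS.get(issue_type, []) if not details.get(f)]


def ticket_summary(issue_type: str, details: dict[str, str], steps_tried: list[str]) -> str:
    """Compact, consistently ordered summary for the human specialist."""
    lines = [f"Issue: {issue_type}"]
    lines += [f"{key}: {value}" for key, value in sorted(details.items())]
    lines.append("Already tried: " + ("; ".join(steps_tried) or "nothing"))
    return "\n".join(lines)


details = {"device": "laptop", "operating_system": "Windows 11"}
print(missing_details("vpn", details))  # ['error_message']

details["error_message"] = "Authentication failed"
print(ticket_summary("vpn", details, ["restarted client", "re-entered password"]))
```

Sorting the fields gives every escalated ticket the same layout, which makes tickets faster to scan in a busy queue.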

Lessons for any large organization rolling out AI at scale

CSU’s headline number—500,000—maps directly onto enterprise reality. Whether you’re a SaaS company, a healthcare network, a government agency, or a national retailer, the mechanics of scaling AI-powered digital services are similar.

1) Start with service maps, not tools

The temptation is to buy a tool and “announce AI.” The better approach is to map the top 20 service journeys:

- What are the most common requests?

- Where do users get stuck?

- Which steps require human approval?

Then design the AI assistant around those journeys.
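The first two questions can usually be answered from data a service desk already has. Here is a minimal sketch, assuming a hypothetical request log in which each entry records the journey and whether it was resolved without a human. Journeys are ranked by volume, and the count that needed a human serves as a rough proxy for where users get stuck.

```python
from collections import Counter

# Hypothetical log entries: (journey, resolved_without_human)
request_log = [
    ("password_reset", True),
    ("transfer_credit", False),
    ("password_reset", True),
    ("financial_aid_status", False),
    ("transfer_credit", False),
    ("password_reset", False),
]


def top_journeys(log, n=20):
    """Return (journey, total requests, requests that needed a human), busiest first."""
    volume = Counter(journey for journey, _ in log)
    needed_human = Counter(journey for journey, resolved in log if not resolved)
    return [(journey, total, needed_human[journey]) for journey, total in volume.most_common(n)]


for row in top_journeys(request_log):
    print(row)
# ('password_reset', 3, 1)
# ('transfer_credit', 2, 2)
# ('financial_aid_status', 1, 1)
```

In this toy data, transfer credit is less frequent than password resets but never resolves without a human, which makes it a candidate for better grounding or a faster handoff.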

2) Define success metrics you can’t fake

If your AI rollout can’t be measured, it’ll turn into vibes and anecdotes. Use metrics that reflect real service outcomes:

- Time to first response

- Percentage of requests resolved without escalation

- Reduction in repeat contacts for the same issue

- Student/faculty satisfaction after an interaction

- Policy accuracy rate (sampled and audited)

A good target for year one isn’t “AI everywhere.” It’s measurable improvement in 3–5 high-volume services.
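Most of these metrics fall out of an ordinary interaction log. Here is a minimal sketch, assuming a hypothetical `Interaction` record stored in chronological order and a separate list of human audit verdicts (True if a sampled answer matched the policy source). A “repeat contact” here means the same user raising the same issue again, which is one reasonable definition among several.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Optional


# Hypothetical interaction record.
@dataclass
class Interaction:
    user_id: str
    issue: str
    first_response_seconds: float
    escalated: bool
    satisfaction: Optional[int]  # 1-5 survey score; None if skipped


def service_metrics(interactions: list[Interaction], audit_verdicts: list[bool]) -> dict[str, float]:
    """Compute outcome metrics; assumes both lists are non-empty and interactions are chronological."""
    seen, repeats = set(), 0
    for item in interactions:
        key = (item.user_id, item.issue)
        if key in seen:  # same user, same issue, seen before
            repeats += 1
        seen.add(key)
    scores = [i.satisfaction for i in interactions if i.satisfaction is not None]
    n = len(interactions)
    return {
        "median_first_response_s": median(i.first_response_seconds for i in interactions),
        "resolved_without_escalation": sum(not i.escalated for i in interactions) / n,
        "repeat_contact_rate": repeats / n,
        "mean_satisfaction": mean(scores) if scores else float("nan"),
        "policy_accuracy": sum(audit_verdicts) / len(audit_verdicts),
    }


interactions = [
    Interaction("s1", "fafsa_status", 4.0, False, 5),
    Interaction("s2", "drop_deadline", 3.0, False, None),
    Interaction("s1", "fafsa_status", 6.0, True, 3),
    Interaction("s3", "vpn_setup", 8.0, False, 4),
]
print(service_metrics(interactions, audit_verdicts=[True, True, True, False]))
```

Running the same function on a pre-launch baseline and then on each week of a pilot is what makes the numbers useful: the comparison, not the absolute value, shows whether the assistant is helping.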

3) Governance isn’t bureaucracy—it’s scale insurance

At 500,000 users, small mistakes become large incidents. You need clear rules:

- Approved use cases vs. prohibited use cases

- Data handling standards

- Human escalation paths

- Model and prompt updates (change management)

The reality? Governance is what makes AI feel boring—and boring is what you want in institutional services.
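Change management is the easiest of those rules to make concrete. Here is a minimal sketch, assuming a hypothetical change record and an in-house regression set of policy questions that every prompt or model update is scored against. An update ships only with a named approver, a passing score, and a known version to roll back to. The 95% threshold is an illustrative assumption, not a figure from CSU or OpenAI.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical change record for prompt or model updates.
@dataclass
class ChangeRequest:
    component: str                   # e.g. "system_prompt" or "model_version"
    new_version: str
    approved_by: Optional[str]       # named owner who signed off
    eval_pass_rate: float            # share of regression questions answered correctly
    rollback_version: Optional[str]  # what to restore if the change misbehaves


def can_deploy(change: ChangeRequest, min_pass_rate: float = 0.95) -> tuple[bool, list[str]]:
    """Return (ok, reasons); any reason blocks the deployment."""
    reasons = []
    if not change.approved_by:
        reasons.append("no named approver")
    if change.eval_pass_rate < min_pass_rate:
        reasons.append(f"eval pass rate {change.eval_pass_rate:.0%} is below {min_pass_rate:.0%}")
    if not change.rollback_version:
        reasons.append("no rollback version recorded")
    return (not reasons, reasons)


print(can_deploy(ChangeRequest("system_prompt", "v14", None, 0.91, "v13")))
print(can_deploy(ChangeRequest("system_prompt", "v14", "Academic Affairs", 0.97, "v13")))
```

The specific checks matter less than the habit: an update to a system serving half a million people goes through the same kind of gate as any other production change.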

4) Train people for workflows, not features

Most training fails because it focuses on buttons and menus.

Effective enablement looks like:

- “Here are 5 prompts that save an advisor 30 minutes a day”

- “Here’s how to check AI output against the policy source”

- “Here’s how to document when you used AI in feedback”

If users don’t know how AI fits into their work, adoption will plateau.

People also ask: what does an OpenAI–university partnership mean?

It means AI is moving from optional to institutional. Partnerships between AI providers and public university systems signal that generative AI is becoming part of core digital infrastructure, similar to learning management systems and identity platforms.

Does this replace instructors or advisors? No. It shifts their workload. Routine explanation and first-pass drafts can be automated; high-touch mentoring, judgment, and complex problem-solving remain human.

Will AI make education more equitable? It can—if access is consistent across campuses and students aren’t forced to rely on paid tools. System-wide availability helps, but equity still depends on training, policy, and support.

What about hallucinations and incorrect answers? That’s the central operational risk. The fix is not “tell people to be careful.” The fix is grounding answers in approved sources, auditing accuracy, and providing easy escalation to humans.

What to do next if you’re planning an AI rollout

The CSU initiative is a reminder that AI strategy is service strategy. When you can support a half-million people more consistently, you’re not just adopting technology—you’re changing how the institution functions.

If you’re leading digital services in a university, a SaaS platform, or any large U.S. organization, start here:

- Pick one high-volume service line and instrument it (baseline metrics first).

- Build an approved knowledge source of truth (even if it’s imperfect at the start).

- Launch a pilot with clear guardrails and a human escalation path.

- Track accuracy and satisfaction weekly, not quarterly.

The next year in U.S. digital services won’t be defined by who “has AI.” It’ll be defined by who operates AI responsibly at scale.

What would change in your organization if your customers—or your students—could get a correct, policy-aligned answer in under 30 seconds, any time of day?