Practical AI deployment lessons for U.S. SaaS and digital services teams: choose the right use case, set guardrails, and ship measurable results fast.

AI Deployment Lessons U.S. Teams Can Use Now

Most companies don’t fail at AI because the model is “bad.” They fail because the deployment is treated like a demo instead of a product.

By late 2025, AI has moved from experimentation to expectation across U.S. technology and digital services. If you run a SaaS platform, a support-heavy service business, or a fast-moving startup, your customers and your team already assume AI will speed things up: faster responses, cleaner workflows, fewer handoffs, better personalization. The gap is execution—getting reliable outcomes in production without breaking trust, compliance, or budgets.

This post is part of our “How AI Is Powering Technology and Digital Services in the United States” series. The original source (a webinar page found via RSS) referenced “lessons from hundreds of successful deployments,” but that page wasn’t accessible when we tried to retrieve it. So instead of summarizing a page we couldn’t read, I’ll lay out the practical patterns I’ve seen consistently separate successful AI deployments from expensive pilots, especially in U.S.-based digital services where customers feel the impact immediately.

Start with one measurable job, not “use AI everywhere”

The most effective AI deployment strategy is to pick one job-to-be-done with a clear metric and a clear owner. AI programs stall when the goal is fuzzy (“improve productivity”) or too broad (“automate customer support”).

A strong starting point looks like this:

- Job: Reduce first-response time on U.S. support tickets by having AI draft replies.

- Metric: Median first-response time drops from 3 hours to 30 minutes.

- Owner: Head of Support (not “the AI team”).

- Scope: Only billing and login issues for the first 30 days.

That scope feels almost comically narrow—and that’s why it works. Narrow scope lets you:

- Build the right data access

- Create a review workflow

- Add guardrails

- Prove ROI with real numbers
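Writing the job definition down as data turns that narrow scope into something the pilot can enforce and measure. A minimal sketch, assuming tickets carry a category label and a first-response time in minutes; `JobSpec` and its fields are hypothetical names, and the values mirror the billing-and-login example above.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class JobSpec:
    """One AI job-to-be-done, written down so the pilot can check itself (hypothetical)."""
    job: str
    owner: str
    baseline_minutes: float         # median first-response time before the pilot
    target_minutes: float           # median first-response time committed to
    in_scope_categories: frozenset  # ticket types the pilot may touch

    def in_scope(self, ticket_category: str) -> bool:
        # Out-of-scope tickets stay in the normal, non-AI queue.
        return ticket_category in self.in_scope_categories

    def target_met(self, first_response_minutes: list) -> bool:
        # Compare the observed median against the committed target.
        return median(first_response_minutes) <= self.target_minutes


spec = JobSpec(
    job="Draft replies to cut first-response time",
    owner="Head of Support",
    baseline_minutes=180,
    target_minutes=30,
    in_scope_categories=frozenset({"billing", "login"}),
)
print(spec.in_scope("billing"))       # True
print(spec.in_scope("data_export"))   # False
print(spec.target_met([12, 25, 40]))  # True: the median is 25 minutes
```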

What “good ROI” actually looks like

For many U.S. SaaS and digital services teams, the first deployable wins show up as time recaptured, not headcount reduction. Examples that are easy to measure:

- Support: 20–40% reduction in average handle time after agents use AI drafts and summaries

- Sales: 10–25% more follow-ups sent per rep when AI handles personalization at scale

- Ops: 30–60 minutes saved per analyst per day through automated document and meeting summarization

If you can’t define the metric, you can’t defend the budget when usage grows.

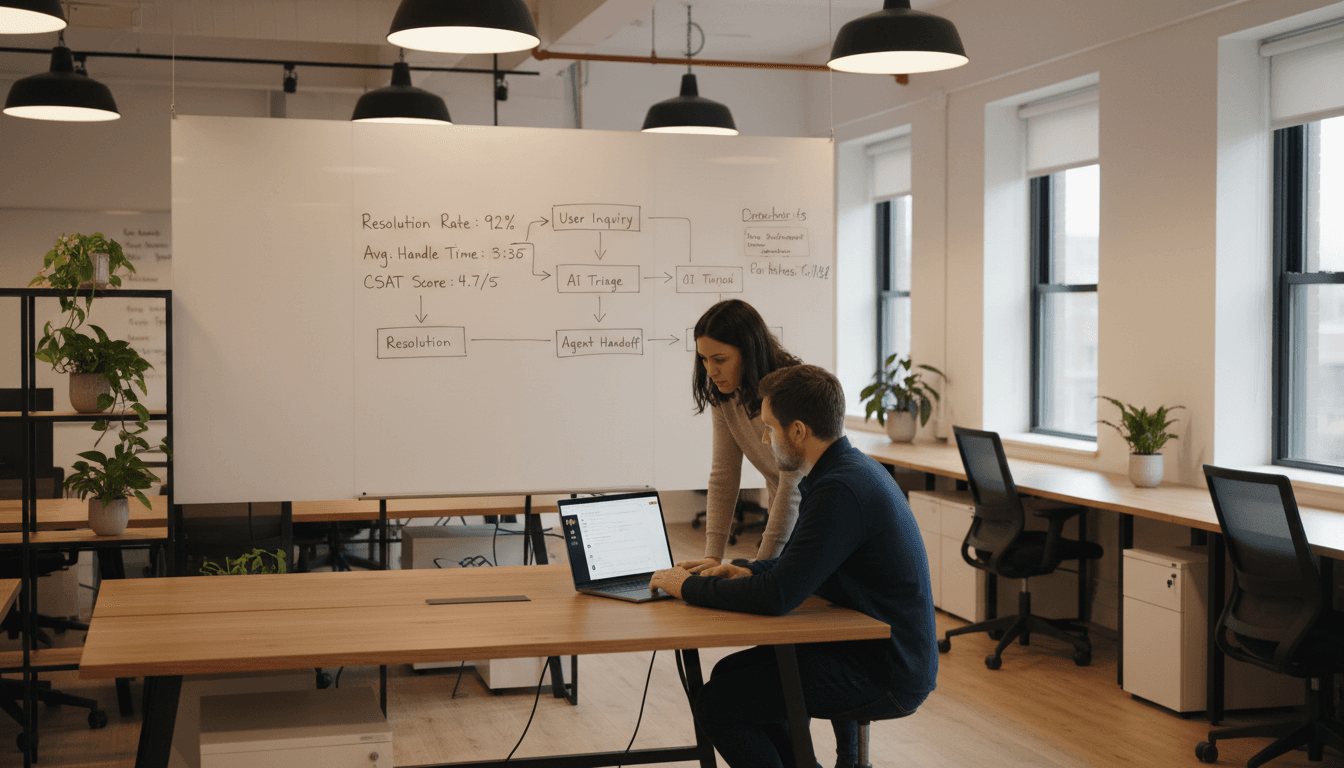

Treat AI like a product: inputs, outputs, and feedback loops

Successful teams don’t “add a chatbot.” They ship an AI feature with explicit inputs, expected outputs, and a feedback loop.

Here’s the difference in practice:

- A demo mindset: “Ask the bot anything about our product.”

- A product mindset: “For these 12 ticket types, the AI produces a draft reply with citations from approved sources and a confidence score.”
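The product-mindset version implies an output contract the application can check before a draft ever reaches an agent. The sketch below is hypothetical: `DraftReply`, the ticket-type names, and the four rules are illustrative, and only three of the twelve ticket types are listed.

```python
from dataclasses import dataclass, field

# Hypothetical subset of the ticket types this feature is scoped to.
SUPPORTED_TICKET_TYPES = {"billing_charge", "password_reset", "invoice_copy"}


@dataclass
class DraftReply:
    ticket_type: str
    body: str
    citations: list = field(default_factory=list)  # IDs of approved source documents
    confidence: float = 0.0                         # expected range 0.0 to 1.0

    def problems(self) -> list:
        """Return contract violations; an empty list means the draft may go to an agent."""
        issues = []
        if self.ticket_type not in SUPPORTED_TICKET_TYPES:
            issues.append(f"unsupported ticket type: {self.ticket_type}")
        if not self.body.strip():
            issues.append("empty body")
        if not self.citations:
            issues.append("no citations to approved sources")
        if not 0.0 <= self.confidence <= 1.0:
            issues.append("confidence out of range")
        return issues


ok = DraftReply("password_reset", "You can reset your password from the sign-in page.", ["kb-104"], 0.86)
bad = DraftReply("feature_request", "Sure, we'll build that next week!", [], 0.95)
print(ok.problems())   # []
print(bad.problems())  # ['unsupported ticket type: feature_request', 'no citations to approved sources']
```

The high confidence on `bad` doesn’t rescue it; in this design a score is one input to the contract, not a pass.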

Build the workflow around humans (yes, even in automation)

In real U.S. business operations, the winning pattern is human-in-the-loop where risk is high, and automation where risk is low.

A practical ladder you can deploy in weeks:

- Assist mode: AI drafts; human sends. (Fastest safe start)

- Guarded automation: AI sends only when confidence is high and policy checks pass.

- Autonomy with audits: AI acts; humans review samples daily/weekly.

The mistake I see: teams jump straight to autonomy with audits, then get spooked by one bad output and shut the whole project down.

Snippet-worthy rule: If the cost of a mistake is high, require review. If the volume is high and the stakes are low, automate.
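The ladder and the rule can be folded into one routing decision per item. A minimal sketch, assuming each draft arrives with stakes and volume labels, a confidence score, and a policy-check result; `Mode`, `choose_mode`, and the 0.9 threshold are placeholders to tune on your own data, not recommended values.

```python
from enum import Enum


class Mode(Enum):
    ASSIST = 1      # AI drafts; a human sends
    GUARDED = 2     # AI sends only when confidence and policy checks pass
    AUTONOMOUS = 3  # AI acts; humans review samples


def choose_mode(high_stakes: bool, high_volume: bool, confidence: float,
                policy_checks_passed: bool, max_unlocked: Mode,
                threshold: float = 0.9) -> Mode:
    """Route one item to a rung of the ladder (hypothetical policy, not a standard)."""
    if high_stakes:
        return Mode.ASSIST  # costly mistakes always get human review
    if not high_volume:
        return Mode.ASSIST  # little to gain from automating rare work
    if not policy_checks_passed or confidence < threshold:
        return Mode.ASSIST  # fall back when the draft isn't trustworthy
    return max_unlocked     # never go past the rung the team has proven


print(choose_mode(True, True, 0.99, True, Mode.AUTONOMOUS))   # Mode.ASSIST
print(choose_mode(False, True, 0.95, True, Mode.GUARDED))     # Mode.GUARDED
print(choose_mode(False, True, 0.70, True, Mode.AUTONOMOUS))  # Mode.ASSIST
```

`max_unlocked` is the design choice that matters: even a perfect-looking draft can’t be routed past the rung the team has actually proven, which is what prevents the jump straight to full autonomy.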

Data access is the bottleneck—solve it early

AI in digital services lives or dies on whether the system can access the right context. U.S. companies often have the data, but it’s fragmented across tools: CRM, ticketing, docs, internal wikis, billing, product logs.

The fastest path is not “connect everything.” It’s to create a thin, approved context layer:

- A curated set of knowledge articles and policy docs

- The last N customer interactions (tickets, chats, emails)

- A limited customer profile (plan, status, renewal date)
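A thin context layer can start as one function that assembles those three pieces and nothing else. In this sketch, plain Python lists and dicts stand in for the knowledge base, ticketing system, and CRM; `build_context`, the profile allowlist, and the default of five recent interactions are assumptions.

```python
from dataclasses import dataclass

# Only these profile fields reach the model (hypothetical allowlist).
PROFILE_FIELDS = ("plan", "status", "renewal_date")


@dataclass
class Interaction:
    timestamp: str  # ISO 8601 in UTC, same format throughout, so string order is time order
    channel: str    # "ticket", "chat", or "email"
    summary: str


def build_context(approved_articles: list, interactions: list,
                  profile: dict, last_n: int = 5) -> dict:
    """Assemble the approved context for one request and nothing more."""
    recent = sorted(interactions, key=lambda i: i.timestamp)[-last_n:]
    return {
        "knowledge": list(approved_articles),
        "recent_interactions": [f"[{i.channel}] {i.summary}" for i in recent],
        # Fields not on the allowlist (payment details, notes, etc.) are dropped.
        "profile": {k: profile[k] for k in PROFILE_FIELDS if k in profile},
    }


history = [Interaction(f"2025-10-0{d}T09:00:00Z", "ticket", f"issue {d}") for d in range(1, 8)]
ctx = build_context(
    ["kb-12: Resetting your password"],
    history,
    {"plan": "Pro", "status": "active", "renewal_date": "2026-01-15", "card_last4": "4242"},
    last_n=3,
)
print(ctx["recent_interactions"])  # ['[ticket] issue 5', '[ticket] issue 6', '[ticket] issue 7']
print(ctx["profile"])  # {'plan': 'Pro', 'status': 'active', 'renewal_date': '2026-01-15'}
```

The allowlist is the point: `card_last4` never reaches the model because nobody added it, which is easier to defend than trying to strip fields after the fact.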

RAG isn’t a buzzword—it’s a reliability strategy

If your AI needs to answer questions about your business, a strong pattern is retrieval-augmented generation (RAG): fetch relevant internal content, then generate an answer grounded in it.

For U.S. SaaS support and customer success, RAG usually reduces:

- Hallucinations (made-up product details)

- Inconsistent policy answers

- “Confidently wrong” edge cases

But RAG still needs governance:

- Approved sources only (no random Google snippets)

- Freshness rules (what happens when pricing changes?)

- Citations (so agents can verify quickly)
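Here is a deliberately small sketch of that pattern with all three governance rules applied at retrieval time. Word-overlap scoring stands in for a real embedding search, and `Doc`, the source allowlist, and the 90-day freshness window are hypothetical. The model call is left out: `grounded_prompt` returns the text you would send to whichever model you use.

```python
from dataclasses import dataclass
from datetime import date, timedelta

APPROVED_SOURCES = {"help_center", "billing_policy"}  # hypothetical allowlist
MAX_AGE = timedelta(days=90)                           # hypothetical freshness window


@dataclass
class Doc:
    doc_id: str
    source: str
    updated: date
    text: str


def retrieve(query: str, docs: list, today: date, k: int = 2) -> list:
    """Return up to k approved, fresh docs, ranked by word overlap with the query."""
    words = set(query.lower().split())
    eligible = [d for d in docs
                if d.source in APPROVED_SOURCES and today - d.updated <= MAX_AGE]
    scored = [(len(words & set(d.text.lower().split())), d) for d in eligible]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [d for _, d in ranked[:k]]


def grounded_prompt(query: str, hits: list) -> str:
    """Build a prompt that limits the answer to the retrieved, citable sources."""
    sources = "\n".join(f"[{d.doc_id}] {d.text}" for d in hits)
    return ("Answer using only the sources below and cite their IDs in brackets. "
            "If the sources don't cover the question, say so and escalate.\n\n"
            f"Sources:\n{sources}\n\nQuestion: {query}")


today = date(2025, 11, 1)
docs = [
    Doc("kb-1", "help_center", date(2025, 10, 20), "How to update the billing card on your account"),
    Doc("kb-2", "billing_policy", date(2024, 1, 5), "Old refund rules for billing disputes"),
    Doc("blog-9", "marketing_blog", date(2025, 10, 30), "Billing made simple"),
]
hits = retrieve("how do I update my billing card", docs, today)
print([d.doc_id for d in hits])  # ['kb-1']: kb-2 is stale, blog-9 is not an approved source
```

Putting the allowlist and freshness filter before ranking means a stale pricing page can’t win just because it matches the query well.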

Guardrails: policy, privacy, and compliance aren’t optional in the U.S.

In U.S. deployments, the fastest way to kill an AI project is to ignore security until after the pilot “works.” As soon as legal, security, or procurement gets involved, everything stops.

Instead, decide up front:

What data can the AI touch?

Create a simple classification:

- Public: marketing site, public docs

- Internal: SOPs, runbooks, internal knowledge

- Sensitive: customer PII, payment info, health/education data

Then implement enforcement:

- Redaction or tokenization for sensitive fields

- Role-based access controls

- Audit logs for prompts and outputs
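As a rough illustration of the enforcement step, the sketch below swaps sensitive values for stable tokens before a prompt leaves your system and serializes an audit record of what was sent. The two regular expressions (email addresses and 13 to 16 digit card-like numbers) are placeholders, not real PII detection, and `redact`, `audit_record`, and the in-memory `vault` dict are hypothetical. Role-based access controls would sit in front of all of this and are not shown.

```python
import hashlib
import json
import re
from datetime import datetime, timezone

# Placeholder patterns: real PII detection needs much broader coverage than this.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),  # 13-16 digits, optional separators
}


def _tokenize(label: str, value: str, vault: dict) -> str:
    # A stable token: the same value always maps to the same placeholder.
    token = f"<{label}_{hashlib.sha256(value.encode()).hexdigest()[:8]}>"
    vault[token] = value  # the original stays server-side, never in the prompt
    return token


def redact(text: str, vault: dict) -> str:
    """Replace sensitive values with tokens before text is sent to a model."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(lambda m, label=label: _tokenize(label, m.group(), vault), text)
    return text


def audit_record(user: str, data_class: str, prompt: str, output: str) -> str:
    """Serialize one prompt/output pair as a JSON line for an append-only audit log."""
    return json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "data_class": data_class,  # "public", "internal", or "sensitive"
        "prompt": prompt,          # log the redacted prompt, not the raw one
        "output": output,
    })


vault = {}
safe = redact("Refund jane.doe@example.com, card 4242 4242 4242 4242", vault)
print(safe)  # raw email and card number now appear only as <EMAIL_x> and <CARD_x> tokens
print(audit_record("agent-7", "sensitive", safe, "Draft: refund approved pending review"))
```

Because tokens are stable, the same email always maps to the same placeholder, so the model can still tell that two messages concern one customer without seeing who it is.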

What can the AI do?

Think in terms of permissions, like any other software:

- Can it draft?

- Can it send?

- Can it refund?

- Can it change account settings?

For most U.S. digital services, a smart early boundary is: AI can draft and recommend; humans execute account-impacting actions until you’ve proven reliability.
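Those permissions can be modeled like any other access-control check. In this hypothetical sketch the assistant’s role is granted only draft and recommend, and anything account-impacting raises an error the workflow can turn into a hand-off to a person; the role names and grants are illustrative.

```python
from enum import Enum, auto


class Action(Enum):
    DRAFT = auto()
    RECOMMEND = auto()
    SEND = auto()
    REFUND = auto()
    CHANGE_SETTINGS = auto()


# Hypothetical early-stage grants: the AI can draft and recommend, people execute.
ROLE_GRANTS = {
    "ai_assistant": {Action.DRAFT, Action.RECOMMEND},
    "support_agent": {Action.DRAFT, Action.RECOMMEND, Action.SEND, Action.REFUND},
    "support_lead": set(Action),
}


class PermissionDenied(Exception):
    pass


def is_allowed(role: str, action: Action) -> bool:
    # Unknown roles get no grants at all.
    return action in ROLE_GRANTS.get(role, set())


def authorize(role: str, action: Action) -> None:
    """Raise instead of acting when the role lacks the grant, so callers can escalate."""
    if not is_allowed(role, action):
        raise PermissionDenied(f"{role} may not {action.name.lower()}; route to a human")


authorize("ai_assistant", Action.DRAFT)  # allowed, returns None
try:
    authorize("ai_assistant", Action.REFUND)
except PermissionDenied as err:
    print(err)  # ai_assistant may not refund; route to a human
```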

The playbook for avoiding common AI implementation pitfalls

Here are the failure patterns that show up again and again—and how to avoid them.

Pitfall 1: Measuring vibes instead of outcomes

If your KPI is “people like it,” you’ll get a month of excitement and no renewal. Pick metrics that finance and operations respect:

- Cost per contact

- First-response time

- Conversion rate

- Time-to-resolution

- Backlog size

- Churn or retention for accounts touched by AI-assisted workflows
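A before/after report only persuades finance and operations if it knows which direction counts as better for each metric. This sketch assumes you already have baseline and pilot values per metric; the metric names and the `LOWER_IS_BETTER` set are illustrative, and the sample numbers are invented to show the format, not benchmarks.

```python
# Metrics where a decrease counts as an improvement (illustrative names).
LOWER_IS_BETTER = {"cost_per_contact", "first_response_min", "time_to_resolution_h",
                   "backlog_size", "churn_rate"}


def kpi_report(baseline: dict, pilot: dict) -> list:
    """One line per metric: before, after, percent change, and whether it improved."""
    lines = []
    for name, before in baseline.items():
        after = pilot[name]
        if before == 0:
            lines.append(f"{name}: baseline is 0, percent change undefined")
            continue
        change = (after - before) / before * 100
        improved = change < 0 if name in LOWER_IS_BETTER else change > 0
        verdict = "better" if improved else "worse or flat"
        lines.append(f"{name}: {before} -> {after} ({change:+.1f}%, {verdict})")
    return lines


# Made-up numbers, only to show the output format.
report = kpi_report(
    {"first_response_min": 180, "cost_per_contact": 6.00, "conversion_rate": 0.020},
    {"first_response_min": 45, "cost_per_contact": 5.10, "conversion_rate": 0.021},
)
print("\n".join(report))
```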

Pitfall 2: Shipping AI without brand and policy alignment

AI-generated messages that don’t match your voice can erode trust quickly—especially in customer communication. Fix this with:

- A style guide the model is instructed to follow

- Approved phrases for sensitive moments (refunds, outages, account security)

- “Never say” rules
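The “never say” rules and approved phrases can be checked mechanically before a draft is sent. The phrases, topic keys, and `check_voice` helper below are invented for illustration.

```python
from typing import Optional

# Illustrative lists; your brand and legal teams own the real ones.
NEVER_SAY = ["guaranteed", "it's not our fault", "as an ai"]
APPROVED_PHRASES = {
    "refund": "We've started your refund",
    "outage": "We're aware of the issue and are working on a fix",
    "account_security": "For your protection, we've secured your account",
}


def check_voice(draft: str, topic: Optional[str] = None) -> list:
    """Flag banned phrases and missing approved wording for sensitive topics."""
    text = draft.lower()
    flags = [f"banned phrase: {p!r}" for p in NEVER_SAY if p in text]
    approved = APPROVED_PHRASES.get(topic) if topic else None
    if approved and approved.lower() not in text:
        flags.append(f"{topic} reply must include approved phrase: {approved!r}")
    return flags


print(check_voice("Refunds are guaranteed within 2 days.", topic="refund"))
print(check_voice("We've started your refund; expect it in 5-7 business days.", topic="refund"))  # []
```

Substring matching is crude (it misses typographic apostrophes and paraphrases), so treat an empty result as “no obvious violations,” not as approval.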

Pitfall 3: Letting the model be the product

When the AI is the entire interface, you get unpredictable results. Strong AI products put structure around the model:

- Forms that constrain inputs

- Templates for outputs

- Required citations

- Confidence thresholds

- Escalation paths

Another snippet-worthy rule: The model is a component; the workflow is the product.

Pitfall 4: Underestimating change management

The hard part isn’t technical—it’s adoption. Your team needs to trust the tool and know when not to use it.

What works in practice:

- Start with a champion team (5–15 users)

- Create a shared channel to post “good outputs” and “bad outputs”

- Run weekly calibration sessions during the first month, reviewing sample outputs together so the team agrees on what “good” looks like

- Reward usage that improves customer outcomes (not just “using AI”)

High-impact AI use cases in U.S. digital services (right now)

If you’re deciding where to place your first bet, these use cases tend to produce fast payback without requiring a total platform rebuild.

Customer support: drafts, summaries, and smarter routing

- Draft replies grounded in policy docs and prior interactions

- Summarize long ticket histories into a few bullet points

- Classify and route tickets by intent, sentiment, and urgency

This matters in the U.S. market because customers expect speed plus accuracy. AI helps you meet both when you keep humans in the loop early.

Customer success: proactive outreach that doesn’t feel spammy

- Generate quarterly business review (QBR) notes from usage data and call transcripts

- Suggest health risks (“usage dropped 30% in 2 weeks”) with next actions

- Personalize check-ins based on lifecycle stage
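The health-risk example is simple arithmetic over usage data. A minimal sketch, assuming you have daily active-user counts per account; the 14-day windows and 30% threshold come from the example above, while `usage_drop`, `health_flag`, and the suggested action text are hypothetical.

```python
from typing import Optional


def usage_drop(daily_usage: list, window: int = 14) -> float:
    """Percent drop of the last `window` days versus the `window` days before them."""
    if len(daily_usage) < 2 * window:
        raise ValueError("need at least two full windows of daily data")
    previous = sum(daily_usage[-2 * window:-window])
    recent = sum(daily_usage[-window:])
    if previous == 0:
        return 0.0  # nothing to drop from
    return (previous - recent) / previous * 100


def health_flag(account: str, daily_usage: list, threshold: float = 30.0) -> Optional[str]:
    """Return a risk note with a next action, or None when usage looks stable."""
    drop = usage_drop(daily_usage)
    if drop >= threshold:
        return f"{account}: usage dropped {drop:.0f}% in 2 weeks; schedule a check-in call"
    return None


# 14 days at 50 active users, then 14 days at 30.
print(health_flag("acme", [50] * 14 + [30] * 14))
# acme: usage dropped 40% in 2 weeks; schedule a check-in call
```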

Sales and marketing ops: faster cycles, tighter targeting

- Enrich lead notes and produce tailored outreach drafts

- Summarize calls into CRM-ready fields

- Generate campaign variants aligned to brand rules

Back office: document processing and internal knowledge search

- Extract fields from contracts and invoices

- Summarize vendor security questionnaires into action lists

- Provide internal “how do I…?” answers grounded in SOPs

A 30-day rollout plan that doesn’t implode

If you want a realistic approach for a U.S. SaaS or service business, here’s a simple month-one plan.

Week 1: Pick the job and baseline the metric

- Choose one workflow and define success

- Capture current performance (time, cost, quality)

Week 2: Build the context and guardrails

- Identify approved knowledge sources

- Define redaction rules and access controls

- Create review and escalation steps

Week 3: Pilot with a small group

- Train users on “when to trust” vs “when to rewrite”

- Collect failures and categorize them (missing context vs bad instruction vs policy conflict)

Week 4: Expand scope carefully

- Add 2–3 more ticket types or workflows

- Introduce confidence thresholds for partial automation

- Report results with before/after numbers

If you can’t show improvement in 30 days, you either picked the wrong job, didn’t provide the right context, or didn’t structure the workflow.
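Week 3’s failure categories are worth tallying rather than eyeballing, because the largest bucket tells you whether to fix context, instructions, or policy next. The three categories come from the plan above; the `Failure` record and sample entries are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass

CATEGORIES = ("missing_context", "bad_instruction", "policy_conflict")


@dataclass
class Failure:
    ticket_id: str
    category: str
    note: str

    def __post_init__(self):
        # Reject free-form labels so the tally stays comparable week to week.
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category}")


def failure_breakdown(failures: list) -> list:
    """Count failures per category, most common first, keeping zero counts visible."""
    counts = Counter({c: 0 for c in CATEGORIES})
    counts.update(f.category for f in failures)
    return counts.most_common()


log = [
    Failure("T-101", "missing_context", "no doc covers annual-plan proration"),
    Failure("T-102", "missing_context", "refund policy page is outdated"),
    Failure("T-107", "policy_conflict", "draft offered a credit above the limit"),
]
print(failure_breakdown(log))
# [('missing_context', 2), ('policy_conflict', 1), ('bad_instruction', 0)]
```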

Where this is headed in 2026 (and what to do now)

U.S. digital services are moving toward AI as a standard layer: customer communication, internal operations, analytics, and product experiences. The teams that win won’t be the ones with the flashiest chatbot—they’ll be the ones that can deploy reliable AI features repeatedly, safely, and with measurable impact.

If you’re building toward that future, start small but build with discipline: pick one measurable job, treat AI like a product, invest early in data access and guardrails, and run a pilot that produces numbers you can defend.

What would happen to your customer experience—and your margins—if one workflow in your business got 30% faster by January?