Agentic AI Foundation and AGENTS.md point to shared rules for AI agents. Here’s how U.S. SaaS teams can ship safer, auditable automation.

Agentic AI Foundation: Open Source Rules for AI Agents

Most companies are about to repeat the same mistake they made with APIs a decade ago: they’ll build “AI agents” that work in a demo, then crumble in production because nobody agreed on the basics—how agents should behave, how they should be documented, and how they should be reviewed.

That’s why the news that OpenAI co-founded the Agentic AI Foundation and donated a spec called AGENTS.md matters to anyone building AI-powered digital services in the United States. Even without the full text of the original announcement in hand, the signal is clear: major U.S. AI players are putting weight behind open-source infrastructure for agentic systems.

This post sits in our series, How AI Is Powering Technology and Digital Services in the United States, and it’s focused on one practical question: what changes for U.S. SaaS teams, startups, and digital service providers when “agentic AI” starts getting shared standards?

What the Agentic AI Foundation signals for U.S. digital services

Answer first: The Agentic AI Foundation is a bet that shared, open standards will speed up safe deployment of AI agents—especially across U.S. SaaS and enterprise services—by making agent behavior easier to understand, test, and govern.

Agentic AI isn’t just chat. It’s software that can plan, call tools, make decisions, and take actions across systems like CRMs, ticketing platforms, data warehouses, calendars, billing, and internal admin tools.

Here’s what tends to break when standards don’t exist:

- No consistent “contract” for agent behavior: one team’s agent follows strict approval rules, another silently executes.

- Hidden operational risk: logs and decision trails vary wildly, making post-incident review painful.

- Vendor lock-in by accident: teams encode behavior in proprietary prompts and glue code that’s hard to port.

- Security gaps: the agent gets broader permissions than intended because the permission model isn’t documented.

A foundation pushing open artifacts (like a shared documentation pattern) is a classic move in U.S. tech: reduce fragmentation, build a developer commons, and let the market compete above the plumbing.

Agentic AI, explained like you’ll ship it next quarter

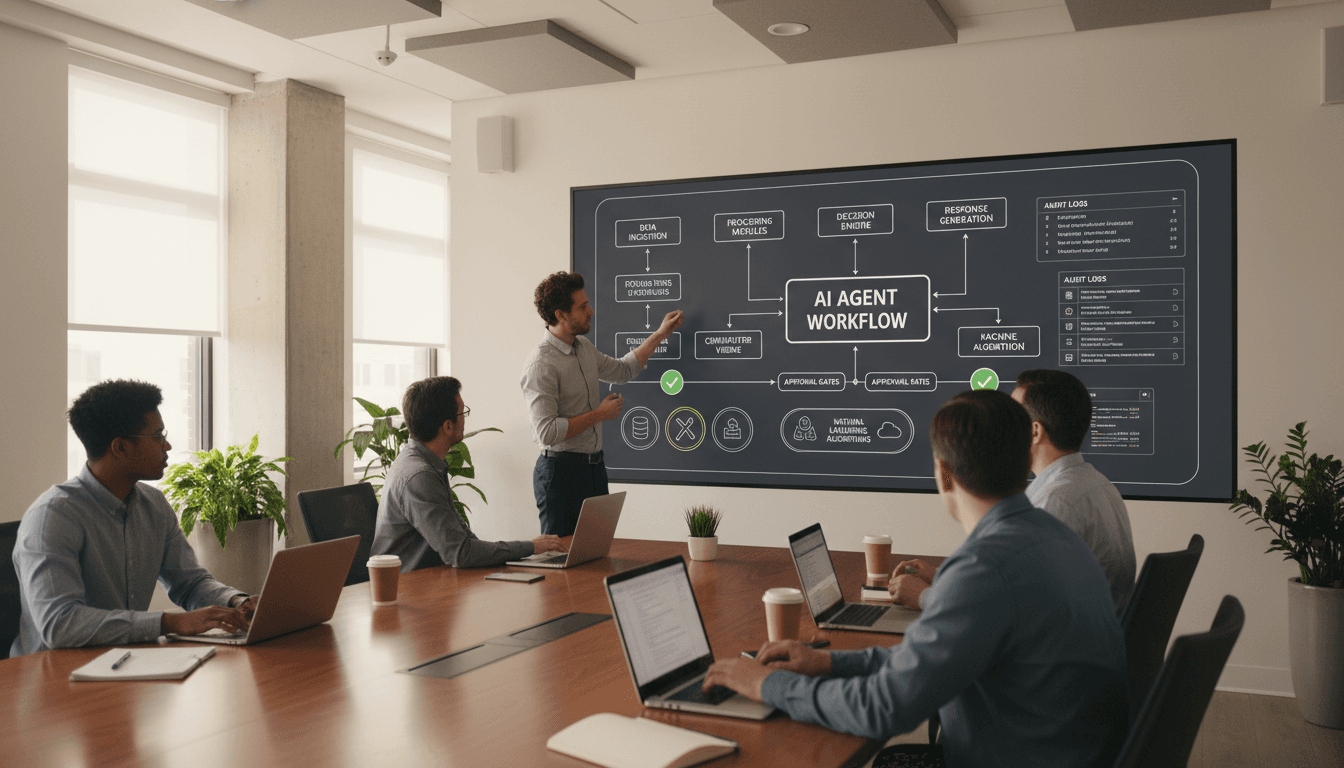

Answer first: An AI agent is a workflow-driven system that turns a goal into a sequence of actions, using tools and guardrails, with logging and review baked in.

A helpful way to think about agentic AI in digital services is a three-layer stack:

1) The “brain”: models + planning

The model interprets intent, drafts a plan, and chooses actions. Planning can be explicit (step lists) or implicit (tool selection). In production, the plan needs constraints:

- What counts as “done”

- When to ask for confirmation

- When to escalate to a human

- What tools are allowed
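One way to make those constraints enforceable is to have the runtime check every step the model proposes before anything runs. The sketch below is illustrative only. `PlanConstraints`, `next_move`, the step cap standing in for “done,” and the 0.7 confidence threshold are all hypothetical names and values, not part of any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanConstraints:
    """Hypothetical per-agent limits on what the planner may do."""
    allowed_tools: frozenset
    confirm_tools: frozenset     # tools that need user confirmation first
    max_steps: int = 10          # crude stand-in for "what counts as done"
    min_confidence: float = 0.7  # below this, hand off to a human


def next_move(c: PlanConstraints, tool: str, step: int, confidence: float) -> str:
    """Decide what the runtime does with the model's proposed tool call."""
    if tool not in c.allowed_tools:
        return "reject"      # not on the allowlist: never execute
    if step >= c.max_steps or confidence < c.min_confidence:
        return "escalate"    # out of step budget or unsure: route to a human
    if tool in c.confirm_tools:
        return "confirm"     # allowed, but ask before acting
    return "execute"


constraints = PlanConstraints(
    allowed_tools=frozenset({"search_kb", "send_email"}),
    confirm_tools=frozenset({"send_email"}),
)
print(next_move(constraints, "send_email", step=1, confidence=0.9))  # confirm
```

The allowlist check comes first on purpose: a highly confident model still can’t call a tool nobody approved.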

2) The “hands”: tools and integrations

Tools are where revenue and risk show up. In U.S. SaaS, the common tool surface looks like:

- CRM updates (Salesforce/HubSpot-style objects)

- Customer support actions (ticket status, refunds, macros)

- Payments and billing (invoices, credits)

- Scheduling and messaging (email/calendar/Slack-style)

- Data queries (analytics tables, dashboards)

If tools aren’t documented with consistent expectations, agents will behave differently across teams and vendors.
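A lightweight way to give tools consistent expectations is a registry where each tool declares its contract, and every proposed call is checked against it. This is a minimal sketch under assumed names: `ToolSpec`, `REGISTRY`, and the two example tools are hypothetical stand-ins for whatever your integrations actually expose.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    """A documented contract for one tool the agent may call."""
    name: str
    description: str
    writes: bool                # True if the tool changes external state
    required_args: tuple


# Hypothetical tools; real names depend on your integrations.
REGISTRY = {
    spec.name: spec
    for spec in (
        ToolSpec("crm.get_account", "Read one account record", False, ("account_id",)),
        ToolSpec("billing.issue_credit", "Credit an invoice", True, ("invoice_id", "amount_usd")),
    )
}


def validate_call(name: str, args: dict) -> list:
    """Return contract violations for a proposed call; an empty list means it conforms."""
    spec = REGISTRY.get(name)
    if spec is None:
        return [f"unknown tool: {name}"]
    problems = [f"missing arg: {a}" for a in spec.required_args if a not in args]
    problems += [f"unexpected arg: {a}" for a in args if a not in spec.required_args]
    return problems


def write_tools() -> set:
    """Tools with side effects, i.e. the ones that deserve the closest review."""
    return {name for name, spec in REGISTRY.items() if spec.writes}
```

The `writes` flag is the useful part for reviewers: it separates read-only lookups from the calls that can actually move money or data.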

3) The “seatbelts”: policy, evaluation, and audit

This is where most “agent demos” fail in real life.

Production-grade agentic systems need:

- Permission boundaries (least privilege)

- Human approvals for sensitive actions

- Audit logs that capture tool calls and rationale

- Evaluations (offline test suites + online monitoring)

This is the exact area where a shared standard like AGENTS.md can help: not by making agents smarter, but by making them predictable and reviewable.
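For the audit-log requirement, one common shape is one JSON object per line, written for every tool call along with the model’s stated rationale. The sketch below assumes a local JSONL file purely for illustration; the field names are hypothetical, and a production system would more likely ship these records to a log pipeline.

```python
import json
from datetime import datetime, timezone


def audit_record(agent: str, tool: str, args: dict, rationale: str, outcome: str) -> str:
    """Serialize one tool call as a single JSON line."""
    return json.dumps(
        {
            "ts": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "tool": tool,
            "args": args,
            "rationale": rationale,  # the model's stated reason, kept for review
            "outcome": outcome,
        },
        sort_keys=True,
    )


def log_tool_call(path: str, **fields) -> None:
    """Append one record; append mode means this function only ever adds lines."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(audit_record(**fields) + "\n")
```

Usage: `log_tool_call("audit.jsonl", agent="support-agent", tool="crm.get_account", args={"account_id": "A1"}, rationale="check plan tier", outcome="ok")`.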

Why AGENTS.md matters (even if you never use OpenAI)

Answer first: AGENTS.md is valuable because it pushes teams toward a consistent, readable “owner’s manual” for agents—so anyone can understand what the agent is allowed to do, how it should be tested, and how changes should be reviewed.

If you’ve worked in software long enough, you’ve seen this pattern:

- README.md explains a repo.

- CONTRIBUTING.md explains how to change it.

- SECURITY.md explains disclosure and security posture.

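AGENTS.md fits that family. In its current form it is a plain Markdown file that gives AI agents (today, mostly coding agents) the context and instructions they need to work in a project, and the same idea extends naturally to making an agent’s allowed behavior explicit.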

A well-designed AGENTS.md-style file (whatever the final community convention becomes) should answer questions like:

- Purpose: What business job does the agent perform?

- Scope: What systems can it touch? What can’t it touch?

- Tools: Which tool calls exist, with constraints and examples.

- Guardrails: When does it require approval? What actions are blocked?

- Data handling: What customer data is read/written? Retention expectations.

- Evaluation: What tests must pass before deployment?

- Fallbacks: What happens when the model is uncertain or tools fail?
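If a team adopts these questions as required sections, a tiny CI check can keep the file honest. This is a sketch under an explicit assumption: the section names below mirror the checklist above and are a team convention, not something the AGENTS.md format mandates. `missing_sections` is a hypothetical helper that only looks at Markdown ATX headings (`#`, `##`, …).

```python
import re

# Team convention mirroring the checklist above, not a spec requirement.
REQUIRED_SECTIONS = ["Purpose", "Scope", "Tools", "Guardrails",
                     "Data handling", "Evaluation", "Fallbacks"]

# An ATX heading line: 1-6 '#', whitespace, text, optional closing '#'s.
HEADING = re.compile(r"^#{1,6}[ \t]+(.+?)[ \t#]*$", flags=re.MULTILINE)


def missing_sections(markdown: str) -> list:
    """Return required sections that don't appear as headings (case-insensitive)."""
    found = {m.group(1).strip().lower() for m in HEADING.finditer(markdown)}
    return [s for s in REQUIRED_SECTIONS if s.lower() not in found]


doc = """# Refund agent

## Purpose
Resolve refund requests within policy.

## Scope
Read orders; issue credits up to the approval limits.

## Tools
- billing.issue_credit
"""
print(missing_sections(doc))
# ['Guardrails', 'Data handling', 'Evaluation', 'Fallbacks']
```

Failing the build on a non-empty result is a cheap way to make “documented” a precondition for “deployed.”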

A simple rule I like: if a non-author engineer can’t predict the agent’s next action from its documentation, it’s not production-ready.

For U.S. organizations building AI-powered customer communication and automation, that kind of clarity reduces risk in two ways: fewer incidents, and faster security/legal reviews.

Open-source agent infrastructure is a growth play for U.S. SaaS

Answer first: Open standards around AI agents make it cheaper and faster for U.S. startups and SaaS providers to ship reliable automation—because they can reuse governance patterns, documentation templates, and evaluation methods.

Open-source infrastructure isn’t charity. It’s market-making.

When a shared approach exists, three things happen quickly:

Faster vendor switching (and fewer dead-end builds)

Teams stop hardcoding their product around one provider’s agent conventions. They can migrate models or orchestration layers while keeping the same “behavior contract.” That’s especially useful in 2025 as model performance, price, and compliance features keep shifting.

Better enterprise trust = shorter sales cycles

If you sell into U.S. enterprises, you know the blockers:

- security review

- compliance questionnaires

- change management

- auditability

A standard, expected agent documentation file helps prospects evaluate risk quickly. It turns “trust us” into “here’s how it works.”

More competition on product value

Once baseline governance becomes common, companies compete on what customers actually pay for:

- better user experience

- domain accuracy

- workflow coverage

- integrations

- support and reliability

That’s how you get more AI-powered digital services shipping across the U.S., not just more prototypes.

Practical examples: where agentic AI pays off in digital services

Answer first: The best agentic AI wins come from high-volume, rules-aware workflows, where the agent can handle roughly 80% of cases on its own and route the tricky 20% to humans.

Here are three examples I’ve seen teams implement successfully (or at least sanely):

Customer support: “resolution agent” with approval gates

A support agent can:

- summarize a ticket thread

- classify intent (refund, bug, how-to)

- fetch account status via tools

- propose a resolution and draft a response

- execute approved actions (refund within policy, replacement order)

The trick is policy encoding:

- Refunds under $50: auto-approve

- Refunds $50–$200: require human click

- Refunds over $200: escalate + attach evidence

This structure is easy to describe in an AGENTS.md-style spec and easy to test.
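Because the policy is three numeric bands, it maps directly onto a small function plus boundary tests. This is a sketch, not a reference implementation. The function name and lane labels are hypothetical, and the policy doesn’t say which band an amount of exactly $50 or $200 belongs to, so the code assumes both fall in the human-approval band. `Decimal` avoids binary floating-point rounding on currency.

```python
from decimal import Decimal

# Thresholds from the policy above. Boundary handling is an assumption:
# exactly $50 and exactly $200 both fall in the "human click" band.
AUTO_APPROVE_BELOW = Decimal("50")
HUMAN_APPROVAL_UP_TO = Decimal("200")


def route_refund(amount_usd: Decimal) -> str:
    """Map a refund amount to the approval lane it requires."""
    if amount_usd < 0:
        raise ValueError("refund amount must be non-negative")
    if amount_usd < AUTO_APPROVE_BELOW:
        return "auto_approve"
    if amount_usd <= HUMAN_APPROVAL_UP_TO:
        return "human_approval"
    return "escalate_with_evidence"


# Boundary cases are exactly where policy bugs hide, so test them explicitly.
for amount, lane in [("49.99", "auto_approve"), ("50", "human_approval"),
                     ("200", "human_approval"), ("200.01", "escalate_with_evidence")]:
    assert route_refund(Decimal(amount)) == lane
```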

Sales operations: “CRM hygiene agent” that cleans without breaking things

These agents do boring work that impacts revenue forecasting:

- dedupe leads

- normalize titles/industries

- set next steps from meeting notes

- nudge reps when opportunities stall

But the agent must be constrained:

- cannot change pipeline stage without explicit approval

- must log all field edits and prior values

- must run only on assigned territories
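Those three constraints can be enforced at the single point where the agent writes to the CRM. A minimal sketch, assuming records are plain dicts rather than a real CRM API; `apply_edit`, `PROTECTED_FIELDS`, and the return strings are hypothetical.

```python
PROTECTED_FIELDS = {"pipeline_stage"}  # changes here need explicit approval


def apply_edit(record: dict, field: str, new_value, *,
               agent_territories: set, audit: list, approved: bool = False) -> str:
    """Apply one field edit under the hygiene agent's constraints.

    Returns "applied", "out_of_territory", or "needs_approval".
    Every applied edit is logged with its prior value so it can be reverted.
    """
    if record.get("territory") not in agent_territories:
        return "out_of_territory"
    if field in PROTECTED_FIELDS and not approved:
        return "needs_approval"
    audit.append({"record_id": record["id"], "field": field,
                  "old": record.get(field), "new": new_value})
    record[field] = new_value
    return "applied"
```

Logging the old value before writing the new one is what makes a bad batch of “cleanup” reversible.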

IT and internal ops: “access request agent” with strict permissions

This is where U.S. companies feel pressure in Q4 and year-end transitions (exactly where we are now): onboarding, offboarding, role changes.

An internal agent can:

- gather required info

- open tickets in the ITSM tool

- check policy (role-based access)

- prepare a request bundle for manager approval

Here, documentation and audit logs aren’t “nice to have.” They’re the product.
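The key property of this agent is that it prepares requests and never grants access itself. Here is a minimal sketch of that split, assuming a simple role-to-system mapping; `ROLE_POLICY`, the system names, and `prepare_access_bundle` are hypothetical, and real mappings would come from your identity or ITSM tooling.

```python
# Hypothetical role-to-system policy; real mappings live in your IAM/ITSM tooling.
ROLE_POLICY = {
    "support_agent": {"ticketing", "chat"},
    "sales_rep": {"crm", "chat", "call_recording"},
}


def prepare_access_bundle(employee: str, role: str, requested: set, manager: str) -> dict:
    """Build a request for manager approval. The agent prepares; it never grants."""
    allowed = ROLE_POLICY.get(role, set())  # unknown role: nothing is pre-approved
    return {
        "employee": employee,
        "role": role,
        "approver": manager,
        "within_policy": sorted(requested & allowed),
        "needs_exception": sorted(requested - allowed),
        "status": "pending_manager_approval",
    }
```

Splitting the request into “within policy” and “needs exception” lets the manager approve the routine part quickly and focus on the part that actually needs judgment.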

How to adopt agent standards in your org (a 30-day plan)

Answer first: You don’t need a massive platform rewrite; you need a consistent contract for agent behavior, plus testable guardrails.

Here’s a practical rollout plan I recommend for U.S. SaaS and digital service teams:

Week 1: Pick one workflow and define “allowed actions”

Choose a workflow with clear ROI and low blast radius (ticket triage, FAQ drafting, lead enrichment). Write down:

- tools involved

- actions the agent may take

- actions that require approval

- actions that are prohibited
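The Week 1 list can live as data rather than prose, which makes the “prohibited” column enforceable. A minimal sketch, assuming a ticket-triage pilot; the action names and the `decide` helper are hypothetical. The design choice worth copying is the default: any action nobody wrote down is treated as prohibited.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_APPROVAL = "needs_approval"
    PROHIBITED = "prohibited"


# Hypothetical policy for a ticket-triage pilot.
ACTION_POLICY = {
    "ticket.add_tag": Decision.ALLOW,
    "ticket.draft_reply": Decision.ALLOW,
    "ticket.send_reply": Decision.NEEDS_APPROVAL,
    "ticket.close": Decision.NEEDS_APPROVAL,
    "account.delete": Decision.PROHIBITED,
}


def decide(action: str) -> Decision:
    """Look up an action; anything not listed is prohibited (default deny)."""
    return ACTION_POLICY.get(action, Decision.PROHIBITED)
```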

Week 2: Write an AGENTS.md-style spec

Even if you don’t follow a final community template yet, create a single file that includes:

- purpose + scope

- tool list with constraints

- approval thresholds

- logging requirements

- data handling notes

Treat it as a change-controlled artifact.
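Version control and code review usually cover change control on their own. If you also want the agent runtime to refuse to start against an unreviewed spec, one option is to pin a content hash recorded at approval time. This is a sketch under assumptions: `spec_fingerprint` and `check_spec` are hypothetical helpers, and where the approved hash is stored is left to your deployment setup.

```python
import hashlib
from pathlib import Path


def spec_fingerprint(text: str) -> str:
    """SHA-256 of the spec's text, recorded when a reviewer approves a version."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def check_spec(path: Path, approved_fingerprint: str) -> None:
    """Refuse to deploy if the spec differs from the reviewed version."""
    actual = spec_fingerprint(path.read_text(encoding="utf-8"))
    if actual != approved_fingerprint:
        raise RuntimeError(
            f"{path} changed since review (sha256 {actual[:12]}...); re-approve before deploying"
        )
```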

Week 3: Build an evaluation harness before you expand scope

At minimum:

- 50–200 historical cases as a replay set

- pass/fail checks for policy compliance

- “tool call unit tests” for risky actions

If you can’t test it, you can’t ship it.
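A replay harness doesn’t need to be elaborate to be useful. The sketch below assumes the agent can be called as a plain function that returns its routing decision and the actions it would take; `ReplayCase`, `run_replay`, and that calling convention are hypothetical. A case passes only if the route matches and no policy-forbidden action appears.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ReplayCase:
    case_id: str
    ticket: dict                  # the historical input, replayed as-is
    forbidden_actions: frozenset  # policy: actions the agent must never take here
    expected_route: str           # e.g. "auto_approve" or "human_approval"


def run_replay(agent: Callable[[dict], tuple], cases: list) -> dict:
    """Replay historical cases and report policy-compliance results."""
    failures = []
    for case in cases:
        route, actions = agent(case.ticket)
        if route != case.expected_route or case.forbidden_actions & set(actions):
            failures.append(case.case_id)
    passed = len(cases) - len(failures)
    return {"total": len(cases), "passed": passed, "failures": failures,
            "pass_rate": passed / len(cases) if cases else 0.0}
```

Usage: load the 50–200 historical cases, call `run_replay(my_agent, cases)`, and block the release if `pass_rate` drops below the bar you agreed on.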

Week 4: Add monitoring and a human-in-the-loop lane

Ship with:

- error budgets (what failure rate is acceptable)

- escalation paths (who gets paged)

- sampling review (e.g., review 5% of actions weekly)

This is where teams learn the real edge cases fast.
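Two of those pieces reduce to a few lines each. The sketch below assumes every agent action has a stable ID. Hashing the ID gives a deterministic sample of roughly 5% (matching the example above), so the same action is always either in or out of review. The 2% error budget is a placeholder value, not a recommendation, and both helper names are hypothetical.

```python
import hashlib


def in_review_sample(action_id: str, rate: float = 0.05) -> bool:
    """Deterministically select about `rate` of actions for human review."""
    digest = hashlib.sha256(action_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # uniform in [0, 2**64)
    return bucket < rate * 2**64


def within_error_budget(failures: int, total: int, budget: float = 0.02) -> bool:
    """True while the observed failure rate stays at or under the agreed budget."""
    return total == 0 or failures / total <= budget
```

Deterministic sampling matters for audits: a reviewer can later confirm exactly which actions were supposed to be sampled.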

Common questions teams ask about agentic AI

Answer first: Most concerns come down to control, compliance, and cost—and you can address all three with strong agent contracts and evaluation.

“Will agents replace our support or ops teams?”

They’ll change the job. The strongest pattern is automation for the repeatable 80%, and better tooling for humans on the remaining 20%. If you remove humans entirely from sensitive workflows, you’re usually creating a future incident.

“Are open standards risky?”

No—undocumented behavior is risky. Open conventions tend to improve security because expectations are clearer and review is easier.

“What should we standardize first?”

Standardize permissions, approvals, logging, and tests before you standardize prompt wording. Prompt text changes; governance shouldn’t.

Where this goes next for AI-powered digital services in the U.S.

Agentic AI is shifting from “cool assistant” to “software operator.” When operators don’t have rulebooks, you get fragile systems and avoidable failures. When they do, you get faster shipping and fewer surprises.

OpenAI co-founding the Agentic AI Foundation and donating AGENTS.md is part of a broader U.S. trend: invest in shared, open-source AI infrastructure so more companies can build reliable AI-powered digital services—without each team reinventing governance from scratch.

If you’re doing your 2026 planning right now, the question isn’t whether you’ll use an agent. It’s whether your agent will come with a clear contract, a test suite, and an audit trail, or whether you’ll be debugging “why did it do that?” in front of customers.

What workflow in your business would be measurably better if an agent could take action—but only within rules you can explain on one page?