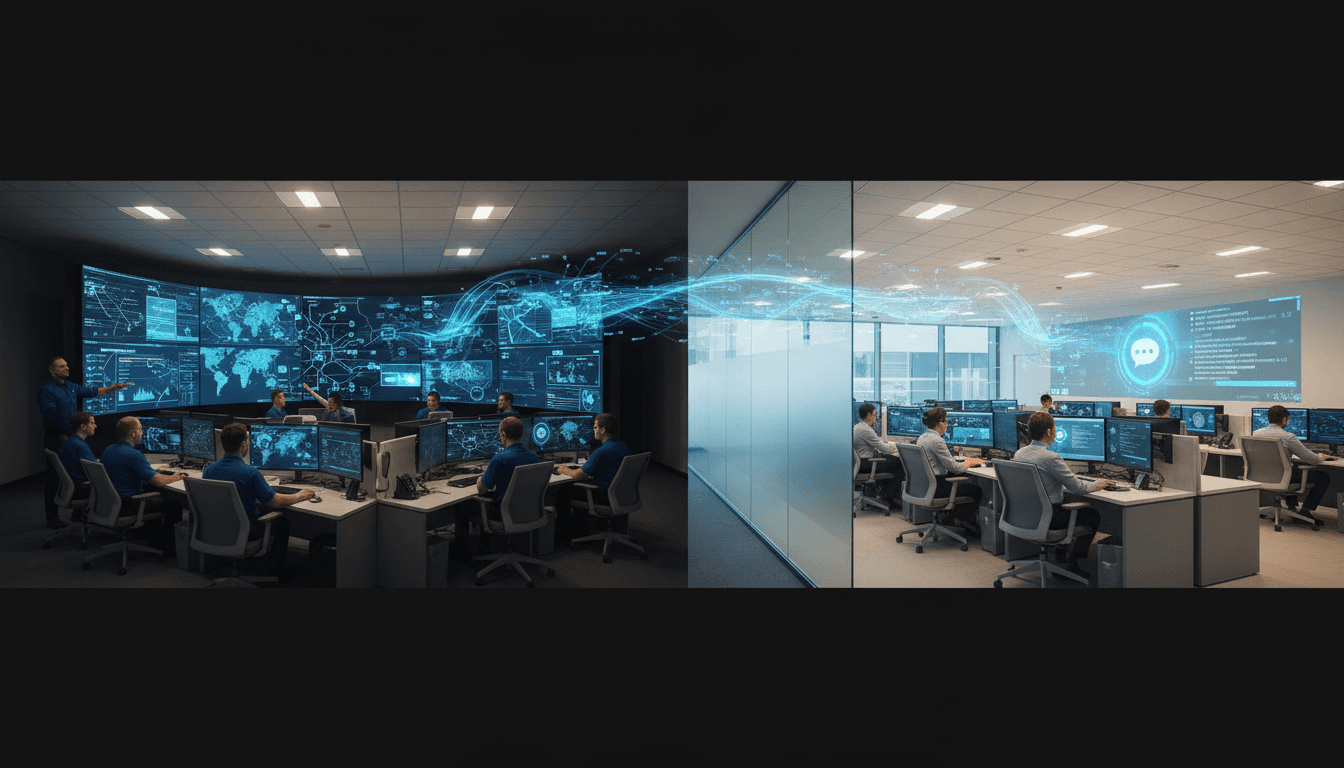

How OpenAI and Deutsche Telekom signal the next phase of AI in telecom—scaling support, automating ops, and building safer AI customer service.

AI in Telecom: Scaling Support With OpenAI + DT

A telecom serving tens of millions of people doesn’t get to “pilot” customer support the way a startup does. If your digital channels break or your support experience is inconsistent, you feel it immediately—in call center volume, churn, and brand trust.

That’s why the reported OpenAI collaboration with Deutsche Telekom (DT) is worth paying attention to, even from the United States. It’s a signal of where AI in telecommunications appears to be headed: large digital service providers are turning to U.S.-built AI capabilities to scale customer experience, protect margins, and modernize operations across markets.

The source content we received from the RSS feed was access-limited (the page returned a 403 error). Rather than pretend to have proprietary details, this post focuses on what can responsibly be inferred from the collaboration headline and, more importantly, on what it means for telcos building AI-powered customer service, network operations, and digital self-service at national scale.

Why this OpenAI–Deutsche Telekom collaboration matters

The point isn’t the press-release optics. The point is that telecom is one of the hardest environments for enterprise AI—high volume, high stakes, regulated data, and customers who contact you when something is already going wrong.

A partnership between a major European telco like Deutsche Telekom and a U.S. AI provider is a case study in how U.S. AI innovation is shaping global digital ecosystems. Telecoms aren’t buying AI for novelty; they’re buying it because:

- Support demand is spiky and expensive. Outages, device launches, and billing cycles create predictable surges.

- Customer journeys are fragmented. Users bounce between apps, web portals, chat, phone, stores, and field techs.

- Network complexity keeps rising. 5G management, fiber rollouts, and edge services create more operational load.

Here’s the stance I’ll take: most telcos don’t have a “chatbot problem.” They have a systems-and-workflows problem. Large language models (LLMs) only pay off when they’re connected to the right tools, data, and guardrails.

What “bringing powerful AI to millions” really implies in telecom

When a telco says it’s bringing AI to millions, it usually means something broader than a single chat widget.

AI in customer service: containment, speed, and consistency

At telco scale, even small improvements compound. If a digital assistant can resolve a few more issues without escalation, you don’t just save cost; you free your human agents for the hardest cases.

Practical outcomes telcos typically target with AI customer support include:

- Higher self-service resolution (containment): fewer handoffs to voice

- Lower average handle time (AHT): agents spend less time searching policies and systems

- Better first-contact resolution (FCR): fewer repeat contacts for the same issue

- More consistent policy application: fewer “depends who you talk to” answers

A simple example: “My internet is slow.” A well-designed assistant doesn’t respond with generic tips. It should run a workflow: identify plan and modem type, check known outages, pull line diagnostics, suggest specific steps, and—if needed—schedule a technician with the right skill profile.
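To make that workflow concrete, here is a minimal Python sketch of the decision order. Everything in it is an assumption for illustration: the `Customer` fields, the `outage_zips` set, and the 50% speed threshold are hypothetical stand-ins for real CRM, outage, and line-diagnostics systems.

```python
from dataclasses import dataclass, field


@dataclass
class Customer:
    # Hypothetical fields; a real system would load these from CRM/OSS APIs.
    plan: str
    modem_model: str
    zip_code: str
    line_sync_mbps: float   # measured sync rate from line diagnostics
    plan_speed_mbps: float  # advertised speed for the plan


@dataclass
class Resolution:
    action: str
    steps: list = field(default_factory=list)


def handle_slow_internet(customer, outage_zips, min_ratio=0.5):
    """Walk the diagnostic steps in order and stop at the first actionable result."""
    steps = [f"identified plan={customer.plan}, modem={customer.modem_model}"]

    # 1. Known outage in the area: inform the customer instead of troubleshooting.
    if customer.zip_code in outage_zips:
        steps.append("known outage found")
        return Resolution("notify_outage", steps)
    steps.append("no known outage")

    # 2. Line diagnostics: compare the measured sync rate with the plan speed.
    ratio = customer.line_sync_mbps / customer.plan_speed_mbps
    steps.append(f"line at {ratio:.0%} of plan speed")
    if ratio < min_ratio:
        # The line itself looks degraded, so book a technician.
        return Resolution("schedule_technician", steps)

    # 3. Line looks healthy: suggest steps specific to the modem.
    steps.append(f"suggest restart and Wi-Fi check for {customer.modem_model}")
    return Resolution("guided_self_help", steps)
```

The `steps` list doubles as context for a human handoff: whoever picks up the case can see what was already checked.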

AI in telecom operations: the hidden ROI layer

Customer-facing AI gets the spotlight, but the bigger payoff often comes from internal workflows. Telcos can use LLMs to help:

- Summarize trouble tickets and field notes

- Draft change-management communications

- Improve knowledge base quality and findability

- Assist NOC and SOC teams with incident timelines

This is where AI in telecom operations becomes less about “chat” and more about reducing operational friction.

AI at “millions of users” scale forces discipline

At scale, the usual AI mistakes become expensive fast:

- Hallucinated answers become regulatory risk.

- Inconsistent tone becomes brand risk.

- Weak identity controls become security risk.

So “millions” implies serious investment in governance, evaluation, monitoring, and fallback design.

How U.S.-built AI gets deployed inside a European telco

The collaboration angle matters to U.S. readers because it highlights an export pattern: American AI platforms are increasingly the intelligence layer that global service providers embed into their digital channels.

The typical architecture: LLM + tools + guardrails

Production deployments typically combine five layers:

- Model layer: an LLM for language understanding and generation

- Retrieval layer (RAG): pulls answers from approved telco content (plans, policies, outage advisories)

- Tool layer: executes actions (check order status, reset line, book appointment)

- Policy layer: compliance constraints and safe completion rules

- Observability: logs, evaluation, and continuous improvement

If you’re leading telecom digital transformation, the tool layer is where you win. A pretty assistant that can’t actually do anything just sends people to the phone line.
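Here is a rough sketch of how those layers fit together in one request path. It is not how OpenAI or DT build anything; the model layer is deliberately replaced with simple keyword routing so the example runs on its own, and `APPROVED_DOCS`, `TOOLS`, and `BLOCKED_TOPICS` are hypothetical placeholders.

```python
# Retrieval layer: approved content only (placeholder text, not real policy).
APPROVED_DOCS = {
    "roaming": "Placeholder roaming policy text.",
    "outage": "Placeholder outage advisory text.",
}


def retrieve(query):
    """Return (doc_id, text) for the first approved doc whose key appears in the query."""
    q = query.lower()
    for doc_id, text in APPROVED_DOCS.items():
        if doc_id in q:
            return doc_id, text
    return None


def check_order_status(order_id):
    """Tool layer: stand-in for a call to a real order system."""
    return f"Order {order_id} is in transit."


TOOLS = {"order_status": check_order_status}
BLOCKED_TOPICS = ("legal advice", "medical")  # policy layer, hypothetical list


def answer(query, log, order_id=None):
    """Route one request through policy, tools, and retrieval, then log the outcome."""
    q = query.lower()
    if any(topic in q for topic in BLOCKED_TOPICS):
        result = {"type": "refuse", "text": "I can't help with that here."}
    elif "order" in q and order_id:
        result = {"type": "tool", "text": TOOLS["order_status"](order_id)}
    elif (hit := retrieve(query)):
        result = {"type": "retrieval", "source": hit[0], "text": hit[1]}
    else:
        # Nothing approved to say and nothing to do: hand off to a human.
        result = {"type": "escalate", "text": "Connecting you to an agent."}
    # Observability: record what happened for later evaluation.
    log.append({"query": query, "type": result["type"]})
    return result
```

In a real deployment an LLM would choose the tool and phrase the reply, but the ordering is the point: policy check first, actions and approved content next, escalation as the default.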

Data boundaries: what can and can’t be shared

European telcos operate under strict privacy requirements. That pushes architectures toward:

- Clear separation of personal data

- Role-based access controls

- Audit trails for sensitive actions

A useful mental model: treat the AI assistant like a new employee who needs least-privilege access and strict training materials. Don’t give it the keys to every system on day one.
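One way to picture least-privilege access is a gateway that checks every tool call against a role and writes an audit entry either way. This is a sketch under assumptions: the role names, tool names, and `ToolGateway` class are invented for illustration.

```python
from datetime import datetime, timezone

# Hypothetical roles: each one lists the only tools it may call.
ROLE_PERMISSIONS = {
    "assistant_readonly": {"check_outage", "get_order_status"},
    "assistant_verified": {"check_outage", "get_order_status", "book_appointment"},
}


class ToolGateway:
    """Checks each tool call against the caller's role and records an audit entry."""

    def __init__(self, role):
        self.role = role
        self.audit_log = []

    def call(self, tool_name, customer_ref):
        allowed = tool_name in ROLE_PERMISSIONS.get(self.role, set())
        # Log both allowed and denied attempts so reviews see the full picture.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "role": self.role,
            "tool": tool_name,
            "customer_ref": customer_ref,  # pseudonymous reference, not raw personal data
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{self.role} may not call {tool_name}")
        return f"{tool_name} executed"  # stand-in for the real tool result
```

Starting the assistant on the read-only role and promoting it only after identity verification mirrors the "new employee" model: access grows with demonstrated need.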

Why telecoms partner instead of building everything in-house

Telcos are great at networks and service delivery. They’re not always positioned to:

- Train and update foundation models

- Maintain safety and red-teaming programs

- Keep pace with model iteration cycles

Partnering with a specialized U.S. AI provider can shorten time-to-value—especially when the business need is immediate (holiday device sales, year-end churn reduction, post-outage recovery).

A practical playbook for telcos rolling out generative AI

If you’re operating in the U.S. telecom market (or selling into it), the OpenAI–DT headline is a prompt to get serious about execution details. Here’s a rollout approach that tends to work.

1) Start with 3-5 high-volume, low-ambiguity intents

Pick intents that already have strong documentation and clear outcomes, such as:

- Billing due date and payment confirmation

- Order status and shipping tracking

- Outage checking by ZIP code

- Plan comparison (with guardrails)

- Appointment scheduling or rescheduling

These are the bread-and-butter of AI-powered customer experience. They also provide clean metrics fast.

2) Measure what actually changes in the business

Don’t settle for “chat engagement.” Track operational metrics:

- Digital resolution rate

- Escalation rate to agent

- AHT reduction for assisted agents

- Repeat contact rate within 7 days

- CSAT split by intent and channel

If you can’t tie AI to these metrics, you’re funding a demo.
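As a rough illustration, three of these metrics can be computed from a plain contact log. The record shape (`customer`, `intent`, `day`, `escalated`) is an assumption, and treating every non-escalated contact as digitally resolved is a simplification you would want to tighten with real outcome data.

```python
from datetime import date, timedelta


def support_metrics(contacts, repeat_window_days=7):
    """Compute simple rates from contact records.

    Each record is a dict with keys: customer, intent, day (date), escalated (bool).
    """
    total = len(contacts)
    if total == 0:
        return {"digital_resolution_rate": 0.0,
                "escalation_rate": 0.0,
                "repeat_contact_rate": 0.0}

    escalated = sum(1 for c in contacts if c["escalated"])

    # A repeat is a contact from the same customer about the same intent
    # within the window after their previous contact.
    window = timedelta(days=repeat_window_days)
    last_seen = {}
    repeats = 0
    for c in sorted(contacts, key=lambda c: c["day"]):
        key = (c["customer"], c["intent"])
        if key in last_seen and c["day"] - last_seen[key] <= window:
            repeats += 1
        last_seen[key] = c["day"]

    return {
        # Simplification: "not escalated" is treated as "resolved digitally".
        "digital_resolution_rate": (total - escalated) / total,
        "escalation_rate": escalated / total,
        "repeat_contact_rate": repeats / total,
    }
```

Splitting the input by intent or channel before calling the function gives the per-intent view that the CSAT bullet asks for.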

3) Make the assistant reliable with retrieval, not improvisation

In telecom, policy errors are costly. A strong retrieval-augmented generation (RAG) setup anchored to approved knowledge reduces risk and improves consistency.

Two rules I’ve found useful:

- If the assistant can’t cite an internal source document, it should ask a clarifying question or escalate.

- If an answer impacts money, contract terms, or safety, require stricter validation.
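Those two rules can be expressed as a small gate that runs before any answer is sent. This is a sketch, not a full validation system: the keyword list is a crude hypothetical proxy for "impacts money, contract terms, or safety", and "stricter validation" is assumed here to mean that every cited source must come from a verified set.

```python
# Hypothetical keywords that mark a question as high impact.
HIGH_IMPACT_TERMS = ("refund", "fee", "contract", "cancel", "price", "safety")


def gate_answer(question, cited_sources, verified_sources):
    """Decide whether a drafted answer may be sent as-is.

    cited_sources: ids of internal documents the draft relies on.
    verified_sources: ids of documents approved for high-impact answers.
    """
    # Rule 1: no internal source means no answer.
    if not cited_sources:
        return "clarify_or_escalate"

    # Rule 2: high-impact questions need every source to be verified.
    high_impact = any(term in question.lower() for term in HIGH_IMPACT_TERMS)
    if high_impact and not set(cited_sources) <= set(verified_sources):
        return "escalate_for_review"

    return "answer"
```

A production system would likely classify impact with something sturdier than keywords, but the control flow stays the same.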

4) Build “agent assist” before full automation (if you’re cautious)

If your organization is risk-averse, start by putting AI behind the scenes:

- Suggested replies grounded in policy

- One-click summaries of the customer history

- Troubleshooting scripts tailored to device type

This improves outcomes without forcing customers to trust a bot immediately.

5) Prepare for surges and failure modes

Telecom is full of edge cases. Your design should include:

- Clear handoff to humans with context included

- Degraded-mode behavior during outages (short, factual updates)

- Abuse handling and prompt-injection defenses

- Monitoring for sudden shifts in intent volume

That last point matters during peak seasons. Late December is a classic example: holiday device gifting, travel-related roaming questions, and end-of-year billing changes can all spike contact volume.
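Monitoring for shifts in intent volume does not need to start sophisticated. A minimal sketch, assuming you already have hourly or daily counts per intent, is to compare the current count with recent history using a z-score. The threshold of 3.0 is an arbitrary illustrative default, and seasonal traffic would call for a seasonally adjusted baseline.

```python
from statistics import mean, stdev


def is_volume_spike(history, current, z_threshold=3.0):
    """Flag the current count if it sits far above the recent baseline.

    history: recent counts for one intent (for example, the same hour on prior days).
    current: the latest count for that intent.
    """
    if len(history) < 2:
        return False  # not enough data to estimate spread
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any increase is notable.
        return current > baseline
    return (current - baseline) / spread > z_threshold
```

For example, `is_volume_spike([100, 110, 90, 105, 95], 180)` flags a spike, while a current count of 108 against the same history does not.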

People also ask: common questions about AI in telecommunications

Can generative AI really reduce telecom call center costs?

Yes—when it’s connected to tooling and policies. Cost reduction comes from higher self-service resolution and shorter agent handle times, not from “friendly conversation.”

Where does AI help most: network ops or customer care?

Customer care shows results fastest, but network operations often produces deeper long-term ROI through better incident workflows, ticket quality, and faster troubleshooting.

What’s the biggest risk in telecom AI deployments?

Uncontrolled outputs. In telecom, a wrong answer can cause billing disputes, regulatory exposure, or unsafe troubleshooting advice. Guardrails, retrieval, and monitoring aren’t optional.

Does this collaboration say anything about U.S. AI competitiveness?

It does. When major non-U.S. digital service providers embed U.S.-built AI into core customer experiences, it signals that American AI platforms are becoming foundational infrastructure globally.

What to do next if you’re leading AI in a telco or digital service provider

If you run customer experience, operations, or digital channels at scale, the OpenAI–Deutsche Telekom collaboration is a reminder that AI in telecom is maturing from experiments into operational systems.

Start with a small set of high-volume intents, wire the assistant into real tools, and measure outcomes that finance and operations teams care about. Then expand—carefully—into higher-risk domains like retention offers, contract changes, and complex technical troubleshooting.

This post is part of our AI in Telecommunications series, where we track how AI is reshaping network operations, 5G management, predictive maintenance, and customer experience automation. The forward-looking question I’d ask your team heading into 2026: when the next demand surge hits—outage, launch, or billing cycle—will your AI reduce chaos, or add to it?