Build an internal “AI University” model to scale AI in utilities—linking talent, infrastructure, and procurement workflows to reliability and cost outcomes.

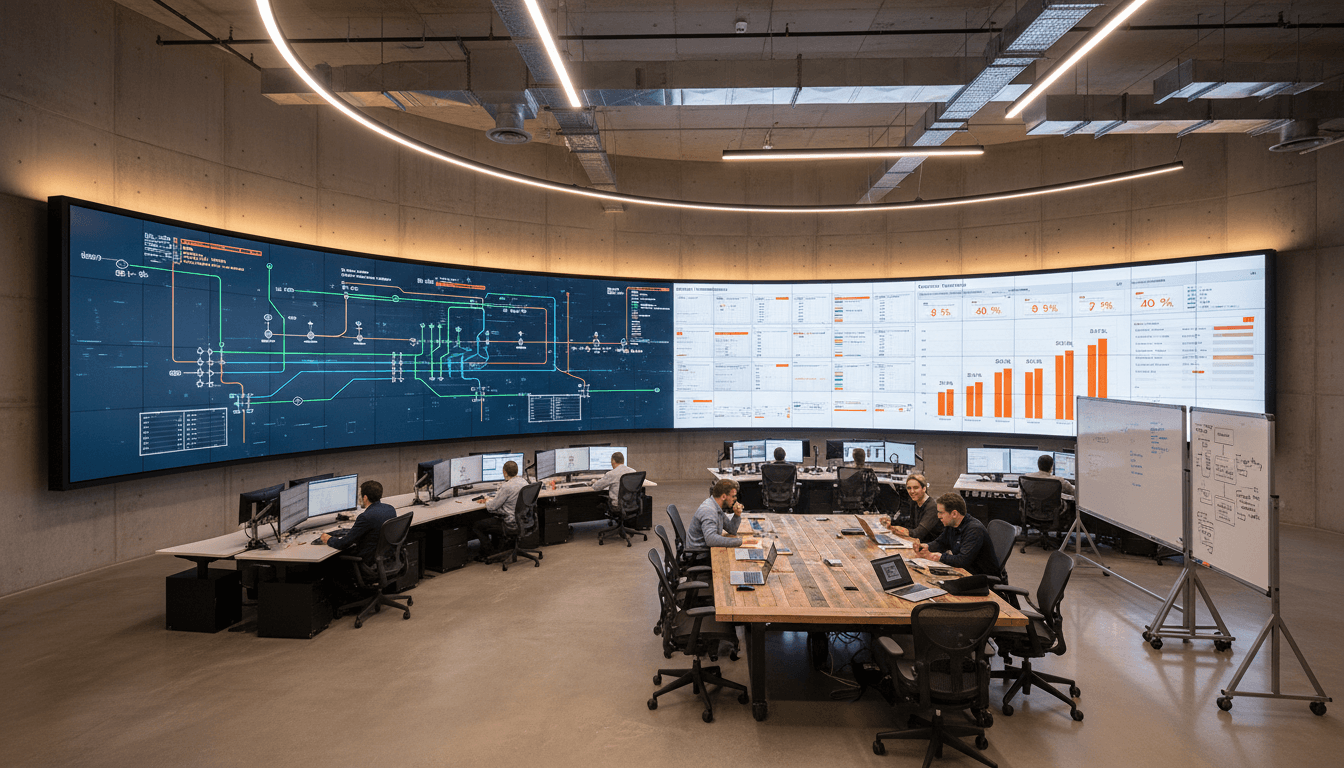

Build an “AI University” Inside Your Utility

AI initiatives at utilities rarely fail because the models are bad. They fail because the organization can’t absorb AI: not enough shared data, not enough compute, not enough aligned incentives, and not enough practical training for the people who actually run the grid.

That’s why the “AI University” idea—popularized in a recent NVIDIA-sponsored higher-ed roadmap—matters far beyond campus. If a university can coordinate dozens of departments, budgets, and priorities to scale AI, a utility can do the same across generation, T&D, field operations, customer programs, and procurement.

This post is part of our AI in Supply Chain & Procurement series, so I’ll focus on a specific angle: how building internal AI capability improves asset and materials planning, supplier risk management, inventory optimization, and outage response logistics—the behind-the-scenes work that determines whether reliability targets are met.

Why an “AI University” model fits utilities (better than another pilot)

An internal “AI University” is a practical operating model: a repeatable way to create talent, infrastructure, and governance so AI stops being a string of one-off proofs of concept.

Utilities have three structural challenges that make this approach especially relevant:

- Long asset lives + short tech cycles: transformers last decades; AI tools change yearly. You need an internal learning system that keeps people current.

- Siloed data ownership: OMS, ADMS, EAM, SCADA historians, GIS, AMI, procurement ERPs—each has a “keeper.” AI needs cross-functional access with guardrails.

- Risk and regulation: you can’t “ship and iterate” like a consumer app. You need documented controls, auditability, and a clear model risk approach.

Here’s the stance I’ll take: if you don’t build the organizational muscle, your AI roadmap becomes a slide deck—and your supply chain and grid modernization teams will keep fighting the same fires with better dashboards, not better decisions.

The five-part framework utilities can borrow and adapt

The original “AI University” roadmap emphasizes campus-wide strategy, infrastructure, talent, and metrics. Translate that into utilities, and you get five pillars that map cleanly to industrial AI adoption.

1) Start with cross-functional buy-in—then lock it in with operating cadence

The fastest way to stall is to let AI become “the data team’s thing.” Utilities need a shared mandate across:

- Grid operations (dispatch, reliability, protection engineering)

- Asset management and maintenance

- Customer operations (call center, DER programs)

- IT/OT security and architecture

- Supply chain & procurement (sourcing, warehousing, contractor networks)

Answer first: Create an AI steering group that meets monthly, but run delivery through a weekly execution forum.

A workable pattern:

- AI Steering (monthly): sets priorities, approves funding, resolves conflicts, owns ROI targets.

- AI Delivery Council (weekly): removes blockers, aligns data owners, tracks model performance and adoption.

- Use-case product owners: come from the business (not IT), accountable for outcomes like SAIDI/SAIFI impact, stockout reduction, or truck roll avoidance.

If you’ve ever tried to coordinate storm stock, mutual aid, contractor onboarding, and switching plans during a major event, you already know why cadence matters.

2) Build the compute and data foundation like a grid asset, not a lab toy

The higher-ed roadmap stresses computing infrastructure and funding models. For utilities, the translation is blunt: AI capability is infrastructure.

Answer first: Treat your AI platform as a shared service with defined tiers—sandbox, production, and regulated/critical.

A practical three-tier design:

- Sandbox (days to provision): for rapid experimentation on de-identified or low-risk datasets.

- Production (weeks to onboard): integrated pipelines, monitoring, access control, change management.

- Critical (months to qualify): for OT-adjacent or safety-impacting use cases, with stronger validation, model governance, and audit trails.

For procurement and supply chain, this matters because models often depend on sensitive data:

- supplier pricing and contract terms

- critical spares lists and reorder points

- workforce availability and contractor performance

- logistics constraints during emergencies
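To make the tiers and data sensitivity concrete, here is a minimal sketch of a tier check a platform team might run before granting access. Everything in it is hypothetical: the control names, the dataset labels, and the rules that sensitive procurement data requires at least the production tier and that OT-adjacent or safety-impacting work requires the critical tier. Your own policy would define the real lists.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Tier(IntEnum):
    """Platform tiers from the design above, ordered from least to most controlled."""
    SANDBOX = 1
    PRODUCTION = 2
    CRITICAL = 3


# Hypothetical minimum controls per tier (names are illustrative, not a standard).
MIN_CONTROLS = {
    Tier.SANDBOX: {"deidentified_or_low_risk_data"},
    Tier.PRODUCTION: {"access_control", "monitoring", "change_management"},
    Tier.CRITICAL: {"access_control", "monitoring", "change_management",
                    "independent_validation", "audit_trail"},
}

# Hypothetical labels for the sensitive procurement data listed above.
# In this sketch, any of them pushes a use case to at least PRODUCTION.
SENSITIVE_DATA = {"supplier_pricing", "contract_terms", "critical_spares",
                  "workforce_availability", "contractor_performance",
                  "emergency_logistics"}


@dataclass
class UseCase:
    name: str
    tier: Tier
    datasets: set[str]
    controls: set[str] = field(default_factory=set)
    ot_adjacent_or_safety: bool = False


def tier_gaps(uc: UseCase) -> list[str]:
    """Return the reasons a use case cannot run in its requested tier (empty = OK)."""
    problems = []
    required = Tier.PRODUCTION if uc.datasets & SENSITIVE_DATA else Tier.SANDBOX
    if uc.ot_adjacent_or_safety:
        required = Tier.CRITICAL
    if uc.tier < required:
        problems.append(f"needs tier {required.name}, requested {uc.tier.name}")
    missing = MIN_CONTROLS[uc.tier] - uc.controls
    if missing:
        problems.append("missing controls: " + ", ".join(sorted(missing)))
    return problems


spares = UseCase("critical spares reorder points", Tier.SANDBOX,
                 {"critical_spares", "supplier_pricing"},
                 {"deidentified_or_low_risk_data"})
print(tier_gaps(spares))  # ['needs tier PRODUCTION, requested SANDBOX']
```

The point of encoding the rules is that "which tier does this belong in?" gets the same answer every time, which is what auditors and regulators tend to ask for.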

Compute decisions also have budget implications. Many utilities end up with a hybrid approach:

- Cloud for bursty training and non-OT workloads

- On-prem or dedicated environments for latency, data residency, and security

- A curated set of “approved” AI services and model deployment patterns

The goal isn’t to chase maximum GPU utilization. It’s to ensure repeatable deployment and a predictable path from prototype to production.
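The hybrid placement rules above can be written down as a small, reviewable function. This is a sketch under stated assumptions: the workload flags, the pattern names in `APPROVED_PATTERNS`, and the choice to send off-catalog patterns to an architecture review are all hypothetical. The source only says that OT, latency, and residency concerns point on-prem and bursty non-OT training points to the cloud.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    ot_adjacent: bool           # reads from or writes to OT systems
    latency_sensitive: bool     # must respond within operational time limits
    residency_restricted: bool  # data may not leave approved environments
    bursty_training: bool       # short, heavy training jobs rather than steady load
    pattern: str                # deployment pattern the team wants to use


# Hypothetical catalog of approved deployment patterns.
APPROVED_PATTERNS = {"batch_scoring", "rest_endpoint", "scheduled_retrain"}


def place(w: Workload) -> str:
    """Suggest where a workload runs under the hybrid rules described above."""
    if w.pattern not in APPROVED_PATTERNS:
        return "architecture_review"  # off-catalog patterns are reviewed first
    if w.ot_adjacent or w.latency_sensitive or w.residency_restricted:
        return "on_prem"
    if w.bursty_training:
        return "cloud"
    return "either"  # steady non-OT load: decide on cost and existing capacity


print(place(Workload("spares retrain", False, False, False, True, "scheduled_retrain")))
# cloud
```

Checking the pattern catalog first is a deliberate choice: it keeps the "predictable path from prototype to production" from being bypassed by one-off deployments.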

3) Make AI literacy a job requirement—especially for planners and buyers

The original “AI University” concept emphasizes AI literacy across disciplines. Utilities should take that literally.

Answer first: Train three layers of capability: AI-aware operators, AI power users, and AI builders.

A simple skills map that works in practice:

- AI-aware (everyone impacted): what the model does, how to interpret confidence, what “bad data” looks like, how to escalate issues.

- AI power users (planners, analysts, category managers): feature understanding, scenario testing, bias and drift awareness, prompt patterns for internal copilots.

- AI builders (data/ML team): MLOps, time-series, graph analytics, model risk management, cybersecurity-by-design.

In supply chain & procurement, the power-user group is the difference between “the model said so” and “the model plus my constraints yields a decision.”

Here’s what I’ve found works: tie training to real workflows.

- Forecasting workshops using your actual demand history for critical spares

- Supplier risk exercises using real lead-time variability and quality events

- Outage logistics simulations that combine weather, crew availability, and stock positions
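A forecasting workshop on critical spares quickly runs into intermittent demand: long runs of zero issues broken by occasional withdrawals. One standard technique for that pattern is Croston's method, which smooths demand size and the interval between demands separately. It is our choice for illustration, not something the roadmap prescribes, and the smoothing constant `alpha` and the sample history below are hypothetical.

```python
def croston(demand: list[float], alpha: float = 0.1) -> float:
    """Per-period demand forecast for an intermittent series (Croston's method)."""
    nonzero = [i for i, d in enumerate(demand) if d > 0]
    if not nonzero:
        return 0.0  # no demand observed yet
    first = nonzero[0]
    z = float(demand[first])  # smoothed demand size when demand occurs
    p = float(first + 1)      # smoothed interval between demands (periods)
    q = 1                     # periods elapsed since the last demand
    for d in demand[first + 1:]:
        if d > 0:
            z += alpha * (d - z)
            p += alpha * (q - p)
            q = 1
        else:
            q += 1
    return z / p  # expected demand per period


# Hypothetical weekly withdrawals of one spare from the warehouse.
weekly_issues = [0, 0, 2, 0, 0, 0, 1, 0, 0, 3, 0, 0]
print(round(croston(weekly_issues), 2))
```

In a workshop, the useful exercise is to compare this forecast with a plain moving average on the team's own history and discuss where each one would have led to a stockout.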

4) Choose use cases that force data integration (because that’s the real bottleneck)

Universities use high-impact research and cross-department projects to build momentum. Utilities should do the same, but pick projects that create reusable data products.

Answer first: Prioritize use cases where the model output becomes an operational decision and requires multiple systems to cooperate.

High-value, supply-chain-connected examples:

- Critical spares optimization: dynamic reorder points based on failure probability, lead times, and storm seasonality.

- Predictive maintenance to procurement loop: predicted failure windows automatically trigger sourcing actions (RFQs, expediting) with human approval.

- Supplier lead-time prediction: model variability by lane, supplier, and season to reduce safety stock without raising risk.

- Work order + inventory synchronization: recommend kitting and staging plans for planned outages.

- Storm response logistics: pre-positioning based on probabilistic damage, feeder criticality, and warehouse constraints.
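For the critical spares item, a dynamic reorder point can start from the classic safety-stock formula that combines demand variability and lead-time variability. This sketch assumes failures across the installed base are Poisson (so daily variance equals the daily mean), applies a single storm-season multiplier across the whole lead time, and uses a normal approximation for the service level. The parameter names and fleet numbers are hypothetical.

```python
import math
from statistics import NormalDist


def reorder_point(installed_base: int, annual_failure_prob: float,
                  seasonal_multiplier: float, lead_time_mean_days: float,
                  lead_time_std_days: float, service_level: float = 0.95) -> int:
    """Reorder point (units) for one critical spare, rounded up."""
    # Expected failures per day across the installed fleet, scaled for the season.
    daily_rate = installed_base * annual_failure_prob / 365.0 * seasonal_multiplier
    # Assume failures are Poisson, so daily demand variance equals its mean.
    daily_var = daily_rate
    mean_lt = daily_rate * lead_time_mean_days
    # Demand uncertainty plus lead-time uncertainty over the replenishment window.
    std_lt = math.sqrt(lead_time_mean_days * daily_var
                       + daily_rate ** 2 * lead_time_std_days ** 2)
    z = NormalDist().inv_cdf(service_level)  # normal approximation
    return math.ceil(mean_lt + z * std_lt)


# Hypothetical: 1,200 units in service, 0.5% annual failure probability,
# 120-day average lead time with a 30-day standard deviation.
print(reorder_point(1200, 0.005, 2.0, 120, 30))  # storm season -> 8
print(reorder_point(1200, 0.005, 1.0, 120, 30))  # calm season  -> 5
```

In practice the failure probability would come from the asset-health model and the lead-time statistics from supplier history, which is exactly why this use case forces EAM, procurement, and weather data to meet.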

A quick myth-bust: many teams start with chatbots because they’re visible. Chat has a place—but in utilities, the biggest ROI often sits in time-series forecasting, optimization, and anomaly detection, tightly coupled to planning and procurement.
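As one small illustration of the optimization side, the storm-response pre-positioning item can be framed as spreading a fixed stock of one spare across depots. This sketch assumes storm damage per depot area is Poisson with a forecast mean, weights each area by a hypothetical criticality score, and caps each depot at its capacity. Because the k-th unit at a depot is only useful if demand reaches k, its marginal value falls as k grows, so a greedy allocation is optimal for this particular objective.

```python
import heapq
import math


def poisson_tail(lam: float, k: int) -> float:
    """P(D >= k) for Poisson-distributed demand D with mean lam."""
    term, cdf = math.exp(-lam), 0.0
    for i in range(k):
        cdf += term              # add P(D = i)
        term *= lam / (i + 1)    # P(D = i + 1) from P(D = i)
    return max(0.0, 1.0 - cdf)


def preposition(units: int,
                depots: dict[str, tuple[float, float, int]]) -> dict[str, int]:
    """Greedily allocate `units` of one spare across depots.

    depots maps name -> (expected_damage_units, criticality_weight, capacity).
    The k-th unit at a depot adds weight * P(D >= k) to expected
    criticality-weighted filled demand. That gain never increases with k,
    so taking the best remaining gain each time is optimal for this objective.
    """
    alloc = {name: 0 for name in depots}
    heap = []
    for name, (lam, weight, cap) in depots.items():
        if cap > 0:
            heapq.heappush(heap, (-weight * poisson_tail(lam, 1), name))
    for _ in range(units):
        if not heap:
            break  # every depot is at capacity
        _, name = heapq.heappop(heap)
        alloc[name] += 1
        lam, weight, cap = depots[name]
        if alloc[name] < cap:
            nxt = -weight * poisson_tail(lam, alloc[name] + 1)
            heapq.heappush(heap, (nxt, name))
    return alloc


# Hypothetical depots: (expected damaged units, criticality weight, capacity).
print(preposition(4, {"coastal": (3.0, 2.0, 3), "inland": (1.0, 1.0, 5)}))
# {'coastal': 3, 'inland': 1}
```

A real plan would add transfer times, crew locations, and multiple item types, which turns this into a proper optimization model; the greedy version is just a readable starting point.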

5) Measure ROI like a utility: reliability, cost, and risk—tracked monthly

The roadmap calls out measurable metrics like enrollment and funding. Utilities need equally concrete scorecards.

Answer first: Track value in three buckets—financial, reliability/operational, and risk.

A metric set that works for AI in supply chain & procurement:

- Inventory performance: stockouts, service levels, turns, obsolete inventory, expedite fees.

- Supplier performance: on-time delivery variance, quality incident rates, lead-time drift.

- Work execution: scheduled vs unscheduled maintenance ratio, kit completeness, truck roll reductions.

- Reliability outcomes: restoration time improvements attributable to better staging and materials availability.

- Model operations: adoption rate, override rate (with reasons), drift events, time-to-retrain.

If you can’t measure adoption, you’re not running AI—you’re running a science project.
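The model-operations line of that scorecard needs a consistent definition before it can be tracked monthly. The sketch below assumes each recommendation ends in one of three hypothetical states (`accepted`, `overridden`, or `ignored`). It defines adoption as the share a planner actually reviewed and the override rate as the share of reviewed recommendations that were changed. Other definitions are reasonable, as long as they stay fixed from month to month.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Outcome:
    rec_id: str
    status: str                   # "accepted", "overridden", or "ignored"
    reason: Optional[str] = None  # expected when status == "overridden"


def model_ops_scorecard(outcomes: list[Outcome]) -> dict:
    """Monthly adoption and override metrics for one model."""
    total = len(outcomes)
    counts = Counter(o.status for o in outcomes)
    reviewed = counts["accepted"] + counts["overridden"]
    reasons = Counter(o.reason or "unspecified"
                      for o in outcomes if o.status == "overridden")
    return {
        "adoption_rate": reviewed / total if total else 0.0,
        "override_rate": counts["overridden"] / reviewed if reviewed else 0.0,
        "top_override_reasons": reasons.most_common(3),
    }


month = [Outcome("r1", "accepted"), Outcome("r2", "accepted"),
         Outcome("r3", "overridden", "supplier on hold"), Outcome("r4", "ignored")]
print(model_ops_scorecard(month))
```

Override reasons are often the most useful output here: a recurring reason usually points to a missing feature or a stale data feed rather than a stubborn planner.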

A practical 90-day plan to start your internal “AI University”

Utilities planning for 2026 budgets (right now, in December) have a window: you can set up governance and training while projects are being scoped.

Answer first: In 90 days, you can stand up the operating model, deliver one integrated use case, and launch role-based training.

Days 0–30: Set the foundation

- Appoint an executive sponsor and 2–3 business product owners (include one from procurement)

- Define your AI tiers (sandbox/production/critical) and minimum controls

- Select 1–2 “force integration” use cases (critical spares is a strong first pick)

- Create a shared KPI baseline (current stockout rate, expedite spend, lead-time variance)
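The KPI baseline can be a short script run once against historical extracts so everyone argues about the same numbers. This sketch assumes two hypothetical inputs: demand events flagged as filled from stock or not, and purchase-order receipts with supplier, lead time, spend, and an expedite flag. Real field names will depend on your ERP and EAM extracts.

```python
from collections import defaultdict
from statistics import variance


def kpi_baseline(demand_events: list[tuple[str, bool]],
                 purchase_orders: list[tuple[str, float, float, bool]]) -> dict:
    """Baseline KPIs before any model goes live.

    demand_events: (item, filled_from_stock)
    purchase_orders: (supplier, lead_time_days, spend, expedited)
    """
    stockouts = sum(1 for _, filled in demand_events if not filled)
    total_spend = sum(spend for _, _, spend, _ in purchase_orders)
    expedite_spend = sum(spend for _, _, spend, exp in purchase_orders if exp)
    lead_times = defaultdict(list)
    for supplier, lt, _, _ in purchase_orders:
        lead_times[supplier].append(lt)
    return {
        "stockout_rate": stockouts / len(demand_events) if demand_events else 0.0,
        "expedite_spend": expedite_spend,
        "expedite_share": expedite_spend / total_spend if total_spend else 0.0,
        # Sample variance needs at least two receipts per supplier.
        "lead_time_variance": {s: variance(v) for s, v in lead_times.items()
                               if len(v) >= 2},
    }


events = [("fuse", True), ("fuse", False), ("xfmr", True), ("xfmr", True)]
pos = [("S1", 10.0, 100.0, False), ("S1", 14.0, 50.0, True),
       ("S2", 30.0, 200.0, False)]
print(kpi_baseline(events, pos))
```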

Days 31–60: Build and pilot

- Deliver a first model with human-in-the-loop approvals

- Establish monitoring: data freshness, prediction quality, and override reasons

- Run two training cohorts: AI-aware (broad) and AI power users (targeted)
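A first human-in-the-loop model can follow the predictive-maintenance-to-procurement loop described earlier: a predicted failure window becomes a sourcing proposal, and nothing happens until a planner approves it. This sketch is hypothetical throughout. It assumes one spare per asset, a fixed 14-day buffer, and a simple rule (RFQ if there is time for normal sourcing, expedite otherwise), and it refuses a rejection without a reason so override reasons can be monitored.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Proposal:
    asset_id: str
    action: str                    # "rfq" or "expedite"
    status: str = "pending_approval"
    override_reason: Optional[str] = None


def propose(asset_id: str, failure_window_start: date, today: date,
            spares_on_hand: int, lead_time_days: int,
            buffer_days: int = 14) -> Optional[Proposal]:
    """Turn a predicted failure window into a sourcing proposal for human review."""
    if spares_on_hand > 0:
        return None  # a spare is already available; no sourcing action needed
    days_left = (failure_window_start - today).days
    action = "rfq" if days_left > lead_time_days + buffer_days else "expedite"
    return Proposal(asset_id, action)


def decide(p: Proposal, approve: bool, reason: Optional[str] = None) -> Proposal:
    """Record the planner's decision; rejections must carry a reason."""
    if not approve and not reason:
        raise ValueError("an override reason is required when rejecting a proposal")
    p.status = "approved" if approve else "overridden"
    p.override_reason = None if approve else reason
    return p


p = propose("TX-1042", date(2025, 3, 1), date(2025, 1, 1),
            spares_on_hand=0, lead_time_days=30)
print(p.action, p.status)  # rfq pending_approval
```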

Days 61–90: Operationalize

- Integrate outputs into existing tools (EAM/ERP workflows, planning dashboards)

- Publish a playbook: “how to request an AI use case,” “how models go live,” “how to retire models”

- Present a monthly scorecard to the steering group and re-prioritize based on outcomes
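The "how models go live" and "how to retire models" sections of the playbook can be backed by an explicit lifecycle. This is a minimal sketch: the state names reuse the sandbox, production, and critical tiers from earlier, while the allowed moves and the sign-off roles (`business_product_owner`, `it_ot_security`, `model_risk_validation`) are hypothetical and would come from your own governance rules.

```python
# Allowed moves between lifecycle states (hypothetical playbook rules).
ALLOWED = {
    "requested": {"sandbox", "retired"},
    "sandbox": {"production", "retired"},
    "production": {"critical", "sandbox", "retired"},
    "critical": {"production", "retired"},
    "retired": set(),
}

# Hypothetical sign-offs required before entering a state.
REQUIRED_SIGNOFFS = {
    "production": {"business_product_owner", "it_ot_security"},
    "critical": {"business_product_owner", "it_ot_security", "model_risk_validation"},
}


def transition(state: str, target: str, signoffs: set[str]) -> str:
    """Return the new state, or raise if the playbook does not allow the move."""
    if target not in ALLOWED[state]:
        raise ValueError(f"cannot move a model from {state} to {target}")
    missing = REQUIRED_SIGNOFFS.get(target, set()) - signoffs
    if missing:
        raise PermissionError("missing sign-off: " + ", ".join(sorted(missing)))
    return target


state = transition("requested", "sandbox", set())
state = transition(state, "production", {"business_product_owner", "it_ot_security"})
print(state)  # production
```

Making retirement a first-class state, reachable from anywhere, is what keeps unused models from lingering as unmonitored shelfware.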

This isn’t flashy. It’s effective.

People also ask: what utilities get wrong when building internal AI capability?

“Should we build or buy AI solutions?”

Buy what’s commoditized (common forecasting, standard anomaly detection), build where it’s differentiating (your grid topology, your storm playbooks, your supplier ecosystem). Most utilities end up with a blended portfolio.

“Do we need GPUs to get value from AI in procurement?”

Not always. Many procurement and inventory models run fine on CPU. GPUs become more relevant for large-scale deep learning, computer vision, and certain time-series workloads. The bigger requirement is usually data quality and integration, not compute.

“How do we keep models from becoming shelfware?”

Tie every model to a decision, put a named owner on that decision, and measure adoption monthly. If the model doesn’t change behavior, it doesn’t matter.

Where this fits in the AI in Supply Chain & Procurement series

Most supply chain AI content focuses on forecasting demand or automating purchasing workflows. That’s necessary, but it’s incomplete for energy and utilities.

A utility’s supply chain is inseparable from reliability. When a transformer fails, procurement isn’t a back-office function—it’s a restoration constraint. Building an internal “AI University” is how you scale from isolated analytics to decision-grade AI that planners, buyers, and operators actually trust.

If you’re planning your 2026 initiatives, a good next step is to pick one cross-system use case (critical spares, kitting for planned outages, or supplier lead-time prediction) and build the training and governance around it. The question worth debating internally is simple: which operational decision will you make measurably better by the end of Q2?