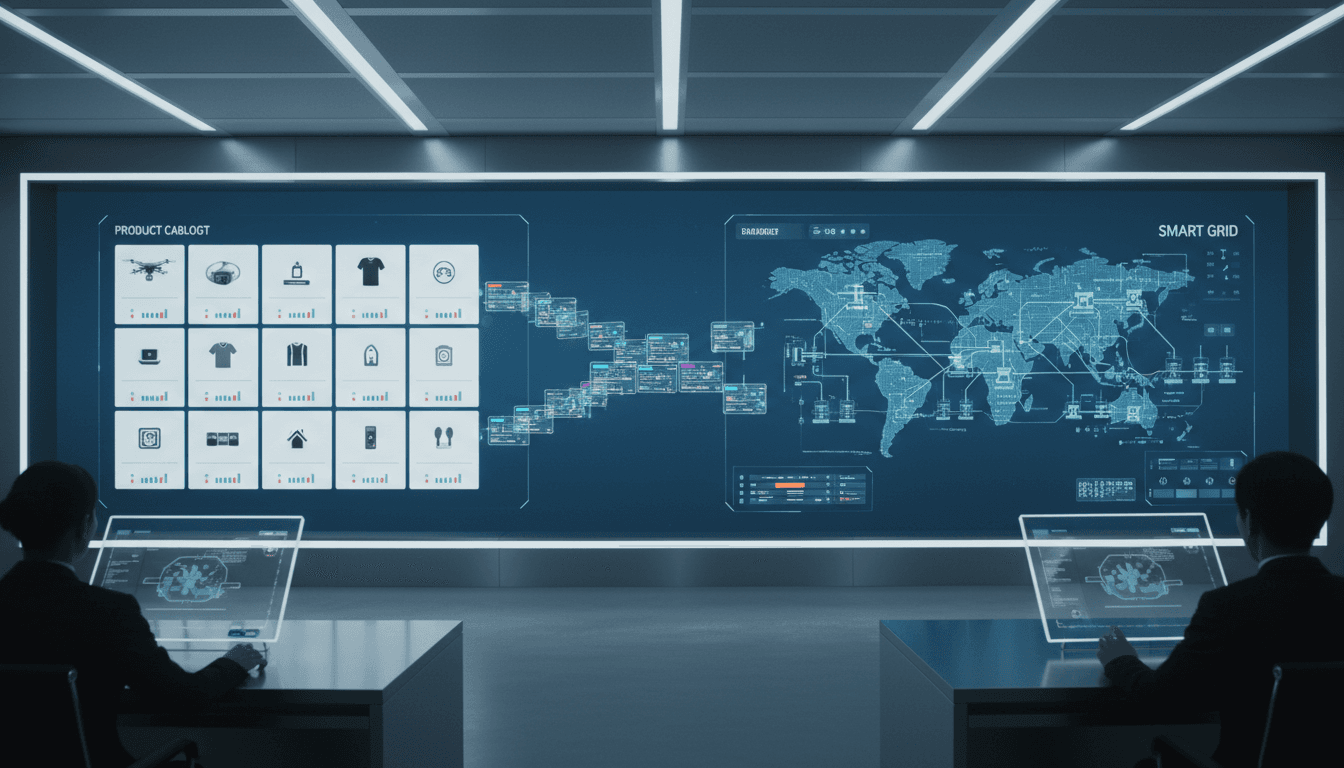

Amazon’s Catalog AI shows how structured data improves search and UX. Learn how the same playbook applies to utilities for forecasting, grid ops, and maintenance.

Catalog AI Lessons for Smarter Search—Retail to Grid

Amazon expects its Catalog AI system to add US $7.5 billion in sales this year (reported in July). That number grabs attention, but the more interesting story is how it gets there: not by flashy front-end features, but by fixing the messy, unglamorous layer underneath—product data.

If you lead digital, data, or operations teams in energy and utilities, this should feel familiar. Your “catalog” might be a patchwork of asset registries, GIS records, SCADA tags, outage notes, maintenance logs, tariff tables, and customer programs. And when that data is incomplete or inconsistent, everything downstream suffers: search, analytics, field execution, customer experience, and even regulatory reporting.

This post is part of our AI in Retail & E-Commerce series, but I’m going to take a firm stance: retail has quietly become a blueprint for large-scale operational AI. Amazon’s Catalog AI is a clean case study in what works when the “dataset” is the business.

Catalog AI’s real job: turn messy data into usable decisions

Catalog AI isn’t primarily a writing tool. Its core job is entity understanding and data standardization at scale—finding, correcting, enriching, and organizing product attributes so customers can discover the right item quickly.

From the RSS summary, Catalog AI:

- Collects product information from across the web

- Uses large language models (LLMs) to fill missing attributes, correct errors, and rewrite titles/specs for clarity

- Improves predictive search by making listings more consistent and machine-readable

Here’s the operational insight: search quality is mostly a data quality problem. Better UI helps, but it can’t compensate for inconsistent attributes.

In utilities, the analog is direct:

- If transformer attributes aren’t standardized, your reliability analytics will lie.

- If outage tickets don’t map cleanly to assets, restoration ETAs drift.

- If customer program data is inconsistent, personalization becomes spam.

The pattern is universal: when the underlying “catalog” is structured, every downstream system gets smarter for free.

Why Amazon’s approach matters (even if you don’t sell products)

Amazon’s catalog is shaped by thousands, if not millions, of third-party sellers entering data of variable quality. Utilities have the same problem, just with different “sellers”:

- Vendors and contractors

- Field crews entering notes under time pressure

- Migrations between enterprise systems

- Regional naming conventions that never fully converge

Catalog AI represents a mature response: don’t demand perfection from humans; build systems that raise the floor automatically.

The hidden playbook: glossary-first, then automation

One detail from the story is easy to skip but incredibly telling: before Catalog AI scaled, Agrawal’s team built a glossary—standardized terms for dimensions, color, manufacturer, and other attributes. Sellers then received auto-suggestions as they typed.

That sequence—controlled vocabulary → guided input → automated enrichment—is exactly how you avoid an LLM turning your enterprise data into expensive chaos.
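The guided-input step can be illustrated with a small type-ahead helper. This is a minimal sketch, not Amazon’s implementation: the glossary terms, the `suggest` function, and the similarity cutoff are all hypothetical, and it relies only on Python’s standard `difflib` module for fuzzy matching.

```python
import difflib

# Hypothetical controlled vocabulary; a real glossary would be served
# from a versioned source of truth, not hard-coded.
GLOSSARY_TERMS = [
    "distribution transformer",
    "surge arrester",
    "fuse cutout",
    "recloser",
    "capacitor bank",
]


def suggest(partial, terms=GLOSSARY_TERMS, limit=3):
    """Return up to `limit` glossary terms for what the user has typed so far.

    Exact prefix matches come first, then fuzzy matches that tolerate typos.
    """
    text = partial.strip().lower()
    if not text:
        return []
    prefix_hits = [t for t in terms if t.startswith(text)]
    # cutoff=0.6 is an assumed threshold; tune it against real input.
    fuzzy_hits = difflib.get_close_matches(text, terms, n=limit, cutoff=0.6)
    ordered = []
    for term in prefix_hits + fuzzy_hits:
        if term not in ordered:
            ordered.append(term)
    return ordered[:limit]
```

Calling `suggest("surge")` offers the canonical “surge arrester”, and a typo such as `suggest("reclosr")` still lands on “recloser”. The point of the design is that the person typing picks a canonical term at entry time, so less cleanup is needed later.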

How this maps to energy and utilities data management

A practical translation looks like this:

- Build or adopt a utility “glossary” (ontology/taxonomy) for assets and work

  Examples: asset types, failure modes, causes, locations, materials, manufacturer/model naming rules.

- Guide input at the edge (where data is created)

  Crew mobile apps suggest standardized failure codes; call-center tools suggest program names; engineering tools enforce attribute formats.

- Use AI to enrich and reconcile, not to invent

  LLMs can propose missing fields based on evidence (documents, manuals, past work orders), but approvals and confidence thresholds matter.

Here’s what works in practice:

- Treat the glossary as a product with owners, versioning, and change control.

- Use LLMs to map synonyms to canonical terms (“pole-top transformer” → “distribution transformer”), then store canonical values.

- Track provenance (where each attribute came from), because audits happen.
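A minimal sketch of the “store canonical values, keep provenance” idea follows. The lookup table stands in for synonym mappings that an LLM might propose and a glossary owner has approved; the glossary contents, version string, and all names (`AttributeValue`, `canonicalize`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical glossary: canonical term -> approved synonyms.
GLOSSARY = {
    "distribution transformer": {"pole-top transformer", "pole top xfmr"},
    "surge arrester": {"lightning arrester", "arrester"},
}
GLOSSARY_VERSION = "2025.1"  # assumed versioning scheme


@dataclass(frozen=True)
class AttributeValue:
    raw: str                  # what was originally entered
    canonical: Optional[str]  # glossary term, or None if unmapped
    source: str               # provenance: where the raw value came from
    glossary_version: str     # which glossary version produced the mapping


def _normalize(text):
    """Lowercase and collapse whitespace so lookups are forgiving."""
    return " ".join(text.lower().split())


# Reverse index built once: normalized synonym -> canonical term.
SYNONYM_INDEX = {}
for _canonical, _synonyms in GLOSSARY.items():
    SYNONYM_INDEX[_normalize(_canonical)] = _canonical
    for _synonym in _synonyms:
        SYNONYM_INDEX[_normalize(_synonym)] = _canonical


def canonicalize(raw, source):
    """Map a raw value to its canonical term and record provenance."""
    canonical = SYNONYM_INDEX.get(_normalize(raw))
    return AttributeValue(raw, canonical, source, GLOSSARY_VERSION)
```

For example, `canonicalize("Pole-Top  Transformer", "work_order:WO-1")` keeps the raw text, stores “distribution transformer” as the canonical value, and records which source and glossary version were involved. Unmapped values come back with `canonical=None` rather than a guess, which leaves room for a review queue.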

Predictive search isn’t a feature; it’s an operational capability

Amazon’s improved predictive search suggests items in real time as a shopper types. That sounds consumer-focused, but underneath it’s a system that understands intent and matches it to structured entities.

For utilities, “predictive search” shows up in places that directly affect cost and reliability:

- A dispatcher searching outage notes and instantly seeing relevant switching orders

- A planner typing “failed arrester coastal” and seeing the most common compatible parts and past fixes

- A customer typing “bill high” and seeing the right self-serve actions based on their rate plan and usage pattern

The Bing lesson: when data is scarce, structure wins

Agrawal’s earlier work on Microsoft’s Bing is another useful clue. When the team lacked enough user data to train machine learning models, they leaned on deterministic algorithms and structured keyword extraction, then improved relevance through ranking and experimentation.

Utilities often face similar constraints:

- Limited labeled failure data for rare events

- Sparse examples for new DER programs

- New assets without historical sensor patterns

A strong approach is hybrid:

- Use rules and deterministic extraction where you can (high precision, explainable)

- Use ML/LLMs for ranking, summarization, and reconciliation where uncertainty is higher
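The hybrid pattern can be sketched in a few lines: deterministic extraction turns the query into hard filters, and a pluggable scoring function does the ranking. Everything here is illustrative. The field names, the asset vocabulary, and the `token_overlap` scorer (a stand-in for a learned ranker) are assumptions, not part of any system described above.

```python
import re

# Deterministic, explainable extraction rules (hypothetical examples).
KV_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*kv\b", re.IGNORECASE)
ASSET_TERMS = {"arrester", "transformer", "recloser", "cutout"}


def _tokens(text):
    return set(re.findall(r"[a-z]+", text.lower()))


def extract_filters(query):
    """Pull structured filters out of free text using rules only."""
    filters = {}
    match = KV_PATTERN.search(query)
    if match:
        filters["voltage_kv"] = float(match.group(1))
    assets = sorted(_tokens(query) & ASSET_TERMS)
    if assets:
        filters["asset_type"] = assets[0]
    return filters


def token_overlap(query, record):
    """Placeholder scorer; a trained ranking model could replace it."""
    return len(_tokens(query) & _tokens(record.get("notes", "")))


def search(query, records, score=token_overlap):
    """Filter with rules first, then rank what survives."""
    filters = extract_filters(query)
    matches = [
        r for r in records
        if all(r.get(key) == value for key, value in filters.items())
    ]
    return sorted(matches, key=lambda r: score(query, r), reverse=True)
```

With a query like “failed arrester coastal 15 kV”, the rules narrow the candidates to 15 kV arresters before any scoring happens, so the ranker only has to order a small, already-relevant set.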

If you’re waiting for perfect training data before improving search and decision support, you’ll wait forever. Structure first.

What “Catalog AI for the grid” looks like in 2026

Retail AI tends to look like personalization. Utility AI tends to look like forecasting. Both are true, but they’re incomplete. The bigger win is system-wide optimization through shared, clean entities.

Here are three concrete Catalog AI parallels that utilities can act on in the next 6–12 months.

1) Asset data enrichment for predictive maintenance

Catalog AI fills missing product attributes and corrects errors. The grid equivalent is asset profile completion:

- Missing manufacturer/model fields

- Conflicting install dates across systems

- Non-standard component names in work orders

AI can help by:

- Extracting attributes from PDFs (manuals, inspection reports, as-builts)

- Reconciling duplicates (two IDs for the same asset)

- Normalizing terminology (synonyms, abbreviations, legacy codes)
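As one illustration of reconciling duplicates, the sketch below groups asset records from different systems by a normalized key and flags groups whose install dates disagree. Matching on manufacturer plus serial number is an assumption made for the example, as are the field names; real matching rules depend on which identifiers your systems actually share.

```python
from collections import defaultdict


def match_key(record):
    """Build a normalized key so 'ABB'/'abb ' and 'sn-001'/'SN001' collide."""
    manufacturer = "".join(
        ch for ch in record["manufacturer"].lower() if ch.isalnum()
    )
    serial = "".join(ch for ch in record["serial"].upper() if ch.isalnum())
    return (manufacturer, serial)


def find_duplicates(records):
    """Return one report entry per key shared by two or more records."""
    groups = defaultdict(list)
    for record in records:
        groups[match_key(record)].append(record)

    report = []
    for key, members in groups.items():
        if len(members) < 2:
            continue
        install_dates = sorted({m["install_date"] for m in members})
        report.append({
            "key": key,
            "asset_ids": [m["asset_id"] for m in members],
            "install_dates": install_dates,
            # More than one distinct date means the systems disagree.
            "conflict": len(install_dates) > 1,
        })
    return report
```

The output is a review list, not an automatic merge: each entry names the candidate duplicate IDs and shows whether their install dates conflict, so a data steward can decide which record wins.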

The operational payoff is real: predictive maintenance models don’t just need sensor streams; they need trustworthy asset context.

2) Demand forecasting that actually respects customer “entities”

Catalog AI improves discovery because it can map what users type to the right products. Utilities have a similar mapping problem: what a customer is trying to do vs. what the system thinks they’re eligible for.

If you can reliably structure:

- Rate plans

- DER participation

- Building type and heating fuel

- EV ownership signals

…then your demand forecasting and program targeting become less blunt. You stop treating customers as anonymous meters and start treating them as explainable segments.

3) Field operations copilots grounded in standardized language

Agrawal’s system suggests standardized glossary language as sellers type. In field operations, the same concept reduces rework:

- Auto-suggest failure codes based on a short free-text note

- Convert “sparking at cutout” into standardized condition + severity

- Propose parts and procedures based on asset type + symptom + location

The big constraint: a copilot is only as good as the structured data it writes back to. If your work management system can’t store clean entities, the copilot becomes a chat window with no memory.

How to implement this without creating a new data mess

Most companies get this wrong by starting with the model. Start with the workflow and the data contract.

A practical rollout plan (that doesn’t depend on heroics)

- Pick one “catalog” slice with high business pain

  Examples: pole assets in one region, outage cause codes, EV program enrollment.

- Define the minimum viable schema (10–30 attributes)

  Keep it tight: what do you need for search, analytics, and decisions?

- Create a glossary with enforcement points

  Not a PDF. A living service: APIs, dropdowns, validation rules.

- Add AI for enrichment with confidence thresholds

  - High confidence → auto-fill

  - Medium confidence → human review

  - Low confidence → leave blank, log suggestion

- Instrument everything with experimentation

  Agrawal’s A/B experimentation platform is the unsung hero here. In utilities, do the same:

  - Compare search time-to-answer before/after

  - Measure reduction in “other” categories

  - Track truck rolls avoided due to better part matching
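The confidence-threshold step in the rollout plan can be sketched as a small routing function. The cutoffs (0.90 and 0.60) and every name here are hypothetical; in practice the thresholds would be set per attribute and tuned against reviewed samples.

```python
from enum import Enum


class Action(Enum):
    AUTO_FILL = "auto_fill"
    HUMAN_REVIEW = "human_review"
    LOG_ONLY = "log_only"


# Assumed cutoffs for illustration only.
HIGH_CONFIDENCE = 0.90
MEDIUM_CONFIDENCE = 0.60


def route(confidence, high=HIGH_CONFIDENCE, medium=MEDIUM_CONFIDENCE):
    """Decide what to do with an AI-suggested value."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= high:
        return Action.AUTO_FILL
    if confidence >= medium:
        return Action.HUMAN_REVIEW
    return Action.LOG_ONLY


def apply_suggestion(record, field, suggested, confidence, audit_log):
    """Log every suggestion; fill the field only when it is empty and
    the suggestion is high confidence. Returns a new dict."""
    action = route(confidence)
    audit_log.append({
        "field": field,
        "suggested": suggested,
        "confidence": confidence,
        "action": action.value,
    })
    if action is Action.AUTO_FILL and not record.get(field):
        return {**record, field: suggested}
    return record
```

Two choices are worth noting: every suggestion is logged regardless of outcome, which keeps low-confidence proposals available for later analysis, and an existing value is never overwritten automatically.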

Metrics that show whether it’s working

Retail measures conversion. Utilities should measure operational friction. A good starting set:

- Search success rate (did the user find the right asset/work order within N clicks?)

- Data completeness for target attributes (percent filled, percent consistent)

- Mean time to diagnose in field/dispatch workflows

- Repeat work due to wrong part/procedure selection

- Forecast error reduction after adding structured customer/asset features
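The data completeness metric is the easiest of these to start measuring. Below is a minimal sketch, assuming records are plain dictionaries and that “consistent” means “the value is in the glossary’s allowed set”; both function names and that definition of consistency are assumptions for illustration.

```python
def _is_filled(value):
    """Treat None and empty strings as missing."""
    return value not in (None, "")


def completeness(records, attributes):
    """Percent of records with a non-empty value, per attribute."""
    total = len(records)
    result = {}
    for attr in attributes:
        filled = sum(1 for r in records if _is_filled(r.get(attr)))
        result[attr] = 100.0 * filled / total if total else 0.0
    return result


def consistency(records, attribute, allowed):
    """Percent of filled values that match an allowed canonical value."""
    filled = [r[attribute] for r in records if _is_filled(r.get(attribute))]
    if not filled:
        return 0.0
    valid = sum(1 for value in filled if value in allowed)
    return 100.0 * valid / len(filled)
```

Running both functions on the same slice before and after an enrichment pass gives a simple baseline comparison for the target attributes.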

Why this story belongs in an AI in Retail & E-Commerce series

Retail and e-commerce are where AI meets reality: messy inputs, huge catalogs, constant change, and customers who won’t tolerate friction. Catalog AI is a reminder that the most valuable AI work is often invisible—it shows up as fewer dead ends, clearer choices, and faster decisions.

For energy and utilities teams pursuing grid optimization, demand forecasting, and predictive maintenance, the lesson is straightforward: treat your operational data like a product catalog. Build the glossary, enforce the schema at the edge, and let AI do the boring cleanup at scale.

If you’re planning your 2026 roadmap right now, here’s a useful question to pressure-test priorities: Which utility “catalog” (assets, customers, programs, work) would create the biggest downstream lift if it were 20% cleaner by March?