Best TV episodes of 2025 reveal patterns AI can use to personalize recommendations. Learn how critics + data improve discovery and engagement.

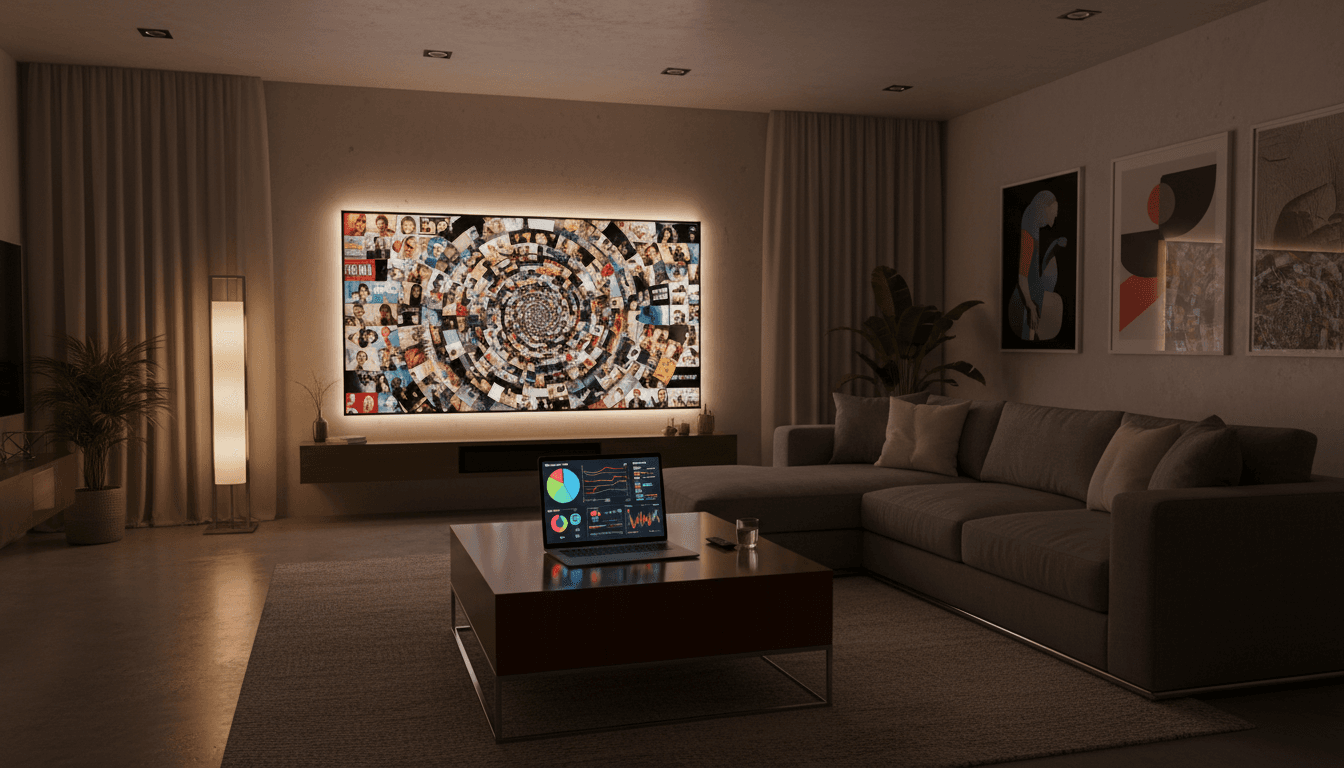

Best TV Episodes of 2025: What AI Sees in Your Favorites

A single great TV episode can do what a whole season sometimes can’t: make you stop scrolling. Critics at The Hollywood Reporter highlighted 10 standouts from 2025—ranging from a pint-sized murder mystery to a searing Spike Lee–directed documentary installment to a bittersweetly hilarious Thanksgiving episode. Different genres, different tones, different audiences… yet people reacted with the same intensity.

That’s the part media teams should pay attention to.

When a list like “best episodes of the year” lands, most streamers treat it as press. Smart teams treat it as data. Critics are effectively publishing a high-signal dataset: what craftsmanship looks like, what themes cut through, and what “must-watch” means when attention is scarce. AI for media and entertainment is at its best when it translates that signal into decisions—recommendations that feel personal, programming that’s easier to market, and creative development that’s grounded in real audience appetite.

This post uses the “best TV episodes of 2025” idea as a practical case study: what patterns consistently show up in top episodes, how AI can detect them at scale, and how streaming platforms and studios can turn those insights into better discovery and higher engagement.

The real value of “best episode” lists (it’s not the list)

Answer first: Year-end “best of” lists matter because they reveal why episodes resonate—information AI can operationalize into recommendation engines and content strategy.

Critics aren’t perfect proxies for audiences, but they’re unusually good at articulating craft reasons behind emotional impact: structure, pacing, performance, theme, and novelty. Those reasons become features—signals that AI models can learn from and use to predict what a viewer might love next.

Here’s what’s happening under the hood when a platform takes this seriously:

- Critic language becomes labeled data. Words like “bittersweet,” “hilarious,” “searing,” and “mystery” are not fluff. They’re sentiment + tone + genre signals.

- Episode-level analysis beats show-level tags. People don’t just like The Bear; they like that episode that left them wrecked (or hungry). Episode granularity increases recommendation accuracy.

- Curation becomes a measurable input, not an editorial vibe. If curated picks correlate with longer watch sessions or higher completion rates, you can quantify curation’s ROI.
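To make the first bullet concrete, here is a minimal sketch of turning critic descriptors into labels. The `DESCRIPTOR_LABELS` vocabulary and the `label_review` function are hypothetical names for illustration. A production system would more likely learn these mappings than hard-code them.

```python
import re

# Hypothetical descriptor vocabulary: word -> (signal type, label).
# Illustrative only; a real vocabulary would be curated or learned.
DESCRIPTOR_LABELS = {
    "bittersweet": ("tone", "bittersweet"),
    "bittersweetly": ("tone", "bittersweet"),
    "hilarious": ("tone", "comedic"),
    "searing": ("tone", "intense"),
    "mystery": ("genre", "mystery"),
    "documentary": ("genre", "documentary"),
}


def label_review(text):
    """Return {signal type: sorted labels} for known descriptors in the text."""
    labels = {}
    for word in re.findall(r"[a-z]+", text.lower()):
        if word in DESCRIPTOR_LABELS:
            kind, value = DESCRIPTOR_LABELS[word]
            labels.setdefault(kind, set()).add(value)
    return {kind: sorted(values) for kind, values in labels.items()}


print(label_review("A bittersweetly hilarious Thanksgiving episode"))
```

Even this crude lookup separates tone signals from genre signals, which is the distinction the rest of this post leans on.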

The punchline: the list is the artifact; the language and reaction around it are the asset.

What made the best TV episodes of 2025 stand out (patterns AI can detect)

Answer first: Top episodes cluster around a few repeatable “engagement drivers”—high-contrast tone, contained premises, social-event viewing, and purposeful discomfort.

The Hollywood Reporter’s summary of the list calls out three types of standouts:

- a pint-sized murder mystery

- a Spike Lee–directed documentary entry

- a bittersweetly hilarious Thanksgiving episode

Those three descriptions already hint at patterns that show up in many “episode of the year” conversations.

1) The “contained premise” episode

A pint-sized murder mystery is a classic example of a self-contained hook. These episodes often:

- start with an immediate question (who did it? what happened?)

- give the viewer frequent progress markers

- reward attention with reveals

Why it wins: contained episodes create a sense of completion and control—perfect for viewers juggling holiday chaos, end-of-year travel, or “one more episode” fatigue.

How AI helps: models can learn which viewers respond to contained arcs and then surface episodes (not just series) that scratch that itch.

2) The “director signature” episode

A Spike Lee–directed doc installment signals authorship. Viewers may not articulate it, but they feel it: rhythm, framing, point of view, intentional discomfort.

Why it wins: signature episodes create cultural conversation. People share them because they feel important, not just entertaining.

How AI helps: AI content analysis can detect stylistic markers (visual tempo, color palettes, shot length distribution, audio intensity) and match them to viewer preferences—without relying on a viewer to know a director’s name.
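As a rough illustration of one stylistic marker, the sketch below summarizes a shot length distribution. It assumes an upstream shot detector (not shown) has already produced cut timestamps in seconds. The function name `shot_length_profile` and its output keys are hypothetical.

```python
from statistics import mean, median


def shot_length_profile(cut_times, duration):
    """Summarize shot lengths given cut timestamps (seconds) and total duration."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    # Keep only distinct cuts that fall strictly inside the episode.
    cuts = sorted({t for t in cut_times if 0 < t < duration})
    boundaries = [0.0] + cuts + [float(duration)]
    # Each shot runs from one boundary to the next.
    shots = [end - start for start, end in zip(boundaries, boundaries[1:])]
    return {
        "shot_count": len(shots),
        "mean_shot_s": mean(shots),
        "median_shot_s": median(shots),
        "cuts_per_minute": len(cuts) / (duration / 60.0),
    }


profile = shot_length_profile([10, 20, 50], duration=60)
print(profile)
```

A profile like this could become one slice of an episode’s feature vector, alongside color and audio features that need heavier tooling to extract.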

3) The “holiday/social viewing” episode

A Thanksgiving episode is an annual pressure test. If it lands, it becomes tradition. If it doesn’t, it disappears.

Why it wins: holiday episodes often mix comedy + pain (families, nostalgia, conflict) and spark group chat reactions.

How AI helps: recommendation engines can incorporate seasonal context (it’s December 2025; viewers are primed for family dynamics, year-end reflection, and comfort viewing) and nudge the right episodes at the right time.
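One simple way to express seasonal context is a small score boost for episodes whose tags match the current calendar window. The windows, tags, and boost value below are assumptions made up for this sketch, not values from any real platform.

```python
from datetime import date

# Hypothetical windows: ((start month, day), (end month, day), tags to boost).
# Windows that cross a year boundary are not handled in this sketch.
SEASONAL_WINDOWS = [
    ((11, 15), (11, 30), {"thanksgiving", "family"}),
    ((12, 1), (12, 31), {"holiday", "family", "comfort"}),
]


def seasonal_boost(episode_tags, today, boost=0.2):
    """Return `boost` if any episode tag matches an active window, else 0.0."""
    month_day = (today.month, today.day)
    for start, end, tags in SEASONAL_WINDOWS:
        if start <= month_day <= end and tags & set(episode_tags):
            return boost
    return 0.0


print(seasonal_boost({"holiday", "comedy"}, date(2025, 12, 20)))
```

The boost is additive and small on purpose: timing should nudge a ranking, not override taste.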

Snippet-worthy truth: The best recommendations aren’t only about taste—they’re about timing.

How AI turns critic picks into better recommendations

Answer first: AI improves TV recommendation engines by combining critic sentiment, episode metadata, and behavioral signals to deliver episode-level personalization.

Most recommendation systems still over-rely on broad tags like “comedy,” “drama,” or “post-apocalyptic.” That’s how you end up with the classic bad rec: “You watched a prestige drama, so here are 40 prestige dramas.”

A stronger approach is multi-signal.

A practical recommendation stack (what works on real platforms)

- Content understanding (what it is):

  - transcripts + subtitles for themes and dialogue density

  - scene detection for pacing and structure

  - audio features for intensity and mood

- Audience response (how people react):

  - completion rate by episode

  - “next episode” start rate

  - rewatch frequency

  - pause/rewind hotspots

- Critical consensus (why it’s praised):

  - critic descriptors (tone, novelty, performance)

  - recurring phrases across reviews (e.g., “bottle episode,” “gut-punch,” “horror-comedy”)

- Context (when it fits):

  - seasonality (holiday weeks, summer weekends)

  - device usage (mobile vs TV)

  - time-of-day viewing patterns

Put together, you can recommend something like:

- “If you liked the bittersweet Thanksgiving chaos vibe, try this other episode with family conflict + dark comedy + confined setting.”

That’s far closer to how humans recommend TV.
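The four signal groups above can be blended in many ways. The simplest is a weighted sum, sketched here. The weights and the per-signal scores (each assumed to be normalized to 0..1 by upstream models) are illustrative assumptions, and `blended_score` and `rank` are hypothetical helpers.

```python
# Illustrative weights for the four signal groups; real values would be tuned.
WEIGHTS = {"content": 0.4, "behavior": 0.3, "critic": 0.2, "context": 0.1}


def blended_score(signals, weights=WEIGHTS):
    """Weighted sum of per-signal scores; a missing signal counts as 0."""
    return sum(w * signals.get(name, 0.0) for name, w in weights.items())


def rank(candidates):
    """Sort candidate episodes by blended score, best first."""
    return sorted(candidates, key=lambda c: blended_score(c["signals"]), reverse=True)


candidates = [
    {"id": "ep_b", "signals": {"content": 0.6, "behavior": 0.9, "critic": 0.2, "context": 0.0}},
    {"id": "ep_a", "signals": {"content": 0.9, "behavior": 0.5, "critic": 0.8, "context": 1.0}},
]
print([c["id"] for c in rank(candidates)])
```

Keeping the critic weight below the content and behavior weights is one way to use critic input as a feature without letting it dominate.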

“People also ask” (and the straight answers)

Can AI recommend TV episodes, not just shows? Yes—episode-level embeddings (vector representations of each episode’s themes, tone, pace, and outcomes) are already a standard approach in modern media ML pipelines.

Will critic data skew recommendations toward prestige picks? Only if you over-weight it. The best systems treat critic input as a high-quality feature, then calibrate with audience behavior so the model doesn’t ignore mainstream tastes.

Does personalization hurt discovery? Bad personalization does. Good personalization includes exploration—intentionally mixing in “adjacent surprises” so users don’t get stuck in a taste bubble.
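Exploration can be as simple as reserving a fixed share of slots for adjacent picks. The sketch below does this deterministically. `mix_with_exploration` is a hypothetical helper, and choosing which episodes count as “adjacent” is assumed to happen elsewhere.

```python
def mix_with_exploration(personalized, adjacent, every=4):
    """Build a slate where every `every`-th slot holds an adjacent pick.

    Personalized items keep their relative order; if the adjacent list
    runs out, the remaining slots are filled with personalized items.
    """
    slate = []
    surprises = iter(adjacent)
    for item in personalized:
        # The next slot to fill is number len(slate) + 1 (1-based).
        if (len(slate) + 1) % every == 0:
            surprise = next(surprises, None)
            if surprise is not None:
                slate.append(surprise)
        slate.append(item)
    return slate


print(mix_with_exploration(["p1", "p2", "p3", "p4", "p5", "p6"], ["a1", "a2"]))
```

A fixed cadence is easy to reason about and to A/B test. Randomized schemes such as epsilon-greedy are a common alternative when you want the exploration rate to adapt.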

How AI finds trends in 2025’s standout episodes (and why creators should care)

Answer first: AI trend analysis spots recurring themes and structures across hit episodes, giving creators and marketers a clearer map of what audiences are responding to.

A “Top 10 episodes” list is small, but it usually aligns with broader signals you can measure across the year: social chatter, completion rates, critic overlap, meme velocity, even subtitle keyword spikes.

Here are trends that commonly surface when you analyze standout TV episodes (and that the critics’ summary hints at):

Trend: tonal contrast beats tonal purity

A “bittersweetly hilarious” episode is a clue. Viewers increasingly reward episodes that can switch modes without losing coherence.

Creator use: plan scenes where comedy doesn’t undercut stakes, it sharpens them.

AI use: train classifiers not just on genre, but on tone shifts—a strong predictor of “I need to tell someone about this.”
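A tone-shift feature does not have to be elaborate. Assuming some upstream model (not shown) scores each scene’s sentiment from -1 to 1, a first-pass feature is the number of large swings between consecutive scenes. The function name and the 0.5 threshold are assumptions for this sketch.

```python
def tone_shifts(scene_sentiment, threshold=0.5):
    """Count consecutive-scene sentiment swings of at least `threshold`."""
    return sum(
        1
        for prev, curr in zip(scene_sentiment, scene_sentiment[1:])
        if abs(curr - prev) >= threshold
    )


# Warm opening, a sharp drop, then a rebound: two large swings.
print(tone_shifts([0.6, 0.7, -0.4, -0.3, 0.5]))
```

A count like this can sit next to genre tags as an input feature, so a classifier can tell a tonally steady comedy from one that turns on a dime.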

Trend: purposeful discomfort travels fast

A “searing” documentary entry suggests intensity and point of view.

Creator use: don’t sand down the edges in the edit. If the thesis is strong, audiences follow.

AI use: detect “intensity arcs” (rising tension, sustained discomfort) and match them to viewers who historically complete challenging content.

Trend: mystery mechanics still work—when the episode is tight

The “pint-sized” framing matters: a small mystery with clean structure.

Creator use: treat mystery like choreography. Every scene should either complicate the question or sharpen it.

AI use: identify episodes with high information gain (new facts per minute) and recommend them to viewers who abandon slow-burn content.
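“New facts per minute” can be approximated once scenes are annotated with the facts they reveal. The sketch below assumes that annotation already exists (by hand or from a model, not shown) and counts each fact only the first time it appears. The fact identifiers are invented for the example.

```python
def facts_per_minute(scenes):
    """scenes: list of (duration in minutes, iterable of fact ids).

    Returns distinct facts revealed per minute of runtime, so a fact that
    is repeated in a later scene is not counted twice.
    """
    seen = set()
    total_minutes = 0.0
    for minutes, facts in scenes:
        seen.update(facts)
        total_minutes += minutes
    return len(seen) / total_minutes if total_minutes else 0.0


episode = [
    (5, {"body_found", "alibi_a"}),
    (10, {"alibi_a", "missing_key"}),  # "alibi_a" is a repeat, not new
    (5, {"confession"}),
]
print(facts_per_minute(episode))
```

This is a crude proxy for information gain, but it is enough to separate tight mysteries from slow burns in a first pass.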

Opinion I’ll stand by: Most “content strategy” decks ignore episode structure. That’s a mistake. Structure is one of the most personal preference signals viewers have.

A playbook for media teams: using AI + curation to drive engagement

Answer first: Pair human curation with AI ranking and you get recommendations that feel trustworthy—and perform measurably better.

If your goal is real business impact, here’s the practical workflow I’ve seen work across streaming, FAST (free ad-supported streaming TV), and broadcaster apps.

Step 1: Build an episode “taste graph”

Create vectors for each episode using:

- transcript themes

- tone and sentiment

- pacing and scene variety

- cast and character focus

- viewer retention curves

This is the foundation for personalized recommendations that don’t feel random.
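Once each episode has a vector, the “graph” is just nearest-neighbor links between vectors. Here is a minimal sketch using cosine similarity. The four-dimensional toy vectors (family conflict, dark comedy, confined setting, mystery) and the episode ids are made up for illustration; real embeddings would have far more dimensions.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(target_id, embeddings, k=2):
    """Return the ids of the k episodes most similar to the target."""
    target = embeddings[target_id]
    scored = [
        (cosine(target, vec), eid)
        for eid, vec in embeddings.items()
        if eid != target_id
    ]
    scored.sort(reverse=True)
    return [eid for _, eid in scored[:k]]


# Toy dimensions: family conflict, dark comedy, confined setting, mystery.
EPISODES = {
    "thanksgiving": [0.9, 0.8, 0.7, 0.0],
    "dinner_party": [0.8, 0.9, 0.9, 0.1],
    "whodunit": [0.1, 0.2, 0.8, 0.9],
    "nature_doc": [0.0, 0.1, 0.0, 0.3],
}
print(most_similar("thanksgiving", EPISODES))
```

At catalog scale you would swap the brute-force loop for an approximate nearest-neighbor index, but the matching logic stays the same.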

Step 2: Treat critic lists as “quality anchors”

Use lists like the 2025 picks as anchors for:

- validating your embeddings (“do similar episodes cluster together?”)

- testing UI collections (“Awarded Episodes,” “Perfect Holiday Chaos,” “One-Night Mysteries”)

- improving search (“searing documentary” should mean something in retrieval)
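For the first check, one quick sanity test is leave-one-out nearest-neighbor agreement: for each anchor episode, does its closest neighbor carry the same critic label? The sketch below uses Euclidean distance via `math.dist` (Python 3.8+). The vectors and labels are toy values invented for the example.

```python
import math


def nearest_neighbor_agreement(vectors, labels):
    """Share of episodes whose nearest neighbor has the same label.

    Needs at least two episodes; 1.0 means every anchor's closest
    neighbor shares its critic label.
    """
    hits = 0
    for eid, vec in vectors.items():
        nearest = min(
            (other for other in vectors if other != eid),
            key=lambda other: math.dist(vec, vectors[other]),
        )
        if labels[nearest] == labels[eid]:
            hits += 1
    return hits / len(vectors)


vectors = {"m1": [0.9, 0.1], "m2": [0.8, 0.2], "h1": [0.1, 0.9], "h2": [0.2, 0.8]}
labels = {"m1": "mystery", "m2": "mystery", "h1": "holiday", "h2": "holiday"}
print(nearest_neighbor_agreement(vectors, labels))
```

A low score suggests the embeddings are not capturing whatever the critics responded to, which is worth knowing before you build rails on top of them.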

Step 3: Ship seasonal rails in December (because timing wins)

December is when “what do we watch tonight?” becomes a daily question.

Collections that tend to convert well:

- Holiday episodes that aren’t cheesy (bittersweet, messy, real)

- One-sitting mysteries (contained premise)

- Talk-worthy docs (director signature, cultural relevance)

Step 4: Measure what matters (not vanity metrics)

Track:

- uplift in episode starts from curated rails

- completion rate changes vs control recommendations

- saves/likes and share actions

- downstream effects (did they start the series? did they return next day?)
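The first two metrics reduce to comparing a rate in the curated group against a control group. A minimal sketch of that arithmetic follows. The counts are invented for the example, and a real test would also need a significance check, which is omitted here.

```python
def rate(successes, total):
    """Fraction of successes, or 0.0 when there were no trials."""
    return successes / total if total else 0.0


def relative_uplift(treatment_rate, control_rate):
    """Relative change of the treatment rate versus the control rate."""
    if control_rate == 0:
        raise ValueError("control rate is zero; relative uplift is undefined")
    return (treatment_rate - control_rate) / control_rate


# Invented counts: episode starts per 1,000 rail impressions.
control_start_rate = rate(100, 1000)  # standard recommendations
curated_start_rate = rate(120, 1000)  # curated seasonal rail
print(f"{relative_uplift(curated_start_rate, control_start_rate):.0%}")
```

The same two functions work for completion rate: feed in completions and starts instead of starts and impressions.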

If you can’t measure it, you can’t improve it.

Where this fits in the “AI in Media & Entertainment” series

The broader theme of this series is simple: AI works best when it improves the viewer experience and respects creative intent. Episode-of-the-year lists are a clean test case because they’re about taste, not tech.

Critics surfaced what made 2025’s best TV episodes stand out: tightly constructed mystery, unmistakable documentary authorship, and holiday comedy that knows life is painful and funny at the same time. AI can’t replace that craft. But it can make sure the right people actually find it—especially during December, when choice overload is at its worst.

If you’re building a media product, here’s the question worth sitting with: Are your recommendations describing shows… or are they matching moments?