AI vs pro Dota showed how constraints shape AI behavior. Learn how those lessons apply to U.S. SaaS, personalization, and automation.

AI vs Pros in Dota: What It Means for U.S. SaaS

OpenAI Five lost both of its mainstage games at The International 2018, and that’s exactly why the story still matters for AI in U.S. digital services. Five kept a realistic chance of winning for the first 20–35 minutes of each match against elite Dota 2 professionals, then got outmaneuvered late. That arc of strong early performance followed by late-stage breakdowns under pressure looks a lot like what happens when companies ship AI features that shine in demos but stumble in messy, real-world edge cases.

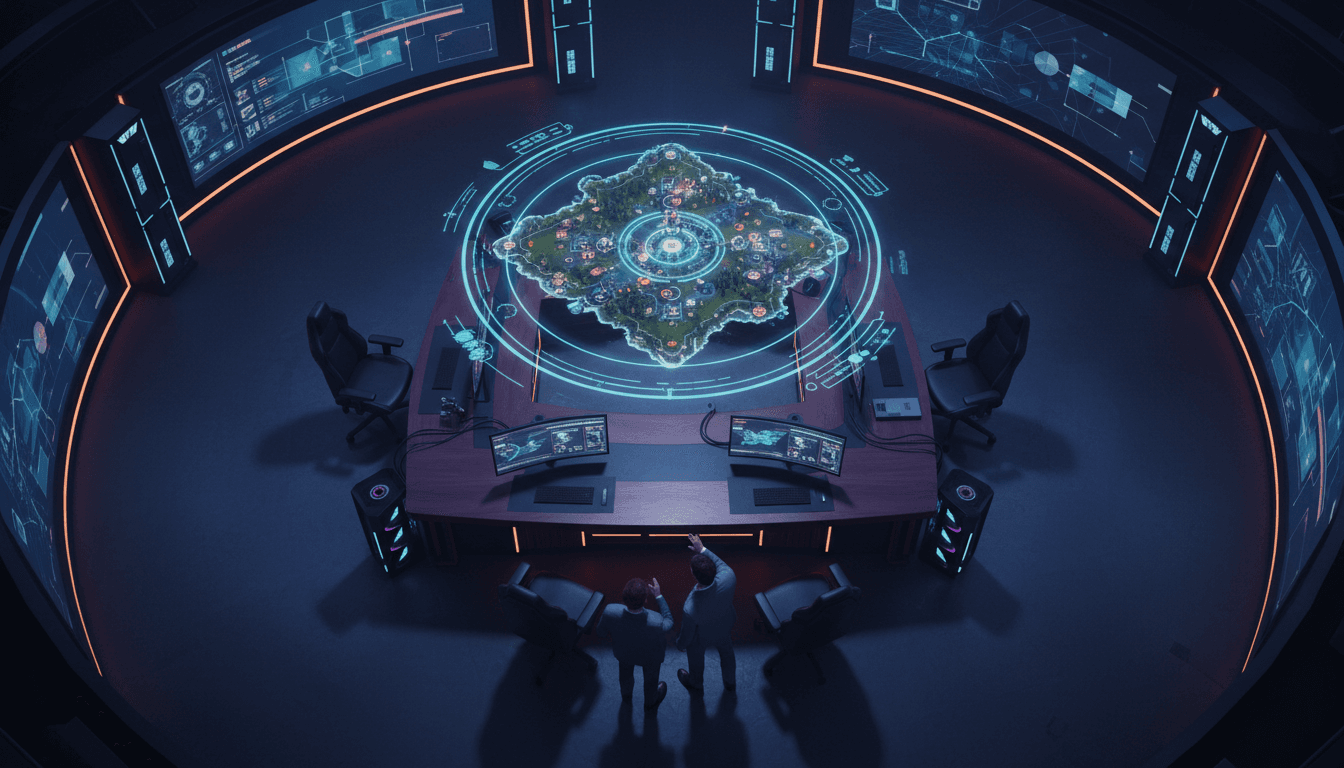

For our AI in Media & Entertainment series, this is one of the clearest “origin stories” for modern AI decision-making in complex environments. Dota 2 isn’t just a game; it’s a fast-moving system with incomplete information, long time horizons, coordination costs, and adversarial opponents. Those are the same forces at work in content platforms, streaming operations, ad delivery systems, customer messaging, and SaaS workflows.

Here’s the stance I’ll take: the most useful lessons from OpenAI Five aren’t about winning. They’re about constraint design, training feedback loops, and how to translate “AI that can act” into “AI that can operate.”

Why Dota 2 is a serious testbed for applied AI

Dota 2 is a practical benchmark because it forces AI to do more than pattern-match. It requires sequencing decisions across minutes and dozens of micro-interactions, then aligning those choices with a strategy that only pays off later.

In the TI 2018 matches, OpenAI Five faced:

- Much stronger humans than earlier benchmark games

- Third-party hero lineups (less opportunity to engineer favorable drafts)

- A ruleset closer to what pros call “Real Dota”, including a key change: moving from one courier per hero to a single, shared, mortal courier

For business readers, translate that as: the AI moved from a controlled environment to something closer to production.

The “20–35 minute” lesson: early dominance doesn’t equal robustness

Five’s ability to keep games competitive for 20–35 minutes is meaningful because it shows the system had learned:

- lane pressure and resource control

- coordinated skirmishes and team-fight mechanics

- tempo-driven play (keep the opponent reacting)

But late-game Dota punishes brittle assumptions: one misread of the map, one badly timed fight, one missed objective trade, and the game flips.

That’s also how AI features fail in SaaS: the model performs well on common flows, then breaks during handoffs, escalation paths, unusual user behavior, or adversarial inputs.

The courier change: a masterclass in constraints and unintended incentives

The most business-relevant detail from the TI 2018 results isn’t the scoreboard. It’s the rule change.

Earlier, OpenAI Five operated with an unusually forgiving setup: each hero had its own invulnerable courier. That meant a constant stream of regeneration items, enabling nonstop aggression. Community feedback was blunt: it didn’t feel like “Real Dota.” So the team dropped that concession and switched to a single courier, which left only six days of training under the new rule before the TI matches.

Here’s the useful takeaway in one line:

AI becomes what you reward and enable—whether you meant to or not.

What this maps to in U.S. tech and SaaS

Constraints are everywhere in product AI:

- An AI support agent that’s allowed to issue refunds automatically will “learn” a different policy than one that must escalate.

- A recommendation engine optimized purely for watch time will behave differently than one constrained by diversity, novelty, or safety.

- A marketing automation model rewarded for clicks will produce different messaging than one rewarded for qualified pipeline.

The courier change is a clean example of incentive drift. Give the system easy resupply, and it builds a high-pressure identity. Remove it, and you find out what the system really understands.
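To make the recommendation example concrete, here is a minimal sketch of how one constraint changes what a ranker does. It is a toy greedy re-ranker, not any platform’s real algorithm: the catalog, the `watch_minutes` predictions, and the `diversity_weight` knob are all hypothetical values chosen for illustration.

```python
# Toy catalog: titles, genres, and predicted watch minutes are made up.
CATALOG = [
    {"title": "A", "genre": "action", "watch_minutes": 50},
    {"title": "B", "genre": "action", "watch_minutes": 48},
    {"title": "C", "genre": "docs", "watch_minutes": 40},
    {"title": "D", "genre": "comedy", "watch_minutes": 35},
]


def rerank(items, diversity_weight):
    """Greedy ranking: predicted watch time minus a penalty per repeated genre."""
    remaining = list(items)
    ranked = []
    genre_counts = {}
    while remaining:
        best = max(
            remaining,
            key=lambda it: it["watch_minutes"]
            - diversity_weight * genre_counts.get(it["genre"], 0),
        )
        ranked.append(best["title"])
        genre_counts[best["genre"]] = genre_counts.get(best["genre"], 0) + 1
        remaining.remove(best)
    return ranked


print(rerank(CATALOG, diversity_weight=0))   # ['A', 'B', 'C', 'D']
print(rerank(CATALOG, diversity_weight=20))  # ['A', 'C', 'D', 'B']
```

Same model scores, different rules, different behavior: with the penalty switched on, the second action title drops to the bottom of the list.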

Actionable constraint checklist (what I’d do before shipping)

If you’re building AI into a digital service—especially anything customer-facing—treat “rules” like a first-class product surface:

- List the permissions: what can the AI do without a human (refund, publish, email, recommend, route, change settings)?

- Define failure costs: what’s an acceptable error rate when the downside is reputational or legal?

- Add friction intentionally: rate limits, approval thresholds, safe templates, and sandbox modes.

- Measure behavior shift after constraint changes (don’t assume it’s minor).
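One way to treat rules as a product surface is to write them down as data instead of burying them in prompts. The sketch below is one possible shape, assuming a hypothetical policy table: the action names, the $50 refund threshold, and the hourly limits are invented for illustration, and anything not listed is denied by default.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"
    DENY = "deny"


@dataclass(frozen=True)
class ActionRule:
    autonomous: bool          # may the AI act without a human?
    max_amount: float = 0.0   # approval threshold, e.g. refund dollars
    hourly_limit: int = 0     # rate limit on autonomous uses


# Hypothetical policy table: names and limits are illustrative only.
POLICY = {
    "recommend": ActionRule(autonomous=True, hourly_limit=1000),
    "refund": ActionRule(autonomous=True, max_amount=50.0, hourly_limit=20),
    "change_settings": ActionRule(autonomous=False),
}


def decide(action: str, amount: float, used_this_hour: int) -> Decision:
    """Return whether the AI may act, must hand off to a human, or is blocked."""
    rule = POLICY.get(action)
    if rule is None:
        return Decision.DENY          # unlisted actions are never allowed
    if not rule.autonomous:
        return Decision.ESCALATE      # always needs a human
    if amount > rule.max_amount or used_this_hour >= rule.hourly_limit:
        return Decision.ESCALATE      # over the threshold or rate limit
    return Decision.ALLOW


print(decide("refund", 20.0, used_this_hour=3))    # Decision.ALLOW
print(decide("refund", 200.0, used_this_hour=3))   # Decision.ESCALATE
```

Keeping the table in one place also makes the last checklist item easier: a change to `POLICY` is a visible diff you can review and measure against.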

What the TI 2018 matches reveal about training loops

At The International 2018, OpenAI Five played:

- a match against paiN Gaming (top-18 caliber at the event), lasting about 51 minutes

- a match against a team of Chinese superstar players, lasting about 45 minutes

The pros had deep experience—average career earnings cited around $350,000 for paiN players and about $1 million for the all-star group. That isn’t trivia; it signals Five wasn’t farming easy opponents. It was being tested against people whose edge comes from adaptation, drafting intuition, and reading pressure.

The business translation: your AI needs “hard opponents,” not friendly test cases

Most organizations validate AI with:

- internal users who know the system’s quirks

- clean historical data

- happy-path prompts

Then they’re surprised when the real world behaves like a TI mainstage: adversarial, chaotic, and unforgiving.

If you’re serious about AI in customer communication, content personalization, or workflow automation, you need evaluation that feels like elite play:

- red-team prompts and abuse cases

- distribution shift (new customer segments, new seasons, new products)

- latency pressure (real-time decisions)

- multi-agent complexity (handoffs between sales, support, billing)

From esports to media & entertainment AI: the shared mechanics

AI in media & entertainment is often described in terms of “recommendations” and “personalization,” but the hardest problems look a lot like Dota:

- Partial information: you never fully know why a user churns or converts.

- Long horizons: a decision today changes what a user does next week.

- Team coordination: content ops, marketing, product, and support all influence outcomes.

- Adversarial dynamics: fraud, spam, bots, and incentive gaming.

Where OpenAI Five’s lessons show up in entertainment platforms

1) Recommendation engines and exploration

A Dota bot must explore strategies; a content platform must explore content options. If you over-optimize for short-term clicks, you’ll get shallow satisfaction and long-term churn.

2) Real-time decision systems

Dota punishes late reactions. Live sports streaming, ad auctions, and personalized homepages do too. If your AI can’t operate under timing constraints, it’s not production-ready.

3) Safe sandboxing

OpenAI framed Dota as a safe sandbox for progress. Media companies need the same mindset: test AI behaviors in limited audiences, staged rollouts, and clearly bounded permissions.

Practical applications: how to use “Dota thinking” to improve your AI stack

If you’re running a U.S.-based SaaS or digital service and trying to translate AI hype into reliable outcomes, focus on three things: feedback, constraints, and late-game reliability.

1) Build feedback loops that improve the model, not just the dashboard

A lot of teams collect thumbs-up/down and call it done. The stronger approach is closing the loop:

- Route negative outcomes into labeled queues

- Categorize failures (hallucination, policy violation, wrong tool, wrong intent)

- Retrain or re-rank with an explicit goal tied to business value

In Dota terms: don’t just watch replays—change the training.
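The first two steps of that loop can be sketched in a few lines. This assumes a hypothetical event format (dicts with `id`, `outcome`, and an optional `category`), and the four category names simply mirror the list above. Anything negative without a known category lands in a `needs_label` queue for a human to classify.

```python
from collections import Counter, defaultdict

# Category names mirror the failure types listed above.
FAILURE_CATEGORIES = {"hallucination", "policy_violation", "wrong_tool", "wrong_intent"}


def triage(events):
    """Route negative outcomes into per-category queues of event ids."""
    queues = defaultdict(list)
    for event in events:
        if event["outcome"] != "negative":
            continue
        category = event.get("category")
        if category not in FAILURE_CATEGORIES:
            category = "needs_label"  # a human has to classify this one
        queues[category].append(event["id"])
    return dict(queues)


def top_failure(queues):
    """Return the labeled category with the most failures, or None."""
    counts = Counter(
        {name: len(ids) for name, ids in queues.items() if name != "needs_label"}
    )
    return counts.most_common(1)[0][0] if counts else None


events = [
    {"id": 1, "outcome": "negative", "category": "wrong_tool"},
    {"id": 2, "outcome": "positive"},
    {"id": 3, "outcome": "negative", "category": "wrong_tool"},
    {"id": 4, "outcome": "negative", "category": "hallucination"},
    {"id": 5, "outcome": "negative"},
]
queues = triage(events)
print(queues)               # {'wrong_tool': [1, 3], 'hallucination': [4], 'needs_label': [5]}
print(top_failure(queues))  # wrong_tool
```

The retraining or re-ranking step is deliberately left out, since it depends on your stack. The point is that the biggest queue tells you where to aim it.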

2) Evaluate “late game” scenarios explicitly

Five looked strong early, then lost later. Your AI might also look great in first-touch interactions and fail in escalations.

Test:

- multi-step threads (10+ turns)

- handoff to a human and back

- edge-case billing or compliance scenarios

- context overload (multiple products, multiple users, conflicting instructions)

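A simple metric I like: task completion rate after the 6th step, meaning the share of tasks that still finish successfully among those that run longer than six steps. Many systems fall apart past that point.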
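Here is a rough sketch of how that metric could be computed from task logs. I’m assuming one reading of it (completion rate among tasks that ran longer than six steps) and a hypothetical log format with `steps` and `completed` fields; adapt both to whatever your system records.

```python
def completion_rate_after(step, tasks):
    """Share of completed tasks among those that ran longer than `step` steps.

    Returns None when no task got that far, so a missing sample is not
    mistaken for a 0% completion rate.
    """
    long_tasks = [t for t in tasks if t["steps"] > step]
    if not long_tasks:
        return None
    completed = sum(1 for t in long_tasks if t["completed"])
    return completed / len(long_tasks)


# Hypothetical task log: field names and values are illustrative.
tasks = [
    {"steps": 3, "completed": True},
    {"steps": 7, "completed": True},
    {"steps": 9, "completed": False},
    {"steps": 12, "completed": False},
    {"steps": 8, "completed": True},
]

print(completion_rate_after(6, tasks))  # 0.5
print(completion_rate_after(0, tasks))  # 0.6
```

Comparing the two numbers is the useful part: a gap between the overall rate and the past-step-six rate is your version of losing the late game.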

3) Use constraint updates as major version changes

OpenAI trained with the single courier for only six days before TI. In software terms, that’s a risky production cutover.

For SaaS teams:

- Treat policy/permission changes as major releases

- Run A/B tests with rollback plans

- Expect behavior shifts and temporarily reduced performance

Constraint changes aren’t “config tweaks.” They reshape what the system learns to do.
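If you want a number for “expect behavior shifts,” one simple option is to compare the mix of actions the system takes before and after a constraint change. The sketch below uses total variation distance between the two action distributions. The action logs and the 0.2 review threshold are hypothetical; pick a threshold that matches your own risk tolerance.

```python
from collections import Counter


def action_shares(actions):
    """Convert a list of action names into a {action: share} distribution."""
    counts = Counter(actions)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {action: n / total for action, n in counts.items()}


def behavior_shift(before, after):
    """Total variation distance between two action logs (0 = same, 1 = disjoint)."""
    p, q = action_shares(before), action_shares(after)
    return 0.5 * sum(abs(p.get(a, 0.0) - q.get(a, 0.0)) for a in set(p) | set(q))


# Hypothetical logs from before and after tightening a refund permission.
before = ["refund"] * 6 + ["escalate"] * 4
after = ["refund"] * 2 + ["escalate"] * 8

REVIEW_THRESHOLD = 0.2  # illustrative cutoff, not a recommended value
shift = behavior_shift(before, after)
print(round(shift, 3))            # 0.4
print(shift > REVIEW_THRESHOLD)   # True
```

A shift that large after what looked like a small rule change is exactly the signal that the update deserved release-level review and a rollback plan.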

People also ask: what does “AI learned from scratch” mean for business?

It means the system can discover strategies that weren’t explicitly programmed, but it also means it can pick up behaviors you didn’t anticipate.

In business settings, that translates to two non-negotiables:

- you need clear objectives (what outcome are you rewarding?)

- you need guardrails (what must never happen?)

If you only define success and ignore boundaries, the AI will optimize in ways that surprise you.

Where this leaves AI in U.S. digital services in late 2025

Seven years after TI 2018, the big shift is that AI isn’t confined to research demos. It’s embedded in customer support, sales development, content operations, analytics, and personalization systems across U.S. SaaS.

But the TI lesson still holds: competitive performance in a complex environment comes from training loops and constraint design, not magic. If OpenAI Five could stay competitive against top pros for 20–35 minutes, your organization can absolutely build AI that handles real workflows, provided you test it like the world is trying to break it.

If you’re working on AI-driven marketing automation, customer communication, or media personalization, the next step is straightforward: define what “Real Dota” means in your product. What constraints make the system honest? What evaluation makes it robust? And what would it take to trust it when the game goes late?