OpenAI Five’s TI 2018 losses show how AI learns under pressure. Apply the same lessons to AI automation, personalization, and digital services.

When AI Loses at Dota, Digital Services Still Win

Most people misread AI milestones because they focus on the final score.

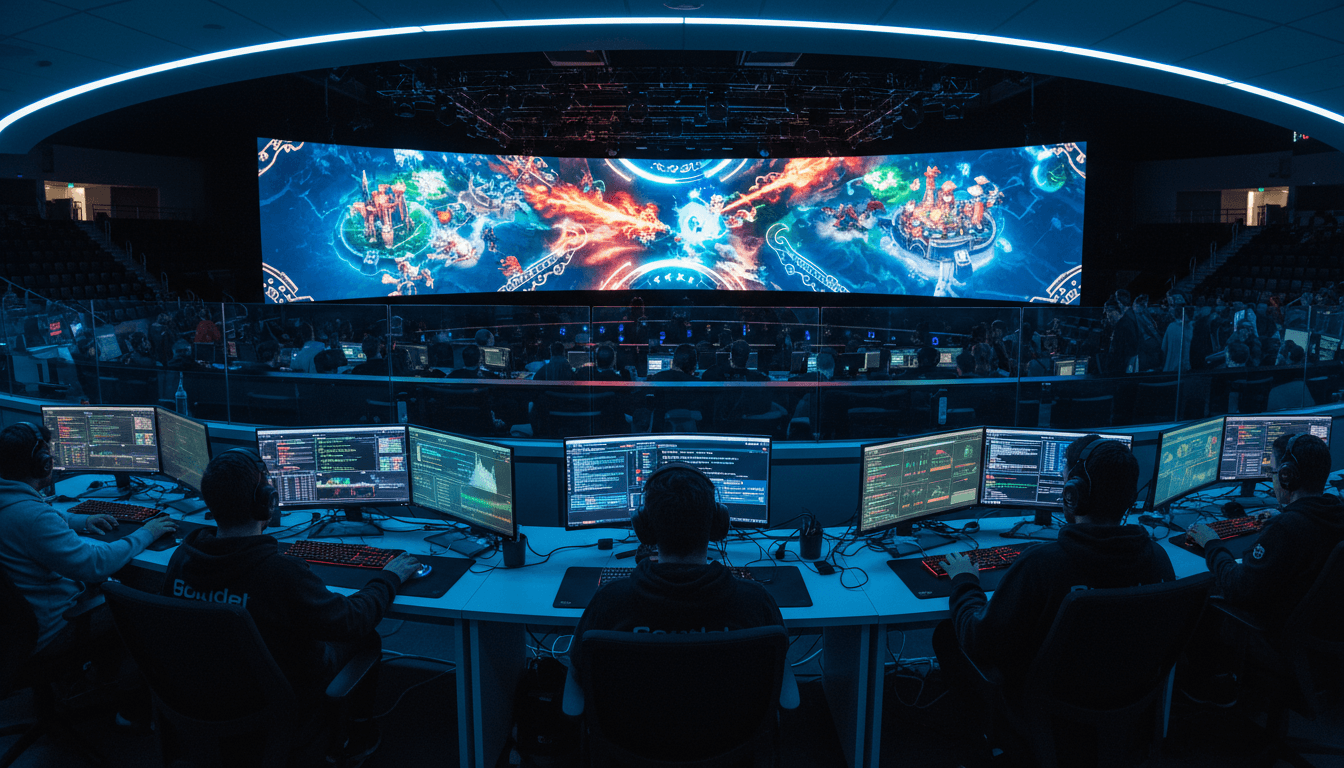

At The International 2018 in Vancouver, OpenAI Five (a self-taught Dota 2 system) lost two showcase matches—yet it still held a realistic chance to win for 20–35 minutes in each game against elite professional players. That detail matters more than the loss column, because it’s exactly what we care about in modern U.S. digital services: Can an AI system stay competent under pressure, adapt to changing conditions, and recover after setbacks?

This post is part of our “AI in Media & Entertainment” series, where we track how AI changes the way people watch, play, create, and engage. A Dota match on a mainstage might look like “just esports,” but it’s also a clean case study in AI decision-making in dynamic environments—the same kind of environment your customer support stack, recommendation engine, fraud tooling, or marketing automation faces every day.

The real result: AI performed under pro-level uncertainty

OpenAI Five’s biggest achievement at TI 2018 wasn’t domination. It was sustained competence in a chaotic, adversarial system.

In the earlier Benchmark matches (17 days before TI), OpenAI Five played games that were lopsided—either it rolled opponents or got exposed. At TI, the matches were closer and more strategically rich because three major things changed:

- Much stronger opponents: Five faced professional players (one team among the top 18 globally, and another made of Chinese superstar veterans).

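- Third-party hero lineups: a third party chose the heroes, so Five didn’t get to draft against its human opponents.

- A key “Real Dota” restriction removed: the per-hero invulnerable couriers were replaced with a single mortal team courier, moving the match rules closer to standard competitive play.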

That’s a familiar pattern in U.S. product teams: models look great in friendly evaluations, then you ship to production and discover reality includes edge cases, new behaviors, and adversarial users.

Why “20–35 minutes of winning chances” is a big deal

If you’ve worked on AI-powered digital services, you know the hard part isn’t a single good prediction. It’s maintaining performance across a full sequence of decisions, where each choice reshapes the next state.

A Dota game is basically a stress test for:

- Real-time decision loops

- Partial information

- Team coordination

- Strategy shifts and counter-strategy

- Mistakes that compound and get punished

In other words: exactly the conditions that break brittle automation.

What the courier rule change teaches product teams

One of the most practical lessons from TI 2018 is that small operational constraints can redefine an AI’s behavior.

In the Benchmark games, each hero had its own invulnerable courier, which meant items and regeneration arrived constantly. OpenAI Five developed a signature “high-pressure” style—keep pushing, keep fighting—because it could sustain aggression without the usual retreat-and-heal cost.

Pros criticized this because it didn’t feel like “Real Dota.” So OpenAI switched to a single (mortal) team courier, closer to standard gameplay. They trained under that rule set for only six days before TI.

The product parallel: incentives and constraints shape AI outcomes

If you want a crisp takeaway for AI in digital services, it’s this:

AI systems don’t “learn your business.” They learn the rules you actually enforce.

Examples that mirror the courier shift:

- Customer support automation: If your bot is rewarded for low handle time, it’ll rush and deflect. If it’s rewarded for resolution quality, it’ll slow down and ask clarifying questions.

- Recommendation engines in streaming and social: If you optimize only for watch time, the system tends to drift toward more sensational content. If you also incorporate satisfaction signals (like “not interested” clicks or churn risk), it behaves differently.

- Marketing automation: If the success metric is clicks, you’ll get clicky subject lines and short-term spikes. If the metric is qualified pipeline or retention, the system behaves more conservatively.

Operational constraints—rate limits, handoffs, escalation rules, data freshness, latency budgets—are the “courier rules” of SaaS.
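To make the reward point concrete, here is a minimal sketch in Python. The policy names and numbers are hypothetical and exist only for illustration; the point is that the same selection code picks a different behavior as soon as the reward function changes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    avg_handle_minutes: float  # lower means faster conversations
    resolution_rate: float     # share of tickets actually resolved (0..1)


# Hypothetical candidate behaviors with made-up numbers.
POLICIES = [
    Policy("rush_and_deflect", avg_handle_minutes=2.0, resolution_rate=0.55),
    Policy("ask_clarifying_questions", avg_handle_minutes=6.0, resolution_rate=0.85),
]


def reward_speed(p: Policy) -> float:
    """Reward low handle time only."""
    return -p.avg_handle_minutes


def reward_resolution(p: Policy) -> float:
    """Reward resolution quality only."""
    return p.resolution_rate


def best_policy(policies, reward):
    """Return the policy that scores highest under the given reward."""
    return max(policies, key=reward)


print(best_policy(POLICIES, reward_speed).name)       # rush_and_deflect
print(best_policy(POLICIES, reward_resolution).name)  # ask_clarifying_questions
```

Nothing about the candidate policies changed between the two calls. Only the rule being enforced did.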

Why losing to pros is useful (and how to use losses in production)

Those TI matches delivered something executives and engineering leaders often skip: high-quality failure analysis.

OpenAI Five didn’t lose because it forgot basic mechanics. It lost after long, competitive games where humans executed high-level pushes, teamfight timing, and strategic traps. That’s exactly the kind of loss you want to study because it reveals:

- Which failure modes only appear under expert pressure

- Where coordination breaks down

- How the system behaves when the environment stops being forgiving

A practical framework: treat your AI like a competitor, not a feature

I’ve found teams get better results when they treat an AI system as an “agent” operating in a hostile world. That means instrumenting it like you would instrument a competitive system.

Here’s a concrete approach you can apply to AI-powered customer communication tools, personalization, or automation platforms:

1. Define “game time phases” for your product

   - Early phase: onboarding, first session, first ticket

   - Mid phase: repeat usage, multi-step workflows

   - Late phase: escalations, renewals, churn moments

2. Measure “kept winning chances,” not just outcomes

   - Support: percentage of conversations that stay on track until human handoff

   - Personalization: sessions where recommendations remain relevant after 5–10 interactions

   - Marketing ops: workflows that stay compliant and accurate across changing inputs

3. Record the exact context around failures

   - What signals did the model see?

   - What did it choose?

   - What changed after the choice?

4. Run “pro player” tests

   - Red-team prompts for LLM workflows

   - Adversarial users for fraud or policy

   - Power users trying to break automations

This is how you turn losses into roadmaps.
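One way to instrument this is sketched below in Python. The `Decision` and `Session` types, the `on_track` flag, and the `kept_on_track_rate` function are all assumptions made for illustration, not an established schema. Each decision records what the model saw, what it chose, and what changed afterward, and the metric counts sessions that stayed on track for a minimum number of steps.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    phase: str      # "early", "mid", or "late"
    signals: dict   # what the model saw
    action: str     # what it chose
    outcome: str    # what changed after the choice
    on_track: bool  # judged by a reviewer or a heuristic (assumed to exist)


@dataclass
class Session:
    session_id: str
    decisions: list = field(default_factory=list)

    def steps_on_track(self) -> int:
        """Count consecutive on-track decisions from the start of the session."""
        count = 0
        for decision in self.decisions:
            if not decision.on_track:
                break
            count += 1
        return count

    def first_failure(self):
        """Return the first off-track decision, or None if there is none."""
        for decision in self.decisions:
            if not decision.on_track:
                return decision
        return None


def kept_on_track_rate(sessions, min_steps: int) -> float:
    """Share of sessions that stayed on track for at least min_steps decisions."""
    if not sessions:
        return 0.0
    kept = sum(1 for s in sessions if s.steps_on_track() >= min_steps)
    return kept / len(sessions)


sessions = [
    Session("a", [
        Decision("early", {"intent": "billing"}, "answer", "user confirmed", True),
        Decision("mid", {"intent": "billing"}, "answer", "user confirmed", True),
    ]),
    Session("b", [
        Decision("early", {"intent": "unknown"}, "answer", "user repeated question", False),
    ]),
]

print(kept_on_track_rate(sessions, min_steps=2))  # 0.5
failure = sessions[1].first_failure()
print(failure.signals, failure.action, failure.outcome)
```

The rate plays the role of “minutes with a realistic chance to win,” and `first_failure` gives you the context to review when a session goes wrong.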

From Dota to U.S. digital services: the shared engine is self-improving learning

OpenAI Five matters for the broader U.S. technology ecosystem because it demonstrates a scalable idea: systems that can train themselves in complex environments and keep improving.

In media & entertainment, the same principles show up everywhere:

- Game AI and NPC behavior: More adaptive opponents and more believable cooperative teammates.

- Audience analytics: Models that learn changing tastes (especially across seasonal spikes like holiday releases and year-end campaigns).

- Content recommendation: Better session-to-session personalization without manual rule tuning.

- Moderation and safety: Faster adaptation to new abuse patterns without rewriting policies every week.

Outside entertainment, those same mechanisms underpin:

- AI-powered customer support automation

- Sales and marketing workflow optimization

- Real-time fraud detection

- Operations forecasting and routing

The “scripted logic” lesson

OpenAI also noted it still had “the last pieces of scripted logic” to remove. That’s another underappreciated product insight.

Scripted logic is tempting because it patches a gap quickly. But in agentic systems, scripts often become:

- Hard-to-debug constraints

- Hidden sources of brittleness

- A reason the model fails in novel scenarios

A good standard in AI product work is: use scripts for guardrails and compliance, not to fake competence. If the model needs a script to do the core job, it’s not ready for that job.
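A rough sketch of that distinction in Python: the guardrail below only checks the model’s proposal against explicit rules and escalates when a rule is broken. It never substitutes its own answer for the core task. The action names and the refund limit are hypothetical.

```python
# Hypothetical allow-list and limit, for illustration only.
ALLOWED_ACTIONS = {"answer", "ask_clarifying_question", "issue_refund", "escalate_to_human"}
MAX_REFUND_USD = 50.0


def apply_guardrails(proposal: dict) -> dict:
    """Pass the model's proposal through unchanged unless it breaks a rule."""
    action = proposal.get("action")
    if action not in ALLOWED_ACTIONS:
        return {"action": "escalate_to_human", "reason": f"action not allowed: {action}"}
    if action == "issue_refund" and proposal.get("amount_usd", 0.0) > MAX_REFUND_USD:
        return {"action": "escalate_to_human", "reason": "refund above limit"}
    return proposal


print(apply_guardrails({"action": "answer", "text": "Your invoice is attached."}))
print(apply_guardrails({"action": "issue_refund", "amount_usd": 500.0}))
print(apply_guardrails({"action": "delete_account"}))
```

If the guardrail ends up escalating most requests, that is a sign the model is not yet doing the core job, which is the situation the standard above warns about.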

People also ask: what does this mean for AI personalization and automation?

Does gaming AI translate to real business problems?

Yes, because the shared challenge is decision-making under uncertainty. The TI games compressed it into 45–51 minutes. Digital services stretch it across weeks or months, but the feedback loops, adversarial behavior, and compounding errors are the same in kind.

Why are “close losses” better than “easy wins” for model development?

Because close losses reveal where competence ends. Easy wins often reflect a weak opponent, a friendly evaluation, or a loophole in the rules—none of which survive production.

What’s the simplest way to apply this to a SaaS team?

Start by defining:

- One high-stakes workflow (billing disputes, password recovery, cancellations)

- One success metric (resolution rate, time-to-resolution, retention)

- One adversarial test plan (edge cases, weird inputs, policy conflicts)

Then iterate weekly with tight instrumentation.
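As a starting point, the adversarial test plan can be as small as a list of awkward inputs with expected actions. The Python sketch below uses a deliberately naive, hypothetical `handle_request` function as a stand-in for the real workflow. The cases and action names are assumptions for illustration.

```python
def handle_request(text: str) -> str:
    """Stand-in for the real workflow; hypothetical and deliberately naive."""
    cleaned = text.strip().lower()
    if not cleaned:
        return "ask_clarifying_question"
    if "cancel" in cleaned and "refund" in cleaned:
        return "escalate_to_human"
    if "cancel" in cleaned:
        return "start_cancellation"
    return "ask_clarifying_question"


# (input, expected action) pairs: edge cases, weird inputs, policy conflicts.
ADVERSARIAL_CASES = [
    ("", "ask_clarifying_question"),                        # empty input
    ("   CANCEL   ", "start_cancellation"),                 # odd casing and spacing
    ("cancel and refund everything now", "escalate_to_human"),  # policy conflict
    ("don't cancel my plan", "ask_clarifying_question"),    # negation
]


def run_plan(handler, cases):
    """Return the pass rate and a list of (input, expected, actual) failures."""
    failures = []
    for text, expected in cases:
        actual = handler(text)
        if actual != expected:
            failures.append((text, expected, actual))
    pass_rate = 1 - len(failures) / len(cases)
    return pass_rate, failures


pass_rate, failures = run_plan(handle_request, ADVERSARIAL_CASES)
print(pass_rate)  # 0.75
print(failures)   # the negation case fails: the naive handler starts a cancellation
```

The failing negation case is the useful part: it is the kind of loss that only shows up when someone tests the workflow the way a difficult user would.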

What to do next if you’re building AI-powered digital services

If you want AI in digital services to perform like it did on the TI stage—competent under pressure—focus less on flashy demos and more on operational discipline.

Here’s a short checklist that works across personalization, customer communication tools, and automation:

- Make constraints explicit: rate limits, latency, escalation rules, allowed actions.

- Treat “near-miss” sessions as gold: cases where the system almost succeeded are your best training data.

- Test against experts: power users, internal red teams, and domain specialists.

- Align rewards to long-term outcomes: retention, satisfaction, compliance—not just speed or clicks.

AI in media & entertainment is often the first place we see these dynamics in public—because games are measurable and competitive. The business payoff comes when we apply the same lessons to U.S. digital services where reliability and trust matter.

OpenAI Five didn’t win at The International 2018. But it proved something more useful: an AI can learn complex behavior well enough to challenge experts—and that’s the foundation for AI systems that improve customer experiences, automate operations, and adapt in real time.

If AI can hold its own for 20–35 minutes against pros in a chaotic esport, what would it take for your product to hold up just as well against real users who don’t behave like your test set?