Build insurance AI dashboards that explain model output, drive decisions, and improve trust in underwriting and claims analytics.

Insurance AI Dashboards: Turn Model Output Into Action

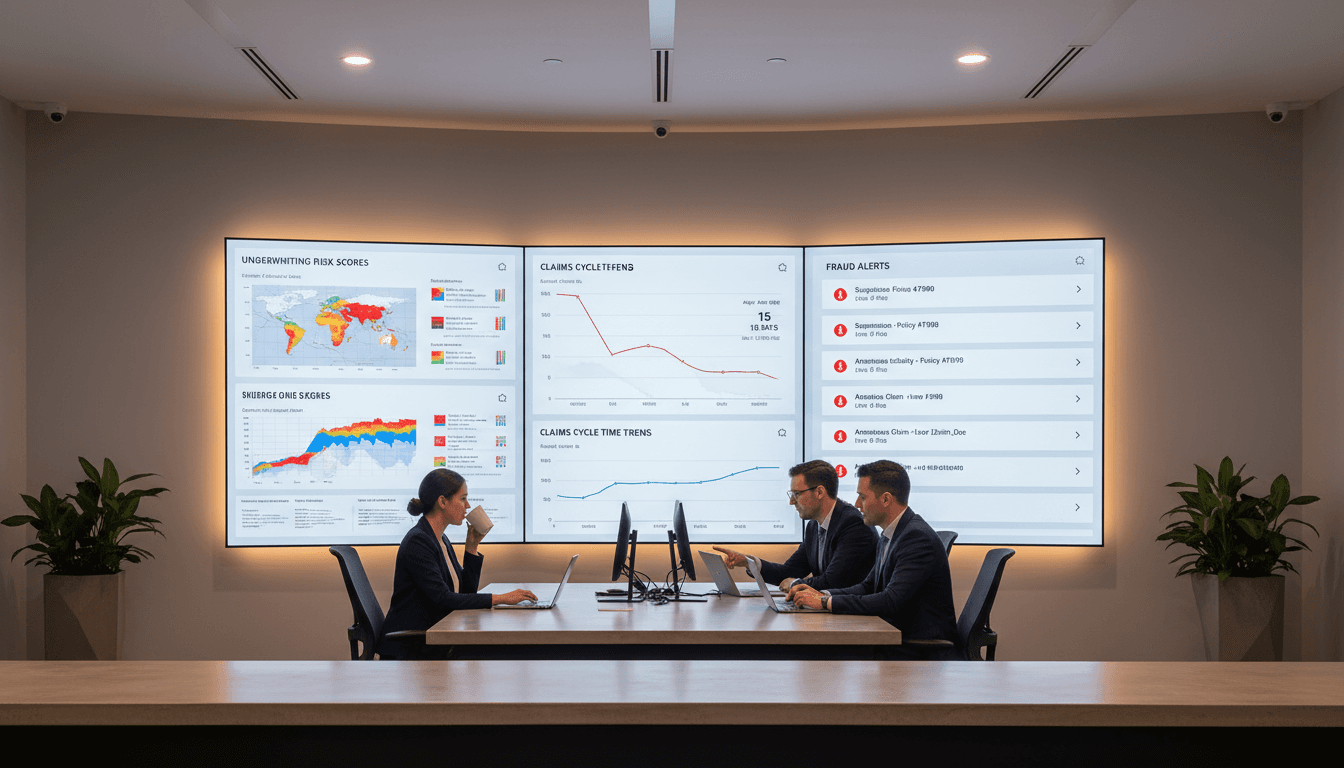

A surprising number of insurance AI initiatives fail for a simple reason: the models work, but the business can’t see what to do next. Underwriters don’t trust the risk score. Claims leaders can’t explain why automation rates fell this month. Distribution managers know “engagement is down,” but not which products, which segments, or which channels are driving it.

That gap isn’t an “AI problem.” It’s a data visualization and product UX problem.

In the AI in Insurance series, we’ve talked a lot about underwriting automation, claims triage, fraud detection, and personalized customer engagement. Here’s the connective tissue across all of them: if you can’t measure outcomes and communicate them clearly, you can’t manage them. This post breaks down how to implement analytics dashboards inside an insurance B2B SaaS product—especially when generative AI and machine learning models are producing more outputs than teams can reasonably interpret.

Why insurers need dashboards that explain AI (not just report it)

Answer first: Insurers need analytics dashboards because AI output is only valuable when it’s interpretable, auditable, and actionable inside real workflows.

Most internal reporting tools were built for classic BI use cases: monthly performance reviews, static KPIs, and retrospective analysis. But AI in underwriting, claims, and risk pricing operates at a different pace:

- Underwriting models score submissions continuously, often with multiple sub-scores (risk, fraud, appetite fit, routing confidence).

- Claims automation pipelines produce operational metrics (touchless rate, straight-through processing, cycle time) and model metrics (precision/recall, drift, confidence distribution).

- Fraud detection needs explanations that can stand up to internal audit and, in many markets, regulatory scrutiny.

A dashboard that only shows “average risk score by month” isn’t enough. Insurance teams need to know:

- What changed, exactly?

- Where did performance shift (segment, channel, product, region)?

- Which operational lever should we pull (rules, thresholds, training, staffing, distribution strategy)?

A practical one-liner I’ve found useful when designing these experiences:

If a KPI can’t trigger a decision, it’s not a KPI—it’s trivia.
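The "where did performance shift" question above can be approached by ranking segments by how much a KPI moved between two periods. This is a minimal sketch, assuming a per-segment KPI value already exists for both periods; the function name and the sample rates are hypothetical.

```python
from typing import Dict, List, Tuple


def rank_segment_shifts(
    previous: Dict[str, float], current: Dict[str, float]
) -> List[Tuple[str, float]]:
    """Return (segment, change) pairs, largest absolute change first.

    Only segments present in both periods are compared (an assumption:
    new or retired segments need separate handling).
    """
    shared = previous.keys() & current.keys()
    shifts = [(seg, current[seg] - previous[seg]) for seg in shared]
    # Sort by size of the move, then by name so ties are deterministic.
    return sorted(shifts, key=lambda item: (-abs(item[1]), item[0]))


# Hypothetical touchless rates by region for two consecutive weeks.
last_week = {"Northeast": 0.62, "Midwest": 0.58, "South": 0.60}
this_week = {"Northeast": 0.49, "Midwest": 0.59, "South": 0.57}

ranked = rank_segment_shifts(last_week, this_week)
for segment, change in ranked:
    print(f"{segment}: {change:+.2f}")
```

The same ranking can be repeated per channel or product line, which gives the drill-down order a dashboard might use by default.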

Start with alignment: the dashboard isn’t for “users,” it’s for roles

Answer first: Successful insurance analytics features start by mapping dashboard value to specific stakeholder decisions—not generic “insight.”

Insurance SaaS products rarely have one audience. A single platform might serve:

- Heads of P&C or life distribution

- Agent/advisor enablement leaders

- Claims operations managers

- Fraud investigators

- Digital marketing and growth teams

- Data and IT leaders responsible for governance

Each group wants analytics, but for different outcomes. If you try to satisfy everyone with one “universal dashboard,” you’ll end up with a cluttered page that nobody uses.

A quick stakeholder-to-dashboard mapping

Here’s a simple mapping you can use in discovery workshops:

- Decision: What decision does this person make weekly?

- Trigger: What change should prompt that decision?

- Proof: What evidence do they need to trust the data?

- Action: What can they do in-product once they see it?

For example:

- Claims ops leader

  - Decision: adjust automation thresholds or staffing

  - Trigger: cycle time up 12% week-over-week for a claim type

  - Proof: breakdown by claim type, channel, region; confidence distribution

  - Action: change routing policy, review “low-confidence” buckets

- Underwriting lead

  - Decision: revise appetite rules or referral thresholds

  - Trigger: quote-to-bind rate drops for a product line

  - Proof: segment-level conversion + risk score distribution + explanations

  - Action: tune thresholds, request model review, adjust questions

That’s how you prevent analytics from becoming “nice charts” and turn it into a feature that actually drives renewal and expansion.
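The decision, trigger, proof, and action mapping can also be captured as data, so the product knows when to surface a view. The sketch below is one possible shape, not a prescribed schema: the `RoleMapping` class, its field names, and the `cycle_time_days` metric name are all hypothetical, and the 12% threshold is taken from the claims ops example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RoleMapping:
    role: str
    decision: str
    trigger_metric: str
    trigger_pct_change: float  # week-over-week increase that prompts the decision
    proof: Tuple[str, ...]
    actions: Tuple[str, ...]


def week_over_week_change(previous: float, current: float) -> float:
    """Relative change between two weekly values, e.g. 0.15 for +15%."""
    if previous == 0:
        raise ValueError("previous value must be non-zero")
    return (current - previous) / previous


def is_triggered(mapping: RoleMapping, previous: float, current: float) -> bool:
    """True when the metric rose by at least the mapping's threshold."""
    return week_over_week_change(previous, current) >= mapping.trigger_pct_change


claims_ops = RoleMapping(
    role="Claims ops leader",
    decision="adjust automation thresholds or staffing",
    trigger_metric="cycle_time_days",  # hypothetical metric name
    trigger_pct_change=0.12,
    proof=("claim type", "channel", "region", "confidence distribution"),
    actions=("change routing policy", "review low-confidence buckets"),
)

print(is_triggered(claims_ops, previous=10.0, current=11.5))  # 15% rise
print(is_triggered(claims_ops, previous=10.0, current=11.0))  # 10% rise
```

A trigger for a falling metric, such as the underwriting lead's quote-to-bind rate, would need the comparison reversed; that case is left out here to keep the sketch short.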

Build vs buy: why custom visualization often wins in insurance AI

Answer first: Buying a BI tool is fast, but custom visualization wins when you need workflow-native analytics, AI explainability, and role-specific UX.

Off-the-shelf data visualization tools are mature and can save time. If you mainly need standard charts (trend lines, bar charts, simple filters), they can work well.

But insurance AI products hit edge cases quickly:

- Explainability needs (reason codes, feature contributions, counterfactuals)

- Operational and model metrics together (business KPIs + model performance)

- Embedded actions (change routing, flag cases, create tasks, export audit pack)

- Multi-tenant complexity (carrier-specific metrics, broker hierarchies, product catalogs)

A custom layer is often worth it because you can design around the insurance context:

- A claims dashboard should speak “FNOL, indemnity, leakage, subrogation,” not generic “tickets.”

- An underwriting dashboard should reflect “submission, referral, bind,” not “leads, pipeline, close.”

Custom also sets you up for LLM-powered analytics experiences (more on that below), where dashboards aren’t static pages but adaptive views generated from user intent.

UX/UI rules that actually drive adoption in insurance analytics

Answer first: Analytics adoption comes from clarity: fewer charts, better defaults, and visuals chosen for how humans perceive differences.

Most companies get this wrong by shipping an “everything dashboard.” It looks impressive in a demo and fails in week two.

1) Design for perception, not decoration

People compare length and position better than angles. So, if you need stakeholders to quickly spot changes:

- Prefer bar charts, line charts, and dot plots over pie charts

- Use consistent axes and stable ordering

- Avoid color overload—color should signal meaning, not branding

2) Reduce choices, increase confidence

Dashboards fail when users must configure them before they’re useful. Insurance leaders want answers fast.

Good defaults are a product feature:

- Default time windows: last 7 days, last 30 days, quarter-to-date

- Default segment breakdowns: product line, channel, region, risk tier

- “Explain this change” panel pre-populated when a KPI shifts
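The default time windows and breakdowns listed above can be resolved in code rather than left for users to configure. This is a rough sketch under two assumptions that are not from the original: windows are inclusive of the current day, and quarters follow the calendar year. The names `default_windows` and `DEFAULT_BREAKDOWNS` are hypothetical.

```python
from datetime import date, timedelta
from typing import Dict, Tuple

# Default segment breakdowns, mirroring the list above.
DEFAULT_BREAKDOWNS = ("product_line", "channel", "region", "risk_tier")


def default_windows(today: date) -> Dict[str, Tuple[date, date]]:
    """Return inclusive (start, end) date ranges for the default windows."""
    # First month of the calendar quarter containing `today`: 1, 4, 7, or 10.
    quarter_start_month = 3 * ((today.month - 1) // 3) + 1
    return {
        "last_7_days": (today - timedelta(days=6), today),
        "last_30_days": (today - timedelta(days=29), today),
        "quarter_to_date": (date(today.year, quarter_start_month, 1), today),
    }


windows = default_windows(date(2025, 12, 15))
for name, (start, end) in windows.items():
    print(name, start.isoformat(), end.isoformat())
```

Carriers with a fiscal year that does not match the calendar year would need a different quarter calculation.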

3) Make every chart answer a question

A chart without an implied question invites misinterpretation.

Better pattern:

- Question: “Are we automating more claims without increasing leakage?”

- Charts: touchless rate trend + reopen rate + average severity for automated vs manual

- Decision hook: threshold suggestion or staffing impact estimate
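To make the pattern concrete, the three measures behind that question can be computed from claim records in one pass. The sketch assumes a simplified, hypothetical `Claim` record with only the fields needed here; real claim data would carry far more.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List


@dataclass
class Claim:
    automated: bool   # handled without manual touch
    reopened: bool    # reopened after closure
    severity: float   # paid amount, in a hypothetical currency unit


def summarize(claims: List[Claim]) -> Dict[str, object]:
    """Touchless rate overall, plus reopen rate and average severity per path."""
    if not claims:
        raise ValueError("claims must not be empty")
    summary: Dict[str, object] = {
        "touchless_rate": sum(c.automated for c in claims) / len(claims)
    }
    for label, flag in (("automated", True), ("manual", False)):
        group = [c for c in claims if c.automated == flag]
        summary[label] = {
            # None signals "no claims on this path" rather than a zero rate.
            "reopen_rate": sum(c.reopened for c in group) / len(group) if group else None,
            "avg_severity": mean(c.severity for c in group) if group else None,
        }
    return summary


claims = [
    Claim(automated=True, reopened=False, severity=1000.0),
    Claim(automated=True, reopened=True, severity=1400.0),
    Claim(automated=True, reopened=False, severity=600.0),
    Claim(automated=False, reopened=False, severity=5000.0),
]
result = summarize(claims)
print(result)
```

Showing the automated and manual groups side by side is what lets a reader see whether a rising touchless rate is coming at the cost of more reopens.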

What “smart insights” look like for insurance AI teams

Answer first: Smart insights are focused, contextual, and decision-oriented—often predictive or prescriptive.

A strong insurance analytics feature doesn’t just show activity counts. It tells a coherent story and highlights what matters.

Examples of insurance-specific smart insights

Here are examples modeled on real insurer needs:

- Engagement and distribution

  - Policyholders engaged through digital servicing this month

  - Average recommendations per policyholder (cross-sell/upsell)

  - Recommendation mix by product (motor, term life, legal protection)

- Underwriting performance

  - Referral rate by channel and risk tier

  - Bind rate for “borderline” risk scores (a classic threshold-tuning signal)

  - Quote time vs bind probability (operational speed affects conversion)

- Claims automation and quality

  - Straight-through processing rate by claim type

  - Low-confidence automation attempts (where the model is unsure)

  - Leakage proxy metrics (reopen rate, supplements, escalations)
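As an illustration of one of these insights, the bind rate for "borderline" risk scores can be computed by restricting quotes to a score band. The band limits below (0.45 to 0.60) and the sample quotes are hypothetical; in practice the band would sit around whatever referral threshold the carrier uses.

```python
from typing import Iterable, Optional, Tuple


def bind_rate_in_band(
    quotes: Iterable[Tuple[float, bool]], low: float, high: float
) -> Optional[float]:
    """Share of quotes with low <= score < high that went on to bind.

    Each quote is a (risk_score, bound) pair. Returns None when no quote
    falls in the band, so an empty band is not mistaken for a 0% rate.
    """
    in_band = [bound for score, bound in quotes if low <= score < high]
    if not in_band:
        return None
    return sum(in_band) / len(in_band)


# Hypothetical (risk_score, bound) pairs.
quotes = [
    (0.30, True),
    (0.48, True),
    (0.52, False),
    (0.55, False),
    (0.58, True),
    (0.80, False),
]

borderline = bind_rate_in_band(quotes, low=0.45, high=0.60)
print(borderline)  # four quotes fall in the band, two of them bound
```

Comparing this figure before and after a threshold change is the kind of signal that supports threshold tuning.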

Don’t skip “strong statistics”

Generative AI is everywhere in December 2025, but here’s my stance: a lot of dashboard value comes from boring, robust statistics.

Before building complex ML-driven insights, nail the basics:

- Cohort analysis (e.g., retention by acquisition month)

- Correlation checks (e.g., which product bundles correlate with retention)

- Control charts for operational stability (detect when a process shifts)

If your KPI definitions are shaky, adding AI on top just makes bad measurement faster.
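A control chart is a good example of how little code the basics need. The sketch below is a simplified version: it derives limits as the baseline mean plus or minus three sample standard deviations, whereas individuals charts often estimate spread from the moving range instead. The function names and the daily cycle-time values are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def control_limits(
    baseline: Sequence[float], sigmas: float = 3.0
) -> Tuple[float, float, float]:
    """Return (lower, center, upper) limits from a stable baseline period."""
    center = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation; needs >= 2 points
    return center - sigmas * spread, center, center + sigmas * spread


def out_of_control(
    baseline: Sequence[float], recent: Sequence[float], sigmas: float = 3.0
) -> List[Tuple[int, float]]:
    """Return (index, value) for recent points outside the control limits."""
    lower, _, upper = control_limits(baseline, sigmas)
    return [(i, v) for i, v in enumerate(recent) if v < lower or v > upper]


# Hypothetical daily average cycle times (days) for one claim type.
baseline = [5.0, 5.2, 4.9, 5.1, 5.0, 4.8, 5.0, 5.1]
recent = [5.1, 5.3, 6.0]

flagged = out_of_control(baseline, recent)
print(flagged)
```

Only the last point is flagged here; the 5.3 reading is higher than usual but still inside the limits, which is the distinction between noise and a process shift that the chart is meant to draw.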

LLM-powered dashboards: the next phase of analytics in insurance SaaS

Answer first: LLMs can turn dashboards into interactive analysis tools by translating natural-language intent into charts, narratives, and next-step recommendations.

Static dashboards assume users already know what they’re looking for. Real insurance work doesn’t happen that way. A claims VP or underwriting lead often starts with a messy prompt:

- “Why did cycle time spike in the Northeast?”

- “Show me what changed after we adjusted the referral threshold.”

- “Which broker segments are driving low-quality submissions?”

An LLM-driven analytics layer can:

- Generate the right breakdowns automatically

- Produce a short narrative explanation (what moved, where, and why)

- Suggest next questions (“Do you want to compare severity or reopen rate next?”)

- Create dynamic dashboards on request, tailored to the role and scenario

Guardrails insurers should insist on

LLM analytics is powerful, but insurance organizations need discipline:

- No silent assumptions: the dashboard should disclose filters, time windows, and definitions.

- Traceability: every number should link back to the underlying query logic.

- Permission-aware results: role-based access must apply to both charts and narratives.

- Hallucination resistance: narratives must be grounded in retrieved metrics, not “creative” explanations.

If you implement generative AI in analytics without these controls, you’ll create a trust problem that’s hard to undo.
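Several of these guardrails can be enforced at the data layer before any narrative reaches a user. The sketch below is deliberately crude and assumes a hypothetical `GroundedMetric` record that carries its own definition, filters, time window, query reference, and allowed roles. The grounding check only compares numbers in the text against retrieved values, so it would also flag legitimately derived figures such as percentages.

```python
import re
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass
class GroundedMetric:
    name: str
    value: float
    definition: str            # disclosed to the user: no silent assumptions
    filters: Dict[str, str]
    time_window: str
    query_id: str              # hypothetical reference to stored query logic
    allowed_roles: FrozenSet[str]


def visible_metrics(metrics: List[GroundedMetric], role: str) -> List[GroundedMetric]:
    """Keep only the metrics this role is permitted to see."""
    return [m for m in metrics if role in m.allowed_roles]


def ungrounded_numbers(narrative: str, metrics: List[GroundedMetric]) -> List[float]:
    """Numbers in the narrative that match no retrieved metric value."""
    known = {round(m.value, 2) for m in metrics}
    found = [float(tok) for tok in re.findall(r"\d+(?:\.\d+)?", narrative)]
    return [n for n in found if round(n, 2) not in known]


# Hypothetical metrics; definitions and query ids are illustrative only.
ops = frozenset({"claims_ops", "governance"})
metrics = [
    GroundedMetric("cycle_time_days_current", 11.5, "Mean days from FNOL to closure",
                   {"region": "Northeast"}, "last 7 days", "q-001", ops),
    GroundedMetric("cycle_time_days_previous", 10.0, "Mean days from FNOL to closure",
                   {"region": "Northeast"}, "prior 7 days", "q-002", ops),
    GroundedMetric("fraud_referral_rate", 0.07, "Referred claims / total claims",
                   {"region": "Northeast"}, "last 7 days", "q-003", frozenset({"fraud"})),
]

claims_view = visible_metrics(metrics, "claims_ops")
ok = ungrounded_numbers(
    "Cycle time rose to 11.5 days in the Northeast, up from 10.0.", claims_view
)
leak = ungrounded_numbers("Fraud referrals reached 0.07 this week.", claims_view)
print(ok, leak)
```

Because the narrative is checked against the role-filtered list, a figure the role cannot see is treated as ungrounded, which applies the permission rule to narratives as well as charts.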

A practical implementation checklist for insurance SaaS teams

Answer first: The fastest path is to ship a narrow dashboard MVP, validate decisions it drives, then expand into role-specific and AI-assisted insights.

Use this checklist to keep the build focused:

- Define 10–25 core insurance KPIs tied to decisions (not curiosity).

- Standardize metric definitions (one “automation rate,” one “bind rate,” etc.).

- Create role-based views (claims, underwriting, distribution, IT/governance).

- Prioritize drill-down paths over more top-level charts.

- Add “smart insight” callouts only when they trigger action.

- Instrument model metrics (confidence, drift signals, performance by segment).

- Design for trust: explanations, data lineage, and audit-friendly exports.

- Pilot with 3–5 customers and track adoption weekly (not quarterly).
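For the "drift signals" item in the checklist, one widely used measure is the population stability index (PSI), which compares the distribution of model scores in a baseline period with a recent one. The original does not name a drift method, so treat PSI as one option among several. The bin counts below are hypothetical, and the small floor on bin shares is an assumption made to avoid taking the logarithm of zero.

```python
import math
from typing import Sequence


def population_stability_index(
    expected_counts: Sequence[float],
    actual_counts: Sequence[float],
    floor: float = 1e-6,
) -> float:
    """PSI over matching bins: sum of (a - e) * ln(a / e) on bin shares."""
    if len(expected_counts) != len(actual_counts):
        raise ValueError("both inputs must use the same bins")
    expected_total = sum(expected_counts)
    actual_total = sum(actual_counts)
    if expected_total <= 0 or actual_total <= 0:
        raise ValueError("each input must contain at least one observation")
    psi = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Floor empty bins so the log term stays finite.
        e_share = max(e / expected_total, floor)
        a_share = max(a / actual_total, floor)
        psi += (a_share - e_share) * math.log(a_share / e_share)
    return psi


# Hypothetical counts of risk scores per bin (low to high).
baseline_bins = [100, 300, 400, 200]
current_bins = [300, 300, 300, 100]

psi = population_stability_index(baseline_bins, current_bins)
print(round(psi, 4))
```

A commonly cited rule of thumb treats values above roughly 0.25 as a shift worth investigating, though that cutoff is a convention rather than a standard. Running the same calculation per segment lines up with the "performance by segment" part of the checklist item.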

Where this fits in the AI in Insurance story

AI in insurance is moving from experiments to operating systems. That shift changes what “success” looks like. It’s no longer enough to say a model is accurate. You need visibility into how AI changes underwriting decisions, claims outcomes, and customer behavior—week by week, segment by segment.

If you’re building or buying an insurance AI platform, treat data visualization as a first-class product capability. It’s how insurers turn AI from “a black box that outputs scores” into something leaders can defend, tune, and scale.

If you’re planning your 2026 roadmap right now, here’s a strong planning question to end on: Which AI decisions in your organization would improve fastest if every stakeholder could see—and trust—the same story in the data?