See how BPCE uses an internal AI copilot to speed answers, reduce expert overload, and improve insurance operations. Get a practical implementation blueprint.

AI Copilots for Insurance Teams: BPCE’s Playbook

Most insurers treat generative AI as a customer-facing toy: a chatbot on the website, a prettier FAQ, maybe a claims status bot. BPCE Assurances went the other direction—and it’s the smarter bet.

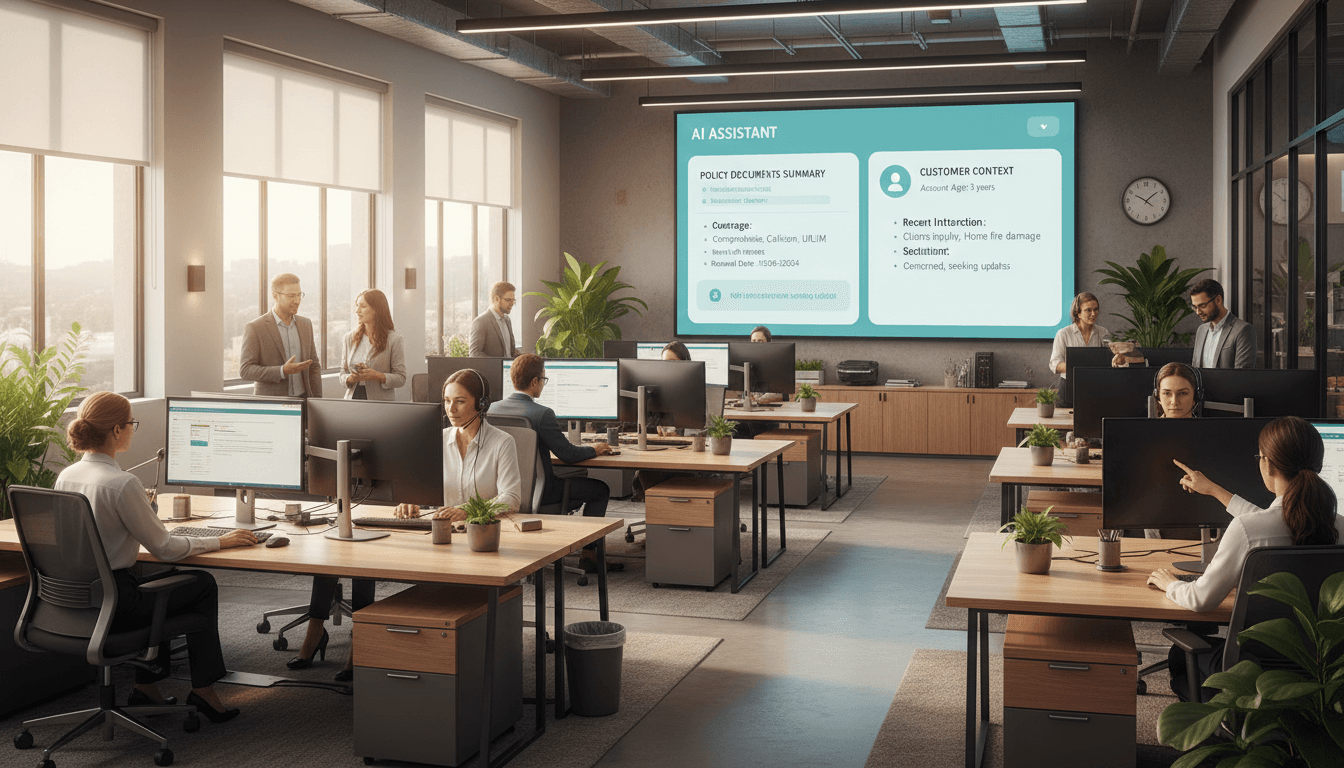

They focused on the people who answer the hard questions all day: customer relationship managers, back-office teams, and internal experts. The result is “Anna NextGen,” an internal insurance copilot built with Zelros that gives staff fast, contextual answers sourced from the insurer’s own documents and data—plus ready-to-send drafts like emails. That’s not a shiny demo. That’s operational infrastructure.

This matters more than ever in December 2025. The year-end rush (policy renewals, billing questions, coverage changes, and inevitable escalations) exposes a painful truth: your customer experience is capped by your employee experience. If advisors spend 10 minutes hunting for a clause across three knowledge bases, customers feel it, no matter how good your marketing is.

The real bottleneck in insurance: knowledge work at scale

Insurance operations don’t break because people can’t work hard. They break because information is fragmented. When answers live across PDFs, policy admin systems, claims notes, and internal procedures, even strong teams slow down.

BPCE Assurances faced two classic constraints that show up in almost every carrier I talk to:

1) Information retrieval is the hidden cost center

If an advisor has to search through terms and conditions, internal memos, and procedural guides to answer a single question, you get:

- Longer handle times

- More transfers and callbacks

- Higher training burden for new hires

- Higher error risk (especially when documents change)

The operational effect is compounding: delays create queues, queues create stress, and stress increases mistakes.

2) Experts become a human API for repetitive questions

When frontline teams can’t confidently self-serve information, they escalate to specialists. Specialists then spend their days answering “repeat” questions instead of handling complex cases.

That’s not just inefficient—it’s demoralizing. Experts want to solve edge cases, not re-explain the same rule 30 times.

BPCE’s core insight: if you remove the friction inside the organization, you don’t just speed up service—you improve quality and consistency.

What BPCE built with Zelros: “Anna NextGen” as an internal copilot

Anna NextGen is an internal AI assistant designed for insurance workflows, not generic chat. BPCE Assurances and Zelros have collaborated for seven years, evolving “Anna” from earlier NLP approaches into a generative AI assistant that runs in a secure Azure environment and draws on current OpenAI capabilities.

Here’s what makes this kind of AI copilot useful (and what many insurers miss).

It answers from your sources, not the open internet

For insurance, the only answers that matter are those grounded in:

- Your policy wording

- Your endorsements and product rules

- Your internal procedures

- Your customer context (where permitted)

Anna NextGen is designed to generate responses based on BPCE Assurances’ specific documents and data, so employees aren’t improvising.
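
To make “answering from your sources” concrete, here is a minimal sketch of the grounding pattern. It is not how Anna NextGen works internally (the source does not describe that); the `Passage` type, the word-overlap scoring, and the stopword list are all assumptions chosen for brevity. The point it illustrates: every answer carries the document it came from, and when nothing matches, the tool returns nothing rather than improvising.

```python
from dataclasses import dataclass

# Illustrative stopword list (assumption); a real system would use a proper retriever.
STOPWORDS = {"a", "an", "the", "is", "are", "from", "to", "of", "in", "what", "up"}


@dataclass(frozen=True)
class Passage:
    source_id: str  # hypothetical document identifier
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped, minus stopwords."""
    words = {w.strip(".,;:?!()\"'").lower() for w in text.split()}
    return words - STOPWORDS - {""}


def retrieve(question: str, passages: list[Passage], min_overlap: int = 2) -> list[Passage]:
    """Return passages sharing at least `min_overlap` words with the question, best first."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in passages]
    hits = [(score, p) for score, p in scored if score >= min_overlap]
    hits.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in hits]


def grounded_answer(question: str, passages: list[Passage]) -> dict:
    """Answer only from retrieved passages; return no answer when nothing matches."""
    hits = retrieve(question, passages)
    if not hits:
        # No grounding available: escalate to a human instead of guessing.
        return {"answer": None, "sources": []}
    return {"answer": hits[0].text, "sources": [p.source_id for p in hits]}
```

In a production copilot the overlap scoring would be replaced by a real search or embedding index, but the contract stays the same: no source, no answer.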

It combines structured and unstructured data

This is the big practical leap.

- Structured data: transactional/customer/policy information

- Unstructured data: PDFs, knowledge bases, terms and conditions, procedures

Most internal tools are good at one or the other. Advisors need both in a single flow to answer complex real-world questions like: “Given this customer’s policy history and this contract clause, what’s the correct next step?”
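
One way to picture “both in a single flow” is a context builder that merges a policy record with the clauses that apply to it. The sketch below is an illustration under assumptions: the `PolicyRecord` and `Clause` fields, and the idea of matching clauses by product name, are hypothetical and not taken from BPCE's or Zelros's systems.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class PolicyRecord:
    """Structured data, e.g. from a policy admin system (fields are hypothetical)."""
    policy_id: str
    product: str
    start_date: date
    claims_last_12_months: int


@dataclass(frozen=True)
class Clause:
    """Unstructured data: a passage extracted from terms and conditions."""
    source_id: str
    product: str
    text: str


def build_context(policy: PolicyRecord, clauses: list[Clause], today: date) -> str:
    """Merge policy facts and matching clauses into one block of context."""
    relevant = [c for c in clauses if c.product == policy.product]
    tenure_days = (today - policy.start_date).days
    lines = [
        f"Policy {policy.policy_id} ({policy.product})",
        f"Tenure: {tenure_days} days",
        f"Claims in last 12 months: {policy.claims_last_12_months}",
        "Applicable clauses:",
    ]
    # Keep the source id next to each clause so the answer stays traceable.
    lines += [f"[{c.source_id}] {c.text}" for c in relevant]
    return "\n".join(lines)
```

The resulting text is what a language model (or a human) would reason over: the customer's situation and the contract wording side by side.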

It produces ready-to-use outputs (like emails)

Speed isn’t just about finding information—it’s about packaging it into a response.

Anna NextGen can generate draft messages that are close to send-ready, which reduces cognitive load and makes quality easier to standardize.

My stance: drafting is where genAI creates the fastest ROI in insurance operations—because it removes repetitive writing, not judgment.

It’s designed for adoption, not novelty

BPCE leadership highlighted a “smooth transition” from the previous Anna to the new version. That sounds like a soft benefit, but it’s actually a hard one.

If your tool breaks routines, adoption drops. The best internal AI feels like a power-up inside existing workflows.

Why “employee experience” is a serious AI strategy (not HR fluff)

Employee experience is an operational KPI in disguise. Here’s the causal chain that shows up in contact centers, back offices, underwriting support, and claims operations:

- Better internal answers → fewer holds and escalations

- Fewer escalations → experts focus on high-severity work

- Higher confidence → better first-contact resolution

- Better first-contact resolution → higher customer satisfaction and retention

And there’s a second-order benefit that insurers underestimate: training acceleration.

AI copilots shorten time-to-competency

Insurance knowledge is heavy, and new hires can take months to become fully independent.

An internal copilot changes that in two ways:

- Just-in-time learning: employees learn while working, not only in training sessions

- Reduced fear of being wrong: they can validate their understanding quickly

The result isn’t “replacing” people. It’s getting people productive faster and keeping them from burning out.

AI copilots improve compliance by standardizing how answers are formed

When advisors respond from memory, they vary. Variation creates risk.

A grounded copilot encourages:

- Consistent phrasing

- Consistent reference to current documents

- More predictable handling of regulated scenarios

That doesn’t eliminate compliance work—but it makes compliance easier to enforce.

A practical blueprint: how to implement an insurance copilot without chaos

If you’re leading AI in insurance—operations, transformation, IT, or customer service—BPCE’s example suggests a pragmatic path. Here’s what works.

Step 1: Start where volume and complexity collide

The best first use cases have three traits:

- High inquiry volume (lots of repetitive demand)

- High document dependence (answers live in procedures/wordings)

- Moderate-to-high complexity (enough nuance to justify a copilot)

Personal lines service, life & health policy servicing, and claims intake support are common starting points.

Step 2: Treat your knowledge base like a product

Internal copilots are only as good as the content they retrieve.

A workable knowledge foundation includes:

- Document ownership (who updates what)

- Version control (what is “current”)

- Taxonomy (how content is categorized)

- Feedback loops (flagging wrong or outdated answers)

BPCE’s approach includes document processing and classification, turning content into clearer structures (headings, key points). That’s exactly the kind of unglamorous work that makes AI useful.
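
The four foundations above map onto a very small data model. This is a sketch under assumptions, not BPCE's schema: `DocVersion` and its fields are hypothetical names, and “current” is defined here as the highest version number whose effective date has already passed.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class DocVersion:
    doc_id: str
    version: int
    owner: str            # document ownership: who updates it
    category: str         # taxonomy: how it is classified
    effective_from: date  # version control: when it becomes current
    flags: list[str] = field(default_factory=list)  # feedback from users


def current_versions(docs: list[DocVersion], today: date) -> dict[str, DocVersion]:
    """Pick, per document, the highest version already in effect on `today`."""
    latest: dict[str, DocVersion] = {}
    for doc in docs:
        if doc.effective_from > today:
            continue  # not in force yet, so the copilot must not cite it
        best = latest.get(doc.doc_id)
        if best is None or doc.version > best.version:
            latest[doc.doc_id] = doc
    return latest


def flag_document(doc: DocVersion, note: str) -> None:
    """Record a user report that an answer based on this document looked wrong."""
    doc.flags.append(note)


def needs_review(docs: list[DocVersion]) -> list[DocVersion]:
    """Documents with open feedback, to route back to their owners."""
    return [d for d in docs if d.flags]
```

Only what `current_versions` returns should be eligible for retrieval; everything flagged goes back to its owner.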

Step 3: Design for controllability (especially in regulated environments)

For insurance, “AI that writes” is less important than AI you can govern.

Operational controls typically include:

- Access management by role (what data each persona can use)

- Approved sources (what documents are eligible)

- Auditability (what was referenced and when)

- Guardrails for high-risk topics (complaints, cancellations, health data, etc.)
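
These controls are easier to discuss with a concrete shape in front of you. The sketch below is illustrative only: the role names, source categories, and topic keywords are invented for the example, and a simple substring match stands in for whatever classification a real deployment would use.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role-to-source mapping (access management + approved sources).
ROLE_SOURCES = {
    "advisor": {"product_terms", "procedures"},
    "claims_handler": {"product_terms", "procedures", "claims_notes"},
}

# Hypothetical keywords that trigger a human-review guardrail.
HIGH_RISK_TOPICS = {"complaint", "cancellation", "health"}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    role: str
    question: str
    sources_used: tuple[str, ...]
    needs_human_review: bool


def allowed_sources(role: str, requested: list[str]) -> list[str]:
    """Keep only the sources this role may use; unknown roles get nothing."""
    return sorted(set(requested) & ROLE_SOURCES.get(role, set()))


def handle(role: str, question: str, requested: list[str],
           audit_log: list[AuditEntry]) -> AuditEntry:
    """Filter sources by role, apply the guardrail, and record an audit entry."""
    used = tuple(allowed_sources(role, requested))
    risky = any(topic in question.lower() for topic in HIGH_RISK_TOPICS)
    entry = AuditEntry(datetime.now(timezone.utc), role, question, used, risky)
    audit_log.append(entry)  # auditability: what was referenced and when
    return entry
```

The design choice worth noting is that the audit entry is written on every request, not only on risky ones, so a reviewer can reconstruct what the tool was allowed to see.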

Step 4: Measure outcomes that operations leaders actually care about

If you want stakeholder buy-in, track metrics that show up on executive dashboards.

A strong measurement set includes:

- Average handle time (AHT) and after-call work (ACW)

- First-contact resolution (FCR)

- Escalation rate to experts

- Training time-to-independence for new hires

- Quality assurance scores and compliance exceptions

- Employee satisfaction for the teams using the tool

Notice what’s missing: vanity metrics like “number of prompts.” If it doesn’t change throughput, quality, or cost, it’s theater.
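
Several of these metrics fall out of a plain interaction log. Here is a minimal sketch, assuming a hypothetical `Interaction` record with four fields. Handle time and after-call work are reported separately here; some contact centers fold ACW into AHT, so align the definition with your own reporting before comparing numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interaction:
    handle_seconds: int           # time spent with the customer
    after_call_seconds: int       # wrap-up work after the contact
    resolved_first_contact: bool
    escalated: bool               # handed to an internal expert


def summarize(interactions: list[Interaction]) -> dict[str, float]:
    """Compute average handle time, average ACW, FCR rate, and escalation rate."""
    n = len(interactions)
    if n == 0:
        raise ValueError("no interactions to summarize")
    return {
        "avg_handle_seconds": sum(i.handle_seconds for i in interactions) / n,
        "avg_acw_seconds": sum(i.after_call_seconds for i in interactions) / n,
        "fcr_rate": sum(i.resolved_first_contact for i in interactions) / n,
        "escalation_rate": sum(i.escalated for i in interactions) / n,
    }
```

Run it on a sample from before the rollout and a sample from after, for the same team and inquiry mix, and you have a baseline comparison that fits on one slide.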

“People also ask”: common questions about AI copilots in insurance

Does an insurance copilot replace agents, adjusters, or advisors?

No. The high-value use is augmentation: faster retrieval, better drafting, and more consistent process execution. Judgment, empathy, and accountability stay human.

Where does the ROI usually come from first?

Productivity and deflection: fewer escalations to experts, shorter handle times, and less rework from incorrect answers. Drafting customer communications is often one of the quickest wins.

What’s the biggest failure mode?

Bad content and weak governance.

If your documents are outdated, contradictory, or poorly structured, the copilot will expose that mess faster than any audit. Fixing the knowledge layer is not optional.

Is this relevant beyond customer service?

Yes. The same pattern applies to:

- Claims operations (coverage questions, process guidance, customer updates)

- Underwriting support (appetite rules, document interpretation, referral prep)

- Broker and agent support desks (product Q&A, endorsements, exceptions)

Internal AI is the connective tissue across these functions.

What BPCE’s case study signals for AI in Insurance in 2026

Within the broader AI in Insurance series, BPCE Assurances’ implementation is a reminder that the biggest wins often happen behind the scenes. Customer-facing automation is visible, but internal copilots are what make service reliably good—especially during peak periods like year-end.

If you’re considering generative AI for insurance operations, I’d take a strong stance on this: don’t start with a public chatbot. Start with your employees. When teams can answer confidently, customers feel the difference immediately.

A good next step is to map your top 25 inquiry types and identify where time is lost: document searches, policy admin navigation, drafting, or escalations. That map becomes your copilot backlog.
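
If you collect those observations in a spreadsheet, turning them into a ranked backlog takes only a few lines. This is a sketch with assumed inputs: each observation is a hypothetical `(inquiry_type, cause, minutes_lost)` tuple, and the ranking is simply total minutes lost per inquiry type.

```python
from collections import defaultdict


def build_backlog(observations: list[tuple[str, str, float]],
                  top_n: int = 25) -> list[tuple[str, float, str]]:
    """Rank inquiry types by total minutes lost and name each one's main cause."""
    totals: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for inquiry, cause, minutes in observations:
        totals[inquiry][cause] += minutes
    ranked = sorted(totals.items(),
                    key=lambda item: sum(item[1].values()),
                    reverse=True)
    return [
        (inquiry, sum(causes.values()), max(causes, key=causes.get))
        for inquiry, causes in ranked[:top_n]
    ]
```

Each row of the output reads as a backlog item: the inquiry type, how much time it costs, and the friction to attack first.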

The forward-looking question I’d ask your leadership team heading into 2026 is simple: Are we building AI that impresses customers for a minute—or AI that helps employees deliver excellence all year?