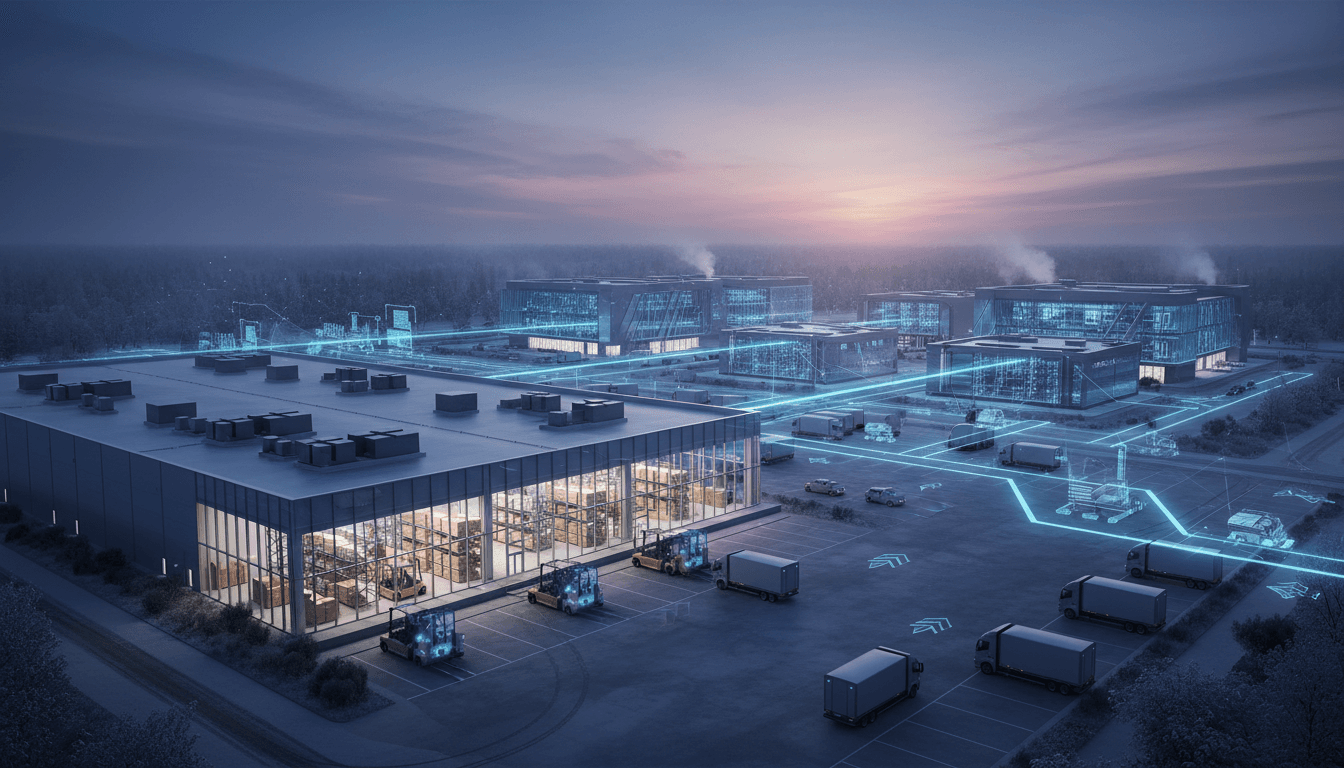

Genesis Mission expands U.S. AI infrastructure and R&D. Here’s what it means for supply chain optimization, route planning, and warehouse automation in 2026.

Genesis Mission: What It Means for Logistics AI

A lot of AI strategy assumes the only thing standing between you and a smarter supply chain is better software. Most companies get this wrong: the real bottleneck is infrastructure, meaning compute capacity, energy availability, secure data pipelines, and the ability to test models against reality at scale.

That’s why the newly announced Genesis Mission partnership between NVIDIA and the U.S. Department of Energy (DOE) matters far beyond labs and national research facilities. It’s a government-backed push to expand AI infrastructure and accelerate R&D across energy, scientific research, and national security—areas that quietly determine how fast transportation and logistics AI can mature.

This post is part of our “AI in Government & Public Sector” series, where we look at public-private AI initiatives and translate them into practical implications. Here’s the transport-and-logistics angle: when government and industry invest in shared AI infrastructure, supply chain optimization stops being a pilot project and starts becoming an operating standard.

Genesis Mission in plain terms (and why infrastructure wins)

Genesis Mission is a coordinated effort to scale U.S. AI leadership by investing in compute, energy-aware systems, and applied R&D—then connecting government, academia, and industry through a shared discovery platform. That’s the big idea.

NVIDIA is joining as a private industry partner, and a memorandum of understanding outlines priorities that include AI for manufacturing and supply chain, open-source AI, robotics, AI-enabled digital twins, and high-performance computing. The DOE’s expectation—stated directly—is that this initiative could double productivity and impact in American science and engineering.

Here’s why that matters to logistics leaders: transportation AI is not “just algorithms.” It’s also:

- Training and running models on massive, high-velocity data (telemetry, TMS events, WMS scans, IoT signals)

- Simulating messy physical reality (traffic, weather, port congestion, labor variability)

- Making decisions fast enough to matter (at the edge, inside warehouses, on vehicles)

- Doing all of it with energy and security constraints that are getting tighter, not looser

If AI infrastructure expands—and gets cheaper, more available, and more standardized—transportation and logistics teams can move from “nice demo” to “enterprise-grade deployment” faster.

What government-backed AI infrastructure changes for supply chains

The practical effect of large-scale AI infrastructure investments is simple: you can run more experiments, on more data, with shorter feedback loops. In logistics, that translates into measurable operational improvements because the decision cadence is constant: re-route, re-slot, re-price, re-staff, re-pack, re-deliver.

Faster forecasting cycles (and fewer surprises)

Forecasting is often treated as a monthly process. The reality is that disruptions happen hourly.

With more available compute and better tooling emerging from public sector R&D, we’ll see more organizations adopt rolling, near-real-time demand and capacity forecasting that ingests:

- Weather and storm-path predictions

- Port and rail congestion signals

- Supplier lead-time variability

- Promotional demand spikes

- Returns and reverse logistics patterns

The Genesis Mission explicitly calls out open AI science models for things like weather forecasting and computational simulation. For logistics, weather isn’t a news headline—it’s an input variable that impacts:

- Linehaul route risk

- Last-mile failure rates

- Cold-chain compliance

- Dwell times and detention costs

Better forecasting doesn’t just reduce cost. It reduces “operational thrash,” which is the hidden tax of modern logistics.

Route optimization that’s actually dispatch-ready

Most route optimization tools look great until you apply real constraints: driver hours, service windows, dock schedules, charging availability, customer preferences, and unpredictable exceptions.

Expanded AI infrastructure supports more realistic optimization because you can:

- Train on bigger datasets (more lanes, more seasonality, more exceptions)

- Run scenario plans quickly (fuel price jump, winter storm, labor shortage)

- Combine prediction + optimization (ETA prediction feeds dispatch decisions)

The result isn’t a prettier dashboard. It’s fewer late deliveries, fewer empty miles, and fewer manual overrides.
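
To make the "prediction + optimization" idea concrete, here is a minimal sketch in which a predicted travel time feeds a greedy dispatch decision. Everything in it is an assumption for illustration: the `Stop` and `Driver` types, the lookup-table "ETA model" with a fixed 15% delay factor, and the greedy rule itself. A production dispatcher would use a trained ETA model and a proper solver.

```python
from dataclasses import dataclass

@dataclass
class Stop:
    stop_id: str
    window_end_min: float   # latest acceptable arrival, minutes from shift start

@dataclass
class Driver:
    driver_id: str
    free_at_min: float      # when the driver can start the next leg
    drive_left_min: float   # remaining legal driving minutes

def predict_travel_min(driver, stop, base_minutes, delay_factor=1.15):
    """Stand-in for an ETA model: table lookup inflated by a fixed factor."""
    return base_minutes[(driver.driver_id, stop.stop_id)] * delay_factor

def assign_stops(drivers, stops, base_minutes):
    """Greedy dispatch: tightest window first, earliest feasible arrival wins."""
    assignments = {}
    for stop in sorted(stops, key=lambda s: s.window_end_min):
        best = None  # (arrival, travel, driver)
        for driver in drivers:
            travel = predict_travel_min(driver, stop, base_minutes)
            arrival = driver.free_at_min + travel
            feasible = (arrival <= stop.window_end_min
                        and travel <= driver.drive_left_min)
            if feasible and (best is None or arrival < best[0]):
                best = (arrival, travel, driver)
        if best is None:
            assignments[stop.stop_id] = None  # no feasible driver: flag for a human
            continue
        arrival, travel, driver = best
        driver.free_at_min = arrival          # driver is busy until arrival
        driver.drive_left_min -= travel       # consume driving hours
        assignments[stop.stop_id] = driver.driver_id
    return assignments

# Hypothetical base travel times in minutes, keyed by (driver, stop).
base_minutes = {("D1", "A"): 40, ("D2", "A"): 20, ("D1", "B"): 30, ("D2", "B"): 60}
drivers = [Driver("D1", 0, 100), Driver("D2", 0, 100)]
stops = [Stop("A", 60), Stop("B", 50)]
print(assign_stops(drivers, stops, base_minutes))
```

In this toy case stop B goes to D1 and stop A to D2, because the inflated ETAs rule out the other pairings. The point is the wiring: the service window and driver-hours checks run against predicted times, not static ones.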

Warehouse automation that scales beyond “one perfect site”

Warehouse AI fails most often at scale, not in the first proof of concept. One facility gets tuned perfectly; then rollout stalls because every site has different slotting logic, different labor patterns, and different equipment.

Genesis priorities like robotics, edge AI, autonomous labs, and digital twins map directly to what warehouse leaders need:

- High-fidelity simulation for layout changes before you spend capex

- Vision models that adapt across lighting, SKU packaging, and camera setups

- Edge inference for safety and latency (you can’t wait on cloud round-trips for some decisions)

A practical stance: if you’re planning automation in 2026, budget for digital twin capability early. Not as a flashy “metaverse” initiative—more like a flight simulator for ops.

Digital twins + “AI co-scientists”: the next ops stack

Digital twins are becoming the test environment logistics has always needed but rarely had. Instead of experimenting on live operations, you create a simulated mirror of warehouses, yards, fleets, or even multi-node networks.

The DOE-NVIDIA collaboration highlights AI-enabled digital twins and autonomous laboratories. In a transportation and logistics context, the same concept shows up as:

- Network digital twins for lane strategy and carrier mix

- Warehouse digital twins for throughput and congestion modeling

- Yard digital twins for gate scheduling and dwell reduction

What this enables (concretely)

Digital twins and large-scale compute make three hard things easier:

- Exception training: You can train models on rare events (ice storms, port strikes) by simulating them.

- Policy testing: You can validate “if-then” operating policies (re-route thresholds, safety stock triggers) without risking service.

- Continuous improvement: You can run a weekly cycle of hypothesis → simulation → limited rollout → measurement.
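
Policy testing can start much smaller than a full digital twin. The sketch below compares re-route thresholds against the same simulated disruptions. All numbers are assumptions: the 20-minute detour, the 30-minute slack, and exponentially distributed delays with a 25-minute mean. It also assumes the policy sees the true delay in advance, which a real system would not.

```python
import random

DETOUR_MIN = 20.0   # assumed fixed cost of taking the alternate route
SLACK_MIN = 30.0    # assumed schedule slack before a trip counts as late

def simulate_policy(reroute_threshold_min, n_trips=1000, seed=7):
    """Return (late_rate, avg_added_minutes) for one re-route threshold."""
    rng = random.Random(seed)  # same seed -> identical disruptions per policy
    late = 0
    total_added = 0.0
    for _ in range(n_trips):
        delay = rng.expovariate(1 / 25.0)  # simulated delay, mean 25 minutes
        # Policy under test: re-route only when the delay exceeds the threshold.
        added = DETOUR_MIN if delay > reroute_threshold_min else delay
        total_added += added
        if added > SLACK_MIN:
            late += 1
    return late / n_trips, total_added / n_trips

for threshold in (float("inf"), 30.0, 10.0):
    late_rate, avg_added = simulate_policy(threshold)
    print(f"threshold={threshold}: late={late_rate:.1%}, avg added={avg_added:.1f} min")
```

Reusing one seed across policies means every threshold faces the same storms and strikes, so differences in the output come from the policy and not from luck.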

The announcement also references “AI co-scientists” that speed algorithm development and code generation for demanding applications. For logistics teams, the operational version is an AI copilot for analysts and engineers that helps:

- Generate SQL and data transformations

- Draft optimization constraints

- Propose features for ETA/delay models

- Create test harnesses and monitoring checks

My take: AI copilots won’t replace experienced ops people. But they will raise the floor—and that’s how you scale analytics across a network without hiring 50 specialists.

Energy, security, and resilience: the unglamorous constraints that decide winners

Transportation AI lives under three constraints: energy, security, and resilience. Genesis is explicitly framed around energy and national security, and that’s not abstract.

Energy-aware AI becomes a logistics requirement

Compute isn’t free, and neither is power. AI infrastructure that’s designed with energy efficiency in mind pushes the market toward:

- More efficient model training and inference

- Better scheduling of compute-heavy jobs

- Increased interest in “right-sized” models that meet latency and cost targets

For fleets, energy optimization isn’t only about fuel. It’s also about EV charging schedules, depot power limits, and route planning that respects charging constraints. Better AI infrastructure and energy research increase the odds that the tooling ecosystem supports those realities.
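
As an illustration of what "respecting depot power limits" means in practice, here is a small sketch that schedules charging in hourly slots, serving the earliest departure first. The vehicle tuples, the 50 kW depot limit, and the 40 kW charger rating are made-up values, and earliest-deadline-first is just one simple heuristic, not a recommendation from the Genesis announcement.

```python
def schedule_charging(vehicles, depot_limit_kw, charger_kw, slot_hours=1.0):
    """Allocate charging power per slot, earliest departure first.

    vehicles: list of (vehicle_id, energy_needed_kwh, departure_slot).
    Returns (plan, unmet): plan maps vehicle_id to kW per slot, and unmet
    maps vehicle_id to the kWh that could not be delivered before departure.
    """
    horizon = max(departure for _, _, departure in vehicles)
    spare_kw = [depot_limit_kw] * horizon  # depot headroom left in each slot
    plan, unmet = {}, {}
    for vehicle_id, need_kwh, departure in sorted(vehicles, key=lambda v: v[2]):
        plan[vehicle_id] = [0.0] * horizon
        for slot in range(departure):
            if need_kwh <= 0:
                break
            # Limited by the charger, the depot headroom, and what is still needed.
            power = min(charger_kw, spare_kw[slot], need_kwh / slot_hours)
            plan[vehicle_id][slot] = power
            spare_kw[slot] -= power
            need_kwh -= power * slot_hours
        if need_kwh > 1e-9:
            unmet[vehicle_id] = need_kwh
    return plan, unmet

# Hypothetical depot: two vans, 50 kW site limit, 40 kW chargers.
plan, unmet = schedule_charging(
    [("van1", 60, 2), ("van2", 40, 4)], depot_limit_kw=50, charger_kw=40
)
print(plan, unmet)
```

Here van1 gets 40 kW then 20 kW, van2 fills the remaining 10 kW and 30 kW, and neither slot exceeds the 50 kW site limit. A route planner would consume the `unmet` result to decide which vehicles cannot take longer routes.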

Public sector security practices will bleed into private logistics

As government and national labs standardize how AI systems are secured, audited, and monitored, those patterns tend to become procurement expectations.

Logistics providers working with regulated industries (pharma, defense, critical infrastructure) should expect more attention on:

- Model governance (who changed what, when)

- Data lineage and access controls

- Monitoring for drift and performance degradation

- Incident response for AI-driven systems

If you’re building AI into dispatch, picking, or vehicle safety workflows, this is good news. It forces discipline—and disciplined AI systems survive scale.
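
Drift monitoring is the easiest of these to prototype. One common approach is the population stability index (PSI), which compares how a feature is distributed now against how it looked at training time. The sketch below is a plain-Python version; the feature values, the bin edges, and the 0.25 alert threshold (a widely used rule of thumb) are assumptions for illustration.

```python
import math

def psi(baseline, recent, edges, eps=1e-6):
    """Population stability index over fixed, ascending bin edges."""
    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(v >= e for e in edges)] += 1  # index of the bin v falls in
        # eps keeps empty bins from producing log(0)
        return [max(c / len(values), eps) for c in counts]

    base, new = shares(baseline), shares(recent)
    return sum((n - b) * math.log(n / b) for b, n in zip(base, new))

# Hypothetical feature: predicted transit minutes at training time vs. this week.
training = [10, 20, 30, 40]
this_week = [30, 40, 50, 60]
score = psi(training, this_week, edges=[25, 45])
needs_review = score > 0.25  # assumed alert threshold
print(score, needs_review)
```

Identical samples score exactly zero, and the score grows as the distributions diverge. Note that empty bins inflate the score sharply with a small `eps`, so bin edges deserve some care in real use.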

How to turn “big national AI” into a 90-day logistics plan

You don’t need a national lab to benefit from this shift. You need a plan that assumes compute and AI tooling will get more accessible—and competition will get tighter. Here’s what works in a realistic 90-day window.

1) Pick one operational metric with clear dollars attached

Examples that tend to perform well:

- Empty miles percentage

- Cost per stop (last mile)

- Warehouse pick rate and mis-picks

- On-time-in-full (OTIF)

- Dwell time at yards or customer docks

Tie the metric to a business owner who can approve process changes, not just dashboards.
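
Whichever metric you pick, write its definition down as code early, because definitions vary between teams. The sketch below shows one possible definition each for empty miles percentage and OTIF; the field names and the rule that "in full" means delivered quantity at least equals ordered quantity are assumptions, not an industry standard.

```python
def empty_miles_pct(legs):
    """Share of total miles driven without a load, as a percentage."""
    total = sum(leg["miles"] for leg in legs)
    empty = sum(leg["miles"] for leg in legs if not leg["loaded"])
    return 100.0 * empty / total if total else 0.0

def otif_pct(orders):
    """Share of orders delivered on or before the promised day and in full."""
    hits = sum(
        1
        for o in orders
        if o["delivered_day"] <= o["promised_day"]
        and o["qty_delivered"] >= o["qty_ordered"]
    )
    return 100.0 * hits / len(orders) if orders else 0.0

# Hypothetical records for illustration.
legs = [{"miles": 300, "loaded": True}, {"miles": 100, "loaded": False}]
orders = [
    {"promised_day": 5, "delivered_day": 5, "qty_ordered": 10, "qty_delivered": 10},
    {"promised_day": 5, "delivered_day": 4, "qty_ordered": 8, "qty_delivered": 8},
    {"promised_day": 5, "delivered_day": 6, "qty_ordered": 4, "qty_delivered": 4},
    {"promised_day": 5, "delivered_day": 5, "qty_ordered": 6, "qty_delivered": 6},
]
print(empty_miles_pct(legs), otif_pct(orders))
```

With these records the functions return 25.0 and 75.0. Agreeing on the function is usually harder than writing it, which is why the business owner should sign off on it.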

2) Build a “decision pipeline,” not a model

A useful logistics AI system includes:

- Data ingestion (TMS/WMS/telematics)

- Feature store or curated datasets

- Model training and evaluation

- Deployment pathway (API, edge device, or embedded in workflows)

- Monitoring and rollback

The fastest failures I’ve seen happen when teams build a model and then ask, “Now where does it go?” Start with the decision.
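
The last two pipeline stages, monitoring and rollback, are the ones most often left out, so here is a minimal sketch of them. The `ModelRegistry` class, the version names, and the 15-minute error limit are hypothetical; a real deployment would sit on a model registry and alerting stack rather than an in-memory list.

```python
from dataclasses import dataclass, field

@dataclass
class ModelRegistry:
    history: list = field(default_factory=list)    # deployed versions, newest last
    audit_log: list = field(default_factory=list)  # (action, version) tuples

    @property
    def live(self):
        return self.history[-1] if self.history else None

    def deploy(self, version):
        self.history.append(version)
        self.audit_log.append(("deploy", version))

    def rollback(self):
        if len(self.history) > 1:  # never roll back past the first version
            retired = self.history.pop()
            self.audit_log.append(("rollback", retired))
        return self.live

def monitor(registry, eta_errors_min, max_mae_min):
    """Roll back the live model if mean absolute ETA error exceeds the limit."""
    mae = sum(abs(e) for e in eta_errors_min) / len(eta_errors_min)
    if mae > max_mae_min:
        registry.rollback()
    return registry.live, mae

registry = ModelRegistry()
registry.deploy("eta-v1")
registry.deploy("eta-v2")
# Hypothetical ETA errors (minutes) observed after the v2 rollout.
live, mae = monitor(registry, eta_errors_min=[12, -18, 30], max_mae_min=15)
print(live, mae, registry.audit_log)
```

In this run the mean absolute error is 20 minutes, so the registry falls back to `eta-v1` and records the rollback in its audit log. That log is the "who changed what, when" trail that governance reviews ask for.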

3) Create a lightweight digital twin for one site or one lane family

You don’t need a perfect simulation. You need a test bed that answers a few questions reliably:

- If we change cut-off times, what happens to throughput?

- If we add one more wave, do we reduce late orders or just move congestion?

- If we re-route around a congestion point, what happens to driver hours and cost?

Treat it as an internal product. Iterate weekly.
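
A first test bed can be very small. The sketch below answers the cut-off question with a deterministic hourly model of one pick operation. The arrival profile, the 90 picks per hour, and the rule that orders arriving after the cut-off roll to the next day are all assumptions.

```python
def simulate_shift(arrivals_per_hour, picks_per_hour, cutoff_hour, truck_departs_hour):
    """Return (shipped_same_day, missed_truck) for one day of hourly arrivals.

    Orders arriving before cutoff_hour are promised same-day shipping.
    Later arrivals roll to tomorrow and are not counted here.
    """
    backlog = 0
    shipped = 0
    for hour, arrivals in enumerate(arrivals_per_hour):
        if hour < cutoff_hour:
            backlog += arrivals
        if hour < truck_departs_hour:
            picked = min(backlog, picks_per_hour)  # capacity-limited picking
            backlog -= picked
            shipped += picked
    return shipped, backlog  # leftover backlog was promised but missed the truck

# Hypothetical order arrivals for a six-hour window.
arrivals = [50, 80, 120, 120, 80, 50]
late_cutoff = simulate_shift(arrivals, picks_per_hour=90, cutoff_hour=6, truck_departs_hour=6)
early_cutoff = simulate_shift(arrivals, picks_per_hour=90, cutoff_hour=5, truck_departs_hour=6)
print(late_cutoff, early_cutoff)
```

With these inputs the later cut-off ships 490 orders but misses the truck on 10. Moving the cut-off one hour earlier ships 450 with no misses. That trade-off between volume and broken promises is exactly what the test bed should surface before anyone changes the live schedule.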

4) Write procurement requirements now (before you’re stuck)

If you’re buying AI systems in 2026, include requirements that align with emerging public sector norms:

- Audit logs for model changes

- Explainability options for regulated workflows

- Clear data retention and access rules

- Monitoring SLAs (drift, latency, uptime)

This prevents “black box regret” later.

Snippet-worthy stance: If your AI vendor can’t explain how they monitor drift and roll back models, they’re selling a demo, not an operational system.

Common questions logistics teams are asking about Genesis Mission

Will this immediately lower costs for shippers and 3PLs?

Not overnight, but it will compress timelines. As AI infrastructure expands and R&D spills into commercial tooling, you’ll see faster deployments and fewer bespoke builds.

Does this mainly benefit companies with huge data teams?

No—mid-market operators may benefit more. Standardized platforms, open models, and better tooling reduce the “talent tax” that used to keep advanced analytics locked behind big budgets.

What’s the biggest risk?

Assuming access to compute equals competitive advantage. Everyone will have more access. Differentiation will come from operational integration: clean processes, good data discipline, and tight feedback loops.

Where this fits in the “AI in Government & Public Sector” story

Public sector AI initiatives don’t just produce policy memos. When they’re paired with infrastructure and real R&D priorities—like Genesis Mission—they reshape what vendors build, what enterprises can afford, and what becomes standard practice.

For transportation and logistics, the message is straightforward: AI infrastructure is becoming a national priority, and supply chain optimization is one of the clearest places to apply it. If you’re waiting for “perfect conditions” to modernize forecasting, routing, and warehouse decision-making, you’ll be late.

If you want a practical next step, start by mapping one high-value decision (dispatch, slotting, replenishment, yard scheduling) to its data inputs and failure modes. Then decide: are you building for pilots, or are you building for scale?