
AI and China’s Africa Security Push: Risk Signals

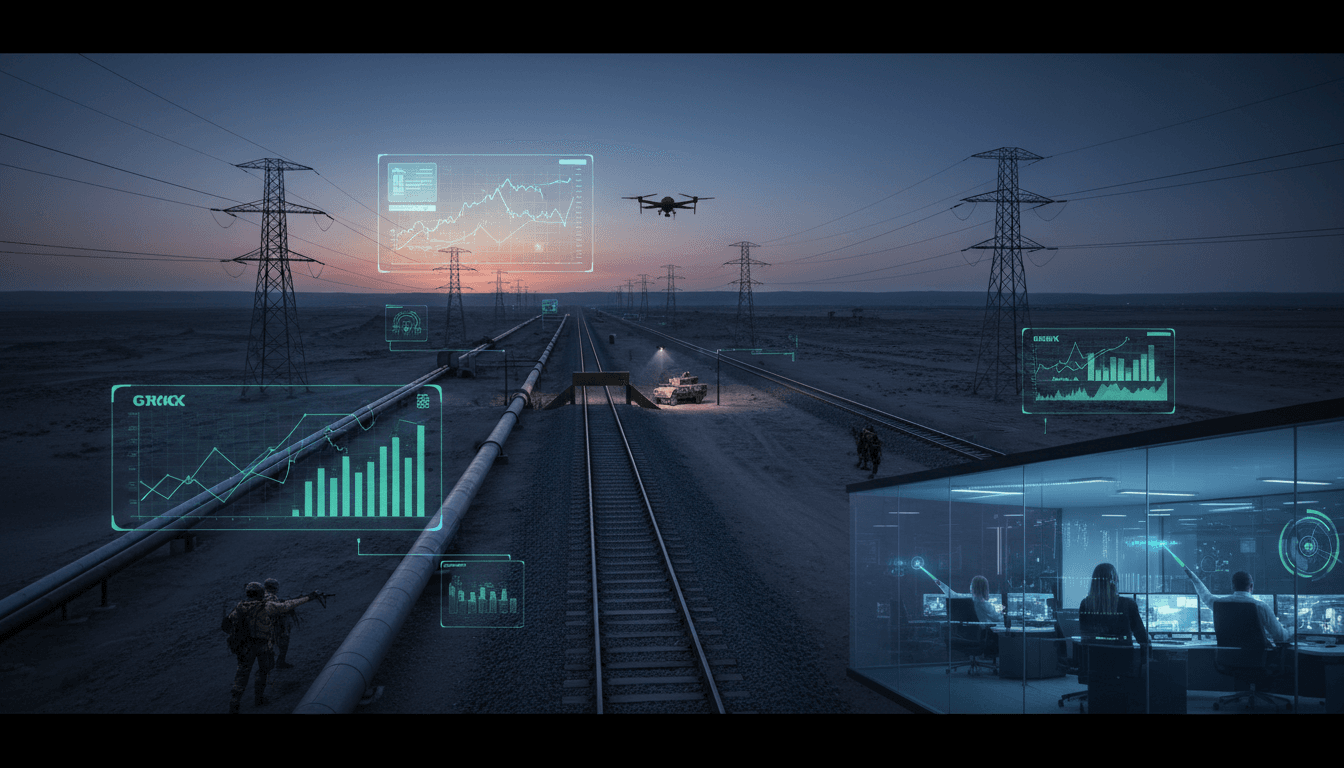

A quiet shift is underway in Africa’s security landscape: China is moving from primarily financing infrastructure to actively shaping security relationships around the same assets it funds. That shift is visible in the details—new defense attachés, joint training agreements, technology transfer, and local defense-industrial projects tied to unmanned systems and explosives manufacturing.

For defense and public-sector leaders, the interesting question isn’t whether China is “expanding influence” (it is). The question is whether Beijing’s approach creates predictable friction points—and how AI in government can surface those risk signals early enough to act on them. If you manage national security planning, intelligence analysis, public safety, or critical infrastructure protection, this matters because Africa is becoming a proving ground for how emerging powers protect overseas interests using a blend of diplomacy, arms sales, private security, and information operations.

What follows is a practical read of the situation through an “AI in Defense & National Security” lens: what China appears to be doing, where it can backfire, and how to build an AI-enabled monitoring and response playbook without sleepwalking into escalation or analytic blind spots.

What Beijing’s security strategy in Africa looks like in 2025

China’s current approach is best described as asset-linked security expansion: deepen security cooperation in places where Chinese investments (or future projects) face political instability, insurgency, or elite competition.

The publicly visible indicators are straightforward:

- Expanded defense representation: new or elevated military attaché presence and higher-touch engagement with defense leadership.

- Security cooperation packages: joint exercises, professional military education, and technology transfer agreements.

- Defense-industrial footholds: partnerships that localize production (including unmanned aerial vehicles) and munitions-adjacent manufacturing.

- Political timing: security engagement that aligns with election cycles, succession uncertainty, or regime consolidation.

Uganda: from projects to protection

Uganda illustrates the “protect the portfolio” logic. Chinese engagement has included defense cooperation discussions at senior levels, follow-on visits to China, and agreements that span training, exercises, and technology sharing. Uganda has also been associated with defense-related ventures involving UAV production and explosives manufacturing.

The strategic tell is that security cooperation is appearing alongside economic reprioritization. Energy infrastructure and extractive projects can become more salient than headline rail projects because energy assets are harder to pause, more politically sensitive, and more exposed to sabotage.

The Sahel: backing regimes when the West steps back

In the Sahel, the pattern looks different: engagement is occurring amid reduced Western influence and the consolidation of junta-led governments. Beijing’s defense signaling—such as appointing defense attachés—fits a model where China positions itself as a reliable partner to governments that want regime security, equipment, and external legitimacy.

This is where backfire risk rises fast. The Sahel is a dense knot of insurgent violence, competing external actors, sanctions politics, and information warfare. Security cooperation here can turn into reputational damage or operational entanglement with very little notice.

Where this strategy can backfire (and why it’s predictable)

Backfire is not a mystery; it’s a set of recurring dynamics that show up whenever an external power ties itself to contested politics and coercive capacity.

1) Elite politics turns partners into liabilities

When security cooperation aligns with a specific leader, faction, or succession plan, it can age poorly. If power shifts, yesterday’s “trusted partner” becomes today’s evidence of interference.

Risk signal: a surge in defense agreements and high-level security visits within 18–24 months of a contentious election or leadership transition.
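
As a minimal sketch of how this signal could be checked, the snippet below compares the monthly rate of security engagement events inside a pre-election window with the rate in an earlier baseline period. The event dates, the 24-month window, the 72-month lookback, and the `surge_ratio` helper are all illustrative assumptions, not values drawn from a real dataset.

```python
from datetime import date

def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later` (negative if reversed)."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)

def surge_ratio(events: list[date], election: date,
                window_months: int = 24, lookback_months: int = 72) -> float:
    """Monthly event rate in the pre-election window divided by the
    monthly rate in the earlier baseline period."""
    in_window = 0
    baseline = 0
    for event_day in events:
        gap = months_between(event_day, election)
        if 0 <= gap < window_months:
            in_window += 1          # inside the pre-election window
        elif window_months <= gap < lookback_months:
            baseline += 1           # older event, used as the baseline
        # events after the election or before the lookback are ignored
    window_rate = in_window / window_months
    baseline_rate = baseline / (lookback_months - window_months)
    if baseline_rate == 0:
        return float("inf") if window_rate > 0 else 0.0
    return window_rate / baseline_rate

# Hypothetical dates of defense agreements and high-level security visits.
events = [date(2020, 3, 1), date(2021, 6, 1), date(2024, 2, 1),
          date(2024, 9, 1), date(2025, 1, 1), date(2025, 5, 1)]
ratio = surge_ratio(events, election=date(2026, 1, 15))
print(f"surge ratio: {ratio:.1f}")
```

A ratio well above 1 only says that engagement is clustering ahead of the vote. Whether that clustering is meaningful remains an analyst judgment.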

2) “Security for assets” can look like “security over citizens”

Populations often tolerate foreign economic projects—until security arrangements appear to prioritize external assets over local welfare. If policing, surveillance, or force is perceived as protecting foreign stakes, the narrative becomes combustible.

Risk signal: sustained local protest discourse linking foreign projects to repression, corruption, land disputes, or environmental harm.

3) Proliferation and blowback from drones and explosives ecosystems

Localizing production or transferring know-how around UAVs and explosives can strengthen state capacity. It can also:

- leak into black markets,

- enable coercive abuse,

- intensify arms races among neighbors,

- improve insurgent countermeasures when systems are captured.

Risk signal: a mismatch between rapid capability growth (UAV availability, training throughput) and weak governance indicators (procurement transparency, end-use monitoring, judiciary independence).
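
One hedged way to express this mismatch numerically is to score capability growth and governance strength on the same 0 to 1 scale and look at the gap. The `CountryProfile` type, the indicator names, and every value below are hypothetical, analyst-assigned inputs used only for illustration.

```python
from dataclasses import dataclass

@dataclass
class CountryProfile:
    name: str
    capability_growth: float       # 0..1, higher means faster capability growth
    governance: dict[str, float]   # indicator -> 0..1, higher means stronger

def mismatch_score(profile: CountryProfile) -> float:
    """Gap between capability growth and mean governance strength.
    Returns 0.0 when governance keeps pace with or exceeds capability."""
    mean_governance = sum(profile.governance.values()) / len(profile.governance)
    return max(0.0, profile.capability_growth - mean_governance)

# Hypothetical profile; the numbers are placeholders, not assessments.
example = CountryProfile(
    name="Country A",
    capability_growth=0.8,
    governance={
        "procurement_transparency": 0.3,
        "end_use_monitoring": 0.2,
        "judicial_independence": 0.4,
    },
)
print(f"{example.name}: mismatch {mismatch_score(example):.2f}")
```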

4) Private security creates ambiguous accountability

Where state-to-state security cooperation is politically sensitive, private security firms can fill gaps. That can help protect facilities—but it also introduces unclear rules of engagement, weak transparency, and incident risk.

Risk signal: increased reporting of “unattributed” security incidents around major projects, especially when official narratives are thin or inconsistent.

5) Information operations can trip over their own messaging

China often frames its engagement as non-interference and development-first. Expanded security activity can collide with that narrative, giving opponents a clean wedge issue.

Risk signal: messaging that simultaneously claims non-interference while announcing deeper security ties, tech transfer, or security deployments.

The AI angle: how governments can detect and respond earlier

AI doesn’t “predict coups.” What it can do—when used correctly—is help public-sector teams triage weak signals, connect disparate indicators, and reduce the time between “something is changing” and “we have a defensible assessment.”

Here are four AI-in-government patterns that work particularly well for this problem set.

1) AI-enabled geopolitical risk monitoring (OSINT at scale)

The baseline capability is a monitoring stack that ingests multilingual open-source content—local media, government statements, procurement notices, social platforms, and conflict reporting—then summarizes trends.

What makes it useful isn’t the summarization; it’s structured extraction:

- who met whom,

- which units or ministries are named,

- what equipment categories appear,

- where facilities are located,

- what dates align with elections, protests, or attacks.

A well-tuned system produces daily “signal cards” rather than a flood of alerts.
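
To make the "signal card" idea concrete, here is a minimal sketch that groups already-extracted facts into one card per country per day. It assumes an upstream extraction step (not shown) has produced the `Extraction` records. The field names and the `build_cards` helper are illustrative choices, not a reference schema.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class Extraction:
    """One structured fact pulled from a source document (upstream step assumed)."""
    day: date
    country: str
    participants: tuple[str, ...]
    equipment: Optional[str]
    location: Optional[str]
    source: str

@dataclass
class SignalCard:
    """Daily roll-up of everything extracted for one country."""
    day: date
    country: str
    participants: set[str] = field(default_factory=set)
    equipment: set[str] = field(default_factory=set)
    locations: set[str] = field(default_factory=set)
    sources: set[str] = field(default_factory=set)

def build_cards(items: list[Extraction]) -> list[SignalCard]:
    """Merge extractions into one card per (day, country), sorted by date."""
    cards: dict[tuple[date, str], SignalCard] = {}
    for item in items:
        key = (item.day, item.country)
        if key not in cards:
            cards[key] = SignalCard(item.day, item.country)
        card = cards[key]
        card.participants.update(item.participants)
        if item.equipment:
            card.equipment.add(item.equipment)
        if item.location:
            card.locations.add(item.location)
        card.sources.add(item.source)
    return sorted(cards.values(), key=lambda c: (c.day, c.country))

# Hypothetical extractions from two sources reporting the same meeting.
items = [
    Extraction(date(2025, 5, 2), "Country A", ("Defense Ministry", "Attaché"),
               "UAV", "Capital", "local-media"),
    Extraction(date(2025, 5, 2), "Country A", ("Defense Ministry",),
               None, "Capital", "official-statement"),
]
cards = build_cards(items)
print(len(cards), sorted(cards[0].sources))
```

Keeping the source set on each card lets an analyst see at a glance whether a card rests on one report or on several independent ones.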

2) Entity resolution for “who is actually connected to what”

Most analytic failures in influence and security cooperation come from identity confusion: similar names, shell companies, renamed agencies, or “new” firms that are old actors in new packaging.

AI-assisted entity resolution helps analysts answer:

- Is this defense venture connected to a sanctioned entity?

- Are the same intermediaries appearing across multiple countries?

- Do procurement patterns match known supply networks?

When done well, this is less about surveillance and more about procurement integrity and risk control.
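
A simple starting point for this kind of matching, sketched under stated assumptions, is to normalize organization names and compare them with a string-similarity score. The suffix list, the 0.85 threshold, and the company names below are hypothetical. A production system would add identifiers such as registration numbers, addresses, and ownership data before treating any match as real.

```python
import re
import unicodedata
from difflib import SequenceMatcher

# Illustrative list of legal-form tokens to ignore when comparing names.
LEGAL_SUFFIXES = {"ltd", "limited", "co", "company", "inc", "corp",
                  "corporation", "group", "plc", "llc"}

def normalize(name: str) -> str:
    """Lowercase, strip accents and punctuation, drop legal-form tokens."""
    text = unicodedata.normalize("NFKD", name)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)

def similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def candidate_matches(name: str, watchlist: list[str],
                      threshold: float = 0.85) -> list[tuple[str, float]]:
    """Watchlist entries that resemble `name`, best match first.
    These are candidates for analyst review, not confirmed links."""
    scored = [(entry, similarity(name, entry)) for entry in watchlist]
    hits = [(entry, score) for entry, score in scored if score >= threshold]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)

# Hypothetical names, for illustration only.
watchlist = ["Example Defense Co.", "Unrelated Mining Group"]
matches = candidate_matches("Example Defence Ltd", watchlist)
print(matches)
```

Spelling variants such as "Defence" and "Defense" score high here, which is the point: the output is a shortlist for a human to verify.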

3) Predictive maintenance logic applied to political risk

A useful mental model: treat political stability like maintenance. You’re not forecasting an exact failure time; you’re identifying rising likelihood of disruption.

AI can combine structured indicators such as:

- election calendars,

- conflict-event frequency,

- commodity price shocks,

- infrastructure attacks near corridors,

- shifts in military leadership,

- public sentiment toward foreign projects.

The output should be scenario bands (low/medium/high disruption risk) tied to explicit assumptions. That makes it actionable for planners.
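
The banding step can be sketched as a transparent weighted score, so that every assumption is visible and contestable. The weights, the band thresholds, and the indicator values below are placeholders chosen for illustration. A real model would need calibration against historical cases.

```python
# Illustrative weights; each indicator is expected on a 0..1 scale.
WEIGHTS = {
    "election_proximity": 0.20,
    "conflict_event_frequency": 0.25,
    "commodity_price_shock": 0.10,
    "corridor_attacks": 0.20,
    "military_leadership_churn": 0.10,
    "negative_project_sentiment": 0.15,
}

def disruption_score(indicators: dict[str, float]) -> float:
    """Weighted average of the indicators, in the range 0..1."""
    missing = sorted(set(WEIGHTS) - set(indicators))
    if missing:
        raise ValueError(f"missing indicators: {', '.join(missing)}")
    total = sum(WEIGHTS[name] * indicators[name] for name in WEIGHTS)
    return total / sum(WEIGHTS.values())

def band(score: float, medium_at: float = 0.33, high_at: float = 0.66) -> str:
    """Map a score to a scenario band using explicit thresholds."""
    if score >= high_at:
        return "high"
    if score >= medium_at:
        return "medium"
    return "low"

# Hypothetical indicator readings for one country.
readings = {
    "election_proximity": 0.9,
    "conflict_event_frequency": 0.6,
    "commodity_price_shock": 0.2,
    "corridor_attacks": 0.5,
    "military_leadership_churn": 0.3,
    "negative_project_sentiment": 0.7,
}
score = disruption_score(readings)
print(f"score={score:.3f} band={band(score)}")
```

Refusing to score when an indicator is missing is a deliberate choice: a silent default would hide a collection gap behind a confident-looking band.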

4) Cybersecurity and influence: monitoring narrative movement

Security strategies travel with narratives: “anti-terror partnership,” “sovereignty,” “anti-colonial,” “development wins,” “Western abandonment.” AI-based narrative analysis can track when these frames spike, which audiences amplify them, and what events correlate.

For national security teams, the point isn’t to “win propaganda.” It’s to understand when narrative shifts precede physical risk—protests, attacks on facilities, diplomatic expulsions, or legislative moves.

A practical rule: when political rhetoric starts naming specific projects, companies, or foreign security partners, operational risk usually follows.
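
Detecting when a narrative frame "spikes" can start with something as simple as a rolling z-score over daily mention counts. The sketch below assumes an upstream step has already counted mentions of one frame per day. The 14-day baseline, the threshold of 3, and the floor on the standard deviation are illustrative choices.

```python
from statistics import mean, stdev

def spike_days(counts: list[int], baseline_days: int = 14,
               z_threshold: float = 3.0, min_sigma: float = 1.0) -> list[int]:
    """Indexes of days whose count sits far above the trailing baseline."""
    flagged = []
    for i in range(baseline_days, len(counts)):
        window = counts[i - baseline_days:i]
        mu = mean(window)
        # Floor sigma so a very quiet baseline does not flag tiny changes.
        sigma = max(stdev(window), min_sigma)
        if (counts[i] - mu) / sigma >= z_threshold:
            flagged.append(i)
    return flagged

# Hypothetical daily mentions of a frame that names a specific project.
daily_mentions = [5, 6, 4, 5, 7, 5, 6, 4, 5, 6, 5, 4, 6, 5, 6, 30, 5]
print(spike_days(daily_mentions))
```

A flagged day is a prompt to read the underlying posts and check which audiences are amplifying them, not a finding in itself.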

A practical playbook for public-sector teams (what to implement next)

If you’re building AI-enabled workflows for defense and national security in government and the public sector, focus on outcomes that stand up in interagency meetings and budget reviews.

Build a “China security engagement” indicator set

Start with 12–20 indicators that can be monitored consistently, then refine. Examples:

- New defense attaché appointments or expanded embassy defense staffing

- Joint exercise announcements and training quotas

- Defense-industrial joint ventures (especially UAVs, ISR systems, munitions-adjacent production)

- Port/airfield upgrades with dual-use characteristics

- Private security contracting changes near major projects

- Arms transfer chatter (even if unconfirmed) across multiple sources

- Election-linked security cooperation spikes

- High-frequency leadership meetings with defense chiefs

- Legal changes affecting basing, overflight, or security jurisdiction

- Narrative spikes linking China to regime security or repression
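
An indicator set is easier to monitor consistently when it lives in a small, validated registry. The sketch below is one possible shape: the `Indicator` fields, source types, and review cadences are assumptions, and only three of the example indicators above are encoded to keep it short.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Indicator:
    key: str
    description: str
    source_type: str           # e.g. "official statement", "media"
    review_cadence_days: int   # how often an analyst revisits it

def validate(indicators: list[Indicator],
             min_count: int = 12, max_count: int = 20) -> list[str]:
    """Return a list of problems; an empty list means the set is usable."""
    problems = []
    keys = [ind.key for ind in indicators]
    duplicates = sorted({k for k in keys if keys.count(k) > 1})
    if duplicates:
        problems.append(f"duplicate keys: {', '.join(duplicates)}")
    if not min_count <= len(indicators) <= max_count:
        problems.append(
            f"expected {min_count}-{max_count} indicators, found {len(indicators)}")
    for ind in indicators:
        if ind.review_cadence_days <= 0:
            problems.append(f"{ind.key}: review cadence must be positive")
    return problems

# A deliberately incomplete starter set; cadences are placeholders.
STARTER_SET = [
    Indicator("attache_appointments",
              "New defense attaché appointments or expanded defense staffing",
              "official statement", 30),
    Indicator("joint_exercises",
              "Joint exercise announcements and training quotas",
              "official statement", 30),
    Indicator("private_security_contracts",
              "Private security contracting changes near major projects",
              "media", 14),
]
for problem in validate(STARTER_SET):
    print(problem)
```

Run as written, the check reports that the starter set is too small, which is the intended nudge toward the 12–20 range before monitoring begins.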

Stand up an analytic workflow that’s “human-led, AI-assisted”

What works in practice:

- AI produces daily structured briefs and highlights anomalies.

- Analysts validate, add context, and write the assessment.

- A reviewer checks for overconfidence, source bias, and misattribution.

This is how you prevent the two classic failure modes: automation bias (“the model said it”) and analysis paralysis (“we can’t be sure”).

Red-team the backfire scenarios

At least quarterly, run a short red-team exercise:

- What if the partner government changes?

- What if an incident occurs involving private security?

- What if UAV tech leaks to non-state actors?

- What if a disinformation campaign targets a specific project corridor?

Your AI tooling should support this by retrieving historical analogs, mapping stakeholders, and stress-testing assumptions.

Define response options before the crisis

Governments respond faster when options are pre-baked. Create a tiered menu:

- Diplomatic: demarches, multilateral statements, quiet engagement with regional bodies

- Security assistance: capacity building that is governance-conditional and measurable

- Cyber: hardening critical infrastructure and election systems; monitoring coordinated inauthentic behavior

- Economic: transparency support for procurement and debt; resilience planning for supply corridors

AI helps by simulating second-order effects: “If we do X, who will frame it as Y, and where?”

What this means for the “AI in Government & Public Sector” agenda

AI in government and the public sector isn’t only about chatbots and service delivery. In defense and national security, the near-term value is more specific: faster, more defensible situational awareness.

China’s expanding security posture in Africa is a clean test case because it blends politics, procurement, military cooperation, technology transfer, and narratives. That’s exactly the kind of multi-domain problem where human teams struggle to connect signals quickly—and where AI, used responsibly, can shorten decision cycles without cutting humans out.

The next year will likely bring more “attaché diplomacy,” more localized defense production deals, and sharper narrative competition around sovereignty and development. The governments that do best won’t be the ones with the loudest statements. They’ll be the ones with the best early-warning systems and the discipline to act on them.

If your team is evaluating AI for intelligence analysis, cybersecurity, or strategic influence monitoring, the practical question to ask is simple: Which risk indicators can we detect 30–90 days earlier than we do today—and what decision will that earlier detection enable?