Reservoir computing edge AI delivers real-time time-series prediction at ultra-low power—ideal for grid forecasting, predictive maintenance, and field devices.

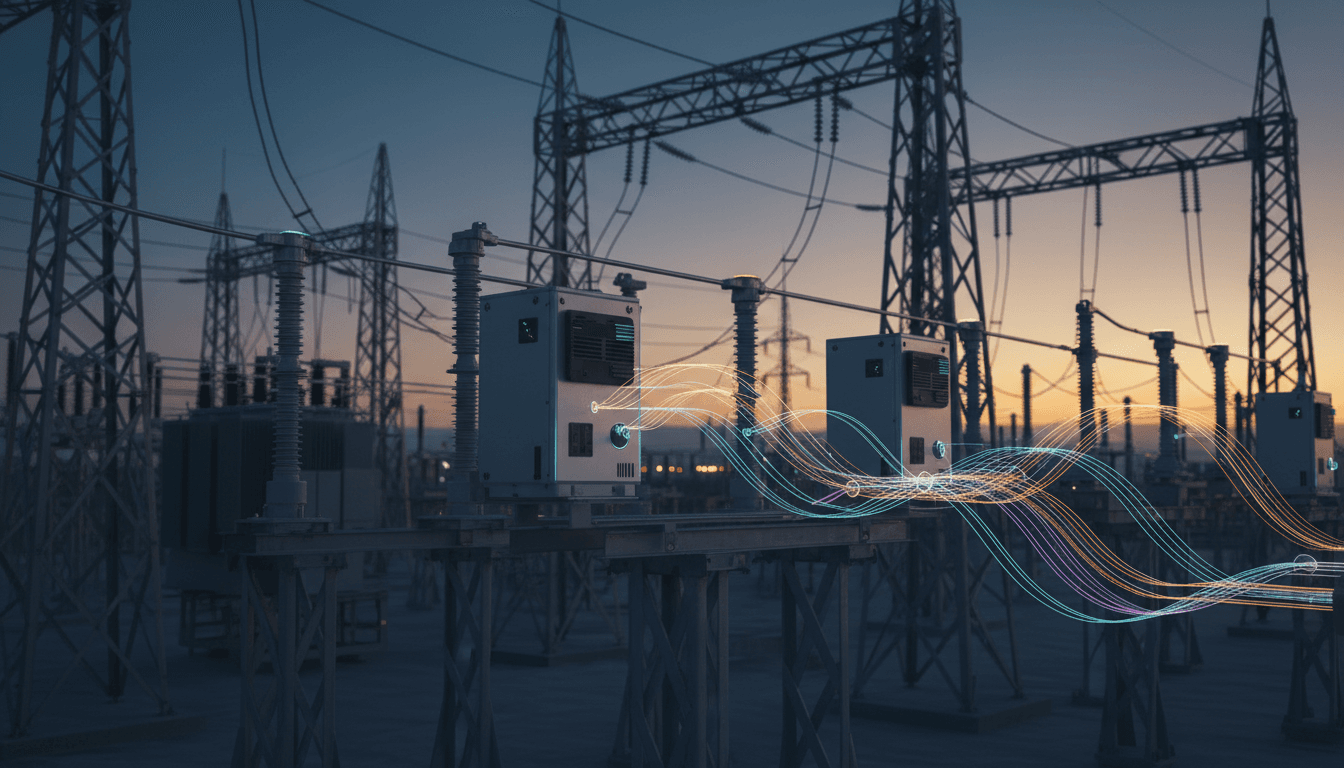

Reservoir Computing Edge AI for Real-Time Grid Forecasts

A tiny chip that beats humans at rock-paper-scissors sounds like a party trick. It isn’t.

The same design choice that lets this chip predict your next hand gesture fast enough to win in real time is exactly what energy and utility teams have been asking for: ultra-low-power edge AI that can make a prediction now, not after the data takes a round-trip to the cloud.

Researchers at Hokkaido University and engineers at TDK built a CMOS chip using reservoir computing—a machine learning approach that’s unusually good at time-series prediction with low energy use. Their demo used a thumb-mounted acceleration sensor to learn a person’s gesture patterns, then predict the next motion within the short “rock-paper-scissors…shoot” window. Swap “thumb motion” for “transformer vibration,” “feeder load,” or “wind ramp,” and you start to see why this matters for AI in Energy & Utilities.

Why edge AI is stalling in utilities (and what this chip changes)

Utilities don’t lack data—they lack time, power, and deployment simplicity at the edge. Most grid and asset intelligence still depends on shipping raw or semi-processed telemetry to centralized systems. That introduces three persistent headaches.

First: latency. If your model’s output arrives after the control window closes, it’s not predictive—it’s historical. That’s a real issue for substation automation, feeder reconfiguration, and inverter controls where milliseconds to seconds matter.

Second: power and thermal budgets. A surprising amount of edge hardware in energy is installed in places that don’t welcome hungry compute: pole-top devices, remote reclosers, solar farms, offshore assets, or battery enclosures where heat and power draw are constant enemies.

Third: operational friction. Edge AI sounds great until you try to keep models updated across thousands of devices, deal with intermittent connectivity, and satisfy cybersecurity requirements.

The chip targets the first two problems directly. The team reported 20 µW per core, or 80 µW total for the four-core chip in their demo. That’s not a small improvement; that’s a different class of device. When inference runs continuously at tens of microwatts, you can place intelligence in far more locations without re-architecting your power system just to support the AI.
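To put those figures in perspective, here is a back-of-envelope energy budget in Python. Only the per-core power and the core count come from the reported demo. The coin-cell capacity is a hypothetical comparison point, and the estimate covers the chip alone, ignoring sensors, radios, and battery self-discharge.

```python
# Back-of-envelope energy budget for the reported chip power.
CORE_POWER_W = 20e-6   # 20 µW per core (reported figure)
NUM_CORES = 4          # four-core chip (reported figure)

chip_power_w = CORE_POWER_W * NUM_CORES
HOURS_PER_YEAR = 24 * 365
energy_per_year_wh = chip_power_w * HOURS_PER_YEAR

# Hypothetical comparison point: a 3 V coin cell with 220 mAh nominal capacity.
battery_wh = 3.0 * 0.220
ideal_years = battery_wh / energy_per_year_wh

print(f"chip power: {chip_power_w * 1e6:.0f} µW")
print(f"energy per year of continuous operation: {energy_per_year_wh:.2f} Wh")
print(f"idealized runtime on a {battery_wh:.2f} Wh cell: {ideal_years:.2f} years")
```

The point of the sketch is the order of magnitude: a full year of always-on operation costs well under one watt-hour for the chip itself, so the rest of the device is likely to dominate the power budget.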

Reservoir computing, explained like you’ll actually use it

Reservoir computing is a time-series prediction method where only the final layer is trained. That’s the practical difference that makes it attractive for edge use.

Traditional neural networks (especially deep networks) learn by adjusting huge numbers of weights using backpropagation. That’s powerful, but it’s also computationally expensive—often too expensive for always-on prediction in constrained environments.

Reservoir computing flips the workflow:

- You feed your time-series signal into a fixed, recurrent network (the “reservoir”) with lots of internal loops.

- Those loops create a kind of fading memory of recent history.

- Instead of training the entire network, you train only a lightweight readout layer that maps reservoir states to the output you need.

Here’s the one-liner you can reuse internally:

Reservoir computing turns time-series history into rich features using fixed dynamics, then trains a small readout layer to make fast predictions.
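If you want to see that workflow in software, the following is a minimal echo state network sketch in NumPy. It is a digital stand-in for the idea, not the team’s analog circuit. The reservoir size, spectral radius, input scale, ridge penalty, and the synthetic two-tone signal are all assumptions chosen for illustration.

```python
import numpy as np

def make_reservoir(n_nodes, spectral_radius, input_scale, rng):
    """Build fixed random weights. These are never trained."""
    w = rng.uniform(-1.0, 1.0, size=(n_nodes, n_nodes))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    w_in = rng.uniform(-input_scale, input_scale, size=n_nodes)
    return w, w_in

def run_reservoir(w, w_in, signal):
    """Drive the reservoir with a 1-D signal and record every state."""
    state = np.zeros(w.shape[0])
    states = np.empty((len(signal), w.shape[0]))
    for t, u in enumerate(signal):
        state = np.tanh(w @ state + w_in * u)
        states[t] = state
    return states

def train_readout(states, targets, ridge):
    """Ridge regression on reservoir states: the only trained part."""
    a = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(a, states.T @ targets)

rng = np.random.default_rng(0)
t = np.arange(2000)
signal = np.sin(0.1 * t) + 0.5 * np.sin(0.031 * t)  # synthetic test signal

w, w_in = make_reservoir(n_nodes=100, spectral_radius=0.9,
                         input_scale=0.5, rng=rng)
states = run_reservoir(w, w_in, signal[:-1])  # state at t has seen signal[:t+1]
targets = signal[1:]                          # target is the next sample

washout, split = 100, 1500                    # discard start-up transient
w_out = train_readout(states[washout:split], targets[washout:split], ridge=1e-4)
pred = states[split:] @ w_out
rmse = np.sqrt(np.mean((pred - targets[split:]) ** 2))
print(f"next-step RMSE on held-out samples: {rmse:.4f}")
```

Notice that training is a single linear solve over recorded states. That is the property that makes the readout cheap enough to fit on, or near, the device.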

Why “edge of chaos” is a feature, not a bug

Reservoirs are often tuned to operate near the edge of chaos: complex enough to represent many states, stable enough to remain useful. That richness, just short of chaotic, is what helps in nonlinear systems like:

- feeder load profiles with abrupt demand changes

- wind and solar generation with ramps and gusts

- equipment vibration signals that drift before failure

- weather-driven demand swings

Energy systems aren’t just noisy; they’re dynamical. Reservoir computing is built for that.

The hardware angle: why analog CMOS matters for energy deployments

The team implemented an analog reservoir-computing circuit in CMOS to minimize both energy and latency. In practice, this matters because many energy edge deployments want “always-on,” not “wake up, batch process, go back to sleep.”

According to the published summary, each artificial neuron was implemented as an analog circuit node with:

- a nonlinear resistor (for nonlinearity)

- a memory element based on MOS capacitors (for state)

- a buffer amplifier (to manage signal flow)

Their chip used four cores, each with 121 nodes, and adopted a simple cycle reservoir (a large loop) to reduce wiring complexity while retaining useful dynamics.
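A simple cycle reservoir is easy to picture as a weight matrix: each node listens only to its predecessor in the ring. The sketch below builds one with 121 nodes to match the reported core size. The coupling weight of 0.9 is a hypothetical value, and a matrix like this is only a software abstraction of what the analog circuit does physically.

```python
import numpy as np

def simple_cycle_reservoir(n_nodes=121, cycle_weight=0.9):
    """Ring topology: node i receives input only from node i - 1."""
    w = np.zeros((n_nodes, n_nodes))
    for i in range(n_nodes):
        w[i, (i - 1) % n_nodes] = cycle_weight
    return w

w_ring = simple_cycle_reservoir()
radius = np.max(np.abs(np.linalg.eigvals(w_ring)))
print(f"connections: {np.count_nonzero(w_ring)} of {w_ring.size} possible")
print(f"spectral radius: {radius:.3f}")
```

The ring needs one connection per node instead of one per node pair, which is why the topology keeps wiring manageable. Its spectral radius equals the magnitude of the single coupling weight, so one parameter sets how quickly the reservoir’s memory fades.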

Here’s the stance I’ll take: utilities should pay attention to analog and mixed-signal AI again. For years, the default has been “digital accelerators everywhere.” That’s fine in data centers. At the grid edge, analog approaches can win on power, latency, and even electromagnetic practicality—especially when the task is narrow (predict the next step, detect drift, forecast a ramp) rather than general-purpose vision or language.

Where ultra-low-power prediction fits in energy and utilities

If you can predict the next step of a time series with microwatt-level power draw, you can move intelligence closer to the asset. That changes both architecture and outcomes.

Predictive maintenance that doesn’t need a constant uplink

Most predictive maintenance programs still centralize analytics: stream vibration/temperature/current signatures to a platform, then run models. That works—until bandwidth, cost, or connectivity makes it brittle.

Reservoir computing at the edge enables a different pattern:

- Run continuous micro-inference locally (anomaly score, next-step prediction error, drift).

- Transmit only events and summaries, not raw high-frequency data.

- Escalate to richer diagnostics only when needed.

For rotating equipment (pumps, compressors, turbines) and grid assets (transformers, breakers), this “predict locally, report selectively” model can cut data movement drastically while improving time-to-detection.
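Here is one way the “predict locally, report selectively” pattern could look in code. This is a sketch under stated assumptions: the vibration signal is synthetic, the `predicted` array is a stand-in for whatever local model has learned healthy behavior, and the threshold and smoothing factor are hypothetical values you would tune per asset.

```python
import numpy as np

def select_events(actual, predicted, threshold, smoothing=0.2):
    """Return sample indices where the smoothed prediction error
    rises above the threshold (onsets only, not every sample)."""
    events = []
    score = 0.0
    above = False
    for i, (a, p) in enumerate(zip(actual, predicted)):
        # Exponentially weighted moving average of the absolute error.
        score = (1 - smoothing) * score + smoothing * abs(a - p)
        if score > threshold and not above:
            events.append(i)
        above = score > threshold
    return events

# Synthetic vibration-like signal with a fault signature injected at sample 600.
t = np.arange(1000)
actual = np.sin(0.2 * t)
actual[600:] += 0.8 * np.sin(1.3 * t[600:])
predicted = np.sin(0.2 * t)  # stand-in for a predictor of healthy behavior

events = select_events(actual, predicted, threshold=0.3)
print(f"samples observed: {len(actual)}, events to transmit: {len(events)}")
print("event onsets:", events)
```

The device sees every sample but only sends the onsets, so the uplink carries a handful of events instead of the raw stream.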

Real-time demand forecasting where it actually helps: the feeder

System-level demand forecasting is useful; feeder-level forecasting is operational. It informs switching decisions, voltage control, and congestion management.

Edge AI can deliver short-horizon forecasts (seconds to minutes) that central systems often can’t act on quickly enough. With reservoir computing, the utility can run a light model per feeder or per neighborhood device, tuned to local patterns:

- EV charging clustering

- temperature-sensitive loads (winter heating, summer cooling)

- industrial step loads

- behind-the-meter solar variability

If you’re integrating more electrification in 2025—EVs, heat pumps, flexible loads—those short-horizon predictions stop being “nice to have.” They’re how you keep operations calm.

Grid optimization and control loops that don’t tolerate latency

The rock-paper-scissors demo makes the constraint concrete: predict the next move fast enough to respond inside a narrow time window. Grid control is the same constraint wearing different clothes.

Use cases that benefit from low-latency inference include:

- inverter control support (fast ramp prediction, smoothing)

- fault precursor detection (signature shifts before protection events)

- oscillation monitoring (early warning from PMU-like streams)

- microgrid energy management (forecasting net load and state transitions)

A practical rule: if your operator or controller needs the answer before the next measurement arrives, cloud-only analytics won’t cut it.

Weather-sensitive operations without running a full weather model

The team demonstrated next-step prediction on a logistic map (a classic chaotic system) and on weather time series. Utilities don’t need a full weather forecast at every pole. They need local, short-horizon signals:

- is wind output about to ramp up or down?

- is irradiance about to drop due to cloud edge effects?

- is temperature-driven demand about to spike?

Reservoir computing can act as a local “nowcasting” primitive embedded near solar farms, wind sites, or critical feeders.
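For readers who haven’t met the logistic map, this short sketch shows why it is a standard benchmark for next-step prediction. The map is $x_{n+1} = r\,x_n(1 - x_n)$. The source doesn’t state which parameter the team used, so $r = 4$ (a commonly used fully chaotic setting) and the starting values below are assumptions.

```python
import numpy as np

def logistic_map(x0, r=4.0, n_steps=100):
    """Iterate x[n+1] = r * x[n] * (1 - x[n]) starting from x0."""
    traj = np.empty(n_steps)
    x = x0
    for n in range(n_steps):
        traj[n] = x
        x = r * x * (1.0 - x)
    return traj

# Two starting points that differ by one part in a billion.
a = logistic_map(0.2)
b = logistic_map(0.2 + 1e-9)
gap = np.abs(a - b)

print(f"gap after 5 steps:  {gap[5]:.2e}")
print(f"largest gap in run: {gap.max():.2f}")
```

Nearby trajectories separate quickly, so long-horizon forecasts of a system like this are hopeless while the next step remains predictable. That is the same regime as a local nowcast: short horizon, fresh data, fast answer.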

What to ask before adopting reservoir computing in utility edge AI

Reservoir computing is strong for time-series prediction, but it’s not a universal replacement for deep learning. If you’re evaluating it for an energy AI roadmap, the right questions are concrete.

1) Is your problem truly time-series and dynamical?

Good fits:

- next-step prediction

- anomaly detection via prediction error

- forecasting ramps and transitions

- modeling nonlinear, history-dependent behavior

Poor fits:

- high-dimensional perception tasks (complex imagery)

- long-horizon planning without recent-history dependence

2) What’s your latency budget—and where does it break today?

Map the end-to-end loop:

- sensor sampling

- preprocessing

- inference

- decision logic

- actuation / alerting

Edge reservoir hardware is compelling when the bottleneck is inference latency or compute power rather than sensor or actuation delays.
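A quick way to run that mapping exercise is to write the loop down as numbers and find the dominant stage. Every latency in this sketch is a hypothetical placeholder, not a measurement; substitute your own figures for each stage and for the deadline.

```python
def check_loop_budget(stages_ms, deadline_ms):
    """Sum the stage latencies and identify the slowest stage."""
    total_ms = sum(stages_ms.values())
    bottleneck = max(stages_ms, key=stages_ms.get)
    return {
        "total_ms": total_ms,
        "bottleneck": bottleneck,
        "meets_deadline": total_ms <= deadline_ms,
    }

# Hypothetical numbers for illustration only.
cloud_loop = {
    "sensor sampling": 1.0,
    "preprocessing": 2.0,
    "inference": 120.0,        # includes a network round trip
    "decision logic": 1.0,
    "actuation / alerting": 8.0,
}
edge_loop = dict(cloud_loop, inference=0.5)  # same loop, local inference

deadline_ms = 20.0  # hypothetical control window
cloud = check_loop_budget(cloud_loop, deadline_ms)
edge = check_loop_budget(edge_loop, deadline_ms)
print("cloud:", cloud)
print("edge: ", edge)
```

In this made-up example, moving inference to the edge shifts the bottleneck to actuation. Once that happens, faster inference hardware buys nothing more, and the next improvement has to come from somewhere else in the loop.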

3) How will you handle drift and seasonal pattern changes?

Energy time series drift constantly: seasons, DER penetration, new EV chargers, equipment aging.

One advantage of reservoir computing is that the readout training can be lightweight. That opens doors to:

- periodic on-device recalibration

- site-specific personalization (like the rock-paper-scissors gesture training)

- fast retraining after topology changes

But you still need governance: versioning, rollback, and monitoring.
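One common way to recalibrate a linear readout incrementally is recursive least squares with a forgetting factor. The sketch below swaps in that technique as an illustration; the source doesn’t say how the team trains its readout. The class name `RLSReadout`, the forgetting factor, and the random feature vectors standing in for reservoir states are all hypothetical.

```python
import numpy as np

class RLSReadout:
    """Linear readout updated one sample at a time by recursive least squares."""

    def __init__(self, n_features, forgetting=0.99, init_scale=100.0):
        self.w = np.zeros(n_features)
        self.p = np.eye(n_features) * init_scale  # inverse-covariance estimate
        self.forgetting = forgetting              # < 1 discounts old samples

    def predict(self, state):
        return float(self.w @ state)

    def update(self, state, target):
        """Correct the weights using one (state, target) pair."""
        error = target - self.predict(state)
        p_s = self.p @ state
        gain = p_s / (self.forgetting + state @ p_s)
        self.w += gain * error
        self.p = (self.p - np.outer(gain, p_s)) / self.forgetting
        return error

# Simulated drift: the true mapping changes halfway through the run.
rng = np.random.default_rng(1)
n_features = 5
w_before = rng.normal(size=n_features)
w_after = rng.normal(size=n_features)

readout = RLSReadout(n_features)
errors = []
for step in range(2000):
    state = rng.normal(size=n_features)  # stand-in for a reservoir state
    true_w = w_before if step < 1000 else w_after
    errors.append(abs(readout.update(state, float(true_w @ state))))

print(f"mean |error| just after the drift: {np.mean(errors[1000:1020]):.4f}")
print(f"mean |error| at the end of the run: {np.mean(errors[-20:]):.6f}")
```

Each update costs a few small matrix-vector products, with no stored history and no gradient pass through the reservoir. The flip side is that a readout that adapts on its own is exactly the thing your versioning and rollback process needs to track.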

4) What’s the deployment unit: per asset, per feeder, or per substation?

Reservoir computing performs well when tuned to a specific signal family. In utilities, that often means designing at the right granularity:

- per-transformer for thermal and loading prediction

- per-feeder for net load and voltage behavior

- per-inverter or plant controller for renewable ramps

Trying to build one model for everything is where programs slow down.

“Beats you at rock-paper-scissors” is a proxy for something bigger

The chip doesn’t read minds. It learns motion patterns from an acceleration sensor, then predicts what’s next quickly enough to respond in real time. That’s the story.

The utility story is sharper: edge AI for energy is mostly a race between physics and latency. Loads change, weather shifts, assets degrade, and power electronics respond on tight timelines. If your AI can’t keep up, it becomes reporting, not control.

I’ve found that the best way to evaluate an edge AI approach is to ask a blunt question: what decision gets better because the prediction arrives earlier than it does today? If you can’t name the decision, don’t ship the model.

For teams working on the core themes of the AI in Energy & Utilities series (grid optimization, demand forecasting, predictive maintenance), reservoir computing hardware is a strong signal that the “always-on, ultra-low-power predictor” is becoming realistic.

If you’re exploring how to embed real-time forecasting into field devices (without blowing up your power budget), it’s time to rethink your edge stack. What would you optimize first: predictive maintenance signals, feeder net-load nowcasting, or inverter ramp prediction?