AI data centers are forcing a new grid reality: time-to-power is now the bottleneck. Here’s how utilities can respond with AI-driven grid optimization.

AI Data Centers Are Stress-Testing the Grid—Now What?

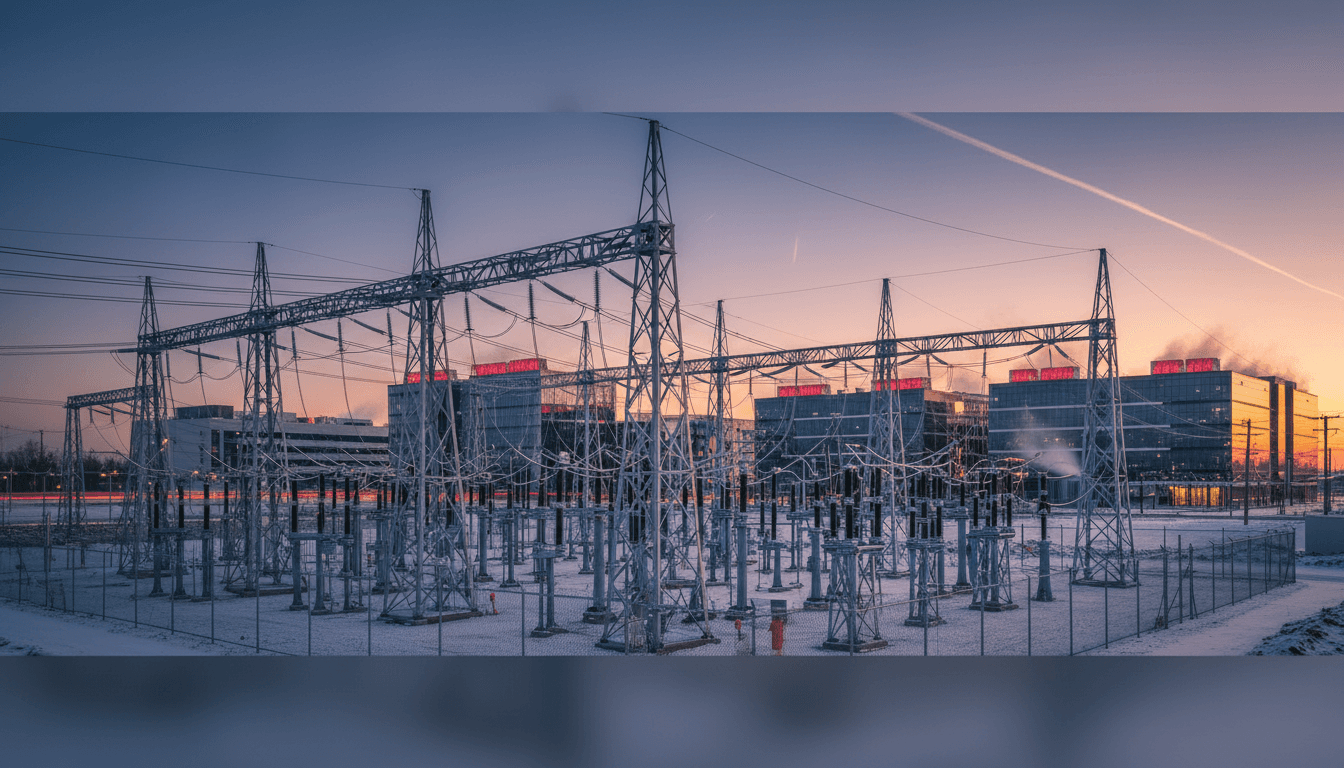

A single large AI data center can demand as much power as a mid-size city—and it’s rarely “steady” demand. It ramps, it spikes, and it needs reliability that most grids were never designed to provide on short notice. That’s why the uncomfortable truth for 2026 planning is this: the AI race is becoming a grid access race.

Todd Fowler’s recent argument that “the energy race is the AI race” lands because it reflects what utilities and developers are seeing right now: queue backlogs, transmission constraints, and permitting timelines that move in years while AI infrastructure scales in quarters. If you run grid operations, generation planning, T&D, or large customer programs, you’re not watching a trend—you’re managing a capacity and interconnection problem with economic and political consequences.

This post is part of our AI in Energy & Utilities series, and I’m going to take a stance: energy companies that treat AI solely as a new load will fall behind. The winners will use AI as a tool for grid optimization, demand forecasting, predictive maintenance, and renewable energy integration—because that’s the only way to meet AI-driven demand growth without breaking affordability or reliability.

The bottleneck isn’t generation—it’s “delivery”

The grid constraint is increasingly about transmission, interconnection, and time-to-power, not just megawatts available somewhere on the system. That’s the key operational shift behind the “energy race” framing.

The source article highlights a core mismatch: our capital deployment model and regulatory processes were built for a slower era. Even where generation can be built quickly, the practical path to energize a new large load often runs through:

- Transmission availability (and the ability to move power to the node where the data center lands)

- Interconnection studies and upgrades (often sequential, often delayed)

- Permitting and siting (local + state + federal layers, with real risk of rework)

- Substation and feeder build-outs (supply chain + workforce constraints)

The result is a new kind of project risk: you can have land, servers, and contracts—and still miss your commercial operation date because the grid can’t deliver.

Why this matters to utilities now

Utilities are getting squeezed from two sides:

- Very large loads want faster timelines than traditional planning cycles allow.

- Regulators and communities want reliability and reasonable rates—and they’re skeptical of socializing upgrades for private growth.

That tension is already reshaping how “who pays” is negotiated, how queues are managed, and how utilities justify investments in a world where load forecasts can jump materially in a single year.

Data center demand growth is real—and the numbers are big

AI isn’t just “more IT.” It’s a different load shape with a different growth curve. Fowler points to an International Energy Agency projection that global data center electricity consumption could reach 1,720 TWh by 2035 under certain conditions—more than Japan’s current electricity use.

Whether your service territory hosts hyperscalers or not, the impact spreads:

- Large-load development shifts regional transmission plans.

- New generation and storage projects get repriced around capacity value.

- Industrial customers compete with data centers for interconnection slots.

- Reliability standards start to intersect with private-sector uptime expectations.

Here’s what I’ve found in conversations with operators and planners: it’s not the annual energy that scares people—it’s the coincidence of peak and the speed of load arrival. A 200 MW load arriving “all at once” can break assumptions built into five- and ten-year plans.
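A quick back-of-envelope calculation shows why arrival speed matters more than annual energy. The sketch below uses purely hypothetical inputs: a 5,000 MW system peak, 1.5% annual organic growth, and an assumed 90% of a 200 MW data center present at system peak. It expresses one large-load step as the number of years of organic growth that planners would normally spread across a multi-year plan.

```python
# Hypothetical planning inputs (illustrative only, not from any real utility)
SYSTEM_PEAK_MW = 5_000.0   # current system peak demand
ORGANIC_GROWTH = 0.015     # assumed 1.5% annual peak growth
DATA_CENTER_MW = 200.0     # large load connected in a single step
PEAK_COINCIDENCE = 0.9     # assumed share of the load present at system peak


def years_of_growth_equivalent(
    step_mw: float, peak_mw: float, growth: float, coincidence: float
) -> float:
    """Years of organic peak growth consumed by one large-load step."""
    annual_growth_mw = peak_mw * growth
    return (step_mw * coincidence) / annual_growth_mw


def peak_path(years: int, step_year: int) -> list[float]:
    """Projected system peak for years 0..years, with the step arriving in step_year."""
    path = []
    for year in range(years + 1):
        organic = SYSTEM_PEAK_MW * (1 + ORGANIC_GROWTH) ** year
        step = DATA_CENTER_MW * PEAK_COINCIDENCE if year >= step_year else 0.0
        path.append(organic + step)
    return path


if __name__ == "__main__":
    equivalent = years_of_growth_equivalent(
        DATA_CENTER_MW, SYSTEM_PEAK_MW, ORGANIC_GROWTH, PEAK_COINCIDENCE
    )
    print(f"One step = about {equivalent:.1f} years of organic peak growth")
    for year, peak in enumerate(peak_path(5, step_year=2)):
        print(f"year {year}: {peak:,.0f} MW")
```

With these assumed inputs, the single step absorbs about 2.4 years of organic peak growth in one year. The value of the exercise is in swapping in your own peak, growth, and coincidence assumptions.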

“Behind-the-meter” is a symptom, not a solution

The source article notes a growing move: tech companies financing dedicated, behind-the-meter (BTM) supply—co-located gas, solar + batteries, or other on-site resources—to avoid congested interconnection and long timelines.

BTM can be rational for a single site. But as a system-level pattern, it creates hard questions:

- How do we manage gas infrastructure build-out and emissions constraints?

- What happens to grid cost allocation if the biggest new customers bypass the system?

- Do BTM projects reduce or increase overall resilience, especially during extreme events?

My view: BTM will keep growing because it’s a speed hack. But utilities that respond only by defending tariffs will lose influence. Utilities that respond by offering structured “time-to-power” products, flexible interconnection options, and AI-enabled operations will keep the customer relationship—and protect the grid.

Regulation is moving, but it’s not moving at AI speed

Large-load policy is now a front-page grid issue. The article describes the Department of Energy (DOE) directing the Federal Energy Regulatory Commission (FERC) to begin rulemaking, and FERC responding with an Advance Notice of Proposed Rulemaking aimed at improving timely access to transmission service for large loads, with AI data centers explicitly flagged.

The direction is clear: regulators see the problem. The open question is execution.

The real constraint: queue management and accountability

Most interconnection reforms focus on process. Process matters, but accountability matters more. If a queue is clogged by speculative projects, everyone pays. If upgrades are uncertain until late-stage studies, everyone delays.

Expect 2026 and 2027 to reward regions that can:

- Enforce stronger readiness requirements (site control, deposits, milestones)

- Standardize study assumptions for large loads

- Use transparent capacity maps that actually reflect deliverability

- Build “repeatable” substation designs and interconnection packages

This is where AI can help—not as buzz, but as math.

The best way to power AI is to use AI on the grid

AI is both the problem (new load growth) and the tool (grid optimization). That’s the bridge energy leaders should lean into.

Below are four practical, high-value AI use cases that directly address the bottlenecks highlighted in the source article.

1) Demand forecasting for large-load arrival (and load shape)

You can’t plan what you can’t predict. Traditional load forecasting struggles with data centers because the drivers are commercial, not demographic.

AI-enhanced demand forecasting improves planning by integrating:

- Real estate and permitting signals

- Interconnection queue behavior

- Customer expansion patterns across regions

- Data center operational profiles (training vs inference, redundancy, cooling)

A concrete operational win: better forecasts reduce “surprise upgrades” and make it easier to justify proactive transmission and substation investments.
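One simple way to turn those signals into a planning number is a probability-weighted view of the large-load queue. The sketch below is a minimal expected-value model, not a trained forecaster. The stage names, conversion probabilities, and linear ramp are hypothetical assumptions. In an AI-enhanced workflow, the probabilities would be learned from historical queue outcomes and the signals listed above.

```python
from dataclasses import dataclass

# Hypothetical conversion rates from queue stage to energized load.
# A real model would learn these from historical queue outcomes,
# permitting signals, and customer behavior; these are placeholders.
STAGE_PROBABILITY = {
    "inquiry": 0.10,
    "study": 0.30,
    "facilities_agreement": 0.70,
    "under_construction": 0.95,
}


@dataclass
class LargeLoadRequest:
    name: str
    requested_mw: float
    stage: str
    first_year: int   # year the first phase is expected to energize
    ramp_years: int   # years to reach full requested load (>= 1)


def ramp_fraction(req: LargeLoadRequest, year: int) -> float:
    """Linear ramp from first_year to full load over ramp_years."""
    if year < req.first_year:
        return 0.0
    return min(1.0, (year - req.first_year + 1) / req.ramp_years)


def expected_load_by_year(
    requests: list[LargeLoadRequest], years: range
) -> dict[int, float]:
    """Probability-weighted large-load MW for each planning year."""
    return {
        year: sum(
            STAGE_PROBABILITY[r.stage] * r.requested_mw * ramp_fraction(r, year)
            for r in requests
        )
        for year in years
    }


if __name__ == "__main__":
    queue = [
        LargeLoadRequest("site_a", 300, "facilities_agreement", 2026, 3),
        LargeLoadRequest("site_b", 500, "inquiry", 2027, 2),
        LargeLoadRequest("site_c", 150, "under_construction", 2026, 1),
    ]
    for year, mw in expected_load_by_year(queue, range(2026, 2030)).items():
        print(year, round(mw, 1))
```

The design choice is deliberate: an expected-value view keeps a 500 MW inquiry from driving the plan on its own, while still showing planners how much load is likely to arrive and when.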

2) Grid-enhancing technologies (GETs) need analytics to pay off

Fowler points to Grid-Enhancing Technologies as a pathway to increase effective capacity on existing assets. GETs (like dynamic line ratings, topology optimization, and advanced power flow control) are valuable—but only if you operate them intelligently.

AI helps by:

- Converting sensor streams into dispatchable capacity estimates

- Recommending operator actions (switching, reconfiguration) that stay within constraints

- Detecting when GET performance is degrading due to equipment issues

The takeaway: GETs without AI are often underutilized. With AI, they become a real capacity resource.
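As an illustration of the first bullet, here is a minimal sketch that turns a stream of dynamic line rating (DLR) estimates into a conservative rating operators could schedule against. The 5th-percentile choice, the static-rating floor, and the terminal-equipment cap are hypothetical design assumptions. The DLR estimates themselves would come from sensors and a conductor thermal model (for example, one based on IEEE 738), which this sketch does not implement.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def dispatchable_rating(
    dlr_estimates_amps: list[float],
    static_rating_amps: float,
    equipment_limit_amps: float,
    confidence_pct: float = 5.0,
) -> float:
    """Conservative rating derived from recent DLR estimates.

    Roughly 95% of recent estimates (with the default confidence_pct) were at
    or above the returned value. Assumption: the static rating remains the
    floor, as it is today without DLR, and terminal equipment such as
    breakers or CTs caps the result.
    """
    if not dlr_estimates_amps:
        return static_rating_amps
    conservative = percentile(dlr_estimates_amps, confidence_pct)
    return min(max(conservative, static_rating_amps), equipment_limit_amps)


if __name__ == "__main__":
    # Hypothetical hourly DLR estimates for one line segment, in amps
    recent = [910.0, 980.0, 1040.0, 1120.0, 1190.0, 1010.0, 960.0, 1075.0]
    print(dispatchable_rating(recent, static_rating_amps=800.0,
                              equipment_limit_amps=1150.0))
```

The gap between the static rating and the returned value is the "dispatchable capacity" the bullet refers to. The AI contribution is in producing and forecasting the DLR estimates reliably enough that operators trust a low percentile of them.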

3) Predictive maintenance to protect reliability under higher utilization

When you push more power through the same infrastructure, failures get expensive. Predictive maintenance is the reliability insurance policy utilities can actually scale.

AI models can prioritize maintenance and replacements by estimating failure risk for:

- Transformers (thermal stress, dissolved gas analysis patterns)

- Switchgear and breakers (operation counts, partial discharge signals)

- Line components (vegetation risk, conductor temperature cycles)

This matters for AI-era planning because higher load factors and tighter reserve margins mean you have less room for unplanned outages.
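Prioritization usually comes down to risk, meaning probability of failure times consequence. The sketch below ranks assets by expected MW at risk using a logistic failure model. The features, coefficients, and asset records are hypothetical placeholders. A real program would fit the model to its own failure history and condition data (DGA, partial discharge, inspections).

```python
import math
from dataclasses import dataclass


@dataclass
class Asset:
    asset_id: str
    kind: str
    health_index: float   # 0 (poor) to 1 (good), e.g. derived from DGA or PD data
    loading_pu: float     # peak loading as a fraction of nameplate
    mw_at_risk: float     # load interrupted if this asset fails


# Hypothetical logistic-model coefficients (placeholders, not fitted values)
INTERCEPT = -4.0
W_HEALTH = -3.0    # better health lowers the failure odds
W_LOADING = 2.5    # higher utilization raises the failure odds


def failure_probability(asset: Asset) -> float:
    """Logistic estimate of failure probability over the planning window."""
    z = INTERCEPT + W_HEALTH * asset.health_index + W_LOADING * asset.loading_pu
    return 1.0 / (1.0 + math.exp(-z))


def risk_ranked(assets: list[Asset]) -> list[tuple[str, float]]:
    """Rank assets by expected MW at risk = P(failure) * consequence."""
    scored = [(a.asset_id, failure_probability(a) * a.mw_at_risk) for a in assets]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    fleet = [
        Asset("T1", "transformer", 0.2, 1.1, 150.0),
        Asset("T2", "transformer", 0.9, 0.6, 300.0),
        Asset("B1", "breaker", 0.5, 1.0, 80.0),
    ]
    for asset_id, risk in risk_ranked(fleet):
        print(asset_id, round(risk, 2))
```

Note how the loading term ties directly to the point above: as new large loads push utilization up, the same asset climbs the ranking even if its condition has not changed.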

4) Renewable energy integration and storage dispatch under “fast” load

AI-heavy load growth collides with a grid that’s adding renewables and storage. That combination can work—but it requires more sophisticated balancing.

AI supports renewable energy integration by:

- Forecasting short-term wind/solar output more accurately

- Optimizing battery dispatch for both energy and capacity value

- Coordinating flexible loads (when available) to reduce peak stress

The stance here is simple: if your integration strategy depends on perfect forecasts, it’s not a strategy. AI won’t make forecasts perfect, but it can improve forecast accuracy and operational decision-making enough to make higher renewable penetration compatible with reliability.
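To make the battery bullet concrete, here is a minimal threshold-based peak-shaving sketch. It is a simple greedy heuristic, not the optimization a production dispatch system would run. The threshold would come from load and renewable forecasts. The hourly time step, the battery parameters, and applying round-trip losses only on charging are simplifying assumptions.

```python
def peak_shave(
    load_mw: list[float],
    threshold_mw: float,
    power_mw: float,
    energy_mwh: float,
    soc_mwh: float,
    efficiency: float = 0.9,
) -> list[float]:
    """Greedy threshold dispatch over an hourly load series.

    Returns the net load seen by the grid. The battery discharges when load
    exceeds the threshold and recharges only up to the threshold, so charging
    never creates a new peak. Round-trip losses are applied on charging.
    """
    net = []
    for load in load_mw:
        if load > threshold_mw:
            # Discharge limited by the excess, inverter rating, and stored energy
            discharge = min(load - threshold_mw, power_mw, soc_mwh)
            soc_mwh -= discharge
            net.append(load - discharge)
        else:
            # Charge with headroom below the threshold, limited by free capacity
            room_mwh = energy_mwh - soc_mwh
            charge = min(threshold_mw - load, power_mw, room_mwh / efficiency)
            soc_mwh += charge * efficiency
            net.append(load + charge)
    return net


if __name__ == "__main__":
    hourly_load = [80.0, 90.0, 120.0, 130.0, 95.0]   # hypothetical MW
    print(peak_shave(hourly_load, threshold_mw=100.0, power_mw=20.0,
                     energy_mwh=40.0, soc_mwh=10.0))
```

Forecast quality determines how well the threshold is chosen: set it too low and the battery runs empty before the highest hour, which is exactly the failure mode better forecasting is meant to prevent.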

Capital is shifting—and utilities should shape it, not chase it

The article raises the question everyone is quietly asking: where does the capital come from? Traditional rate-based expansion alone won’t meet the speed or scale of the build-out being demanded. Private equity, capital markets, and tech companies are already stepping in.

Energy leaders should treat this as a design problem: create deal structures that fund infrastructure quickly while keeping customers and regulators aligned. The patterns showing up most often include:

- Customer-funded or customer-guaranteed upgrades with defined milestones

- Phased energization (partial service earlier, full build later)

- Hybrid supply portfolios (grid + on-site generation + storage)

- Performance-based arrangements tied to time-to-power and reliability metrics

A practical caution: if contracts don’t clearly define curtailment rights, backup expectations, and upgrade triggers, disputes will become the new delay.
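Curtailment rights are easier to negotiate when both sides can see the numbers. Here is a minimal sketch, assuming the utility has hourly headroom at the customer's node from its own power-flow studies (the function name and inputs are hypothetical). It splits available capacity into a firm block deliverable in every hour and a flexible block deliverable if the customer accepts curtailment in a bounded number of hours. This is one way to structure phased energization.

```python
def flexible_offer(
    hourly_headroom_mw: list[float], max_curtailed_hours: int
) -> tuple[float, float]:
    """Split nodal headroom into (firm_mw, flexible_mw).

    firm_mw: deliverable in every hour of the study period.
    flexible_mw: additional MW deliverable if the customer accepts
    curtailment in at most max_curtailed_hours hours.
    """
    if not hourly_headroom_mw:
        raise ValueError("need at least one hour of headroom data")
    ordered = sorted(hourly_headroom_mw)
    firm = max(0.0, ordered[0])
    # A constant load at ordered[k] exceeds headroom in at most k hours
    k = min(max(0, max_curtailed_hours), len(ordered) - 1)
    with_curtailment = max(0.0, ordered[k])
    return firm, with_curtailment - firm


if __name__ == "__main__":
    # Hypothetical headroom (MW) for a handful of study hours
    headroom = [50.0, 60.0, 70.0, 80.0, 90.0, 100.0, 110.0, 120.0]
    print(flexible_offer(headroom, max_curtailed_hours=2))
```

Writing the curtailment-hour limit into the contract alongside these two numbers turns an abstract "curtailment right" into something both parties can verify after the fact.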

A pragmatic playbook for 2026 grid readiness

The goal isn’t “more megawatts.” The goal is dependable time-to-power without rate shock. Here’s a focused checklist, grouped by time horizon, that utilities and energy providers can start acting on in the next two quarters.

Operational moves (0–6 months)

- Stand up a large-load “fast lane” team that combines interconnection, planning, tariff, and engineering.

- Publish a deliverability-first capacity view (even if imperfect) to reduce speculative requests.

- Standardize interconnection packages for common load sizes (50 MW, 100 MW, 250 MW) to speed engineering.

- Deploy AI-assisted queue triage to identify non-viable projects early.
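AI-assisted triage can start from the same readiness criteria discussed earlier (site control, deposits, milestones) plus a deliverability check against the capacity view. The sketch below is a rules-based stand-in that a model trained on past queue outcomes could later replace or augment. The field names, categories, and rules are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class QueueRequest:
    request_id: str
    has_site_control: bool
    deposit_paid: bool
    milestones_met: int
    milestones_due: int
    requested_mw: float
    deliverable_mw_at_node: float   # from the utility's deliverability view


def triage(req: QueueRequest) -> str:
    """Classify a request as 'fast_lane', 'standard', or 'at_risk'."""
    # Readiness gates: missing site control or deposit suggests a speculative request
    if not (req.has_site_control and req.deposit_paid):
        return "at_risk"
    # Missed milestones are an early signal the project may not be viable
    if req.milestones_met < req.milestones_due:
        return "at_risk"
    # Ready and fits existing deliverable capacity: candidate for the fast lane
    if req.requested_mw <= req.deliverable_mw_at_node:
        return "fast_lane"
    # Ready, but network upgrades must be studied first
    return "standard"


if __name__ == "__main__":
    queue = [
        QueueRequest("r1", True, True, 2, 2, 100.0, 150.0),
        QueueRequest("r2", True, True, 2, 2, 300.0, 150.0),
        QueueRequest("r3", False, True, 0, 1, 500.0, 150.0),
    ]
    for req in queue:
        print(req.request_id, triage(req))
```

Keeping the rules explicit at first also makes the triage auditable, which matters when the outcome affects who gets served first.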

Infrastructure moves (6–24 months)

- Prioritize substation expansions and high-value transmission reinforcements that unlock multiple sites.

- Scale GETs where they defer major builds (and instrument them properly).

- Expand predictive maintenance programs on the corridors that will carry new large-load growth.

- Build storage strategy around capacity and reliability, not only arbitrage.

Governance moves (ongoing)

- Align regulators early on cost allocation principles for large-load upgrades.

- Require clearer customer commitments (deposits, milestones, exit fees) to keep queues healthy.

- Set reliability expectations with large loads in plain language—backup, curtailment, and ride-through standards.

The bottom line: The AI boom isn’t blocked by innovation. It’s blocked by interconnection timelines.

What this means for the “AI in Energy & Utilities” roadmap

Fowler’s point that the energy transition and AI expansion are now entangled is exactly why this topic series exists. The grid has to modernize, but it also has to stay reliable and affordable. AI in utilities isn’t a side project anymore—it’s operational infrastructure.

If you’re deciding where to invest first, pick the areas that reduce time-to-power risk the fastest: AI-enabled demand forecasting, grid optimization with GETs, predictive maintenance for high-utilization assets, and renewable energy integration tied to dispatchable capacity.

The question that will define 2026 isn’t whether AI data centers will get built. They will. The real question is: will your grid be the place they can connect on time—without making reliability the collateral damage?